Category Archives: Deep Learning

BERT vs GPT Models: Differences, Examples

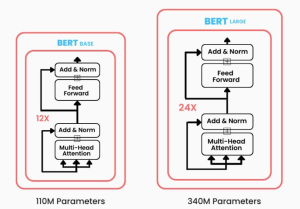

Have you been wondering what sets apart two of the most prominent transformer-based machine learning models in the field of NLP, Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformers (GPT)? While BERT uses an encoder-only transformer architecture, GPT models are based on a decoder-only transformer architecture. In this blog, we will delve into the core architecture, training objectives, real-world applications, examples, and more. By exploring these aspects, we’ll learn about the unique strengths and use cases of both BERT and GPT models, providing you with insights that can guide your next LLM-based NLP project or research endeavor. Differences between BERT vs GPT Models: BERT, introduced in 2018, marked a significant …
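To make the encoder-only versus decoder-only distinction concrete, here is a minimal sketch of the attention masks usually associated with each style. The function names and the three-token example are illustrative assumptions, not code from BERT or GPT themselves.

```python
def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every other token."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style mask: token i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


# Hypothetical three-token input, used only to label the rows.
tokens = ["the", "cat", "sat"]
masks = [
    ("encoder-only (BERT-style)", bidirectional_mask(len(tokens))),
    ("decoder-only (GPT-style)", causal_mask(len(tokens))),
]
for name, mask in masks:
    print(name)
    for tok, row in zip(tokens, mask):
        print(f"  {tok:>4}: {row}")
```

In the bidirectional mask each row is all ones, so a token sees context on both sides; in the causal mask each row stops at the token's own position, which is what lets a decoder generate text left to right.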

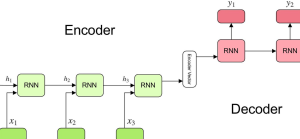

Demystifying Encoder Decoder Architecture & Neural Network

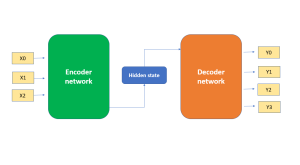

In the field of AI / machine learning, the encoder-decoder architecture is a widely used framework for developing neural networks that perform natural language processing (NLP) tasks requiring sequence-to-sequence modeling, such as language translation, text summarization, and question answering. This architecture involves a two-stage process where the input data is first encoded (by an encoder) into a fixed-length numerical representation, which is then decoded (by a decoder) to produce an output in the desired format. In this blog, we will explore the inner workings of the encoder-decoder architecture, how it can be used to solve real-world problems, and some of the latest developments in …
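The two-stage process described above can be sketched with a tiny, untrained recurrent toy. Everything here is an assumption chosen for illustration: the `HIDDEN` size, the hand-picked update rule in `step`, and the averaging readout. A real model would learn these from data.

```python
import math

HIDDEN = 4  # size of the fixed-length context vector (arbitrary choice)


def step(state, x):
    """One recurrent update: mix the previous state with the input value x."""
    return [math.tanh(0.5 * s + 0.1 * (i + 1) * x) for i, s in enumerate(state)]


def encode(sequence):
    """Fold a variable-length sequence into a fixed-length context vector."""
    state = [0.0] * HIDDEN
    for x in sequence:
        state = step(state, x)
    return state


def decode(context, n_steps):
    """Unroll from the context, feeding each output back in as the next input."""
    state, outputs, prev = list(context), [], 0.0
    for _ in range(n_steps):
        state = step(state, prev)
        prev = sum(state) / HIDDEN  # toy readout: average of the state
        outputs.append(prev)
    return outputs


short_ctx = encode([1.0, 2.0])
long_ctx = encode([1.0] * 10)
print(len(short_ctx), len(long_ctx))  # both contexts have HIDDEN entries
print(decode(short_ctx, 3))
```

The point of the sketch is structural: inputs of any length are compressed to the same-sized context, and the decoder produces its output sequence from that context alone.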

Machine Learning Definition, Examples, Method, Types

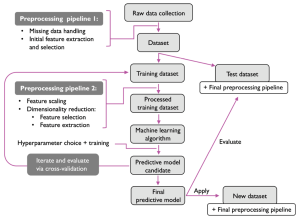

Last updated: 3rd Jan, 2024. Machine learning is a machine’s ability to learn from data. It has been around for decades, but it is now being applied in nearly every industry and job function. In this blog post, we’ll cover a detailed introduction to what machine learning (ML) is, including different definitions. We will also learn about different types of machine learning tasks, algorithms, etc., along with real-world examples. What is machine learning & how does it work? Definition 1: Simply speaking, machine learning can be defined as an approach to model our beliefs about real-world events. For example, let’s say a person came to a doctor with a …

Large Language Models (LLMs) & Semantic Search: Examples

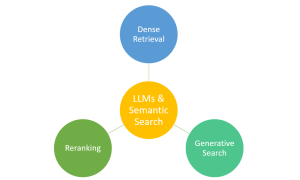

Have you ever marveled at how typing a few words into a search engine yields exactly the information you’re looking for from the vast expanse of the web? This is largely thanks to the advancements in semantic search, bolstered by technologies like Large Language Models (LLMs). Semantic search, which focuses on understanding the intent and contextual meaning behind queries, benefits from LLMs to provide more accurate and relevant results. However, it’s important to note that traditional search engines also rely on a sophisticated mix of algorithms, indexing, and ranking systems. LLMs complement these systems by enhancing their ability to interpret complex queries, making your search experience more intuitive and effective. …

Generative AI Examples, Use Cases, Applications

Last updated: 12th Dec, 2023. Machine learning, particularly in the field of Generative AI or generative modeling, has seen significant advancements recently. Generative AI involves algorithms that create new data samples and is widely recognized for its ability to produce not only coherent text but also highly realistic images, videos, and music. Among the most popular examples of Generative AI applications are Large Language Models (LLMs) like GPT-3 and GPT-4, which specialize in tasks like text generation, summarization, and machine translation. This technology has gained immense popularity due to its diverse applications and the impressive realism of the content it generates. As a data scientist, it is crucial to …

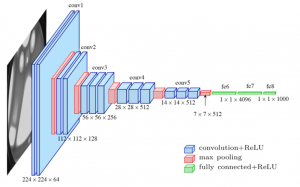

Different Types of CNN Architectures Explained: Examples

Last updated: 4th Dec, 2023. In the fast-paced world of computer vision and image processing, the problem of image classification consistently stands out: the ability to effectively recognize and classify images. As we continue to digitize and automate our world, the demand for systems that can understand and interpret visual data is growing at an unprecedented rate. The challenge is not just about recognizing images; it’s about doing so accurately and efficiently. Traditional machine learning methods often fall short, struggling to handle the complexity and high dimensionality of image data. This is where Convolutional Neural Networks (CNNs) come to the rescue. There are different types of CNN architectures based …

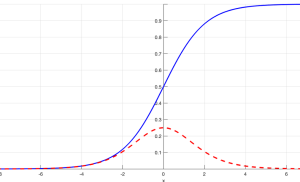

Activation Functions in Neural Networks: Concepts, Examples

Last updated: 24th Nov, 2023. Activation functions are critical to understanding neural networks. There are many activation functions available for data scientists to choose from when training neural networks, so it can be difficult to decide which one will work best for a given need. In this blog post, we look at different activation functions and provide examples of when they should be used in different types of neural networks. If you are starting out in deep learning and want to know about different types of activation functions, you may want to bookmark this page for quicker access in the future. What are activation functions in neural networks? In a …
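As a quick illustration of what an activation function is, the sketch below defines three common ones using only the Python standard library. The choice of these three and the sample inputs are assumptions for illustration; the excerpt itself does not name specific functions.

```python
import math


def sigmoid(z):
    """Squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squashes any real input into the range (-1, 1), centered at zero."""
    return math.tanh(z)


def relu(z):
    """Passes positive inputs through unchanged and clips negatives to zero."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):+.3f}  relu={relu(z):.1f}")
```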

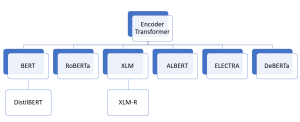

Encoder Only Transformer Models Quiz / Q&A

Are you intrigued by the revolutionary world of transformer architectures? Have you ever wondered how encoder-only transformer models like BERT, ELECTRA, or DeBERTa have reshaped the landscape of Natural Language Processing (NLP)? The rapid advancement of machine learning has led to the creation of numerous transformer architectures, each with unique features, applications, and underlying mechanics. Whether you’re a data scientist, machine learning engineer, generative AI enthusiast, or a student eager to deepen your understanding, this quiz offers an engaging and informative way to assess your knowledge and sharpen your skills. It would also help you prepare for your interviews on this topic. Encoder-only transformer models have become a cornerstone in …

Encoder-only Transformer Models: Examples

How can machines accurately classify text into categories? What enables them to recognize specific entities like names, locations, or dates within a sea of words? How is it possible for a computer to comprehend and respond to complex human questions? These remarkable capabilities are now a reality, thanks to encoder-only transformer architectures like BERT. From text classification and Named Entity Recognition (NER) to question answering and more, these models have revolutionized the way we interact with and process language. In the realm of AI and machine learning, encoder-only transformer models like BERT, DistilBERT, RoBERTa, and others have emerged as game-changing innovations. These models not only facilitate a deeper understanding of …

LLMs & Semantic Search Course by Andrew Ng, Cohere & Partners

Andrew Ng, a renowned name in the world of deep learning and AI, has joined forces with Cohere, a pioneer in natural language processing technologies. Alongside him are Jay Alammar, a well-known educator and visualizer of machine learning concepts, and Serrano Academy, an esteemed institution dedicated to AI research and education. Together, they have launched an insightful course titled “Large Language Models with Semantic Search.” This collaboration represents a fusion of expertise aimed at addressing the growing needs of semantic search in various applications. In an era where keyword search has dominated the search landscape, the need for more sophisticated, content-aware search capabilities is becoming increasingly evident. Content-rich platforms like …

Quiz: BERT & GPT Transformer Models Q&A

Are you fascinated by the world of natural language processing and the cutting-edge generative AI models that have revolutionized the way machines understand human language? Two such large language models (LLMs), BERT and GPT, stand as pillars in the field, each with unique architectures and capabilities. But how well do you know these models? In this quiz blog, we will challenge your knowledge and understanding of these two groundbreaking technologies. Before you dive into the quiz, let’s explore an overview of BERT and GPT. BERT (Bidirectional Encoder Representations from Transformers): BERT is known for its bidirectional processing of text, allowing it to capture context from both sides of a word …

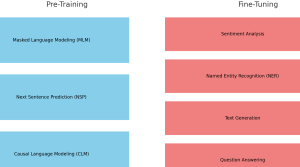

Pre-training vs Fine-tuning in LLM: Examples

Are you intrigued by the inner workings of large language models (LLMs) like BERT and GPT series models? Ever wondered how these models manage to understand human language with such precision? What are the critical stages that transform them from simple neural networks into powerful tools capable of text prediction, sentiment analysis, and more? The answer lies in two vital phases: pre-training and fine-tuning. These stages not only make language models adaptable to various tasks but also bring them closer to understanding language the way humans do. In this blog, we’ll dive into the fascinating journey of pre-training and fine-tuning in LLMs, complete with real-world examples. Whether you are a …

Vanishing Gradient Problem in Deep Learning: Examples

Ever found yourself wondering why your deep learning (deep neural network) model is simply refusing to learn? Or struggled to comprehend why your deep neural network isn’t reaching the accuracy you expected? The culprit behind these issues might very well be the infamous vanishing gradient problem, a common hurdle in the field of deep learning. Understanding and mitigating the vanishing gradient problem is a must-have skill in any data scientist’s arsenal because of the profound impact it can have on the training and performance of deep neural networks. In this blog post, we will delve into the heart of this issue, learning the calculus behind neural networks and …
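A rough sketch of why gradients can vanish: during backpropagation the gradient is multiplied by one activation derivative per layer, and the sigmoid derivative never exceeds 0.25. The code below assumes sigmoid activations and ignores the weight terms (as if every weight were 1), which is a simplification made only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_scale(n_layers, z=0.0):
    """Product of n_layers sigmoid derivatives, all evaluated at pre-activation z."""
    return sigmoid_grad(z) ** n_layers


for n in (1, 5, 10, 20):
    print(f"{n:>2} layers: gradient scaled by at most {gradient_scale(n):.3e}")
```

Even in this best case for the sigmoid (z = 0), the scaling factor shrinks geometrically with depth, so early layers receive almost no learning signal.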

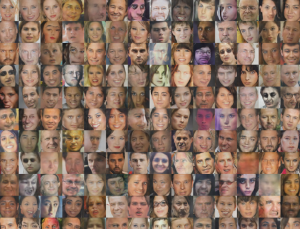

DCGAN Architecture Concepts, Real-world Examples

Have you ever wondered how AI can create lifelike images that are virtually indistinguishable from reality? Well, there is a neural network architecture, the Deep Convolutional Generative Adversarial Network (DCGAN), that has revolutionized image generation, from medical imaging to video game design. DCGAN’s ability to create high-resolution, visually stunning images has led to its wide use across numerous real-world applications. From enhancing data augmentation in medical imaging to inspiring artists with novel artworks, DCGAN’s impact transcends traditional machine learning boundaries. In this blog, we will delve into the fundamental concepts behind the DCGAN architecture, exploring its key components and the ingenious interplay between its generator and discriminator networks. Together, these components …

Generative Adversarial Network (GAN): Concepts, Examples

In this post, you will learn the concepts & examples of generative adversarial networks (GANs). The idea is to put together key concepts & some interesting examples from across the industry to get a perspective on what problems can be solved using GANs. As data scientists or machine learning engineers, it is imperative that we understand GAN concepts well enough to apply them to real-world problems. This is where GAN examples will prove to be helpful. What is a Generative Adversarial Network (GAN)? We will try to understand the concepts of GAN with the help of a real-life example. Imagine that …

How does DALL-E 2 Work? Concepts, Examples

Have you ever wondered how generative AI is converting words into images? Or how generative AI models create a picture of something you’ve only described in words? Creating high-quality images from textual descriptions has long been a challenge for artificial intelligence (AI) researchers. That’s where DALL-E and DALL-E 2 come in. In this blog, we will look into the details of DALL-E 2. Developed by OpenAI, DALL-E 2 is a cutting-edge AI model that can generate highly realistic images from textual descriptions. So how does DALL-E 2 work, and what makes it so special? In this blog post, we’ll explore the key concepts and techniques behind DALL-E 2, including …

I found it very helpful. However, the differences are not very clear to me.