Tag Archives: LLMs

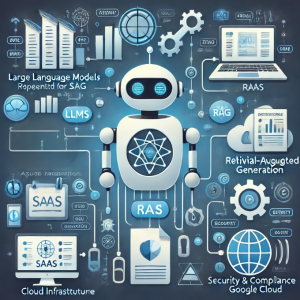

Build AI Chatbots for SaaS Using LLMs, RAG, Multi-Agent Frameworks

Software-as-a-Service (SaaS) providers have long relied on traditional chatbot solutions like AWS Lex and Google Dialogflow to automate customer interactions. These platforms required extensive configuration of intents, utterances, and dialog flows, which made building and maintaining chatbots complex and time-consuming. The need for manual intent classification and rule-based conversation logic often resulted in rigid and limited chatbot experiences, unable to handle dynamic user queries effectively. With the advent of generative AI, SaaS providers are increasingly adopting Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and multi-agent frameworks such as LangChain, LangGraph, and LangSmith to create more scalable and intelligent AI-driven chatbots. This blog explores how SaaS providers can leverage these technologies …

Creating a RAG Application Using LangGraph: Example Code

Retrieval-Augmented Generation (RAG) is an innovative generative AI method that combines retrieval-based search with large language models (LLMs) to enhance response accuracy and contextual relevance. Unlike traditional retrieval systems that return existing documents, or generative models that rely solely on pre-trained knowledge, the RAG technique retrieves information related to the query at request time and supplies it to the LLM as context for its output. LangGraph, an advanced extension of LangChain, provides a structured workflow for developing RAG applications. This guide will walk through the process of building a RAG system using LangGraph with example implementations. Setting Up the Environment To get started, we need to install the necessary dependencies. The following commands will ensure that all required LangChain …
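The retrieve-then-generate idea described in this excerpt can be sketched without any framework. The snippet below is a minimal illustration in plain Python, not the LangGraph implementation from the post: the corpus, the word-overlap scoring, and the function names (`tokenize`, `retrieve`, `build_prompt`) are all assumptions made for the example. A real system would typically use embeddings and a vector store for the retrieval step and send the assembled prompt to an LLM.

```python
import re

# Hypothetical toy corpus; a real application would load its own documents.
DOCUMENTS = [
    "LangGraph builds stateful workflows on top of LangChain.",
    "RAG retrieves relevant text and passes it to the LLM as context.",
    "Embeddings are numerical representations of text.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most tokens with the query.

    Word overlap is a stand-in for the similarity search a real
    retriever would run over embeddings.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble the augmented prompt: retrieved context plus the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What does RAG pass to the LLM?", DOCUMENTS))
```

The string returned by `build_prompt` is what would be passed to the model, so the generation step sees the retrieved text rather than relying only on what the model learned during training.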

Building a RAG Application with LangChain: Example Code

The combination of Retrieval-Augmented Generation (RAG) and powerful language models enables the development of sophisticated applications that leverage large datasets to answer questions effectively. In this blog, we will explore the steps to build an LLM RAG application using LangChain. Prerequisites Before diving into the implementation, ensure you have the required libraries installed. Execute the following command to install the necessary packages: Setting Up Environment Variables LangChain integrates with various APIs to enable tracing and embedding generation, which are crucial for debugging workflows and creating compact numerical representations of text data for efficient retrieval and processing in RAG applications. Set up the required environment variables for LangChain and OpenAI: Step …

Building an OpenAI Chatbot with LangChain

Have you ever wondered how to use OpenAI APIs to create custom chatbots? With advancements in large language models (LLMs), anyone can develop intelligent, customized chatbots tailored to specific needs. In this blog, we’ll explore how LangChain and OpenAI LLMs work together to help you build your own AI-driven chatbot from scratch. Prerequisites Before getting started, ensure you have Python (version 3.8 or later) installed, along with the required libraries. You can install the necessary packages using the following command: Setting Up OpenAI API Key To use OpenAI’s services, you need an API key, which you can obtain by signing up at OpenAI’s website and generating a key from the …

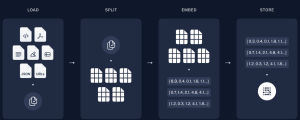

How Indexing Works in LLM-Based RAG Applications

When building a Retrieval-Augmented Generation (RAG) application powered by Large Language Models (LLMs), which combine the ability to generate human-like text with advanced retrieval mechanisms for precise and contextually relevant information, effective indexing plays a pivotal role. It ensures that only the most contextually relevant data is retrieved and fed into the LLM, improving the quality and accuracy of the generated responses. This process reduces noise, optimizes token usage, and directly impacts the application’s ability to handle large datasets efficiently. RAG applications combine the generative capabilities of LLMs with information retrieval, making them ideal for tasks such as question-answering, summarization, or domain-specific problem-solving. This blog will walk you through the …
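To make the role of indexing concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the method from the post: it splits text into overlapping word chunks and builds a keyword inverted index, whereas production RAG indexes usually store embeddings in a vector database. The function names and the `chunk_size` and `overlap` parameters are hypothetical.

```python
import re
from collections import defaultdict


def chunk_text(text, chunk_size=8, overlap=2):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words so that content spanning a
    chunk boundary is not lost.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    # Stop early enough that no chunk is fully contained in the previous one.
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]


def build_index(chunks):
    """Map each lowercase term to the set of chunk ids that contain it."""
    index = defaultdict(set)
    for chunk_id, chunk in enumerate(chunks):
        for term in re.findall(r"[a-z0-9]+", chunk.lower()):
            index[term].add(chunk_id)
    return dict(index)


def search(query, index, chunks, k=1):
    """Return the k chunks matching the most query terms."""
    hits = defaultdict(int)
    for term in re.findall(r"[a-z0-9]+", query.lower()):
        for chunk_id in index.get(term, ()):
            hits[chunk_id] += 1
    # Most matching terms first; ties broken by chunk order.
    ranked = sorted(hits, key=lambda cid: (-hits[cid], cid))
    return [chunks[cid] for cid in ranked[:k]]


if __name__ == "__main__":
    text = "one two three four five six seven eight nine ten"
    chunks = chunk_text(text)
    print(search("nine ten", build_index(chunks), chunks))
```

Only the chunks returned by `search` would be placed in the prompt, which is how indexing keeps irrelevant text out of the context and limits token usage.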

Agentic AI Design Patterns Examples

In the ever-evolving landscape of agentic AI workflows and applications, understanding and leveraging design patterns is crucial for building effective and innovative solutions. Agentic AI design patterns provide structured approaches to solving complex problems. They enhance the capabilities of AI agents by enabling reasoning, planning, collaboration, and tool integration. For instance, you can think of these patterns as a blueprint for constructing a well-oiled team of specialists in a workplace—each with unique roles and tools, working in harmony to tackle a project efficiently and innovatively. Imagine a team of engineers collaborating on designing a new car, where one member focuses on aerodynamics, another on engine performance, and a third on …

List of Agentic AI Resources, Papers, Courses

In this blog, I aim to provide a comprehensive list of valuable resources for learning Agentic AI, which refers to developing intelligent systems capable of perception, autonomous decision-making, reasoning, and interaction in dynamic environments. These resources include tutorials, research papers, online courses, and practical tools to help you deepen your understanding of this emerging field. This blog will continue to be updated with relevant and popular papers periodically, based on emerging trends and the significance of newly published works in the field. Additionally, feel free to suggest any papers that you would like to see included in this list. This curated list highlights some of the most impactful and insightful …

OpenAI GPT Models in 2024: What’s in it for Data Scientists

For data scientists and machine learning researchers, 2024 has been a landmark year in AI innovation. OpenAI’s latest advancements promise enhanced reasoning capabilities and multimodal processing, setting new industry benchmarks. Let’s dive into these milestones and their practical implications for data scientists. May 2024: Launch of GPT-4o OpenAI introduced GPT-4o (“o” for “omni”), a multimodal powerhouse designed for text, image, and audio processing. With faster response times and improved performance across multilingual and vision tasks, GPT-4o offers a great tool for developing advanced AI applications. Early adopters have reported up to 40% efficiency gains in tasks requiring multimodal analysis (Smith et al., 2024). GPT-4o’s ability to process and integrate multimodal …

Collaborative Writing Use Cases with ChatGPT Canvas

ChatGPT Canvas is a cutting-edge, user-friendly platform that simplifies content creation and elevates collaboration. Whether drafting detailed research papers, crafting visually engaging presentations, or writing professional emails, ChatGPT Canvas has the tools to make your work efficient and impactful. This guide explores how to leverage Canvas effectively, tailored for college-level users and professionals alike. Developing Blogs, Articles or Research Essays Research essays, blogs, and articles demand clarity, depth, and methodical organization. ChatGPT Canvas streamlines the entire process by offering tools to explore topics, verify facts, and refine your arguments. Its readability and visual integration features ensure that your essays are both compelling and accessible. Topic Exploration: Use web searches to dive into …

When to Use ChatGPT O1 Model

Knowing when to use an LLM such as the ChatGPT O1 model is key to unlocking its full potential. For example, the O1 model is particularly beneficial in scenarios such as analyzing large datasets for patterns in genomics, designing experiments to test novel chemical reactions, or creating algorithms to optimize workflows in computational biology. These applications highlight its ability to address diverse and intricate challenges. Designed to address complex, multifaceted challenges, the O1 model shines when diverse expertise, spanning data analysis, experimental design, coding, and beyond, is required. Let’s delve into these capabilities to understand when and how they can be effectively applied to drive groundbreaking advancements across various fields. Data Analysis …

Agentic Reasoning Design Patterns in AI: Examples

In recent years, artificial intelligence (AI) has evolved to include more sophisticated and capable agents, such as virtual assistants, autonomous robots, and conversational large language model (LLM) agents. These agents can think, act, and collaborate to achieve complex goals. Agentic Reasoning Design Patterns help explain how these agents work by outlining the essential strategies that AI agents use for reasoning, decision-making, and interacting with their environment. What is an AI Agent? An AI agent, particularly in the context of LLM agents, is an autonomous software entity capable of perceiving its environment, making decisions, and taking actions to achieve specific goals. LLMs enable these agents to understand natural language and reason …

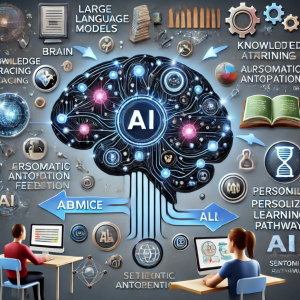

LLMs for Adaptive Learning & Personalized Education

Adaptive learning helps tailor learning experiences to fit the unique needs of each student. In the quest to make learning more personalized, Large Language Models (LLMs), with their capability to understand and generate human-like text, offer unprecedented opportunities to adaptively support and enhance the learning process. In this blog, we will explore how adaptive learning can leverage LLMs by integrating Knowledge Tracing (KT), Semantic Representation Learning, and Automated Knowledge Concept Annotation to create a highly personalized and effective educational experience. What is Adaptive Learning? Adaptive learning refers to a method of education that leverages technology to adjust the type and difficulty of learning content based on individual student performance. …

Completion Model vs Chat Model: Python Examples

In this blog, we will learn about the concepts of completion and chat large language models (LLMs) with the help of Python examples. What’s the Completion Model in LLM? A completion model is a type of LLM that takes a text input and generates a text output, which is called a completion. In other words, a completion model generates text that continues from a given prompt or partial input. When provided with an initial piece of text, the model uses its trained knowledge to predict and generate the most likely subsequent text. A completion model can generate summaries, translations, stories, code, lyrics, etc., depending on …
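The difference between the two model types shows up most clearly in the shape of their input. The sketch below uses plain Python and makes no API calls: a completion model receives a single string to continue, while a chat model receives a list of role-tagged messages. The `role`/`content` keys follow the convention used by common chat APIs; the `chat_to_prompt` helper and its flattening template are hypothetical and shown only to illustrate how a conversation relates to a single prompt string.

```python
# Input for a completion model: one string that the model continues.
completion_prompt = "Translate to French: Good morning ->"

# Input for a chat model: an ordered list of role-tagged messages.
chat_messages = [
    {"role": "system", "content": "You are a helpful translator."},
    {"role": "user", "content": "Translate to French: Good morning"},
]


def chat_to_prompt(messages):
    """Flatten chat messages into a single prompt string.

    Hypothetical template for illustration; real chat models apply their
    own model-specific formatting to the message list.
    """
    lines = [f"{message['role']}: {message['content']}" for message in messages]
    # The trailing cue marks where the model's reply would begin.
    lines.append("assistant:")
    return "\n".join(lines)


if __name__ == "__main__":
    print(completion_prompt)
    print(chat_to_prompt(chat_messages))
```

Keeping the conversation as structured messages lets the caller separate instructions (the system message) from user input, which a single completion prompt has to express inline.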

LLM Hosting Strategy, Options & Cost: Examples

As part of laying down the application architecture for LLM applications, one key focus area is LLM deployment. Closely related is the LLM hosting strategy: the different hosting options need to be evaluated against various criteria, including cost, and the appropriate one selected. In this blog, we will learn about different hosting options for different kinds of LLMs and related strategies. LLM Hosting Cost depends on the type of LLM Needed The cost of LLM hosting depends on the type of LLM we need for our application. LLM Hosting Cost for Proprietary Models If …

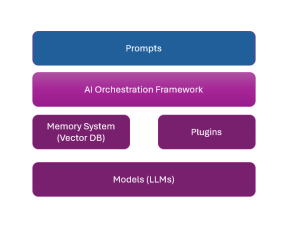

Application Architecture for LLM Applications: Examples

Large language models (LLMs), also termed large foundation models (LFMs), have recently enabled the creation of innovative software products that solve a wide range of problems that were unimaginable until recently. Different stakeholders in the software engineering and AI arena need to learn how to create such LLM-powered software applications, and the most important aspect of creating them is the application architecture. In this blog, we will learn about key application architecture components for LLM-based applications. This would be helpful for product managers, software architects, LLM architects, ML engineers, etc. LLMs in the software engineering landscape are also termed …

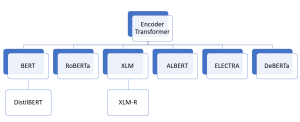

Encoder-only Transformer Models: Examples

How can machines accurately classify text into categories? What enables them to recognize specific entities like names, locations, or dates within a sea of words? How is it possible for a computer to comprehend and respond to complex human questions? These remarkable capabilities are now a reality, thanks to encoder-only transformer architectures like BERT. From text classification and Named Entity Recognition (NER) to question answering and more, these models have revolutionized the way we interact with and process language. In the realm of AI and machine learning, encoder-only transformer models like BERT, DistilBERT, RoBERTa, and others have emerged as game-changing innovations. These models not only facilitate a deeper understanding of …
