“Everything should be made as simple as possible, but not simpler.” – Albert Einstein

Consider this: according to a study by IDC, data scientists spend approximately 80% of their time cleaning and preparing data, leaving only about 20% for the actual work of analysis, modeling, and interpretation. Does this sound familiar? Are you frustrated by the time you spend on complex data wrangling and model tuning, only to find that your machine learning model doesn’t generalize well to new data?

As data scientists, we often find ourselves in a predicament. We strive for the highest accuracy and predictive power in our models, which often pushes us toward more complex methods. Deep learning, ensemble models, and high-dimensional feature spaces all promise better performance, but often at the expense of understanding and simplicity. And as our models grow more complex, so does the risk of overfitting: the model loses its ability to generalize to new data.

How do we strike a balance between simplicity and accuracy? How can we ensure that our models generalize well instead of simply overfitting the training data? This is where Occam’s Razor, a principle from philosophy, proves useful in machine learning. In this post, we’ll explore what Occam’s Razor is and why it plays a crucial role in machine learning. We’ll discuss how following it can relieve some of your frustrations as a data scientist by guiding you toward simpler, more interpretable models that still perform well. By understanding and applying Occam’s Razor, you’ll be better equipped to build effective models, make the most of your time, and add more value in your role as a data scientist.

What Is Occam’s Razor in Machine Learning?

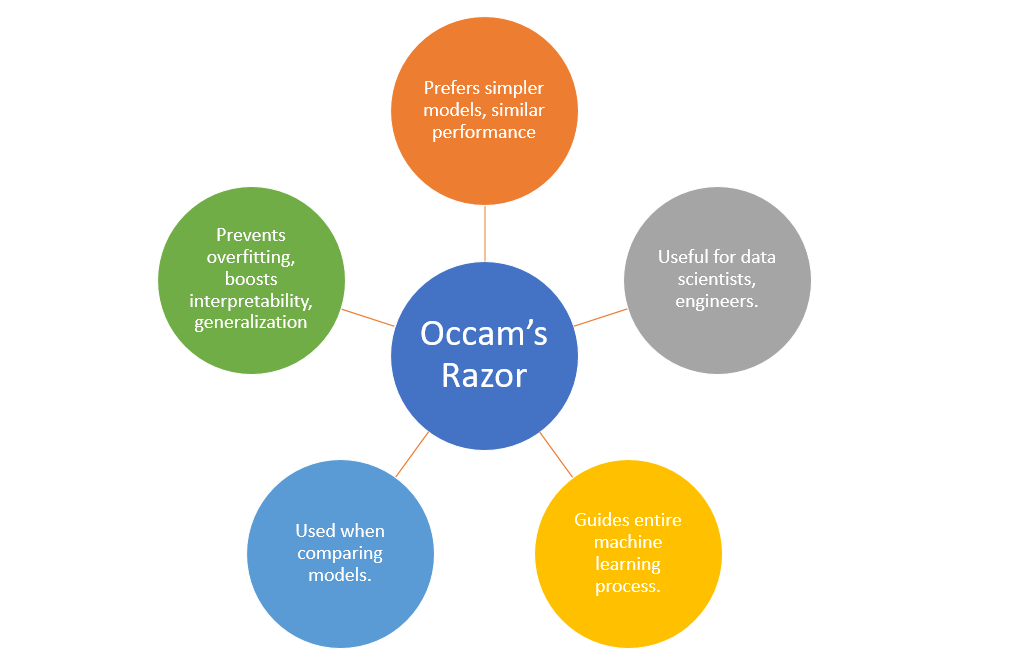

Have you ever wondered why we sometimes get lost in complex solutions when a simple one would solve the problem? This brings us to an important principle in machine learning: Occam’s Razor.

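Occam’s Razor is a principle that favors simplicity. It is often summarized as “the simplest explanation is usually the best one.” More precisely, when several explanations account for the evidence equally well, you should prefer the one that makes the fewest assumptions. In machine learning, this means that if two models perform about equally well, we should choose the simpler one.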

Who needs to know about Occam’s Razor? Anyone who works with machine learning models, including data scientists and machine learning engineers. It helps you make good decisions when choosing between models and keeps you from picking a complicated model when a simpler one would do the job. It applies at every stage of the workflow: deciding which features to use, which algorithm to use, and how to fine-tune the model. At each stage, it points you toward simplicity and away from overfitting.

When should you think about Occam’s Razor? Any time you’re comparing models. If two models perform about equally well, the simpler one (the one that is easier to understand or has fewer parameters) is usually the better choice.
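Here is a minimal Python sketch that makes this rule concrete. It assumes you already have a validation score for each candidate model, where higher is better, and a numeric measure of complexity, such as the number of learned parameters. The `Candidate` class, the `pick_simplest` function, the `tolerance` value and the scores in the example are all hypothetical placeholders. They are not results from any real experiment.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    complexity: int   # assumed proxy for complexity, e.g. number of learned parameters
    val_score: float  # validation score where higher is better (e.g. R^2)


def pick_simplest(candidates, tolerance=0.01):
    """Return the least complex candidate whose score is within `tolerance` of the best."""
    best_score = max(c.val_score for c in candidates)
    # Models that "work about as well" as the best one
    good_enough = [c for c in candidates if c.val_score >= best_score - tolerance]
    # Among those, prefer the simplest
    return min(good_enough, key=lambda c: c.complexity)


# Hypothetical validation scores, for illustration only
candidates = [
    Candidate("linear_regression", complexity=10, val_score=0.861),
    Candidate("random_forest", complexity=5_000, val_score=0.868),
    Candidate("neural_network", complexity=120_000, val_score=0.870),
]

print(pick_simplest(candidates).name)                   # linear_regression
print(pick_simplest(candidates, tolerance=0.005).name)  # random_forest
```

The `tolerance` parameter encodes what "about as well" means for your problem. Choosing it is a judgment call. In practice, you might base it on how much the scores vary across cross-validation folds.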

Why is Occam’s Razor so useful in machine learning? It helps us avoid overfitting, which happens when a model performs well on the training data but poorly on new data. Choosing simpler models makes it more likely that the model learns the underlying pattern in the data rather than the noise. Simpler models are also usually easier to understand and explain, which matters in fields such as healthcare and finance.
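The sketch below illustrates overfitting on a tiny synthetic dataset. The true pattern is the straight line y = 2x, and each training target carries alternating noise of ±0.5. A one-nearest-neighbour regressor, which simply memorizes the training points, is compared with a least-squares straight line. The data and the helper names (`fit_line`, `predict_1nn`, `mse`) are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def predict_1nn(train_x, train_y, x):
    """Predict the target of the closest training point (pure memorization)."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# True pattern: y = 2x. Training targets include alternating noise of +/-0.5.
train_x = [0, 1, 2, 3, 4, 5]
train_y = [2 * x + (0.5 if x % 2 == 0 else -0.5) for x in train_x]
# Validation points lie between the training points and carry no noise.
val_x = [0.4, 1.4, 2.4, 3.4, 4.4]
val_y = [2 * x for x in val_x]

slope, intercept = fit_line(train_x, train_y)
results = {
    "1-NN (memorizer)": (
        mse(train_y, [predict_1nn(train_x, train_y, x) for x in train_x]),
        mse(val_y, [predict_1nn(train_x, train_y, x) for x in val_x]),
    ),
    "straight line": (
        mse(train_y, [slope * x + intercept for x in train_x]),
        mse(val_y, [slope * x + intercept for x in val_x]),
    ),
}
for name, (train_err, val_err) in results.items():
    print(f"{name}: train MSE = {train_err:.3f}, validation MSE = {val_err:.3f}")
```

The memorizer has zero training error, but its validation error is much larger than the straight line's. This is because it has reproduced the noise. The simpler line accepts some training error and generalizes better.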

Techniques for Applying Occam’s Razor in Machine Learning

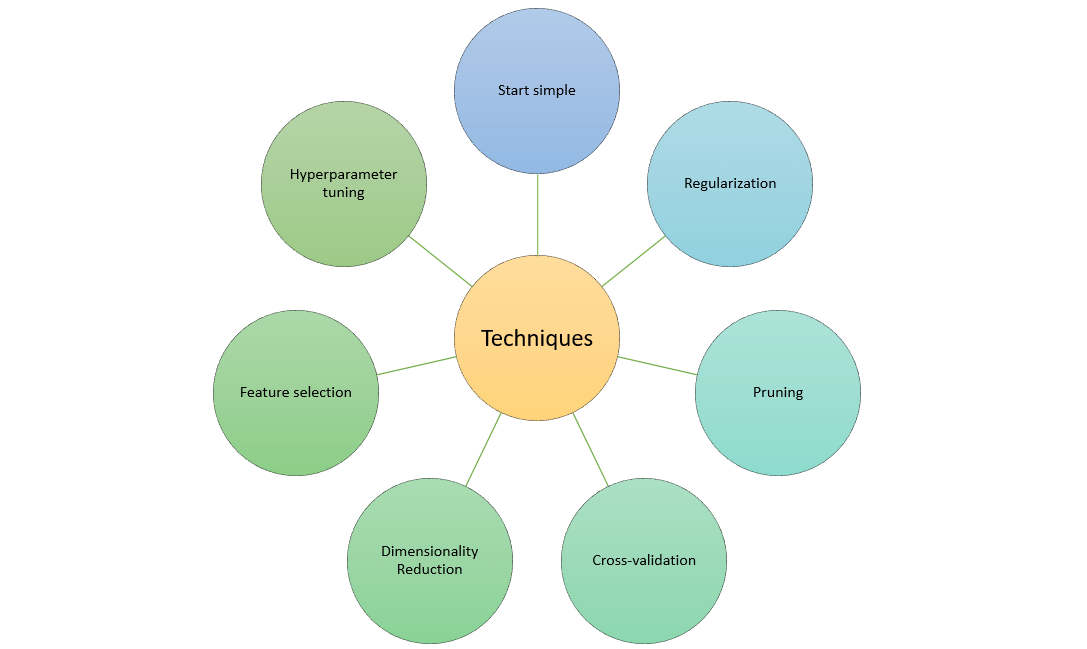

Applying Occam’s Razor in machine learning means choosing simpler models when possible and using techniques that prevent overfitting. The following techniques help you stay aligned with Occam’s Razor while building machine learning models:

- Start with Simpler Models: Rather than starting with a complex model, start with a simple one. For example, begin with a linear regression or a shallow decision tree before moving to more complex models like random forests or neural networks. The simple model gives you a baseline to compare against and helps you judge whether the additional complexity is justified.

- Regularization: Regularization techniques such as L1 (Lasso) and L2 (Ridge) help prevent overfitting by adding a penalty term to the loss function that constrains the magnitude of the parameters. L2 shrinks all coefficients toward zero, which discourages the model from relying too heavily on any one feature. L1 can drive some coefficients exactly to zero, which effectively removes those features. Both make the model simpler and more likely to generalize.

- Pruning: Pruning removes unnecessary complexity from decision trees and neural networks. In decision trees, pruning removes branches that add little predictive value. In neural networks, it removes weights or neurons that contribute little to the output, for example those with the smallest magnitudes.

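- Cross-Validation: Cross-validation estimates how well your model generalizes to unseen data by repeatedly training on part of the data and evaluating on the held-out remainder. If a model performs well on the training data but poorly on the validation data, it is likely overfitting, which suggests that the model may be too complex. Comparing cross-validated scores also lets you check whether a more complex model actually earns its extra complexity.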

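- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) project the data onto a smaller number of derived features (components). This simplifies the model and can help prevent overfitting, although each component mixes several original features and is harder to interpret. Methods like t-SNE, by contrast, are mainly used for visualization. Standard t-SNE does not learn a mapping that can be applied to new data, so it is rarely a good preprocessing step for a predictive model.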

- Feature Selection: Selecting only the most relevant features for your model reduces complexity and improves interpretability. Common approaches include filter methods based on mutual information or correlation coefficients, and wrapper methods such as recursive feature elimination.

- Hyperparameter Tuning: Many machine learning models have hyperparameters that control their complexity, for example the maximum depth of a decision tree or the strength of the penalty in a regularized regression. Tuning these hyperparameters helps you find the right balance between simplicity and accuracy. Ideally, do the tuning with cross-validation rather than on the test set.
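Several of the techniques above work together. The following standard-library Python sketch combines regularization, cross-validation and hyperparameter tuning. It fits a one-feature ridge regression, in which the intercept is left unpenalized. It then uses k-fold cross-validation to choose the penalty strength `lam` from a small grid.

A few things here are assumptions for illustration:

- the synthetic data;
- the penalty grid;
- the helper names `fit_ridge_1d`, `line_mse`, `k_fold_cv_mse` and `tune_penalty`.

In practice, you would more likely use a library such as scikit-learn, for example its `Ridge` estimator with `GridSearchCV`.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Ridge regression with a single feature; the intercept is left unpenalized."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + lam)  # a larger lam shrinks the slope toward zero
    return slope, y_mean - slope * x_mean


def line_mse(xs, ys, slope, intercept):
    """Mean squared error of the line y = slope * x + intercept."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_fold_cv_mse(xs, ys, lam, k=5, seed=0):
    """Average validation MSE over k folds for a given penalty lam."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)  # shuffle once so folds are not ordered by x
    fold_errors = []
    for fold in range(k):
        val_idx = indices[fold::k]  # every k-th shuffled index forms this fold
        val_set = set(val_idx)
        train_idx = [i for i in indices if i not in val_set]
        slope, intercept = fit_ridge_1d(
            [xs[i] for i in train_idx], [ys[i] for i in train_idx], lam
        )
        fold_errors.append(
            line_mse([xs[i] for i in val_idx], [ys[i] for i in val_idx], slope, intercept)
        )
    return sum(fold_errors) / k


def tune_penalty(xs, ys, lams, k=5):
    """Return the penalty with the lowest cross-validated MSE, plus all scores."""
    scores = {lam: k_fold_cv_mse(xs, ys, lam, k) for lam in lams}
    return min(scores, key=scores.get), scores


# Synthetic data: y = 2x + 1 plus Gaussian noise (assumed, for illustration only)
rng = random.Random(42)
xs = [float(x) for x in range(30)]
ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]

best_lam, scores = tune_penalty(xs, ys, lams=[0.0, 1.0, 10.0, 100.0, 1e6])
for lam, score in scores.items():
    print(f"lam={lam:g}: CV MSE = {score:.3f}")
print("chosen penalty:", best_lam)
```

Very large penalties flatten the line toward the mean of `ys`, and their cross-validated error rises sharply. This is the "but not simpler" half of the opening quote. With a real dataset, you would also keep a separate test set and evaluate the chosen model on it only once.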
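The feature-selection idea from the list above can also be sketched in a few lines of standard-library Python. The function below is a simple filter method: it ranks features by the absolute value of their Pearson correlation with the target and keeps the top `k`. The function names and the toy data are hypothetical. Correlation only captures linear relationships. Mutual information, by contrast, can also detect non-linear dependence.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (norm_a * norm_b)


def select_top_k(features, target, k):
    """Keep the k features whose |correlation| with the target is largest.

    `features` maps a feature name to its column of values.
    """
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Hypothetical toy data
features = {
    "x1": [1, 2, 3, 4, 5],  # increases with the target
    "x2": [2, 1, 2, 1, 2],  # no linear relationship with the target
    "x3": [5, 4, 3, 2, 1],  # decreases with the target
}
target = [2, 4, 6, 8, 10]

selected, scores = select_top_k(features, target, k=2)
print(selected)
```

Taking the absolute value keeps strongly negatively correlated features such as `x3`. A negative correlation is just as informative as a positive one.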

Why Understanding Occam’s Razor is Important for Data Scientists

The following are some of the benefits of understanding and applying Occam’s Razor as a data scientist:

- Enhancing Interpretability: Simpler models are often more interpretable, which means it’s easier to understand how they arrive at their predictions. This matters for trust, transparency, and in some industries legal or regulatory requirements. In healthcare or finance, for example, being able to explain why a model made a certain prediction can be crucial.

- Avoiding Overfitting: As mentioned before, complex models can often fit the training data very well, but they can also capture the noise in the data, leading to overfitting. An overfitted model performs well on the training data but poorly on unseen data, which is a problem because the goal of machine learning is to make accurate predictions on new, unseen data. By keeping models simpler, data scientists can reduce the risk of overfitting.

- Improving Generalizability: Other things being equal, simpler models are more likely to generalize well to unseen data. They are less prone to fitting the noise in the training data and more likely to capture the underlying trend or relationship.

- Reducing Computational Resources: Simpler models typically require fewer computational resources to train and to make predictions. This can be a significant advantage in real-world settings, where resources might be limited or expensive.

Conclusion

In the ever-evolving field of machine learning, it’s easy to be drawn towards increasingly complex models and techniques. However, as we’ve explored in this blog post, the principle of Occam’s Razor reminds us of the value of simplicity. From building more generalizable models to enhancing interpretability, keeping our models as simple as possible (but no simpler) can yield significant benefits.

Occam’s Razor is more than just a philosophical principle; it’s a practical tool for every data scientist and machine learning engineer. By starting with simpler models and employing techniques like regularization, pruning, cross-validation, dimensionality reduction, feature selection, and careful hyperparameter tuning, we can stay aligned with this timeless principle. Thank you for taking the time to read this post. I hope you found it informative and useful. As you navigate your machine learning projects, keep Occam’s Razor in mind.

If you enjoyed this blog post and found it useful, I would love to hear from you. Feel free to leave a comment below with your thoughts, questions, or your own experiences with Occam’s Razor in machine learning. If you think this post could benefit others, please share it with your colleagues, friends, or anyone else who might find it interesting.
