Last updated: 12th Dec, 2023

Machine learning, particularly in the field of Generative AI (also called generative modeling), has advanced significantly in recent years. Generative AI involves algorithms that create new data samples, and it is widely recognized for its ability to produce not only coherent text but also highly realistic images, videos, and music. Among the most popular examples of Generative AI are Large Language Models (LLMs) such as GPT-3 and GPT-4, which specialize in tasks like text generation, summarization, and machine translation. The technology has become immensely popular because of its diverse applications and the realism of the content it generates.

For data scientists, it is crucial to understand the different aspects of generative AI / modeling and its applications. This powerful tool has been used in a wide range of fields, including computer vision, natural language processing (NLP), drug discovery, and any other field where new data samples are needed to build a product. By learning generative AI, data scientists can develop cutting-edge generative models that simulate complex systems, generate new content, and even discover new patterns and relationships in data.

In this blog post, we will dive into the world of generative AI / modeling in machine learning and explore some of its most popular current examples. We will also discuss popular techniques used in generative modeling, such as encoder-decoder architectures (autoencoders, variational autoencoders, etc.) and generative adversarial networks (GANs). By the end of this blog post, you will have a solid understanding of generative AI and why it is an essential concept for any data scientist to learn. So let's get started!

What is Generative AI / Modeling? How does it work?

Generative AI refers to machine learning techniques that create new data samples using trained models, which are also called generative models. In other words, generative models learn the underlying patterns and structures of a given dataset and can generate new samples that resemble the original data. Let's look at a few examples.

For example, consider the task of generating realistic-looking images of faces. A generative model (such as a variational autoencoder or a GAN) can be trained on a large dataset of real face images to learn the underlying patterns and features that define a face. The model can then generate new face images that resemble the ones in the original dataset. Generative models capture the complex relationships (in the form of latent, or hidden, representations) between the various elements that make up a face image, such as the shape of the eyes, nose, mouth, and hair, as well as lighting, shading, and other environmental factors.

Another example is text generation: Large Language Models (LLMs) such as GPT-3 and GPT-4 can write essays, poems, or even code, based on the patterns they have learned from vast text datasets.

Generative modeling can be described more formally as follows:

- Let's say we have a set of observations, such as images of faces or pieces of text.

- We assume these face images or texts were generated by some unknown data distribution, $P_{data}$.

- The goal is to use these observations to train a model, $P_{model}$, that can create new face images or text that look similar to the data it was trained on.

- A good generative model $P_{model}$ has the following properties:

  - It can be used to create new samples on demand.

  - It is considered accurate if its generated samples look as though they were drawn from the same distribution as the training data. It is less accurate if its generated samples look noticeably different from the training data.
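To make the $P_{data}$ / $P_{model}$ idea concrete, here is a deliberately tiny sketch. It assumes the observations are one-dimensional numbers and that a single Gaussian is a reasonable choice for $P_{model}$; real generative models for faces or text are far more expressive, but the fit-then-sample workflow is the same. The helper names `fit_gaussian` and `sample` are hypothetical, not part of any library.

```python
import random
import statistics

def fit_gaussian(observations):
    """Estimate P_model as a Gaussian by maximum likelihood (mean and population std)."""
    mu = statistics.fmean(observations)
    sigma = statistics.pstdev(observations, mu)
    return mu, sigma

def sample(model, n, seed=None):
    """Draw n new samples from the fitted P_model."""
    mu, sigma = model
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Observations from an "unknown" P_data (here secretly a Gaussian with mean 170, std 10).
data_rng = random.Random(0)
observations = [data_rng.gauss(170.0, 10.0) for _ in range(5000)]

model = fit_gaussian(observations)      # learn P_model from the data
new_samples = sample(model, 5, seed=42)  # generate new data that resembles P_data
print(model)
print(new_samples)
```

If the fitted mean and standard deviation are close to those of $P_{data}$, the new samples are statistically hard to tell apart from the originals, which is the one-dimensional analogue of "looks like it was drawn from the training data".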

Types of Generative AI Models

The following are some of the popular types of generative AI models. These models differ in their architecture and learning approach, but all aim to generate new data that resembles the training data.

- Generative Adversarial Networks (GANs): These involve two neural networks, a generator and a discriminator, which work against each other to improve the quality of generated data, often used in realistic image generation.

- Variational Autoencoders (VAEs): VAEs are used for generating new data points by learning a compressed representation of the input data, commonly applied in image processing and generation.

- Encoder-Decoder Transformer Architecture (e.g., T5, BART): These models are designed for tasks like text translation, summarization, and question-answering, where both input and output are sequences of data.

- Encoder-Only Transformer Architecture (e.g., BERT): Primarily used for understanding and processing input data, such as in natural language understanding tasks like text classification, but not typically used for generative purposes like the other models mentioned.

- Autoregressive Models (Decoder-Only Transformers such as GPT): These models predict the next item in a sequence based on the items before it, which makes them powerful for tasks like text generation (LLMs).

- Flow-Based Models: These models, such as normalizing flows, explicitly model the data distribution through a sequence of invertible transformations, allowing for both efficient generation and exact density estimation.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) Networks: Widely used for sequence generation tasks such as text and music before the rise of transformer models.

- Hybrid Models: Some newer architectures combine elements of different models (like GANs and VAEs) to leverage the strengths of each in generating complex data.
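For the GANs in the list above, "two networks working against each other" is usually formalized as the minimax objective of the original GAN formulation. Here $G$ is the generator, $D$ the discriminator (outputting the probability that its input is real), $P_{data}$ the data distribution, and $p_z$ a simple noise distribution, for example a standard Gaussian, that is fed to the generator:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim P_{data}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ is trained to push $D(x)$ toward 1 on real samples and toward 0 on generated samples $G(z)$, while $G$ is trained to make $D(G(z))$ large, which pulls the distribution of generated samples toward $P_{data}$.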

Generative AI & Real-life Examples / Use Cases

Generative AI has a wide range of applications in fields such as image and video generation, natural language processing, music generation, and more. For example, GANs can be used to generate realistic images of objects or faces, VAEs can be used for data compression or to generate new samples with controlled attributes, and autoregressive models can be used for text generation or speech synthesis.
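The autoregressive idea, predicting the next item from the ones before it and feeding each prediction back in, can be sketched without any neural network. The example below is a word-level bigram model: it counts which word follows which in a tiny, made-up corpus and then samples one word at a time. It is a stand-in for illustration only, not how GPT-style LLMs are implemented; those replace the count table with a Transformer that conditions on the whole preceding context.

```python
import random
from collections import defaultdict

def train_bigram(corpus):
    """Count, for every word, how often each next word follows it."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]  # add start/end markers
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, max_len=20, seed=None):
    """Sample one word at a time, each conditioned on the previously generated word."""
    rng = random.Random(seed)
    word, output = "<s>", []
    for _ in range(max_len):
        candidates = list(counts[word].keys())
        weights = list(counts[word].values())
        word = rng.choices(candidates, weights=weights, k=1)[0]
        if word == "</s>":  # end-of-sentence marker stops generation
            break
        output.append(word)
    return " ".join(output)

# Hypothetical toy corpus, for illustration only.
corpus = [
    "the model generates new text",
    "the model learns patterns from data",
    "new text resembles the training data",
]
model = train_bigram(corpus)
print(generate(model, seed=0))
```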

In this section, we will explore examples related to how generative AI models can be used in real-world scenarios associated with various business domains including art, music, healthcare, finance, procurement and more.

- Content Creation (Text, Images): One of the primary applications of generative AI in content creation is text generation. This includes anything from product descriptions and news articles to social media posts and chatbot responses. Large language models (LLMs), which are trained on large text datasets such as books, articles, and other written content, play a key role here by generating text from prompts. A classic example of generative AI for text generation is ChatGPT, which generates coherent and contextually relevant responses to user queries in natural language, mimicking human-like conversation. ChatGPT is built on state-of-the-art large language models trained on a large corpus of text data, which allows it to generate text that is grammatically correct and semantically meaningful. It can be used in a variety of applications, such as customer service chatbots, virtual assistants, and even creative writing and storytelling.

In addition to text generation, generative AI can also be used to create visual content such as images and illustrations. By using generative AI to create visual content, companies can save time and resources on manual content creation while still producing high-quality, engaging content that helps attract and retain customers.

- Music Creation: Generative models have been used in music to create original compositions, generate new sounds, and aid in music analysis. In music composition, generative models can be trained on existing music datasets to create original pieces of music. These models can learn to generate music that follows certain stylistic or structural rules, or to produce music that is entirely new.

- Drug Discovery: One way generative models are used in drug discovery is through the generation of molecules that are optimized for a particular property or activity, such as potency or selectivity. For example, a generative model can be trained on a large dataset of known drugs and their corresponding properties to learn the patterns and features that are important for drug activity. The model can then be used to generate new molecules that are optimized for specific properties of interest, which can then be tested for their effectiveness in treating a particular disease.

- Finance: Generative models can also be used in fraud detection by generating synthetic data that simulates fraudulent activities. This synthetic data can then be used to train machine learning models to detect and prevent fraud. The models can learn from the synthetic data to identify patterns and anomalies in real-world data that may indicate fraudulent behavior.

- Procurement: Generative models can be used in procurement to create contracts based on existing contract data. For example, suppose a procurement team wants to create a new contract for a specific type of product or service, such as IT consulting services. The team can train a generative model on a large dataset of existing IT consulting contracts so that it learns the common patterns, language, and features of such contracts. For example, the model can learn that most IT consulting contracts include standard clauses related to deliverables, timelines, payment terms, and intellectual property rights. Based on this learning, the model can generate a new contract that includes these clauses while customizing their language and terms to reflect the specific requirements of the procurement team.
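The finance use case above relies on generating extra examples of a rare class (fraud). A lightweight way to do this, which is not a deep generative model but is often used for the same purpose, is SMOTE-style interpolation: each synthetic record is placed at a random point on the line segment between a real minority-class record and one of its nearest minority-class neighbors. The sketch below is plain Python; the function name `smote_like`, the two features (transaction amount and hour of day), and the sample values are all hypothetical.

```python
import math
import random

def smote_like(minority, n_new, k=2, seed=None):
    """Create n_new synthetic points by interpolating between a randomly chosen
    minority point and one of its k nearest minority-class neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest neighbors of `base` (excluding itself) by Euclidean distance
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment base -> neighbor, in [0, 1)
        synthetic.append(tuple(b + gap * (nb - b) for b, nb in zip(base, neighbor)))
    return synthetic

# Hypothetical fraudulent transactions: (amount in USD, hour of day)
fraud_cases = [(950.0, 2.0), (1200.0, 3.0), (870.0, 1.0), (1100.0, 4.0)]
new_cases = smote_like(fraud_cases, n_new=5, k=2, seed=7)
for row in new_cases:
    print(row)
```

In practice, the synthetic rows would be added to the training set of a fraud classifier; for real projects, the imbalanced-learn library provides a tested SMOTE implementation.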

Generative AI Modeling Example: RNN / LSTM / Transformer-based Encoder-Decoder Architecture

Recurrent Neural Networks (RNNs) can be used as the building blocks of an encoder-decoder architecture to create a generative model that learns the patterns in a given text corpus and generates new text similar to the training data. Note that transformer architectures can also be used instead of RNNs as the encoder and decoder blocks. An RNN is a type of neural network that can handle sequential data such as text. The basic idea behind an RNN is to feed the hidden state computed at the previous time step back into the network, together with the current input, allowing the network to capture temporal dependencies in the input data.
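In its usual (vanilla) form, the RNN recurrence is $h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b)$: the previous hidden state $h_{t-1}$ is combined with the current input $x_t$. The pure-Python sketch below runs this forward pass over a short sequence. The weights are small hand-picked numbers, not trained values; a real model would learn them with backpropagation through time.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_forward(inputs, W_xh, W_hh, b):
    """Run a vanilla RNN over a sequence of input vectors; return every hidden state."""
    h = [0.0] * len(b)  # initial hidden state h_0
    states = []
    for x in inputs:
        pre = [a + c + bias for a, c, bias in zip(matvec(W_xh, x), matvec(W_hh, h), b)]
        h = [math.tanh(p) for p in pre]  # h_t depends on both x_t and h_{t-1}
        states.append(h)
    return states

# Illustrative (untrained) weights: 2-dimensional inputs, 3-dimensional hidden state.
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
W_hh = [[0.2, 0.0, 0.1], [0.0, 0.3, 0.0], [0.1, 0.0, 0.2]]
b = [0.0, 0.1, -0.1]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

states = rnn_forward(sequence, W_xh, W_hh, b)
print(states[-1])  # final hidden state: a fixed-size summary of the whole sequence
```

The final hidden state is what an RNN encoder hands to the decoder as its latent representation.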

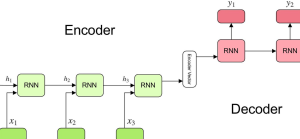

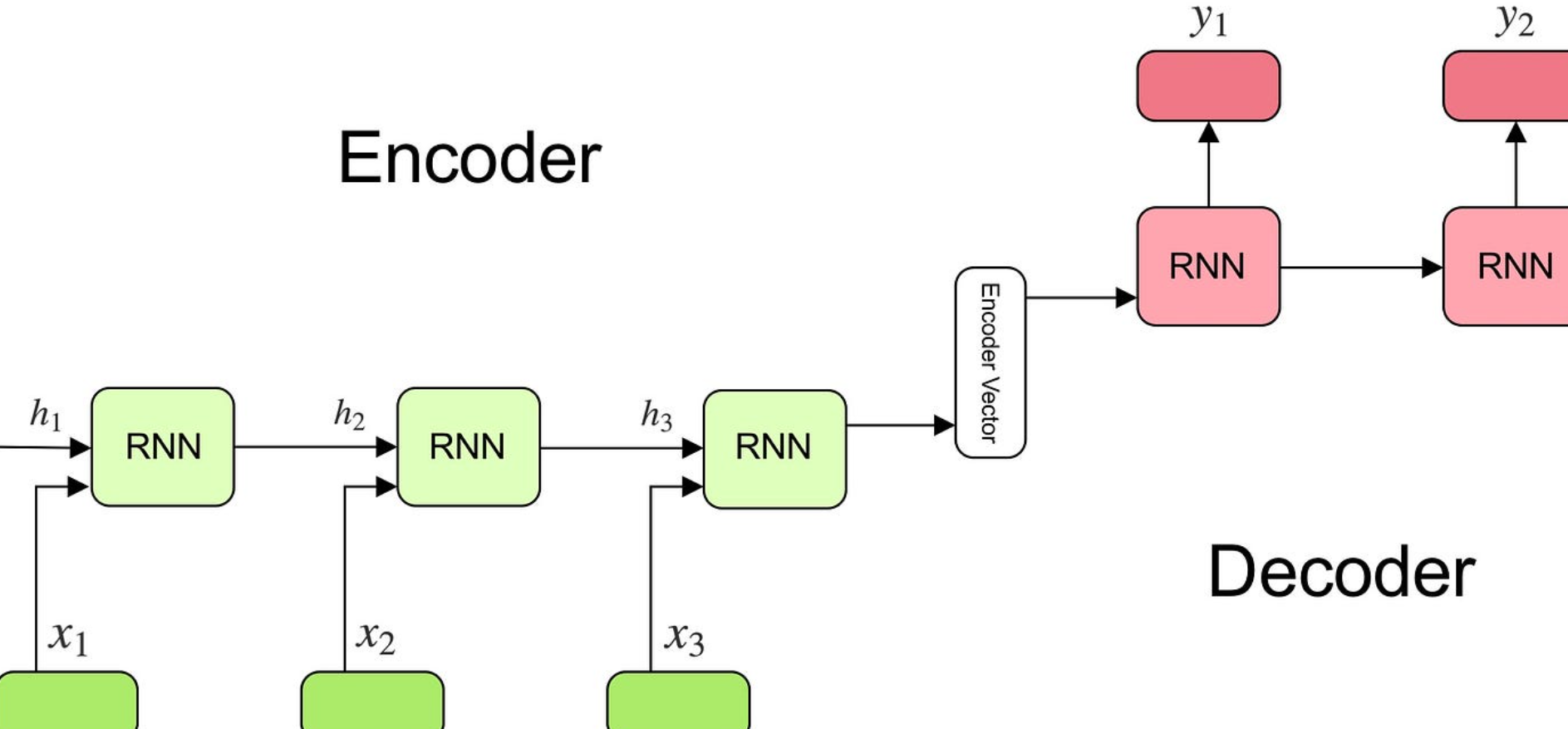

The RNN-based generative model can be trained on a corpus of text data by breaking the text into sequences of fixed length. Each sequence is fed to the encoder RNN, which transforms it into a latent representation (its final hidden state). This latent representation is then passed to the decoder RNN, which uses it to generate a prediction of the new sequence.

Once the RNN-based encoder-decoder network is trained, it can be used to generate new text. The decoder produces one token at a time and feeds each generated token back in as the input for the next step; repeating this step produces a complete text.
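The data-preparation step of breaking a corpus into fixed-length sequences might look like the following sketch. It assumes word-level tokens and, following a common convention for next-token training, pairs each input window with the same window shifted one position to the right as its target. The function name `make_windows` and the window length of 4 are arbitrary, illustrative choices.

```python
def make_windows(tokens, seq_len):
    """Split a token list into (input, target) pairs of fixed length seq_len,
    where the target is the input shifted one position to the right."""
    pairs = []
    for start in range(0, len(tokens) - seq_len):
        inp = tokens[start:start + seq_len]
        tgt = tokens[start + 1:start + seq_len + 1]
        pairs.append((inp, tgt))
    return pairs

text = "generative models learn patterns in data and generate new text"
tokens = text.split()
pairs = make_windows(tokens, seq_len=4)
for inp, tgt in pairs[:2]:
    print(inp, "->", tgt)
```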

The figures above show an encoder-decoder architecture built using RNNs for language translation.

Consider a language translation task where we want to translate a sentence from English to French. The encoder RNN as shown in the above picture would first read the English sentence and produce a fixed-size vector representation (encoder vector) of it. The decoder RNN would then use this vector to generate the corresponding French sentence, one word at a time (y1, y2, etc). The decoder RNN would use the context of the previously generated words to determine the next word in the sequence, and this process would continue until the entire French sentence is generated.
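The word-by-word decoding just described is essentially a loop. Start from a start-of-sentence token, ask the decoder for the most likely next word given the encoder vector and the previous word, append that word, and stop at an end-of-sentence token or a length limit. In the sketch below, the trained decoder RNN is replaced by a hypothetical lookup table (`toy_decoder_step`) so that the control flow runs on its own; the French words and their scores are illustrative only.

```python
def toy_decoder_step(context, prev_word):
    """Hypothetical stand-in for one step of a trained decoder RNN.
    Returns a score for each candidate next word given the previous word.
    A real decoder would also use `context` and carry its own hidden state."""
    table = {
        "<s>": {"le": 0.9, "chat": 0.1},
        "le": {"chat": 0.8, "dort": 0.2},
        "chat": {"dort": 0.7, "</s>": 0.3},
        "dort": {"</s>": 0.95, "le": 0.05},
    }
    return table[prev_word]

def greedy_decode(context, step_fn, max_len=10):
    """Generate y1, y2, ... one word at a time, feeding each word back in."""
    words, prev = [], "<s>"
    for _ in range(max_len):
        scores = step_fn(context, prev)
        prev = max(scores, key=scores.get)  # greedy choice: highest-scoring word
        if prev == "</s>":  # end-of-sentence token stops generation
            break
        words.append(prev)
    return words

# Placeholder for the encoder vector the encoder RNN would produce for "the cat sleeps".
encoder_vector = [0.2, -0.1, 0.7]
print(" ".join(greedy_decode(encoder_vector, toy_decoder_step)))
```

Greedy decoding is the simplest strategy; beam search, which keeps several candidate sentences at each step, is a common alternative in translation systems.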

Note that encoder-decoder architectures can use other neural network building blocks, such as Long Short-Term Memory (LSTM) networks and Transformers, to improve performance in various applications. These architectures have unique features that make them suitable for different tasks and data types.

LSTM is a type of RNN designed to handle long-term dependencies in sequential data, such as text or speech. It has a memory cell that can store information over long periods, allowing it to capture long-range dependencies in the input data. This makes LSTM a popular choice for language modeling, speech recognition, and other tasks that require understanding context and structure in sequential data. In fact, in the example shown above, you can use LSTM in place of a vanilla RNN in the encoder-decoder architecture.
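The memory cell is easiest to see in the standard LSTM update equations. Here $x_t$ is the current input, $h_{t-1}$ the previous hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $[h_{t-1}, x_t]$ the concatenation of the two vectors; the $W$ and $b$ terms are learned weights and biases:

$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) && \text{(input gate)} \\
\tilde{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) && \text{(output gate)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(new hidden state)}
\end{aligned}
$$

Because $c_t$ is updated additively, with the forget gate $f_t$ and input gate $i_t$ controlling what is kept and what is written, information can persist across many time steps. This is what lets an LSTM capture longer-range dependencies than a vanilla RNN.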

Encoder-decoder architectures have more recently adopted the Transformer neural network architecture, which has gained popularity in natural language processing tasks such as language translation and text generation. Transformers use self-attention to process all positions of an input sequence in parallel, rather than one step at a time like RNNs. This makes them faster to train on modern hardware and allows them to capture complex, long-range relationships between elements of the input and output data.
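The mechanism that lets a Transformer relate every position of a sequence to every other position is scaled dot-product attention, $\mathrm{softmax}(QK^\top / \sqrt{d_k})\,V$. The pure-Python sketch below computes it for a tiny example. The query, key, and value vectors are made-up numbers; a real Transformer would obtain them from learned projections, use multiple attention heads, and run on a matrix library.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: each query attends to every key."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:  # iterations are independent, so they can run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three token positions with 2-dimensional queries, keys, and values (illustrative).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
outputs, weights = attention(Q, K, V)
for row in weights:
    print([round(w, 3) for w in row])
```

Unlike an RNN, where step $t$ must wait for $h_{t-1}$, the per-query computations here do not depend on one another; in practice they are computed together as a single matrix multiplication, which is where the speed advantage comes from.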

Conclusion

In conclusion, generative modeling is a powerful machine learning technique that allows us to generate new data from a given dataset. By learning the underlying patterns and structures in the input data, generative models can create new samples that closely resemble the original data.

We have seen several examples of how generative AI has a wide range of applications in industries such as finance, healthcare, procurement, and music. In finance, generative AI models can be used for predicting stock prices and identifying fraud. In healthcare, generative AI models can be used to generate synthetic medical images for training machine learning models. In procurement, generative AI models can be used to manage contracts, optimize supply chain management, and reduce costs. In music, generative models can be used to generate new songs and improve music recommendation systems.

Some of the most popular approaches to generative AI modeling use Recurrent Neural Networks (RNNs), LSTMs, and Transformers. RNNs are particularly well-suited for modeling sequential data such as text and music, and they have been used successfully in many applications such as language modeling and text generation. If you want to learn more, please drop a message and I will reach out to you.
