Tag Archives: generative ai

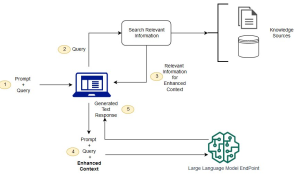

Retrieval Augmented Generation (RAG) & LLM: Examples

Last updated: 25th Jan, 2025 Have you ever wondered how to seamlessly integrate the vast knowledge of Large Language Models (LLMs) with the specificity of domain-specific knowledge stored in file storage, relational databases, graph databases, vector databases, etc.? As the world of LLMs continues to evolve, the need for more sophisticated and contextually relevant responses from LLMs becomes paramount. A lack of contextual knowledge can cause LLMs to hallucinate, producing inaccurate, unsafe, and factually incorrect responses. This is where augmenting prompts with the question and relevant context, the approach known as retrieval-augmented generation (RAG), comes into the picture. For data scientists and product managers keen …
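To make the idea of context augmentation concrete, here is a minimal sketch of the retrieve-then-augment step. It is an illustration under stated assumptions, not the method from the post: the documents, the bag-of-words cosine similarity (a stand-in for a real embedding model and vector database), and the helper names `retrieve` and `build_prompt` are all hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    # Cosine similarity between two bag-of-words Counters.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, top_k=1):
    # Rank documents by similarity to the question (assumed stand-in
    # for an embedding-based vector search).
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, context_chunks):
    # Augment the prompt with the retrieved context before it is sent to an LLM.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical domain-specific knowledge.
DOCS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support desk is open Monday to Friday, 9am to 5pm.",
    "Shipping to Europe takes 5 to 7 business days.",
]

question = "How long do refunds take?"
top = retrieve(question, DOCS)
prompt = build_prompt(question, top)
print(prompt)
```

The resulting `prompt` string is what would be passed to the LLM; the model call itself is left out because it depends on the provider.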

Completion Model vs Chat Model: Python Examples

In this blog, we will learn about the concepts of completion and chat large language models (LLMs) with the help of Python examples. What’s the Completion Model in LLM? A completion model is a type of LLM that takes a text input and generates a text output, which is called a completion. In other words, it generates text that continues from a given prompt or partial input. When provided with an initial piece of text, the model uses what it learned during training to predict and generate the most likely subsequent text. A completion model can generate summaries, translations, stories, code, lyrics, etc., depending on …
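The difference in input shape can be sketched without calling any model. A completion model takes one string to continue, while a chat model typically takes a list of role-tagged messages. The role/content dictionary layout below follows a widely used convention, and `to_completion_prompt` with its flattening format is a hypothetical helper for illustration only.

```python
def to_completion_prompt(messages):
    """Flatten role-tagged chat messages into one prompt string.

    The "role: content" layout is an assumed, illustrative format.
    """
    lines = [f"{m['role']}: {m['content']}" for m in messages]
    lines.append("assistant:")  # cue the model to continue as the assistant
    return "\n".join(lines)

# Input for a completion model: a single piece of text to continue.
completion_input = "Once upon a time, in a small village,"

# Input for a chat model: a conversation as a list of messages.
chat_input = [
    {"role": "system", "content": "You are a helpful storyteller."},
    {"role": "user", "content": "Start a story set in a small village."},
]

flattened = to_completion_prompt(chat_input)
print(flattened)
```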

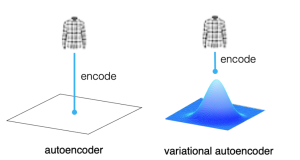

Autoencoder vs Variational Autoencoder (VAE): Differences, Example

Last updated: 12th May, 2024 In the world of generative AI models, autoencoders (AE) and variational autoencoders (VAEs) have emerged as powerful unsupervised learning techniques for data representation, compression, and generation. While they share some similarities, these algorithms have unique properties and applications that distinguish them. This blog post aims to help machine learning / deep learning enthusiasts understand these two methods, their key differences, and how they can be utilized in various data-driven tasks. We will learn about autoencoders and VAEs, understanding their core components, working mechanisms, and common use cases. We will also try and understand their differences in terms of architecture, objectives, and outcomes. What are Autoencoders? …

Transfer Learning vs Fine Tuning LLMs: Differences

Last updated: 23rd Jan, 2024 Two NLP concepts that are fundamental to large language models (LLMs) are transfer learning and fine-tuning pre-trained LLMs. Strictly speaking, fine-tuning here means full fine-tuning, because transfer learning is itself a form of fine-tuning. Despite their interconnected nature, they are distinct methodologies that serve unique purposes when training foundation LLMs to achieve different objectives. In this blog, we will explore the differences between transfer learning and full fine-tuning, learning about their characteristics and how they come into play in real-world scenarios related to natural language understanding (NLU) and natural language generation (NLG) tasks with the help of examples. We will also learn …

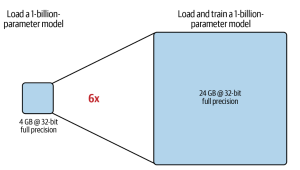

LLM Training & GPU Memory Requirements: Examples

As data scientists and MLOps engineers, you must have come across the challenges of managing GPU requirements for training and deploying large language models (LLMs). In this blog, we will delve deep into the intricacies of GPU memory demands when dealing with LLMs. We’ll use various examples to better understand how GPU memory impacts the performance and feasibility of training these LLMs. Whether you’re planning to train a foundation (pre-trained) model or fine-tune an existing model, these insights aim to guide you through the crucial considerations of GPU memory allocation. More details can be found in this book: Generative AI on AWS. Understanding GPU …
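As a rough, back-of-the-envelope sketch of GPU memory demands (not figures taken from the post), the arithmetic below assumes 4 bytes per parameter in fp32 and 2 bytes in fp16/bf16, and a training setup with fp32 gradients plus the two moment estimates kept by the Adam optimizer. Activations and temporary buffers are deliberately excluded, so real usage would be higher. The function names are hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weights_gb(num_params, dtype="fp32"):
    # Memory for the model weights alone, in GB (1 GB = 1e9 bytes).
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def training_state_gb(num_params):
    # Assumed fp32 training with Adam:
    # weights (4) + gradients (4) + two Adam moment estimates (8) = 16 bytes per parameter.
    # Activations and temporary buffers are NOT included.
    return num_params * (4 + 4 + 8) / 1e9

one_billion = 1_000_000_000
print(weights_gb(one_billion, "fp32"))   # weights only, full precision
print(weights_gb(one_billion, "bf16"))   # weights only, half precision
print(training_state_gb(one_billion))    # weights + gradients + optimizer state
```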

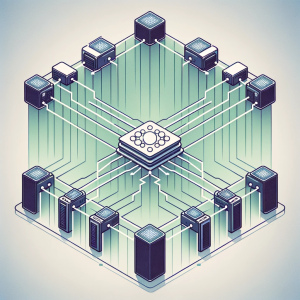

Distributed LLM Training & DDP, FSDP Patterns: Examples

Training large language models (LLMs) like GPT-4 requires distributed computing patterns, because vast amounts of data and models with multi-billion parameters must be handled with limited GPU memory (NVIDIA A100 with 80 GB currently). In this blog, we will delve deep into some of the most important distributed LLM training patterns, such as distributed data parallel (DDP) and fully sharded data parallel (FSDP). The primary difference between these patterns is based on how the model is split or sharded across GPUs in the system. You might want to check out more details in this book: Generative AI on …
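The difference in how model state is placed on GPUs can be illustrated with simple arithmetic. This is a sketch under assumptions, not a training recipe: it assumes 16 bytes of model state per parameter (fp32 weights, gradients, and Adam optimizer state), ignores activations and communication buffers, and treats FSDP as fully sharding all model state evenly across GPUs. The function names are hypothetical.

```python
def model_state_gb(num_params, bytes_per_param=16):
    # Assumed 16 bytes per parameter: fp32 weights + gradients + Adam state.
    return num_params * bytes_per_param / 1e9

def per_gpu_gb(num_params, num_gpus, strategy):
    total = model_state_gb(num_params)
    if strategy == "ddp":
        # DDP keeps a full replica of the model state on every GPU.
        return total
    if strategy == "fsdp":
        # FSDP (full sharding assumed) splits the model state across GPUs.
        return total / num_gpus
    raise ValueError(f"unknown strategy: {strategy}")

params = 7_000_000_000  # hypothetical 7-billion-parameter model
gpus = 8
gpu_memory_gb = 80      # per-GPU memory mentioned for the A100

for strategy in ("ddp", "fsdp"):
    needed = per_gpu_gb(params, gpus, strategy)
    fits = needed <= gpu_memory_gb
    print(f"{strategy}: {needed:.1f} GB per GPU, fits in {gpu_memory_gb} GB: {fits}")
```

Under these assumptions the replicated state does not fit on a single 80 GB GPU, while the sharded state does, which is the motivation for sharding.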

Transformer Architecture Types: Explained with Examples

Are you fascinated by the power of deep learning large language models that can generate creative writing, answer complex questions, etc.? Ever wondered how these LLMs understand and process human language with such finesse? At the heart of these remarkable achievements lies a machine learning model architecture that has revolutionized the field of Natural Language Processing (NLP): the Transformer architecture and its types. But what makes Transformer models so special? From encoding sentences into numerical embeddings to employing attention mechanisms that capture the relationships between words, we will dissect different types of Transformer architectures, provide real-world examples, and even dive into the mathematics that governs their operation. Let’s explore …

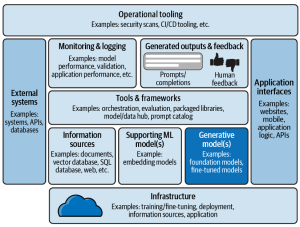

Blueprint: Deploying Generative AI Applications

In this blog, we will learn about a comprehensive framework for the deployment of generative AI applications, breaking down the essential components that architects must consider. Learn more about this topic from this book: Generative AI on AWS. The following is a solution / technology architecture that represents a blueprint for deploying generative AI applications. The following is an explanation of the different components of this architectural viewpoint:

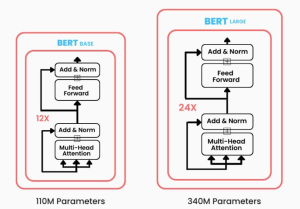

BERT vs GPT Models: Differences, Examples

Have you been wondering what sets apart two of the most prominent transformer-based machine learning models in the field of NLP, Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformers (GPT)? While BERT uses an encoder-only transformer architecture, GPT models are based on a decoder-only transformer architecture. In this blog, we will delve into the core architecture, training objectives, real-world applications, examples, and more. By exploring these aspects, we’ll learn about the unique strengths and use cases of both BERT and GPT models, providing you with insights that can guide your next LLM-based NLP project or research endeavor. Differences between BERT vs GPT Models BERT, introduced in 2018, marked a significant …
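One practical consequence of the encoder-only versus decoder-only split is the attention mask. In an encoder such as BERT every token can attend to every other token, while in a decoder such as GPT each token attends only to itself and earlier positions. The sketch below builds both masks as plain nested lists (1 = may attend, 0 = masked); the function names are hypothetical and no model is involved.

```python
def bidirectional_mask(n):
    # Encoder-style: every position can attend to every position.
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    # Decoder-style: position i can attend only to positions j <= i.
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

tokens = ["the", "cat", "sat"]

print("encoder (bidirectional):")
for row in bidirectional_mask(len(tokens)):
    print(row)

print("decoder (causal):")
for row in causal_mask(len(tokens)):
    print(row)
```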

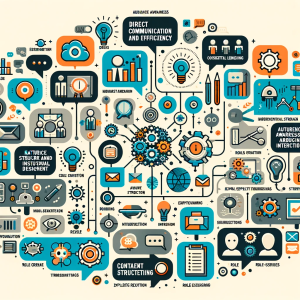

ChatGPT Prompts Best Practices: Examples

In this blog, you will learn the best practices you can adopt when writing prompts for ChatGPT. Here is the list:

- Direct Communication and Efficiency
- Audience Awareness and Contextual Understanding
- Interactive and Engaging Prompting
- Prompt Structure and Instructional Design
- Natural and Unbiased Interaction
- Content Creation and Revision
- Role-Assigning and Scripting
- Explicit Requirements and Mimicry

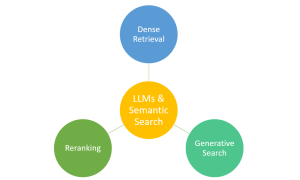

Large Language Models (LLMs) & Semantic Search: Examples

Have you ever marveled at how typing a few words into a search engine yields exactly the information you’re looking for from the vast expanse of the web? This is largely thanks to the advancements in semantic search, bolstered by technologies like Large Language Models (LLMs). Semantic search, which focuses on understanding the intent and contextual meaning behind queries, benefits from LLMs to provide more accurate and relevant results. However, it’s important to note that traditional search engines also rely on a sophisticated mix of algorithms, indexing, and ranking systems. LLMs complement these systems by enhancing their ability to interpret complex queries, making your search experience more intuitive and effective. …

Procurement Analytics Use Cases Examples

Last updated: 26th Nov, 2023 Procurement analytics applications have seen tremendous growth in the last few years. With so much data available, advances in data analytics and related technologies, and the need for digital transformation across procurement organizations, it’s important to know how procurement analytics can help you make better business decisions. This blog will cover procurement analytics and key use case examples from advanced analytics fields such as machine learning, AI, and generative AI. These will be useful for business stakeholders such as category managers, sourcing managers, supplier relationship managers, business analysts/product managers, and data scientists looking to implement different use cases using machine learning. The use cases around data-driven decision …

First Principles Thinking using ChatGPT

Have you ever wondered why an object such as a chair is shaped the way it is, or why it’s even needed in the first place? What mysteries unravel when we dig into the very essence of the everyday objects and concepts around us? In a world of well-established beliefs and conventional wisdom, hunting for innovative answers and deciphering the secrets hidden behind the everyday becomes not just a curiosity, but a necessity. This is where first principles thinking comes to the rescue. I have posted a detailed blog on first principles thinking – First principles thinking: Concepts & Examples. In this blog, let’s explore how we can utilize …

Generative AI Framework for Product Managers: Examples

Ever wondered how you as a product manager can stay ahead in the competitive era fuelled by technological advancements such as generative AI? Are you constantly grappling with the pressure to deliver groundbreaking solutions in line with your business goals? As a product manager, wouldn’t it be revolutionary to have some kind of a playbook that simplifies these challenges? What exactly can generative AI do for modern product managers? Which areas of your daily struggles can it alleviate, and what AI frameworks are best suited for your unique challenges? In this blog, we will dive into the potential of Generative AI vis-a-vis real-life use cases for product managers. Use Cases: …

OpenAI Python API Example for NLP Tasks

Ever wondered how you can leverage the power of OpenAI’s GPT-3 and GPT-3.5 (from Jan 2024 onwards) directly in your Python application? Are you curious about generating human-like text with just a few lines of code? This blog post will walk you through an example Python code snippet that utilizes OpenAI’s Python API for different NLP tasks such as text generation. Check out my other post on how to use the LangChain framework for text generation using OpenAI GPT models. OpenAI Python APIs The OpenAI Python API is an interface that allows you to interact with OpenAI’s language models, including their GPT-3 model. The following are different popular models that you …

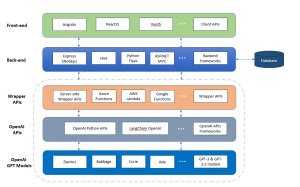

Architecting a Generative AI Platform for GPT-based LLM Apps

Have you ever wondered how to build a scalable Generative AI platform based on OpenAI GPT models that can serve different applications? Are you a data scientist, product manager, or software engineer looking to understand the intricacies of the architecture of such a scalable generative AI platform? This blog aims to demystify the architectural building blocks needed to create a robust GPT-based platform. By the end, you will have a clear roadmap for architecting, designing, and implementing your own platform for GPT-based large language model (LLM) applications. Generative AI Platform Architecture for GPT-based LLM Apps The following is the technology architecture of a generative AI platform which can leverage OpenAI GPT based …
