Are you intrigued by the inner workings of large language models (LLMs) such as BERT and the GPT series? Ever wondered how these models manage to understand human language with such precision? What are the critical stages that transform them from simple neural networks into powerful tools capable of text prediction, sentiment analysis, and more?

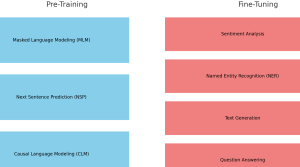

The answer lies in two vital phases: pre-training and fine-tuning. These stages not only make language models adaptable to various tasks but also bring them closer to understanding language the way humans do. In this post, we’ll dive into the fascinating journey of pre-training and fine-tuning in LLMs, complete with real-world examples. Whether you are a data scientist, machine learning engineer, or simply an AI enthusiast, unraveling these aspects will provide deeper insight into how LLMs work and how they can be leveraged for different custom tasks.

What Is Pre-training in LLM Modeling?

Pre-training is the process of training a model on a large corpus of text, usually containing billions of words. This phase helps the model to learn the structure of the language, grammar, and even some facts about the world. It’s like teaching the model the basic rules and nuances of a language. Imagine teaching a child the English language by reading a vast number of books, articles, and web pages. The child learns the syntax, semantics, and common phrases but may not yet understand specific technical terms or domain-specific knowledge.

Key Characteristics

- Data: Uses a large, general corpus (e.g., Wikipedia, Common Crawl).

- Objective: Learn the underlying patterns of the language.

- Model: A large neural network is trained from scratch or from an existing base model.

- Outcome: A general-purpose model capable of understanding language but not specialized in any specific task.

The pre-training tasks are easier to understand through concrete examples from models such as BERT and GPT. Check out the post, BERT & GPT Models: Differences, Examples, to learn more about these models. Here’s how pre-training is implemented for the BERT and GPT series of models.

BERT (Bidirectional Encoder Representations from Transformers)

BERT is known for its bidirectional understanding of the text. During pre-training, BERT focuses on the following tasks:

1. Masked Language Modeling (MLM):

- Task: Randomly mask some of the tokens in the input (about 15% in the original BERT setup) and predict them from the surrounding context.

- Real-World Example: If given the sentence “The cat sat on the ___,” the model learns to predict the missing word as “mat” by understanding the context.

- Objective: Because the model sees the words on both sides of each mask, it learns bidirectional context and gets better at relating different parts of a sentence.
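To make the masking step concrete, here is a minimal sketch in plain Python. It is a simplification, not BERT's actual preprocessing: the original BERT setup selects about 15% of tokens and replaces only most of them with the mask token (some become random tokens and some stay unchanged), whereas this sketch always inserts `[MASK]`. The function name `mask_tokens` and its parameters are hypothetical, chosen only for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels).

    labels[i] holds the original token where a mask was applied and
    None elsewhere, so the loss would only be computed on masked positions.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # hide the token from the model
            labels.append(tok)    # ...and keep it as the prediction target
        else:
            masked.append(tok)
            labels.append(None)   # not a prediction target
    return masked, labels

tokens = "the cat sat on the mat".split()
# A higher mask_prob than 0.15 is used here only so a short sentence
# is likely to contain at least one mask.
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

During pre-training, the model receives `masked` as input and is scored only on the positions where `labels` is not `None`.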

2. Next Sentence Prediction (NSP):

- Task: Predict whether the second of two sentences actually follows the first in the original text.

- Real-World Example: Given the sentences “I have a cat.” and “It loves to purr.”, the model learns that these sentences are likely to be consecutive.

- Objective: This task enables BERT to understand the relationship between consecutive sentences, improving its understanding of narrative flow and coherence.
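The training data for NSP can be sketched as labeled sentence pairs: roughly half are true consecutive pairs and half pair a sentence with a randomly chosen one. The sketch below is a simplified illustration. It assumes unique sentences, draws the random "wrong" sentence from the same short list rather than from a large corpus, and `make_nsp_pairs` is a hypothetical helper name.

```python
import random

def make_nsp_pairs(sentences, seed=0):
    """Build (sentence_a, sentence_b, label) triples.

    label 1: sentence_b really follows sentence_a.
    label 0: sentence_b is a random sentence that does not follow it.
    Assumes at least three unique sentences.
    """
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            pairs.append((sentences[i], sentences[i + 1], 1))
        else:
            # Exclude the sentence itself and its true successor.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(candidates), 0))
    return pairs

sentences = [
    "I have a cat.",
    "It loves to purr.",
    "The stock market fell today.",
    "Investors were worried.",
]
pairs = make_nsp_pairs(sentences, seed=0)
for a, b, label in pairs:
    print(label, "|", a, "->", b)
```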

GPT (Generative Pre-trained Transformers)

Unlike BERT, GPT is trained unidirectionally: it reads text left to right and never looks ahead. Its pre-training task is:

1. Autoregressive or Causal Language Modeling (CLM):

- Task: Predict the next word (token) in a sentence based on all the previous words, making it a unidirectional task.

- Real-World Example: Given the sentence “The dog wagged its,” the model learns to predict the next word as “tail.”

- Objective: This task helps GPT understand the sequential nature of language, enabling it to generate coherent and contextually relevant text.
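One way to picture causal language modeling is that a single sentence yields many training examples, each pairing a left-hand context with the word that comes next. The sketch below shows only this data view, not a model. It splits on whitespace instead of using a real tokenizer, and `next_token_examples` is a hypothetical helper name.

```python
def next_token_examples(tokens):
    """Turn a token sequence into (context, next_token) pairs.

    Each context contains only the tokens to the left of the target,
    which is what makes the task unidirectional.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

examples = next_token_examples("the dog wagged its tail".split())
for context, target in examples:
    print(context, "->", target)
# The last pair is (['the', 'dog', 'wagged', 'its'], 'tail')
```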

What Is Fine-tuning in LLM Modeling?

Fine-tuning comes after pre-training. Once the model has learned the general characteristics of the language as part of pre-training, it is further trained on a smaller, domain-specific dataset to specialize it for a particular task or subject area. Continuing the child analogy, now that the child understands English, they are taught specific subjects like biology or law. They learn the unique terms, concepts, and ways of thinking in these fields.

Key Characteristics

- Data: Uses a smaller, task-specific corpus (e.g., medical texts for a healthcare application).

- Objective: Specialize the model for a particular task or domain.

- Model: The pre-trained model is further trained, often with a smaller learning rate.

- Outcome: A specialized model capable of performing specific tasks like classification, sentiment analysis, etc.
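The mechanics in the list above (start from pre-trained weights, continue training on a smaller dataset, use a smaller learning rate) can be illustrated with a deliberately tiny toy. This is not a language model: it is a single-weight linear model, and the data, learning rates, and the helper name `sgd_fit` are all assumptions chosen only to show the two-stage pattern.

```python
def sgd_fit(weight, data, lr, epochs):
    """Fit y ~ weight * x with plain gradient descent on squared error."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (weight * x - y) * x  # d/dw of (w*x - y)^2
            weight -= lr * grad
    return weight

# "General" data: a larger set following y = 2x.
general_data = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
# "Domain" data: a smaller set following a slightly different rule, y = 2.5x.
domain_data = [(1.0, 2.5), (2.0, 5.0)]

# Stage 1: pre-train from scratch (weight starts at 0).
pretrained = sgd_fit(0.0, general_data, lr=0.05, epochs=50)

# Stage 2: fine-tune, starting from the pre-trained weight,
# on the smaller dataset and with a smaller learning rate.
finetuned = sgd_fit(pretrained, domain_data, lr=0.01, epochs=50)

print(round(pretrained, 3), round(finetuned, 3))
```

The fine-tuned weight ends up close to 2.5 while having started from the pre-trained value near 2.0, rather than from zero. The smaller learning rate keeps each update gentle, which in real models helps preserve what was learned during pre-training.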

Fine-tuning is a crucial step that adapts pre-trained models like BERT and GPT to specific tasks or domains. Here are examples of fine-tuning tasks commonly applied to BERT and GPT models:

BERT (Bidirectional Encoder Representations from Transformers)

1. Sentiment Analysis:

- Task: Determining the sentiment or emotion expressed in a piece of text.

- Real-World Example: Fine-tuning BERT to analyze customer reviews on a product and categorize them as positive, negative, or neutral.

- Objective: Enables businesses to understand customer opinions and make informed decisions.

2. Named Entity Recognition (NER):

- Task: Identifying and classifying named entities such as people, organizations, locations, etc.

- Real-World Example: Fine-tuning BERT to extract and categorize entities in news articles, like recognizing “Apple” as a company.

- Objective: Useful in information extraction, summarization, and other text analysis tasks.
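For NER, fine-tuning data is commonly labeled per token. The sketch below assumes the widely used BIO convention (`B-` marks the beginning of an entity, `I-` a continuation, `O` no entity), which this post does not itself specify, and shows how such per-token predictions could be turned back into entities. The helper name `extract_entities` and the sample sentence are hypothetical.

```python
def extract_entities(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, entity_type) tuples."""
    entities, current, current_type = [], [], None
    for tok, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if current:  # close any entity still open
                entities.append((" ".join(current), current_type))
            current, current_type = [tok], tag[2:]
        elif tag.startswith("I-") and current and tag[2:] == current_type:
            current.append(tok)  # continue the open entity
        else:
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:  # entity that runs to the end of the sentence
        entities.append((" ".join(current), current_type))
    return entities

tokens = ["Apple", "opened", "a", "store", "in", "New", "York"]
tags = ["B-ORG", "O", "O", "O", "O", "B-LOC", "I-LOC"]
print(extract_entities(tokens, tags))
# [('Apple', 'ORG'), ('New York', 'LOC')]
```

In a fine-tuned model, the `tags` list would come from the model's per-token predictions instead of being written by hand.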

GPT (Generative Pre-trained Transformers)

1. Text Generation:

- Task: Generating coherent and contextually relevant text based on a given prompt.

- Real-World Example: Fine-tuning GPT to write creative stories or poems based on an initial sentence or theme.

- Objective: Enhances content creation, marketing, and other creative writing tasks.

2. Question Answering:

- Task: Providing precise answers to specific questions based on a given context or document.

- Real-World Example: Fine-tuning GPT to answer questions related to a legal document, helping lawyers quickly find relevant information.

- Objective: Facilitates information retrieval and supports decision-making in various domains.

Differences between Pre-training and Fine-tuning in LLMs

The following table summarizes the differences between pre-training and fine-tuning when training LLMs:

| Aspect | Pre-Training | Fine-Tuning |
|---|---|---|
| Data | Large, general corpus | Smaller, domain-specific dataset |
| Objective | Understand general language patterns | Specialize in a specific task/domain |
| Training | From scratch or from an existing base | Further training of a pre-trained model |
| Outcome | General-purpose language understanding | Task/domain-specific performance |

Summary

- Pre-training builds the foundational knowledge of the language, akin to teaching a child the basics of English.

- Fine-tuning specializes this knowledge for specific tasks or domains, similar to teaching a subject like biology or law.

- Together, these two stages enable the creation of highly effective and adaptable language models that can be tailored for various applications.

