Category Archives: Python

Python – Extract Text from HTML using BeautifulSoup

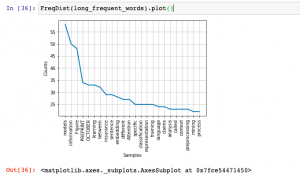

In this post, you will learn how to use Python BeautifulSoup and NLTK to extract words from HTML pages and perform text analysis such as frequency distribution. The example in this post reads HTML pages directly from the website and then analyzes the text. However, you could also download the web pages and perform the analysis by loading them from local storage. Python Code for Extracting Text from HTML Pages Here is the Python code for extracting text from HTML pages and performing text analysis. Pay attention to the following in the code given below: urllib.request is used to read the HTML page …

Python – Extract Text from PDF file using PDFMiner

In this post, you will get a quick code sample showing how to use PDFMiner, a Python library, to extract text from PDF files and perform text analysis. I will publish several other posts on how to use other Python libraries for extracting text from PDF files. In this post, the following topics are covered: How to set up PDFMiner Python code for extracting text from PDF file using PDFMiner Setting up PDFMiner Here is how you would set up PDFMiner.six. You could execute the following command to set up PDFMiner while working in a Jupyter notebook: Python Code for Extracting Text from PDF file …

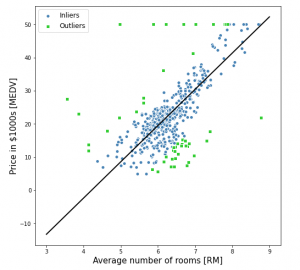

RANSAC Regression Explained with Python Examples

In this post, you will learn the concepts behind the RANSAC regression algorithm, along with a Python Sklearn example of RANSAC regression using RANSACRegressor. The RANSAC regression algorithm is useful for handling datasets that contain outliers. Instead of dealing with outliers using statistical and other techniques, one can use the RANSAC regression algorithm, which takes care of the outlier data. In this post, the following topics are covered: Introduction to RANSAC regression RANSAC Regression Python code example Introduction to RANSAC Regression The RANSAC (RANdom SAmple Consensus) algorithm takes the linear regression algorithm to the next level by excluding the outliers in the training dataset. The presence of outliers in the training dataset does impact …

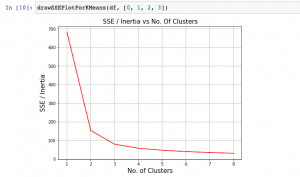

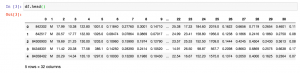

K-means Clustering Elbow Method & SSE Plot – Python

In this post, you will quickly learn how to find the elbow point using an SSE or Inertia plot with Python code. You may also want to check out my blog on K-means clustering explained with Python example. The following topics get covered in this post: What is Elbow Method? How to create SSE / Inertia plot? How to find Elbow point using SSE Plot What is Elbow Method? The elbow method is one of the most popular methods used to select the optimal number of clusters by fitting the model with a range of values for K in the K-means algorithm. The elbow method requires drawing a line plot between SSE (Sum of Squared errors) …

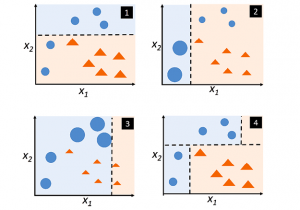

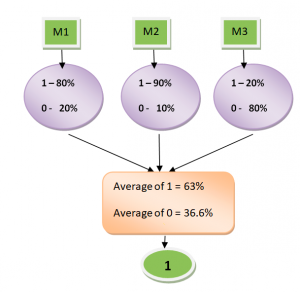

Adaboost Algorithm Explained with Python Example

In this post, you will learn about the boosting technique and the AdaBoost algorithm with the help of a Python example. You will also learn about the concept of boosting in general. Boosting classifiers are a class of ensemble-based machine learning algorithms which primarily help reduce bias, and also variance. It is very important for you as a data scientist to learn both bagging and boosting techniques for solving classification problems. Check my post on bagging – Bagging Classifier explained with Python example for learning more about the bagging technique. The following represents some of the topics covered in this post: What is Boosting and Adaboost Algorithm? Adaboost algorithm Python example What is Boosting and Adaboost Algorithm? As …

Hard vs Soft Voting Classifier Python Example

In this post, you will learn about a popular and powerful ensemble classifier called the Voting Classifier, using a Python Sklearn example. The voting classifier comes with two voting options: hard and soft voting. Hard vs Soft Voting classifier is illustrated with code examples. The following topics are covered in this post: Voting classifier – Hard vs Soft voting options Voting classifier Python example Voting Classifier – Hard vs Soft Voting Options Voting Classifier is an estimator that combines models representing different classification algorithms associated with individual weights for confidence. The Voting classifier estimator built by combining different classification models turns out to be a stronger meta-classifier that balances out the individual …
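To make the hard vs soft distinction concrete, here is a minimal pure-Python sketch. It is not the Sklearn implementation (there, `VotingClassifier` takes a `voting` parameter set to `"hard"` or `"soft"`); the helper names `hard_vote` and `soft_vote` and the probability values are hypothetical, chosen only for illustration.

```python
from collections import Counter

def hard_vote(predicted_labels):
    """Return the label predicted by the most classifiers (majority vote)."""
    return Counter(predicted_labels).most_common(1)[0][0]

def soft_vote(probabilities, weights=None):
    """Return the class index with the highest weighted average probability."""
    if weights is None:
        weights = [1.0] * len(probabilities)
    total = sum(weights)
    n_classes = len(probabilities[0])
    averaged = [
        sum(w * p[c] for w, p in zip(weights, probabilities)) / total
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=averaged.__getitem__)

# Hypothetical class probabilities from three classifiers for one sample.
probs = [[0.45, 0.55], [0.40, 0.60], [0.90, 0.10]]

# Each classifier's hard prediction is its most probable class.
labels = [max(range(2), key=p.__getitem__) for p in probs]

hard_result = hard_vote(labels)   # two of three classifiers pick class 1
soft_result = soft_vote(probs)    # one very confident classifier tips the average to class 0
```

With these made-up numbers the two schemes disagree: hard voting counts only the predicted labels, while soft voting lets a confident classifier outweigh two hesitant ones.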

PyTorch – How to Load & Predict using Resnet Model

In this post, you will learn how to load a pre-trained ResNet model and make predictions with it using the PyTorch library. Here is the arXiv paper on ResNet. Before getting into loading and predicting with ResNet (Residual neural network) using PyTorch, you would want to learn how to load different pretrained models such as AlexNet, ResNet, DenseNet, GoogLeNet, VGG etc. The PyTorch Torchvision project allows you to load the models. Note that the torchvision package consists of popular datasets, model architectures, and common image transformations for computer vision. Here is the command: The output of the above will list all the pre-trained models available for loading and prediction. You may …

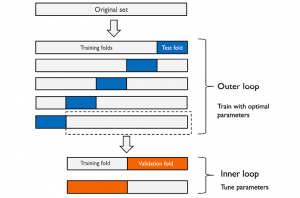

Python – Nested Cross Validation for Algorithm Selection

In this post, you will learn about the nested cross-validation technique and how you could use it to select the best algorithm out of two or more algorithms used to train a machine learning model. The usage of nested cross-validation is illustrated using a Python Sklearn example. When it comes to selecting models trained with a particular algorithm and the best combination of hyperparameters, you can adopt model tuning techniques such as the following: Grid search Randomized search Validation curve The following topics get covered in this post: Why nested cross-validation? Nested cross-validation with Python Sklearn example Why Nested Cross-Validation? Nested cross-validation technique is used for estimating …

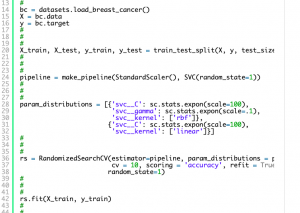

Randomized Search Explained – Python Sklearn Example

In this post, you will learn about one of the machine learning model tuning techniques, called Randomized Search, which is used to find the best combination of hyperparameters for coming up with the best model. The randomized search concept will be illustrated using a Python Sklearn code example. As a data scientist, you must learn some of these model tuning techniques to come up with the best models. You may want to check some of the other posts on tuning model parameters such as the following: Sklearn validation_curve for tuning model hyper parameters Sklearn GridSearchCV for tuning model hyper parameters In this post, the following topics will be covered: What and why …

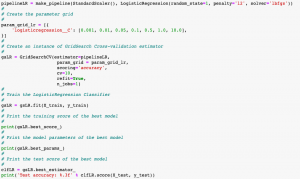

Grid Search Explained – Python Sklearn Examples

In this post, you will learn about another machine learning model hyperparameter optimization technique called Grid Search, with the help of Python Sklearn code examples. In one of the earlier posts, you learned about another hyperparameter optimization technique, namely the validation curve. As a data scientist, it will be useful to learn some of these model tuning techniques (tuning hyperparameters), as they help you select the most appropriate models with the most appropriate parameters. The following are some of the topics covered in this post: What & Why of grid search? Grid search with Python Sklearn examples What & Why of Grid Search? Grid Search technique helps in performing exhaustive search over specified parameter (hyper parameters) values for …
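The idea of an exhaustive search over specified parameter values can be sketched in a few lines of plain Python. This is only an illustration of the concept, not Sklearn's `GridSearchCV`: the `grid_search` helper is hypothetical, and `toy_score` is a made-up stand-in for a cross-validated model score.

```python
from itertools import product

def grid_search(score_fn, param_grid):
    """Evaluate score_fn on every combination in param_grid and keep the best."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(C, gamma):
    """Hypothetical score that peaks at C=1.0, gamma=0.1 (stands in for a CV score)."""
    return -((C - 1.0) ** 2) - (gamma - 0.1) ** 2

param_grid = {"C": [0.1, 1.0, 10.0], "gamma": [0.01, 0.1, 1.0]}

# 3 values of C times 3 values of gamma gives 9 combinations to evaluate.
best_params, best_score = grid_search(toy_score, param_grid)
```

The cost grows multiplicatively with each added parameter, which is the usual motivation for considering randomized search when the grid gets large.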

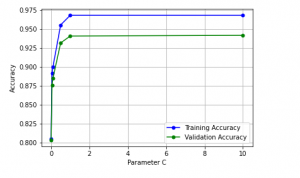

Validation Curves Explained – Python Sklearn Example

In this post, you will learn about validation curves with a Python Sklearn example. You will learn how validation curves can help diagnose or assess your machine learning models in relation to underfitting and overfitting. On a similar topic, I recommend reading one of the previous posts on assessing overfitting and underfitting, titled Learning curves explained with Python Sklearn example. The following gets covered in this post: Why validation curves? Python Sklearn example for validation curves Why Validation Curves? Like the learning curve, the validation curve also helps in diagnosing model bias vs variance. The validation curve plot helps in selecting the most appropriate model parameters (hyper-parameters). Unlike learning …

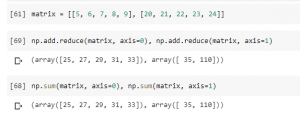

Python – 5 Sets of Useful Numpy Unary Functions

In this post, you will learn about 5 of the most popular or useful sets of unary universal functions (ufuncs) provided by the Python Numpy library. As data scientists, it will be useful to learn these unary functions by heart, as they help in performing arithmetic operations on sequence-like objects. These functions can also be termed vectorized wrapper functions, which are used to perform element-wise operations. The following represents the different sets of popular functions: Basic arithmetic operations Summary statistics Sorting Minimum / maximum Array equality Basic Arithmetic Operations The following are some of the unary functions which can be used to perform arithmetic operations: add, subtract, multiply, divide, …
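As a small illustration of element-wise ufuncs, here is a sketch assuming NumPy is installed; the array values are made up. Note that in NumPy's own terminology `np.sqrt` and `np.negative` take a single array, while `np.add` and `np.divide` take two.

```python
import numpy as np

a = np.array([1.0, 4.0, 9.0])
b = np.array([2.0, 2.0, 3.0])

# Ufuncs that take one array and operate on each element.
root = np.sqrt(a)
negated = np.negative(a)

# Ufuncs that take two arrays and combine them element by element.
total = np.add(a, b)
quotient = np.divide(a, b)
```

Each call returns a new array of the same shape as its inputs, with no explicit Python loop.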

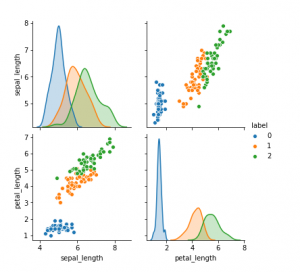

What, When & How of Scatterplot Matrix in Python

In this post, you will learn about the following in relation to the scatterplot matrix. Note that a scatter plot matrix can also be termed a pairplot. Later in this post, you will find a Python code example that uses a scatterplot matrix / pairplot (seaborn package). What is scatterplot matrix? When to use scatterplot matrix / pairplot? How to use scatterplot matrix in Python? What is Scatterplot Matrix? A scatter plot matrix is a matrix (or grid) of scatter plots where each scatter plot in the grid is created between different combinations of variables. In other words, a scatter plot matrix represents the bi-variate or pairwise relationship between different combinations of variables …

When to use LabelEncoder – Python Example

In this post, you will learn when to use LabelEncoder. As a data scientist, you must have a clear understanding of when to use LabelEncoder and when to use other encoders such as One-hot Encoder. Using the appropriate type of encoder is a key part of data preprocessing in the machine learning model building lifecycle. Here are some of the scenarios in which you could use LabelEncoder without impacting the model. Use LabelEncoder when there are only two possible values of a categorical feature. For example, features having values such as yes or no. Or, maybe, a gender feature when there are only two possible values, male or female. Use LabelEncoder for …

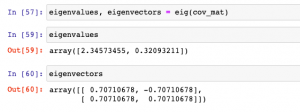

Eigenvalues & Eigenvectors with Python Examples

In this post, you will learn how to calculate Eigenvalues and Eigenvectors using Python code examples. Before going ahead with the code examples, you may want to check out this post on when & why to use Eigenvalues and Eigenvectors. As a machine learning Engineer / Data Scientist, you must get a good understanding of Eigenvalues / Eigenvectors concepts, as they prove to be very useful in feature extraction techniques such as principal component analysis. The Python Numpy package is used for illustration purposes. The following topics are covered in this post: Creating Eigenvectors / Eigenvalues using Numpy Linalg module Re-creating original transformation matrix from eigenvalues & eigenvectors Creating Eigenvectors / Eigenvalues using Numpy In …
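The two topics listed above can be sketched as follows, assuming NumPy is installed. The matrix `A` is a made-up example, and the reconstruction relies on `A` being diagonalizable so that the eigenvector matrix can be inverted.

```python
import numpy as np

# Hypothetical 2x2 transformation matrix (trace 7, determinant 10).
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# eig returns the eigenvalues and a matrix whose columns are the eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)

# Each column v with eigenvalue w satisfies A @ v == w * v (up to rounding).
first_check = np.allclose(A @ eigenvectors[:, 0], eigenvalues[0] * eigenvectors[:, 0])

# Re-create A from its eigendecomposition: A = V diag(w) V^-1.
reconstructed = eigenvectors @ np.diag(eigenvalues) @ np.linalg.inv(eigenvectors)
```

Comparing `reconstructed` with `A` using `np.allclose` rather than `==` avoids false mismatches from floating-point rounding.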

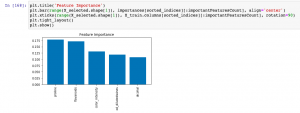

Sklearn SelectFromModel for Feature Importance

In this post, you will learn how to use the Sklearn SelectFromModel class to reduce the training / test data set to a new dataset consisting only of features whose feature importance value is greater than a specified threshold. This method is very important when one is using a Sklearn pipeline for creating different stages and a Sklearn RandomForest implementation (such as RandomForestClassifier) for feature selection. You may refer to this post to check out how RandomForestClassifier can be used for feature importance. The SelectFromModel usage is illustrated using a Python code example. SelectFromModel Python Code Example Here are the steps and related Python code for using SelectFromModel. Determine the feature importance using …
