In this post, you will learn about one of the machine learning model tuning techniques, called randomized search, which is used to find the optimal combination of hyperparameters for building the best model. The randomized search concept is illustrated with a Python Sklearn code example. As a data scientist, you should learn model tuning techniques like this one to build optimal models. You may also want to check other posts on tuning model parameters, such as the following:

- Sklearn validation_curve for tuning model hyper parameters

- Sklearn GridSearchCV for tuning model hyper parameters

In this post, the following topics will be covered:

- What and why of Randomized Search?

- Randomized Search explained with Python Sklearn example

What & Why of Randomized Search

Randomized search is another technique for sampling different combinations of hyperparameters in order to find the set that gives the model the best performance/score. Like grid search, randomized search is one of the most widely used strategies for hyperparameter optimization. Unlike grid search, which evaluates every combination in the grid, randomized search evaluates only a fixed number of sampled combinations. It is therefore usually much faster, making model training both cost-effective (less computationally intensive) and time-effective (less computation time).
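To make the cost difference concrete, the sketch below counts how many model fits each strategy needs under 10-fold cross-validation. The grid values and the budget of `n_iter=10` are illustrative assumptions, not recommendations. `ParameterGrid` and `ParameterSampler` are the scikit-learn helpers that enumerate and sample candidate settings, respectively.

```python
from scipy.stats import expon
from sklearn.model_selection import ParameterGrid, ParameterSampler

# Hypothetical grid: 5 candidate values each for C and gamma (5 x 5 = 25 settings)
param_grid = {
    "C": [0.1, 1, 10, 100, 1000],
    "gamma": [0.0001, 0.001, 0.01, 0.1, 1],
}
grid_candidates = list(ParameterGrid(param_grid))

# Randomized search instead samples a fixed budget (n_iter) of settings
# from continuous distributions
param_distributions = {"C": expon(scale=100), "gamma": expon(scale=0.1)}
random_candidates = list(
    ParameterSampler(param_distributions, n_iter=10, random_state=1)
)

cv_folds = 10
# Number of cross-validation fits, excluding the final refit
print("Grid search fits:", len(grid_candidates) * cv_folds)  # 250
print("Randomized search fits:", len(random_candidates) * cv_folds)  # 100
```

The grid's cost grows multiplicatively with every hyperparameter added, while the randomized budget stays at `n_iter` × folds no matter how many parameters are searched.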

Bergstra and Bengio (2012) found that randomized search is more efficient for hyperparameter optimization than grid search: grid search experiments allocate too many trials to exploring dimensions that do not matter and give poor coverage of the dimensions that are important. Read their paper, Random Search for Hyper-Parameter Optimization, for more details.
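The coverage argument can be illustrated with a toy experiment: give both strategies the same budget of 9 trials over two hyperparameters, and suppose only the first one affects the score. This is a simplified sketch with made-up unit ranges, not a reproduction of the paper's experiments.

```python
import itertools

import numpy as np

n_trials = 9
rng = np.random.default_rng(0)

# Grid search: a 3 x 3 grid over two hyperparameters, each in [0, 1]
axis_values = np.linspace(0.0, 1.0, 3)
grid_points = np.array(list(itertools.product(axis_values, axis_values)))

# Random search: the same budget of 9 points drawn uniformly from [0, 1]^2
random_points = rng.uniform(0.0, 1.0, size=(n_trials, 2))

# If only the first hyperparameter matters, count how many distinct
# values of it each strategy actually tries
grid_distinct = len(np.unique(grid_points[:, 0]))
random_distinct = len(np.unique(random_points[:, 0]))
print("Grid search tries", grid_distinct, "values of the important parameter")
print("Random search tries", random_distinct, "values of the important parameter")
```

With the same 9 trials, the grid repeats only 3 distinct values of the important parameter, while random sampling tries 9 distinct values.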

In this post, randomized search is illustrated using the RandomizedSearchCV class from sklearn.model_selection together with the SVC class from the sklearn.svm package.

Randomized Search explained with Python Sklearn example

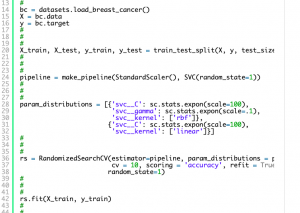

In this section, you will learn how to use the RandomizedSearchCV class for fitting and scoring the model. Pay attention to the following:

- A Pipeline estimator is used with two steps: StandardScaler and the SVC algorithm.

- The Sklearn breast cancer dataset is used for training the model.

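- For each continuous parameter, a distribution over possible values is specified using the scipy.stats module. In the example below, an exponential distribution is used to sample values for the inverse regularization parameter C and the kernel coefficient gamma. Parameters given as lists, such as kernel, are sampled uniformly from the list. Because param_distributions is a list of two dictionaries, each iteration first picks one dictionary at random, so gamma is only tuned for the RBF kernel.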

- The cross-validation strategy is passed to RandomizedSearchCV through cv = 10. Because the estimator is a classifier, an integer cv means StratifiedKFold with 10 folds is used by default.

- Another parameter, refit = True, refits the best estimator on the whole training set automatically (True is also the default).

- The scoring parameter is set to 'accuracy' to calculate the accuracy score.

- The fit method is invoked on the RandomizedSearchCV instance with the training data (X_train) and the related labels (y_train). Since n_iter is not set, the default of 10 sampled parameter settings is evaluated, i.e. 10 × 10 = 100 cross-validation fits plus one final refit.

- Once the RandomizedSearchCV estimator is fit, the following attributes give vital information:

  - best_score_: the mean cross-validated score of the best parameter setting found

  - best_params_: the hyperparameter setting that gave the best cross-validated score

  - best_estimator_: the model refit on the whole training set using best_params_ (available because refit = True)
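To see what the search actually tries, the sketch below draws a few candidate settings from the same param_distributions using scikit-learn's `ParameterSampler`, the helper RandomizedSearchCV uses to generate its candidates. The choice of `n_iter=5` is arbitrary and only for illustration.

```python
from scipy import stats
from sklearn.model_selection import ParameterSampler

# Same distributions as in the example in this post
param_distributions = [{'svc__C': stats.expon(scale=100),
                        'svc__gamma': stats.expon(scale=.1),
                        'svc__kernel': ['rbf']},
                       {'svc__C': stats.expon(scale=100),
                        'svc__kernel': ['linear']}]

# Each draw first picks one of the two dicts, then samples every parameter in it
samples = list(ParameterSampler(param_distributions, n_iter=5, random_state=1))
for params in samples:
    print(params)

# expon(scale=100) has mean 100, so sampled C values are typically of that order
print(stats.expon(scale=100).mean())  # 100.0
```

Settings drawn from the linear-kernel dictionary contain no `svc__gamma` key, which is why splitting the distributions into two dictionaries avoids tuning a parameter the linear kernel ignores.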

```python
from scipy import stats
from sklearn import datasets
from sklearn.model_selection import RandomizedSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Load the Sklearn breast cancer dataset
bc = datasets.load_breast_cancer()
X = bc.data
y = bc.target

# Create training and test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1, stratify=y)

# Create the pipeline estimator
pipeline = make_pipeline(StandardScaler(), SVC(random_state=1))

# Create parameter distributions using the scipy.stats module
param_distributions = [{'svc__C': stats.expon(scale=100),
                        'svc__gamma': stats.expon(scale=.1),
                        'svc__kernel': ['rbf']},
                       {'svc__C': stats.expon(scale=100),
                        'svc__kernel': ['linear']}]

# Create an instance of RandomizedSearchCV
rs = RandomizedSearchCV(estimator=pipeline, param_distributions=param_distributions,
                        cv=10, scoring='accuracy', refit=True, n_jobs=1,
                        random_state=1)

# Fit the RandomizedSearchCV estimator
rs.fit(X_train, y_train)

# Score the refit best estimator on the held-out test set
print('Test Accuracy: %0.3f' % rs.score(X_test, y_test))
```
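To confirm which splitter cv = 10 resolves to, scikit-learn's `check_cv` helper can be called directly; RandomizedSearchCV performs the same resolution internally for classifiers. A minimal check, assuming the same breast cancer labels:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import StratifiedKFold, check_cv

y = load_breast_cancer().target

# An integer cv with classifier=True and binary labels resolves to StratifiedKFold
splitter = check_cv(10, y, classifier=True)
print(type(splitter).__name__, splitter.get_n_splits())  # StratifiedKFold 10
```

Stratification keeps the class ratio of the breast cancer labels roughly the same in every fold, which matters for accuracy-based scoring.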

You can print the best parameters and the best cross-validated score as follows:

```python
# Print the best parameters
print(rs.best_params_)

# Print the best (mean cross-validated) score
print(rs.best_score_)
```
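Beyond best_params_ and best_score_, the cv_results_ attribute records every sampled candidate, which makes it easy to see how close the runner-up settings were. The sketch below repeats the search with cv=5 (an assumption, only to keep it fast) and loads cv_results_ into a pandas DataFrame.

```python
import pandas as pd
from scipy import stats
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import RandomizedSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Same data split, pipeline and distributions as in the example above
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1, stratify=y)
pipeline = make_pipeline(StandardScaler(), SVC(random_state=1))
param_distributions = [{'svc__C': stats.expon(scale=100),
                        'svc__gamma': stats.expon(scale=.1),
                        'svc__kernel': ['rbf']},
                       {'svc__C': stats.expon(scale=100),
                        'svc__kernel': ['linear']}]

# cv=5 is used here (instead of 10) only to keep the sketch fast
rs = RandomizedSearchCV(pipeline, param_distributions, n_iter=10, cv=5,
                        scoring='accuracy', random_state=1)
rs.fit(X_train, y_train)

# One row per sampled candidate; linear-kernel rows have no gamma value
results = pd.DataFrame(rs.cv_results_)
param_cols = [c for c in results.columns if c.startswith('param_')]
summary = results[param_cols + ['mean_test_score', 'std_test_score', 'rank_test_score']]
print(summary.sort_values('rank_test_score').to_string(index=False))
```

The row with rank_test_score equal to 1 corresponds to best_params_, and its mean_test_score equals best_score_.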

Conclusions

Here are some key takeaways from this post on randomized search:

- Randomized search is used to find the optimal combination of hyperparameters for creating the best model.

- Randomized search is a model tuning technique. Other techniques include grid search.

- Sklearn RandomizedSearchCV can be used to perform a random search over hyperparameters.

- Random search has been found to find models as good as or better than those found by grid search, in a cost-effective (less computationally intensive) and time-effective (less computation time) manner.
