Tag Archives: machine learning

Underwriting & Machine Learning Models Examples

Are you curious about how AI / machine learning is revolutionizing the underwriting process? Have you ever wondered how machine learning models are reshaping risk assessment and decision-making in industries like insurance, lending, and securities? Underwriting has long been a critical process for assessing risks and making informed decisions, but with the advent of machine learning, the possibilities have expanded exponentially. By harnessing the immense capabilities of machine learning algorithms and the abundance of data available, organizations can extract actionable insights, achieve higher accuracy, and streamline their underwriting practices like never before. In this blog, we will learn about how machine learning models can be used effectively for underwriting processes, …

Loan Eligibility / Approval & Machine Learning: Examples

It is no secret that the loan industry is a multi-billion dollar industry. Lenders make money by charging interest on loans, and borrowers want to get the best loan terms possible. In order to qualify for a loan, borrowers are typically required to provide information about their income, assets, and credit score. This process can be time-consuming and frustrating for both lenders and borrowers. In this blog post, we will discuss how AI / machine learning can be used to predict loan eligibility. As data scientists, it is important to understand some of the challenges related to loan eligibility and how machine learning models can be built …

Credit Risk Modeling & Machine Learning Use Cases

Have you ever wondered how banks and financial institutions decide who to lend money to, or how much to lend? The secret lies in credit risk modeling, a sophisticated approach that evaluates the likelihood of a borrower defaulting on their loan. Through in-depth analysis of historical data and borrowers’ credit behavior, these models play a pivotal role in guiding lending decisions, managing risks, and ultimately, driving profitability. In the face of growing financial complexities, traditional methods are often insufficient. That’s where machine learning comes into play, helping lenders better anticipate credit risk. By automating the identification of patterns within data, patterns that often go unnoticed by human analysis, machine learning …

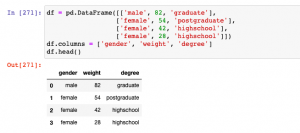

One-hot Encoding Concepts & Python Examples

Have you ever encountered categorical variables in your data analysis or machine learning projects? These variables represent discrete qualities or characteristics, such as colors, genders, or types of products. While numerical variables can be directly used as inputs for machine learning algorithms, categorical variables must first be transformed into a numerical representation. One common technique for this conversion is called one-hot encoding, often also referred to as dummy encoding. This is where one-hot encoding comes to the rescue. In this post, you will learn about One-hot Encoding concepts and …
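To make the idea concrete, here is a minimal sketch of one-hot encoding in plain Python. The helper name `one_hot_encode` and the sample `colors` list are assumptions made for illustration; they do not come from the original post, which may well use a library such as pandas or scikit-learn instead.

```python
def one_hot_encode(values):
    """Return (categories, rows); each row is a list of 0/1 indicators."""
    # Sort the distinct categories so the column order is deterministic.
    categories = sorted(set(values))
    column_of = {category: i for i, category in enumerate(categories)}

    rows = []
    for value in values:
        row = [0] * len(categories)   # one column per category
        row[column_of[value]] = 1     # mark the column matching this value
        rows.append(row)
    return categories, rows


# Hypothetical sample data, for illustration only.
colors = ["red", "green", "blue", "green"]
categories, encoded = one_hot_encode(colors)

print(categories)
for color, row in zip(colors, encoded):
    print(color, row)
```

Each row contains exactly one 1, in the column of the category it represents, so the encoding does not impose any artificial ordering on the categories.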

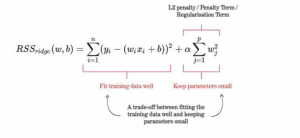

Ridge Regression Concepts & Python example

Ridge regression is a type of linear regression that penalizes large coefficients. This technique can be used to reduce the effects of multicollinearity in linear regression, which results from high correlations among the predictors (independent variables). In this tutorial, we will explain ridge regression with a Python example. What is Ridge Regression? Ridge regression is a powerful technique in machine learning that addresses the issue of overfitting in linear models. In linear regression, we aim to model the relationship between a response variable and one or more predictor variables. However, when there are multiple variables that are highly correlated, the model can become too complex and …
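The penalty on coefficients can be illustrated with a small sketch. To stay dependency-free, it assumes the simplest possible case, a single predictor with an unpenalized intercept, where the ridge slope has the closed form `Sxy / (Sxx + alpha)`. The function name `ridge_fit_1d`, the data, and the `alpha` values are illustrative assumptions; this shows only the shrinkage effect, not multicollinearity, which needs at least two predictors.

```python
def ridge_fit_1d(x, y, alpha):
    """Fit y ~ intercept + slope * x, penalizing alpha * slope**2."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n

    # Centered sums of products and squares.
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)

    # alpha = 0 gives ordinary least squares; larger alpha shrinks the slope.
    slope = sxy / (sxx + alpha)
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Hypothetical data lying exactly on the line y = 2x.
x = [1, 2, 3, 4, 5]
y = [2, 4, 6, 8, 10]

ols_intercept, ols_slope = ridge_fit_1d(x, y, alpha=0.0)
ridge_intercept, ridge_slope = ridge_fit_1d(x, y, alpha=10.0)

print("alpha=0: ", ols_intercept, ols_slope)
print("alpha=10:", ridge_intercept, ridge_slope)
```

With `alpha=0` the fit recovers the ordinary least-squares slope; increasing `alpha` pulls the slope toward zero, which is the trade of a little bias for lower variance that ridge regression makes.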

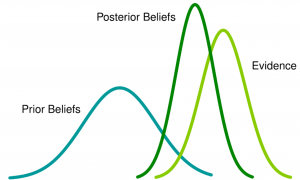

Bayesian Machine Learning Applications Examples

Have you ever wondered how machines can make decisions with uncertainty? What if there was an approach in machine learning that not only learned from data but also quantified and managed uncertainty in a principled way? Enter the realm of Bayesian machine learning. Bayesian machine learning is one of the most powerful modeling techniques in predictive analytics. It marries probabilistic reasoning with machine learning algorithms. Bayes’ theorem, which was first introduced by Reverend Thomas Bayes in 1763, provides a way to infer probabilities from observations. Bayesian machine learning has become increasingly popular because it can be used for real-world applications such as spam filtering (NLP), credit card fraud detection, …
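As a rough illustration of how Bayes’ theorem infers a probability from an observation, the sketch below updates the probability that an email is spam after seeing a particular word. The function name `posterior` and all of the probabilities are made-up assumptions for the example, not figures from the post.

```python
def posterior(prior, likelihood, likelihood_if_not):
    """Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E)."""
    # Total probability of the evidence under both hypotheses.
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers, for illustration only.
p_spam = 0.2             # prior: 20% of all email is spam
p_word_given_spam = 0.6  # the word appears in 60% of spam
p_word_given_ham = 0.05  # the word appears in 5% of legitimate email

p_spam_given_word = posterior(p_spam, p_word_given_spam, p_word_given_ham)
print(p_spam_given_word)
```

Under these assumed numbers, observing the word raises the spam probability well above the 20% prior, which is the kind of principled belief update that Bayesian methods build on.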

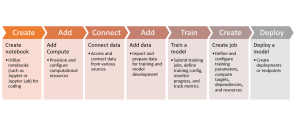

Azure Machine Learning Studio: Getting Started

Azure Machine Learning Studio is a powerful cloud-based platform that brings the world of machine learning to your fingertips. Whether you’re a data scientist, a developer, or a business professional, Azure Machine Learning Studio provides a user-friendly and collaborative environment to build, train, and deploy machine learning models with ease. This blog post serves as a quick tutorial to help you get started with Azure Machine Learning Studio. From setting up your workspace to exploring key features and best practices, we will walk you through the essential steps to embark on your machine learning journey. Azure ML Studio – Machine Learning Pipeline Before we can proceed with the tasks in …

Machine Learning NPTEL Online Courses List 2023

Machine learning is a rapidly evolving field that has gained immense popularity in recent years. As technology continues to advance, the demand for professionals with expertise in machine learning continues to soar. If you’re someone who is interested in diving deep into the world of machine learning or looking to enhance your existing knowledge, the NPTEL courses are an excellent avenue to explore. The National Programme on Technology Enhanced Learning (NPTEL) is a joint initiative by the Indian Institutes of Technology (IITs) and the Indian Institute of Science (IISc). It offers a wide range of online courses across various disciplines, including computer science and engineering. In this blog, we will …

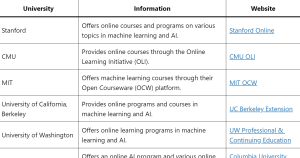

Online US Degree Courses & Programs in AI / Machine Learning

Data Science & AI / Machine learning has emerged as a transformative field, revolutionizing industries and shaping the future of technology. As the demand for professionals skilled in machine learning continues to rise, top universities in the United States (USA) have recognized the need to offer online degree courses and programs in this dynamic field. Through these online offerings, students can now access world-class education and earn prestigious degrees from the comfort of their own homes, while benefiting from the expertise of renowned faculty members. In this blog post, we present a curated list of leading US universities that provide online degree courses and programs in machine learning. Whether you …

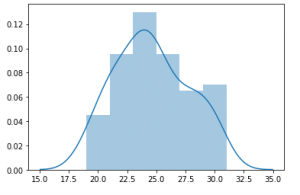

Binomial Distribution Explained with Examples

Have you ever wondered how to predict the number of successes in a series of independent trials? Or perhaps you’ve been curious about the probability of achieving a specific outcome in a sequence of yes-or-no questions. If so, we are essentially talking about the binomial distribution. It’s important for data scientists to understand this concept as binomials are used often in business applications. The binomial distribution is a discrete probability distribution that applies to binomial experiments (experiments with binary outcomes). It describes the number of successes in a fixed number of independent trials. Citing a simple yet real-life example, the binomial distribution may be imagined as the probability distribution of a number …
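The probability of exactly k successes in n independent trials, each with success probability p, is C(n, k) · p^k · (1 − p)^(n − k). A minimal sketch using only the standard library follows; the function name `binomial_pmf` and the coin-flip numbers are illustrative assumptions.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Hypothetical example: 10 fair coin flips.
n, p = 10, 0.5
p_five_heads = binomial_pmf(5, n, p)
total = sum(binomial_pmf(k, n, p) for k in range(n + 1))

print(p_five_heads)  # probability of exactly 5 heads
print(total)         # the probabilities over k = 0..n sum to 1
```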

Model Cards Example Machine Learning

Have you ever wondered how to make your machine learning models more transparent, understandable, and accountable? Are you looking to implement responsible AI practices, including ways to review and improve your existing model documentation? If so, you will learn about the concept of model cards, a powerful tool for documenting important details about machine learning models. You will learn the concepts with concrete examples and best practices that can serve as a guide for implementing or improving model cards in your organization. The model card example can be seen as a standard template for model cards, used at companies such as Google. What are …

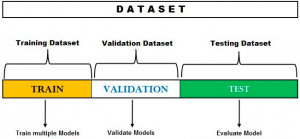

Hold-out Method for Training Machine Learning Models

The hold-out method for training machine learning models is a technique that involves splitting the data into different sets: one set for training, and other sets for validation and testing. The hold-out method is used to check how well a machine learning model will perform on new, unseen data. In this post, you will learn about the hold-out method used during the process of training a machine learning model. Do check out my post on what is machine learning? concepts & examples for a detailed understanding of different aspects related to the basics of machine learning. Also, check out a related post on what is data science? When evaluating …
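The split described above can be sketched in a few lines of plain Python. The function name `hold_out_split`, the 60/20/20 proportions, and the fixed seed are assumptions chosen for illustration; the original post may use a library helper such as scikit-learn’s `train_test_split` instead.

```python
import random


def hold_out_split(data, train_frac=0.6, val_frac=0.2, seed=42):
    """Shuffle the data and split it into train, validation, and test sets."""
    items = list(data)
    # A seeded generator keeps the shuffle reproducible.
    random.Random(seed).shuffle(items)

    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)

    train = items[:n_train]
    validation = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever remains is held out for testing
    return train, validation, test


# Hypothetical dataset of 10 record ids.
train, validation, test = hold_out_split(range(10))
print(len(train), len(validation), len(test))
```

The three sets are disjoint and together cover every record, so the test set is never seen while the model is trained or tuned.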

Google Unveils Next-Gen LLM, PaLM-2

Google’s breakthrough research in machine learning and responsible AI has culminated in the development of their next-generation large language model (LLM), PaLM 2. This model represents a significant evolution in natural language processing (NLP) technology, with the capability to perform a broad array of advanced reasoning tasks, including code and math, text classification and question answering, language translation, and natural language generation. The unique combination of compute-optimal scaling, an improved dataset mixture, and model architecture enhancements is what powers PaLM 2’s exceptional capabilities. This combination allows the model to achieve better performance than its predecessors, including the original PaLM, across all tasks. PaLM 2 was built with Google’s commitment to …

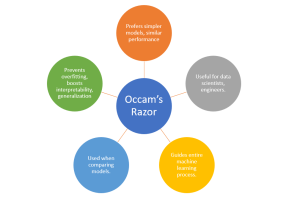

Occam’s Razor in Machine Learning: Examples

“Everything should be made as simple as possible, but not simpler.” – Albert Einstein Consider this: According to a recent study by IDC, data scientists spend approximately 80% of their time cleaning and preparing data for analysis, leaving only 20% of their time for the actual tasks of analysis, modeling, and interpretation. Does this sound familiar to you? Are you frustrated by the amount of time you spend on complex data wrangling and model tuning, only to find that your machine learning model doesn’t generalize well to new data? As data scientists, we often find ourselves in a predicament. We strive for the highest accuracy and predictive power in our …

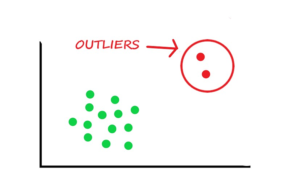

Outlier Detection Techniques in Python: Examples

In the realm of data science, mastering outlier detection techniques is paramount for ensuring data integrity and robust machine learning model performance. Outliers are data points that deviate significantly from the norm. They can greatly impact the accuracy and reliability of statistical analyses and machine learning models. In this blog, we will explore a variety of outlier detection techniques using Python. The methods covered will include statistical approaches like the z-score method and the interquartile range (IQR) method, as well as visualization techniques like box plots and scatter plots. Whether you are a data science enthusiast or a seasoned professional, it is important to grasp these …
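The two statistical approaches mentioned above can be sketched with the standard library alone. The function names, the sample data, and the thresholds (|z| > 3 and 1.5 × IQR, both common conventions) are assumptions for illustration rather than the post’s own code.

```python
import statistics


def zscore_outliers(data, threshold=3.0):
    """Return values whose absolute z-score exceeds the threshold."""
    mean = statistics.mean(data)
    std = statistics.pstdev(data)  # population standard deviation
    return [x for x in data if abs((x - mean) / std) > threshold]


def iqr_outliers(data, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(data, n=4)  # quartiles
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < lower or x > upper]


# Hypothetical measurements with one obviously extreme value.
data = [10, 12, 11, 13, 12, 11, 10, 12, 11, 13, 12, 10, 11, 12, 95]

print(zscore_outliers(data))
print(iqr_outliers(data))
```

Note that on very small samples the z-score method can fail to flag even an extreme point, because the outlier itself inflates the standard deviation; the IQR method is less sensitive to this.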

Lime Machine Learning Python Example

Today, when core businesses have started relying on machine learning (ML) model predictions, interpreting complex models has become a necessary requirement of AI governance (responsible AI). Data scientists are often asked to explain the inner workings of a machine learning model so that others can understand how its decisions are made. The Problem? Many of these models stand out as “black boxes”, delivering predictions without any comprehensible reasoning. This lack of transparency (especially in healthcare & finance use cases) can lead to mistrust in model predictions and inhibit the practical application of machine learning in fields that require a high degree of interpretability. It could lead to erroneous decision-making, or worse, legal and …
