Have you ever encountered categorical variables in your data analysis or machine learning projects? These variables represent discrete qualities or characteristics, such as colors, genders, or types of products. Numerical variables can be used directly as inputs to machine learning algorithms, but categorical variables require a different approach: they must first be transformed into a numerical representation. This is where one-hot encoding, also known as dummy encoding, comes to the rescue.

In this post, you will learn about one-hot encoding concepts, with code examples in the Python programming language. The code examples use the OneHotEncoder class of sklearn.preprocessing. As a data scientist or machine learning engineer, you should learn one-hot encoding techniques, as they come in very handy when training machine learning models.

What is One-Hot Encoding?

One-hot encoding is a process whereby categorical variables are converted into a form that can be provided as input to machine learning models. It is an essential preprocessing step for many machine learning tasks, and its goal is to transform data from a categorical representation to a numeric one. The categories are first encoded as ordinal integers. Each integer is then represented as a binary vector that is all zeros except at the index of that integer, which is marked with a 1. In other words, a categorical feature with K distinct string labels is converted into K numerical features, such that exactly one of the K features (one-of-K) has the value 1 and the remaining K-1 features have the value 0. The technique is also called dummy encoding because the features it creates are dummy features. They don't represent any real-world features and exist only to encode the different values of the categorical feature numerically.
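The two steps above (ordinal encoding, then a binary vector per integer) can be sketched in plain Python. The function name `one_hot_encode` is hypothetical. Ordering the categories alphabetically is an assumption, although scikit-learn's OneHotEncoder also sorts categories by default.

```python
def one_hot_encode(values):
    """Encode a list of category labels as one-hot (one-of-K) vectors.

    Step 1: map each distinct label to an integer index (ordinal encoding).
    Step 2: turn each index into a binary vector of length K that is all
    zeros except for a 1 at that index.
    """
    # Sorting the distinct labels gives a deterministic category order.
    categories = sorted(set(values))
    index_of = {label: i for i, label in enumerate(categories)}
    k = len(categories)

    vectors = []
    for label in values:
        vector = [0] * k
        vector[index_of[label]] = 1
        vectors.append(vector)
    return categories, vectors


categories, vectors = one_hot_encode(["red", "green", "blue", "green"])
print(categories)  # ['blue', 'green', 'red']
for v in vectors:
    print(v)
# [0, 0, 1]
# [0, 1, 0]
# [1, 0, 0]
# [0, 1, 0]
```

Each vector sums to exactly 1, which is the one-of-K property described above.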

Suppose we have a dataset that includes information about various fruits, including their names, colors, and whether they are organic or not. Here’s a sample representation of the dataset:

| Fruit | Color | Organic |
|---|---|---|
| Apple | Red | Yes |
| Banana | Yellow | No |
| Orange | Orange | Yes |
| Grapes | Purple | Yes |
| Mango | Yellow | No |

To perform one-hot encoding on the “Fruit” column, we would create separate columns for each unique fruit type. Let’s assume the dataset contains five different fruit types: Apple, Banana, Orange, Grapes, and Mango. We will create new columns for each fruit, with a value of 1 indicating the presence of that fruit in a particular row and 0 otherwise.

The transformed dataset after one-hot encoding would look like this:

| Fruit | Color | Organic | Apple | Banana | Orange | Grapes | Mango |
|---|---|---|---|---|---|---|---|
| Apple | Red | Yes | 1 | 0 | 0 | 0 | 0 |
| Banana | Yellow | No | 0 | 1 | 0 | 0 | 0 |
| Orange | Orange | Yes | 0 | 0 | 1 | 0 | 0 |
| Grapes | Purple | Yes | 0 | 0 | 0 | 1 | 0 |
| Mango | Yellow | No | 0 | 0 | 0 | 0 | 1 |

As you can see, each fruit type now has its own column, and the corresponding values indicate whether that fruit is present in a particular row. This representation allows machine learning algorithms to effectively interpret and analyze categorical data.
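The transformed table can be reproduced with the Pandas get_dummies function, which is covered later in this post. This is a minimal sketch. Passing `dtype=int` for 0/1 values and reordering the new columns to match the table are choices made here, not part of the original example.

```python
import pandas as pd

# The sample fruit dataset from the table above.
fruits = pd.DataFrame({
    "Fruit": ["Apple", "Banana", "Orange", "Grapes", "Mango"],
    "Color": ["Red", "Yellow", "Orange", "Purple", "Yellow"],
    "Organic": ["Yes", "No", "Yes", "Yes", "No"],
})

# One dummy column per fruit; for a Series, the fruit names become the
# column names. dtype=int yields 0/1 values instead of True/False.
dummies = pd.get_dummies(fruits["Fruit"], dtype=int)

# Keep the original columns and order the new ones as in the table above
# (get_dummies itself sorts them alphabetically).
fruit_order = ["Apple", "Banana", "Orange", "Grapes", "Mango"]
encoded = pd.concat([fruits, dummies[fruit_order]], axis=1)
print(encoded)
```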

Advantages & Disadvantages of One-hot Encoding

One-hot encoding is a widely used technique in the realm of categorical variable preprocessing. While it offers several advantages, it also has a few limitations to consider. Let’s explore both the advantages and disadvantages of one-hot encoding.

The following are the advantages of one-hot encoding:

- Retains information: One-hot encoding preserves the categorical information in a structured and interpretable format. By creating separate binary columns for each category, it ensures that the original categories are retained in a numerical representation. This allows machine learning algorithms to utilize the encoded data effectively.

- Suitable for small cardinality: One-hot encoding works exceptionally well for categorical features with a small number of distinct categories. It creates a concise and easily interpretable matrix where each category is represented by a single binary column. For example, when representing the days of the week (7 categories), each day is encoded with a binary vector such as [1, 0, 0, 0, 0, 0, 0] for Sunday, making it convenient for machine learning algorithms to handle.

- Simplifies model interpretation: One-hot encoding provides clear and intuitive interpretations of the encoded features. The binary nature of the encoding allows for straightforward assessments of the presence or absence of specific categories in a given data instance. This simplifies model interpretation and makes it easier to identify the impact of different categories on the model’s predictions.
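The days-of-week example from the list above can be sketched with Pandas. The category order is fixed so that Sunday lands in the first position. Starting the week on Sunday is an assumption taken from the example vector. The use of `pd.Categorical` to pin the order is this sketch's choice.

```python
import pandas as pd

# Fix the category order so that Sunday maps to the first position.
days_order = ["Sunday", "Monday", "Tuesday", "Wednesday",
              "Thursday", "Friday", "Saturday"]
days = pd.Series(pd.Categorical(["Sunday", "Wednesday", "Saturday"],
                                categories=days_order))

# For a categorical Series, get_dummies creates one column per declared
# category (including days that never appear in the data), in that order.
week = pd.get_dummies(days, dtype=int)
print(week.columns.tolist())
print(week.iloc[0].tolist())  # Sunday -> [1, 0, 0, 0, 0, 0, 0]
```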

The following are some of the disadvantages of one-hot encoding:

- High cardinality challenges: One of the main challenges of one-hot encoding arises when dealing with categorical features that have a high cardinality, meaning a large number of distinct categories. For instance, when trying to encode millions of customer IDs, the resulting one-hot encoded matrix can become extremely large and sparse. This can lead to increased computational complexity and memory requirements, making it impractical for certain machine learning algorithms to handle.

- Ignores relationships between categories: Another limitation of one-hot encoding is that it treats categorical variables as independent entities. It fails to capture any inherent relationships or dependencies that might exist among the categories. For instance, if there is a specific hierarchical or ordinal relationship between the categories, one-hot encoding does not reflect this relationship in the encoded features.
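To make the high-cardinality problem concrete, the sketch below one-hot encodes a hypothetical column of 10,000 distinct customer IDs. The IDs are invented for illustration. OneHotEncoder returns a SciPy sparse matrix by default, which keeps memory manageable, but the number of columns still grows with the number of categories.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Hypothetical column of 10,000 distinct customer IDs, one per row.
customer_ids = np.array([f"cust_{i}" for i in range(10_000)]).reshape(-1, 1)

encoder = OneHotEncoder()  # returns a SciPy sparse matrix by default
encoded = encoder.fit_transform(customer_ids)

print(encoded.shape)  # (10000, 10000): one column per distinct ID
print(encoded.nnz)    # 10000: exactly one non-zero entry per row

# A dense copy would store 10,000 x 10,000 = 100 million values.
dense_cells = encoded.shape[0] * encoded.shape[1]
print(f"density: {encoded.nnz / dense_cells:.4%}")  # density: 0.0100%
```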

It’s important to consider these advantages and disadvantages while deciding whether to use one-hot encoding for a particular dataset and machine learning task. While it is a powerful technique for handling categorical variables, the limitations associated with high cardinality and the assumption of independence among categories should be taken into account. In some cases, alternative encoding methods like ordinal encoding or target encoding may be more suitable to address these limitations and capture relationships between categories.
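As an illustration of ordinal encoding, the sketch below uses scikit-learn's OrdinalEncoder on a hypothetical degree feature. The ordering highschool < graduate < postgraduate is an assumption made for the example. Passing it explicitly through the `categories` parameter preserves that order; the default would sort the labels alphabetically.

```python
from sklearn.preprocessing import OrdinalEncoder

# Hypothetical degree values that have a natural order.
degrees = [["graduate"], ["highschool"], ["postgraduate"], ["highschool"]]

# Explicit categories encode the assumed order:
# highschool -> 0, graduate -> 1, postgraduate -> 2.
encoder = OrdinalEncoder(categories=[["highschool", "graduate", "postgraduate"]])
codes = encoder.fit_transform(degrees)
print(codes.ravel())  # [1. 0. 2. 0.]
```

Unlike one-hot encoding, this produces a single column whose values reflect the ordering. Whether that ordering is meaningful depends on the data.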

One-hot Encoding Python Example

One-hot encoding can be performed using the Pandas library in Python. The Pandas library provides a function called get_dummies which can be used to one-hot encode data; it is discussed in detail later in this blog post. Another option is the OneHotEncoder class of the sklearn.preprocessing module. One-hot encoded data is often referred to as dummy data. Dummy data is easier for machine learning models to work with because it eliminates the need for special handling of categorical variables.

The primary reason for using one-hot encoding is to convert categorical features into numerical features that machine learning libraries can use to train a model. Although some machine learning libraries convert categorical features internally, it is recommended to convert them explicitly into numerical features (dummy features). Let's understand the concept using the example given below.

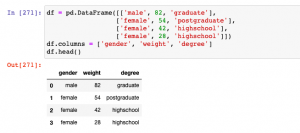

Here is a Pandas data frame which consists of three features: gender, weight, and degree. Note that two of the features, gender and degree, have non-numerical values. They are categorical features and need to be converted into numerical features.
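The data frame is only available as an image, so the sketch below builds a small hypothetical stand-in with the same three columns. The rows are invented for illustration. The degree values are taken from the dummy feature names described in this post. The OneHotEncoder and get_dummies code samples in this post assume a `df` shaped like this one.

```python
import pandas as pd

# Hypothetical stand-in for the data frame in the image;
# the actual rows in the original data frame may differ.
df = pd.DataFrame({
    "gender": ["male", "female", "female", "male", "female"],
    "weight": [72.5, 58.0, 61.3, 80.1, 55.4],
    "degree": ["graduate", "highschool", "postgraduate", "highschool", "graduate"],
})

# gender and degree hold strings (categorical); weight is numeric.
print(df.dtypes)
```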

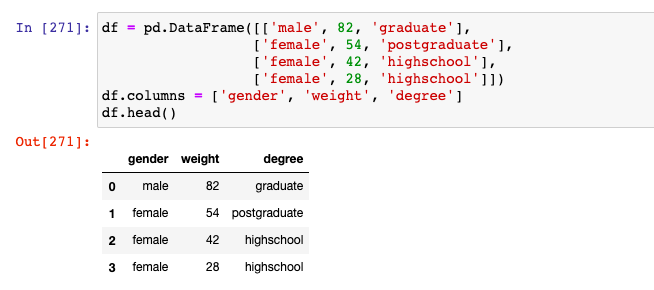

When the data frame is transformed using one-hot encoding, it looks like the one in the image above. Note that the categorical feature gender is transformed into two dummy features, gender_male and gender_female. In the same manner, the categorical feature degree is transformed into three dummy features: degree_graduate, degree_highschool, and degree_postgraduate. In every row, exactly one of the dummy features derived from a given categorical feature has the value 1, and the others have the value 0. For example, when degree_graduate is 1, the other two related features, degree_highschool and degree_postgraduate, are 0.

In the following sections, you will learn how to use the OneHotEncoder class of sklearn.preprocessing to one-hot encode categorical features.

OneHotEncoder for Single Categorical Feature

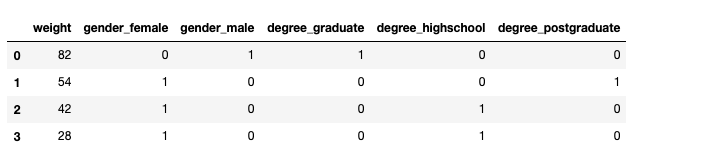

One-hot encoding for a single categorical feature can be achieved using OneHotEncoder. The following code example illustrates the transformation of a categorical feature, gender, that has two values. Note the following:

- When OneHotEncoder is instantiated with no arguments, the gender feature gets converted into two feature columns, one for each value.

- When OneHotEncoder is instantiated with drop='first', the dummy feature for the first category is dropped. No information is lost, because a row in which all remaining dummy features are 0 must belong to the dropped category. Dropping a column avoids multicollinearity, which can be an issue for certain methods (for instance, methods that require matrix inversion).
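The multicollinearity point can be checked with NumPy. The sketch uses a hypothetical four-row gender column and ordinary least squares as the example of a method that inverts X^T X. With an intercept column and both gender dummies, the dummies always sum to the intercept, so the matrix is rank-deficient. Dropping the first dummy restores full rank.

```python
import numpy as np

# Hypothetical design matrix with all dummies kept (no drop).
# Columns: [intercept, gender_female, gender_male].
X_full = np.array([
    [1, 0, 1],
    [1, 1, 0],
    [1, 1, 0],
    [1, 0, 1],
])

# gender_female + gender_male == intercept in every row, so the columns are
# linearly dependent and X^T X is singular (cannot be inverted).
print(np.linalg.matrix_rank(X_full))             # 2, not 3
print(np.linalg.matrix_rank(X_full.T @ X_full))  # 2, so the 3x3 X^T X is singular

# Dropping the first dummy (gender_female) removes the dependency.
X_drop = X_full[:, [0, 2]]
print(np.linalg.matrix_rank(X_drop.T @ X_drop))  # 2: full rank for 2 columns
print(np.linalg.inv(X_drop.T @ X_drop))          # now invertible
```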

Here is a code sample for OneHotEncoder. It assumes df is the data frame shown above:

from sklearn.preprocessing import OneHotEncoder

# Instantiate OneHotEncoder with default settings
ohe = OneHotEncoder()
# One-hot encode the gender column; df[['gender']] passes the 2-D input sklearn expects.
# fit_transform returns a sparse matrix, so toarray() converts it to a dense array.
ohe.fit_transform(df[['gender']]).toarray()

# OneHotEncoder with drop set to 'first'
ohe = OneHotEncoder(drop='first')
# One-hot encode the gender column; a single column remains after the drop
ohe.fit_transform(df[['gender']]).toarray()

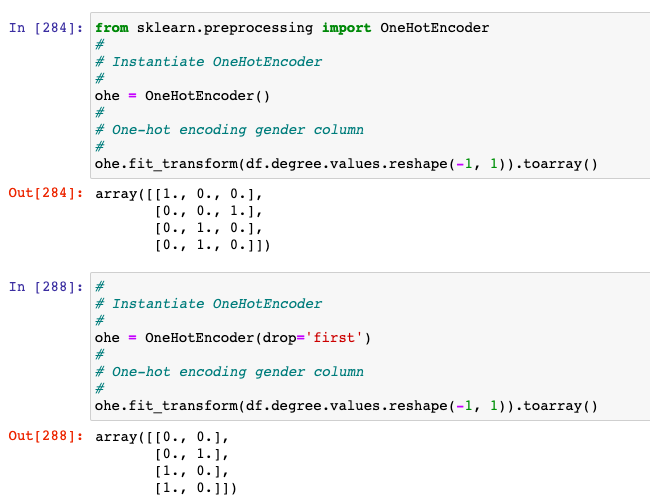

The screenshot above shows what the execution looks like in Jupyter Notebook.

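OneHotEncoder handles features with more than two values in the same way. For example, the degree feature has three values (graduate, highschool, and postgraduate). It is therefore encoded into three columns, or into two columns with drop='first'.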

ColumnTransformer & OneHotEncoder for Multiple Categorical Features

When there is a need for encoding multiple categorical features, OneHotEncoder can be used with ColumnTransformer. ColumnTransformer applies transformers to columns of an array or pandas DataFrame. The ColumnTransformer estimator allows different columns or column subsets of the input to be transformed separately and the features generated by each transformer will be concatenated to form a single feature space.

Here is a code sample showing how to use the ColumnTransformer class from the sklearn.compose module to transform one or more categorical features using OneHotEncoder:

from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

# One-hot encode gender and degree; pass the remaining columns (weight) through unchanged
ct = ColumnTransformer([('one-hot-encoder', OneHotEncoder(), ['gender', 'degree'])], remainder='passthrough')

# For OneHotEncoder with drop='first', the code would look like the following
ct2 = ColumnTransformer([('one-hot-encoder', OneHotEncoder(drop='first'), ['gender', 'degree'])], remainder='passthrough')

# Fit the transformer on df and return the transformed array
ct.fit_transform(df)

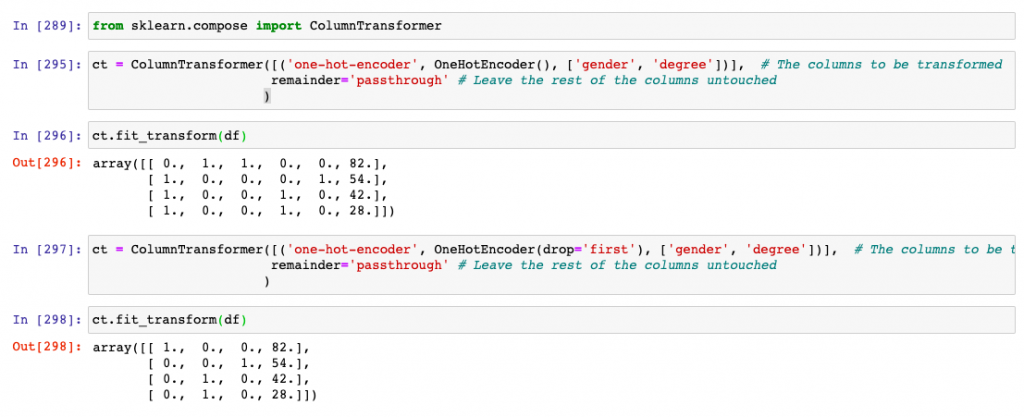

The screenshot above shows what the execution looks like in Jupyter Notebook.

Pandas get_dummies API for one-hot encoding

The Pandas get_dummies API can also be used to transform one or more categorical features into dummy numerical features. It is one of the most popular ways of doing one-hot encoding because the API is simple to use. Here is the code sample:

import pandas as pd

# Transform the gender and degree features using one-hot encoding
pd.get_dummies(df, columns=['gender', 'degree'])

# Transform the gender and degree features using one-hot encoding; drop the first dummy feature of each
pd.get_dummies(df, columns=['gender', 'degree'], drop_first=True)

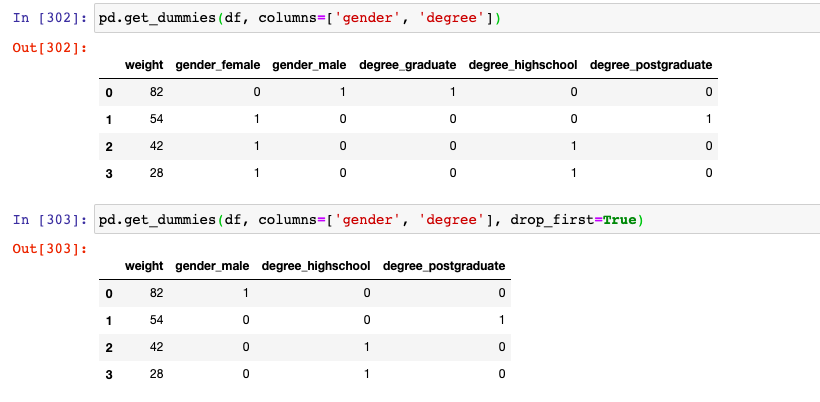

The screenshot above shows the code and its output.
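Here is a self-contained version of the get_dummies example, using a hypothetical data frame. Since pandas 2.0, get_dummies returns boolean columns by default, so this sketch passes `dtype=int` to get 0/1 values. The columns that are not encoded (weight) come first in the result.

```python
import pandas as pd

# Hypothetical data frame matching the features used in this post.
df = pd.DataFrame({
    "gender": ["male", "female", "female"],
    "weight": [72.5, 58.0, 61.3],
    "degree": ["graduate", "highschool", "postgraduate"],
})

# dtype=int asks for 0/1 columns instead of True/False.
dummies = pd.get_dummies(df, columns=["gender", "degree"], dtype=int)
print(dummies.columns.tolist())
# ['weight', 'gender_female', 'gender_male',
#  'degree_graduate', 'degree_highschool', 'degree_postgraduate']

# drop_first=True drops the first dummy of each feature
# (gender_female and degree_graduate).
dummies_drop = pd.get_dummies(df, columns=["gender", "degree"],
                              drop_first=True, dtype=int)
print(dummies_drop.columns.tolist())
# ['weight', 'gender_male', 'degree_highschool', 'degree_postgraduate']
```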

Conclusion

Here is a summary of this post:

- One-hot encoding can be used to transform one or more categorical features into numerical dummy features useful for training machine learning models.

- One-hot encoding is also called dummy encoding because the transformation of categorical features results in dummy features.

- The OneHotEncoder class of the sklearn.preprocessing module can be used for one-hot encoding.

- The ColumnTransformer class of sklearn.compose can be used to transform multiple categorical features.

- The Pandas get_dummies function can also be used for one-hot encoding.
