Tag Archives: nlp

Bag of Words in NLP & Machine Learning: Examples

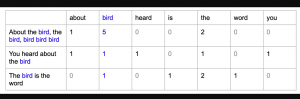

Last updated: 6th Jan, 2024. Most machine learning algorithms require numerical input for training the models. Bag of words (BoW) effectively converts text data into numerical feature vectors, making it compatible with a wide range of machine learning algorithms, from linear classifiers like logistic regression to complex ones like neural networks. In this post, you will learn about the concepts of the bag-of-words model and how to train a text classification model using Python Sklearn. Some of the most common text classification problems include sentiment analysis and spam filtering. In these problems, one can apply the bag-of-words technique to train machine learning models for text classification. It will be good to understand the …
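
To make the idea of turning text into count vectors concrete, here is a minimal, dependency-free sketch. It is not the Sklearn code from the post: the helper names `build_vocabulary` and `bag_of_words` and the sample documents are hypothetical, and tokenization is assumed to be a plain lowercase whitespace split.

```python
from collections import Counter


def build_vocabulary(documents):
    """Map each distinct lowercase token to a column index (alphabetical order)."""
    tokens = sorted({tok for doc in documents for tok in doc.lower().split()})
    return {tok: idx for idx, tok in enumerate(tokens)}


def bag_of_words(document, vocabulary):
    """Return one count per vocabulary token; tokens outside the vocabulary are ignored."""
    counts = Counter(document.lower().split())
    # dicts preserve insertion order, so columns follow the vocabulary order
    return [counts.get(tok, 0) for tok in vocabulary]


# Hypothetical toy corpus, for illustration only
documents = ["free prize inside", "meeting at noon", "free free prize"]
vocabulary = build_vocabulary(documents)
vectors = [bag_of_words(doc, vocabulary) for doc in documents]
```

Each row of `vectors` has one column per vocabulary word, which is the kind of numerical input a classifier such as logistic regression expects. Word order is discarded, which is the defining trade-off of the bag-of-words representation.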

Text Clustering Real-World Applications: Examples

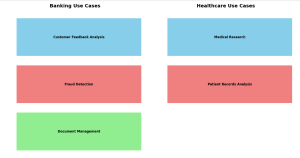

How often have you wondered about the vast amounts of unstructured data around us and its untapped potential? How can businesses sift through thousands of customer reviews, documents, or feedback to derive actionable insights? What if there was a way to automatically group similar pieces of text, helping organizations quickly identify patterns and trends? Enter text clustering. A subset of text analytics, text clustering is an unsupervised machine learning task that divides a set of texts into clusters or groups. This ensures that texts in the same group are more similar to each other than to those in other groups. A powerful tool for deciphering insights from unstructured data, text …
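
The grouping criterion described above (texts in a cluster are more similar to each other than to texts elsewhere) depends on a similarity measure. The sketch below shows one common choice, cosine similarity over word counts. It illustrates only the similarity step, not a full clustering algorithm, and the function name and sample reviews are hypothetical.

```python
import math
from collections import Counter


def cosine_similarity(text_a, text_b):
    """Cosine similarity between the word-count vectors of two texts."""
    a = Counter(text_a.lower().split())
    b = Counter(text_b.lower().split())
    dot = sum(a[tok] * b[tok] for tok in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is treated as similar to nothing
    return dot / (norm_a * norm_b)


# Hypothetical customer reviews, for illustration only
reviews = [
    "battery life is great",
    "great battery life overall",
    "delivery was late again",
]
same_topic = cosine_similarity(reviews[0], reviews[1])
different_topic = cosine_similarity(reviews[0], reviews[2])
```

A clustering algorithm would place the first two reviews together because `same_topic` is much larger than `different_topic`.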

Find Topics of Text Clustering: Python Examples

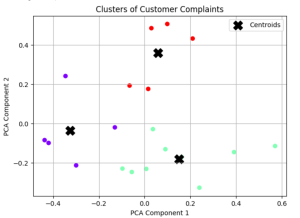

Have you ever clustered a collection of texts and wondered what predominant topics underlie each group? How can you pinpoint the essence of each cluster comprising a large volume of words? Is there a way to succinctly represent the core topic of each cluster using Python? Text clustering is a powerful technique in natural language processing (NLP) that groups documents into clusters based on their content. Once you’ve clustered your data, a natural follow-up question arises: “What are these clusters about?” In this article, we’ll discuss two different methods to find the dominant topics of text clusters using Python. Meanwhile, check out my post on text clustering – Text Clustering …
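
One simple way to answer “What are these clusters about?” is to list the most frequent non-stop-word terms in each cluster. The sketch below assumes cluster labels are already available; it is a generic illustration and not necessarily one of the two methods the article covers. The stop-word list, function name, and sample texts are all hypothetical.

```python
from collections import Counter

# Tiny hypothetical stop-word list; a real one would be much longer
STOP_WORDS = {"the", "is", "was", "a", "and", "my"}


def top_terms_per_cluster(texts, labels, top_n=2):
    """Return the top_n most frequent terms for each cluster label."""
    counts = {}
    for text, label in zip(texts, labels):
        tokens = [tok for tok in text.lower().split() if tok not in STOP_WORDS]
        counts.setdefault(label, Counter()).update(tokens)
    return {
        # sort by descending count, breaking ties alphabetically for determinism
        label: [term for term, _ in sorted(c.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]]
        for label, c in counts.items()
    }


# Hypothetical texts with cluster labels assumed to come from a prior clustering step
texts = [
    "battery drains fast",
    "battery life is short",
    "screen is bright",
    "screen cracked and bright",
]
labels = [0, 0, 1, 1]
topics = top_terms_per_cluster(texts, labels)
```

Here cluster 0 is summarized by "battery" and cluster 1 by "bright" and "screen", which hints at the theme of each group.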

OpenAI Python API Example for NLP Tasks

Ever wondered how you can leverage the power of OpenAI’s GPT-3 and GPT-3.5 (from Jan 2024 onwards) directly in your Python application? Are you curious about generating human-like text with just a few lines of code? This blog post will walk you through an example Python code snippet that utilizes OpenAI’s Python API for different NLP tasks such as text generation. Check out my other post on how to use Langchain framework for text generation using OpenAI GPT models. OpenAI Python APIs The OpenAI Python API is an interface that allows you to interact with OpenAI’s language models, including their GPT-3 model. The following are different popular models that you …

Architecting a Generative AI Platform for GPT-based LLM Apps

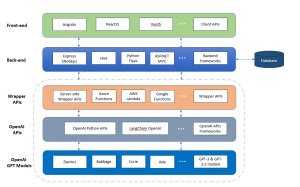

Have you ever wondered how to build a scalable Generative AI platform based on OpenAI GPT models that can serve different applications? Are you a data scientist, product manager, or software engineer looking to understand the intricacies of the architecture of such a scalable generative AI platform? This blog aims to demystify the architectural building blocks needed to create a robust GPT-based platform. By the end, you will have a clear roadmap for architecting, designing, and implementing your own platform for GPT-based large language model (LLM) applications. Generative AI Platform Architecture for GPT-based LLM Apps The following is the technology architecture of a generative AI platform which can leverage OpenAI GPT based …

Text Clustering Python Examples: Steps, Algorithms

Text clustering has swiftly emerged as a cornerstone in data-driven decision-making across industries. But what exactly is text clustering, and how can it transform the way businesses operate? How does it convert unstructured text into actionable insights? What are the core steps involved in text clustering, and how are they interlinked? What algorithms are pivotal in implementing text clustering effectively? In this blog, we will unravel these questions, diving deep into the systematic steps of text clustering, its underlying algorithms, and real-world examples that bring this technique to life. Whether you’re a product manager seeking to leverage data analytics or a data scientist curious to learn key steps of text …

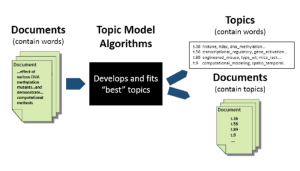

Topic Modeling LDA Python Example

Are you overwhelmed by the endless streams of text data and looking for a way to unearth the hidden themes that lie within? Have you ever wondered how platforms like Google News manage to group similar articles together, or how businesses extract insights from vast volumes of customer reviews? The answer to these questions might be simpler than you think, and it’s rooted in the world of Topic Modeling. Introducing Latent Dirichlet Allocation (LDA) – a powerful algorithm that offers a solution to the puzzle of understanding large text corpora. LDA is not just a buzzword in the data science community; it’s a mathematical tool that has found applications in …

Encoder Only Transformer Models Quiz / Q&A

Are you intrigued by the revolutionary world of transformer architectures? Have you ever wondered how encoder-only transformer models like BERT, ELECTRA, or DeBERTa have reshaped the landscape of Natural Language Processing (NLP)? The rapid advancement of machine learning has led to the creation of numerous transformer architectures, each with unique features, applications, and underlying mechanics. Whether you’re a data scientist, machine learning engineer, generative AI enthusiast, or a student eager to deepen your understanding, this quiz offers an engaging and informative way to assess your knowledge and sharpen your skills. It would also help you prepare for your interviews on this topic. Encoder-only transformer models have become a cornerstone in …

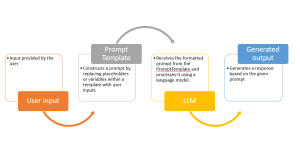

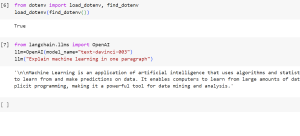

LLM Chain OpenAI Python Example

Have you ever wondered how to fully utilize large language models (LLMs) in our natural language processing (NLP) applications, like we do with ChatGPT? Would you not want to create an application such as ChatGPT, where you write a prompt and it returns output such as generated text or a summary? While learning to make a direct API call to an OpenAI LLM is a great start, we can build full-fledged applications serving our end users’ needs. And building prompts that adapt to user input dynamically is one of the most important aspects of an LLM app. That’s where LangChain, a powerful framework, comes in. In this blog, …

Langchain ChatGPT Hello World Python Example

Have you ever wondered how to build applications that not only utilize large language models (LLMs) but are also capable of interacting with their environment and connecting to other data sources? If so, then LangChain is the answer! In this blog, we will learn what LangChain is, what its key aspects are, and how it works. We will also quickly review the concepts of prompt, tokens and temperature when using the OpenAI API. We will then learn about creating a ‘Hello World’ Python program using LangChain and OpenAI’s Large Language Models (LLMs) such as GPT-3 models. What is LangChain Framework? LangChain is a dynamic framework specifically designed for the …

Huggingface Arxiv Dataset: Python Example

Working with large and specific datasets is a common requirement in the field of natural language processing (NLP) and machine learning. The Arxiv dataset, containing metadata such as titles, abstracts, years, and categories of research papers, is an invaluable resource for researchers and data scientists. How can we easily load this dataset and extract the required information? In this blog post, we will explore a Python example using the Hugging Face library to load the Arxiv dataset and extract specific metadata. Python Code for Loading Huggingface Arxiv Dataset The following are the steps to load the Hugging Face Arxiv dataset using Python code: Real-World Application Use Cases: Analyzing Research Papers Imagine …

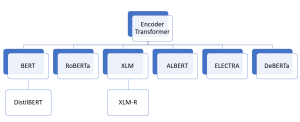

Encoder-only Transformer Models: Examples

How can machines accurately classify text into categories? What enables them to recognize specific entities like names, locations, or dates within a sea of words? How is it possible for a computer to comprehend and respond to complex human questions? These remarkable capabilities are now a reality, thanks to encoder-only transformer architectures like BERT. From text classification and Named Entity Recognition (NER) to question answering and more, these models have revolutionized the way we interact with and process language. In the realm of AI and machine learning, encoder-only transformer models like BERT, DistilBERT, RoBERTa, and others have emerged as game-changing innovations. These models not only facilitate a deeper understanding of …

LLMs & Semantic Search Course by Andrew Ng, Cohere & Partners

Andrew Ng, a renowned name in the world of deep learning and AI, has joined forces with Cohere, a pioneer in natural language processing technologies. Alongside him are Jay Alammar, a well-known educator and visualizer of machine learning concepts, and Serrano Academy, an esteemed institution dedicated to AI research and education. Together, they have launched an insightful course titled “Large Language Models with Semantic Search.” This collaboration represents a fusion of expertise aimed at addressing the growing needs of semantic search in various applications. In an era where keyword search has dominated the search landscape, the need for more sophisticated, content-aware search capabilities is becoming increasingly evident. Content-rich platforms like …

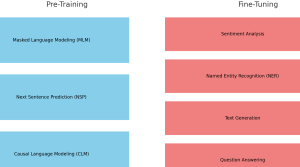

Pre-training vs Fine-tuning in LLM: Examples

Are you intrigued by the inner workings of large language models (LLMs) like BERT and GPT series models? Ever wondered how these models manage to understand human language with such precision? What are the critical stages that transform them from simple neural networks into powerful tools capable of text prediction, sentiment analysis, and more? The answer lies in two vital phases: pre-training and fine-tuning. These stages not only make language models adaptable to various tasks but also bring them closer to understanding language the way humans do. In this blog, we’ll dive into the fascinating journey of pre-training and fine-tuning in LLMs, complete with real-world examples. Whether you are a …

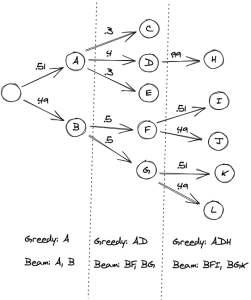

Greedy Search vs Beam Search Decoding: Concepts, Examples

Have you ever wondered how machine learning models transform their intricate calculations into clear, human-readable language? Or how your smartphone knows exactly what you’re going to type next before you even start typing? These everyday marvels are powered by a critical component of natural language processing (NLP) known as ‘decoding methods’. But how do these methods work, and why are there different types? In the vast field of machine learning, a primary challenge in natural language processing tasks is converting a model’s computational output into an understandable and coherent text. Whether it’s autocompleting your sentences, translating text from one language to another, or generating a news article, these tasks involve …
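
To illustrate the two decoding methods named in the title, the sketch below runs greedy search and beam search over a tiny hand-written table of next-token probabilities. The table, the `<s>` and `<e>` markers, and the function names are hypothetical stand-ins for a real language model, chosen so that the two methods disagree.

```python
import math

# Hypothetical next-token probabilities keyed by the previous token
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.5, "a": 0.4, "<e>": 0.1},
    "the": {"cat": 0.4, "dog": 0.35, "<e>": 0.25},
    "a": {"cat": 0.9, "<e>": 0.1},
    "cat": {"<e>": 1.0},
    "dog": {"<e>": 1.0},
}


def greedy_decode(start="<s>", end="<e>", max_len=10):
    """At every step, pick the single most probable next token."""
    sequence, log_prob = [start], 0.0
    while sequence[-1] != end and len(sequence) < max_len:
        options = NEXT_TOKEN_PROBS[sequence[-1]]
        token = max(options, key=options.get)
        log_prob += math.log(options[token])
        sequence.append(token)
    return sequence, math.exp(log_prob)


def beam_search_decode(beam_width=2, start="<s>", end="<e>", max_len=10):
    """Keep the beam_width most probable partial sequences at every step."""
    beams = [([start], 0.0)]
    for _ in range(max_len):
        candidates = []
        for sequence, log_prob in beams:
            if sequence[-1] == end:
                candidates.append((sequence, log_prob))  # finished; carry forward
                continue
            for token, prob in NEXT_TOKEN_PROBS[sequence[-1]].items():
                candidates.append((sequence + [token], log_prob + math.log(prob)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(seq[-1] == end for seq, _ in beams):
            break
    best_sequence, best_log_prob = beams[0]
    return best_sequence, math.exp(best_log_prob)


greedy_sequence, greedy_prob = greedy_decode()
beam_sequence, beam_prob = beam_search_decode(beam_width=2)
```

With this table, greedy search commits to "the" because it is the most likely first token and ends with a sequence probability of about 0.2, while beam search with width 2 keeps "a" alive and finds "a cat" at about 0.36. Setting `beam_width=1` reduces beam search to greedy search.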

NLP: Huggingface Transformers Code Examples

Do you want to build cutting-edge NLP models? Have you heard of Huggingface Transformers? Huggingface Transformers is a popular open-source library for NLP, which provides pre-trained machine learning models and tools to build custom NLP models. These models are based on the Transformer architecture, which has revolutionized the field of NLP by enabling state-of-the-art performance on a range of NLP tasks. In this blog post, I will provide Python code examples for using Huggingface Transformers for various NLP tasks such as text classification (sentiment analysis), named entity recognition, question answering, text summarization, and text generation. I used Google Colab for testing my code. Before getting started, get set up with transformers …
