Have you ever wondered how to build a scalable generative AI platform, based on OpenAI GPT models, that can serve many different applications? Are you a data scientist, product manager, or software engineer looking to understand the architecture of such a platform? This blog aims to demystify the architectural building blocks needed to create a robust GPT-based platform. By the end, you will have a clear roadmap for architecting, designing, and implementing your own platform for GPT-based large language model (LLM) applications.

Generative AI Platform Architecture for GPT-based LLM Apps

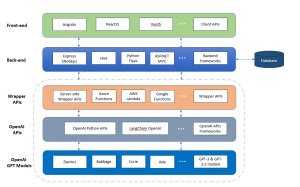

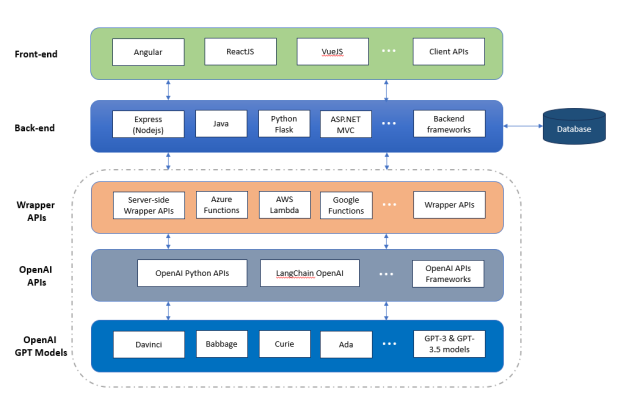

The diagrams above show the technology architecture of a generative AI platform that leverages OpenAI GPT models for LLM use cases such as text generation, text completion, text summarization, and conversation.

The following are the key building blocks of the generative AI platform represented in the diagram above. Note that the wrapper APIs, OpenAI APIs, and GPT models shown within the dashed line form the core of the platform. These components stay largely the same across applications and are enhanced from time to time. Client (frontend) and server (backend) apps, on the other hand, can differ from one application to another. Smaller organizations can also make the server-side application part of the platform, in which case mostly the client-side (frontend) apps will vary.

- Frontend or client-side app: Provides an interface for end users to interact with the platform, for example by entering prompts. Client-side apps can be built with Angular, React, Vue.js, or plain client APIs. In a modern software ecosystem, a “client-side app” is not necessarily used directly by end users. In a broader sense, a client-side app can be any application that consumes services or resources from another application. This can include:

  - Partner Apps: Applications developed by business partners who need to integrate closely with your services. They may use your APIs to access certain functionality, services, or data. For example, an e-commerce website may have a partner app that handles payments or inventory.

  - Apps from Different Departments: Within a large organization, different departments may develop their own applications for specific purposes. For example, the marketing department may have an analytics app, while the HR department has a separate app for employee management. Both of these apps could be clients of a centralized database or authentication service.

- Backend or server-side app: Server-side apps act as middleware that processes user inputs (prompts), interacts with generative AI models through the wrapper APIs, and returns the generated outputs. For a smaller organization, a single, well-designed server-side app can serve multiple client-side applications, reducing the overhead of building and maintaining a separate backend for each. In that case, the server-side app becomes part of the platform and falls within the dashed line in the diagram above. Server-side apps can be built with Java, Python (Flask), Node.js (Express), or other server-side frameworks, and they can be containerized and deployed on a container orchestration platform such as Kubernetes.

- Wrapper APIs: Wrapper APIs serve as an intermediary layer between your application and OpenAI’s API, standardizing and simplifying the API calls. They abstract away the complexity of invoking OpenAI APIs, which makes integration with the server-side application easier. Wrapper APIs are well suited to serverless functions such as Azure Functions, AWS Lambda, or Google Cloud Functions. Because serverless platforms abstract away server management and scale automatically with demand, they take care of much of the scalability, availability, and maintenance of the API layer, freeing developers to focus on code and functionality.

- OpenAI APIs: OpenAI APIs provide a standardized way to interact with various GPT models. You can call the OpenAI APIs directly or use LangChain modules to invoke them.

- OpenAI GPT models: OpenAI’s models power the core capabilities of the platform, from natural language processing (GPT-3 and GPT-3.5 models) to image generation (DALL·E) and code synthesis (Codex). You can read more about GPT-3 models in my blog post OpenAI GPT-3 Models List: Explained with Examples.
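To make the client/server split more concrete, here is a minimal sketch of how a client-side app, such as a partner or departmental app, might call the platform’s backend over HTTP using only Python’s standard library. The endpoint path `/v1/generate`, the JSON fields (`app_id`, `use_case`, `prompt`, `output`), the `X-Client-App` header, and the host `genai.example.com` are hypothetical; your backend defines its own contract.

```python
import json
import urllib.request

# Hypothetical backend contract: POST {base_url}/v1/generate with a JSON body,
# answered by a JSON object containing an "output" field.


def build_generate_request(base_url: str, app_id: str, use_case: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) the HTTP request a client app would issue."""
    payload = {"app_id": app_id, "use_case": use_case, "prompt": prompt}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Client-App": app_id},
        method="POST",
    )


def generate(base_url: str, app_id: str, use_case: str, prompt: str, timeout: float = 30.0) -> str:
    """Send the request to the backend and return the generated text."""
    request = build_generate_request(base_url, app_id, use_case, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["output"]


if __name__ == "__main__":
    req = build_generate_request("https://genai.example.com/", "hr-portal", "summarization",
                                 "Summarize our leave policy in three bullet points.")
    print(req.get_method(), req.full_url)  # POST https://genai.example.com/v1/generate
```

Keeping request construction separate from sending makes the client easy to test offline; a React or Angular frontend would issue the same JSON request with its own HTTP library.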
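The core of the server-side app described above can be small: identify which client app sent the request, validate the prompt, shape it for the requested use case, and delegate the model call to the wrapper API. The sketch below shows that flow in framework-agnostic Python so it could sit behind a Flask or Express-style route. The `ClientApp` registry, the prompt templates, and the `call_wrapper` callable are hypothetical placeholders, not part of any specific framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet

# Hypothetical prompt templates for use cases the platform supports.
TEMPLATES: Dict[str, str] = {
    "text_generation": "{prompt}",
    "summarization": "Summarize the following text concisely:\n\n{prompt}",
    "conversation": "{prompt}",
}


@dataclass(frozen=True)
class ClientApp:
    """Per-client settings, so one backend can serve many frontends."""
    app_id: str
    allowed_use_cases: FrozenSet[str]
    max_prompt_chars: int = 4000


@dataclass
class PromptService:
    call_wrapper: Callable[[str], str]  # delegates the model call to the wrapper API layer
    clients: Dict[str, ClientApp] = field(default_factory=dict)

    def register(self, app: ClientApp) -> None:
        self.clients[app.app_id] = app

    def handle(self, request: dict) -> dict:
        """Process one request body and return a JSON-serializable response."""
        app = self.clients.get(request.get("app_id", ""))
        if app is None:
            return {"status": 403, "error": "unknown client app"}
        use_case = request.get("use_case", "")
        if use_case not in app.allowed_use_cases or use_case not in TEMPLATES:
            return {"status": 400, "error": f"use case not allowed: {use_case}"}
        prompt = request.get("prompt")
        if not isinstance(prompt, str) or not prompt.strip() or len(prompt) > app.max_prompt_chars:
            return {"status": 400, "error": "prompt must be a non-empty string within the size limit"}
        output = self.call_wrapper(TEMPLATES[use_case].format(prompt=prompt.strip()))
        return {"status": 200, "output": output}
```

With this design, onboarding another partner or departmental app is a `register(...)` call with its own limits rather than a new backend, which is the consolidation benefit described for smaller organizations.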
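A wrapper API deployed as a serverless function can be quite thin. Below is a minimal sketch shaped as an AWS Lambda handler behind an API Gateway proxy integration (an `event` whose `body` is a JSON string, and a response with `statusCode`, `headers`, and `body`); Azure Functions and Google Cloud Functions use their own handler signatures. The model name, the `OPENAI_API_KEY` environment variable, and the injectable `complete` parameter are assumptions for illustration. The model call goes to OpenAI’s Chat Completions REST endpoint using only the standard library.

```python
import json
import os
import urllib.request
from typing import Callable, Optional

OPENAI_URL = "https://api.openai.com/v1/chat/completions"
DEFAULT_MODEL = "gpt-3.5-turbo"  # assumption: use whichever model your platform standardizes on


def openai_complete(prompt: str, model: str = DEFAULT_MODEL) -> str:
    """Call OpenAI's Chat Completions REST endpoint and return the first choice's text."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    request = urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the API key is provided through an environment variable.
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        data = json.loads(response.read().decode("utf-8"))
    return data["choices"][0]["message"]["content"]


def _response(status: int, payload: dict) -> dict:
    # Response shape for an API Gateway Lambda proxy integration.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event: dict, context: object, complete: Optional[Callable[[str], str]] = None) -> dict:
    """Validate the request, call the model, and hide OpenAI-specific details from callers."""
    complete = complete or openai_complete  # injectable for tests or for another provider
    try:
        request = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    prompt = request.get("prompt") if isinstance(request, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return _response(400, {"error": "'prompt' must be a non-empty string"})
    try:
        text = complete(prompt)
    except Exception as exc:  # network/HTTP errors, missing configuration, unexpected response shape
        return _response(502, {"error": f"upstream model call failed: {type(exc).__name__}"})
    return _response(200, {"output": text})
```

Because callers only ever see `{"output": ...}` or `{"error": ...}`, the platform can change models or providers inside the wrapper without touching the server-side apps.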
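The standardized interface that the OpenAI APIs offer is easiest to see in the shape of a Chat Completions request: a `model` name plus a list of role-tagged `messages`. The helper below is a sketch that assembles such a body, including earlier conversation turns, and reads the reply from a response. The helper names and the default `temperature` of 0.7 are illustrative choices, and the model name in the usage is only an example; check OpenAI’s API reference for the models and parameters available to your account.

```python
from typing import Dict, List, Optional, Sequence, Tuple

VALID_ROLES = {"system", "user", "assistant"}


def build_chat_payload(
    model: str,
    user_prompt: str,
    system_prompt: Optional[str] = None,
    history: Sequence[Tuple[str, str]] = (),
    temperature: float = 0.7,
) -> dict:
    """Assemble a Chat Completions request body; the same structure is reused across chat models."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for role, content in history:  # earlier turns of the conversation, oldest first
        if role not in VALID_ROLES:
            raise ValueError(f"invalid role: {role!r}")
        messages.append({"role": role, "content": content})
    messages.append({"role": "user", "content": user_prompt})
    return {"model": model, "messages": messages, "temperature": temperature}


def extract_reply(response: dict) -> str:
    """Return the assistant's text from a Chat Completions response body."""
    return response["choices"][0]["message"]["content"]
```

With the official `openai` Python package (v1.x), the same `messages` list would be passed to `client.chat.completions.create(model=..., messages=...)`; LangChain’s OpenAI chat integration constructs an equivalent request on your behalf.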

Conclusion

Navigating the complex landscape of generative AI platforms can be daunting, especially for smaller organizations with limited resources. However, as we’ve explored in this guide, a well-thought-out architecture can alleviate many of these challenges. By understanding the roles of the different components, from the front-end app that interfaces with users to the server-side app that acts as the workhorse, we can design a scalable, efficient, and cost-effective platform. For smaller organizations, using a single server-side app to serve multiple client-side applications is particularly compelling: it streamlines operations, reduces costs, and speeds up the deployment of new features or applications. With serverless technologies, even the wrapper API layer can be managed efficiently, freeing developers to focus on what truly matters: building powerful, AI-driven applications that solve real-world problems.

Whether you’re a data scientist seeking to deploy machine learning models, a product manager aiming to bring an AI-driven service to market, or a software engineer tasked with building a robust application, understanding these architectural principles is crucial. They give you not just a blueprint for current projects but also the knowledge to adapt and evolve in the ever-changing world of generative AI.
