Have you ever wondered how to make full use of large language models (LLMs) in your natural language processing (NLP) applications, the way ChatGPT does? Would you like to build an application like ChatGPT, where you write a prompt and get back output such as generated text or a summary? Learning to make a direct API call to an OpenAI LLM is a great start, but we can go further and build full-fledged applications that serve our end users’ needs. Building prompts that adapt dynamically to user input is one of the most important aspects of an LLM app. That’s where LangChain, a powerful framework, comes in. In this blog, we will delve into the concept of LangChain and showcase its usage through a practical example of an LLM Chain.

What’s an LLM Chain? How does it work?

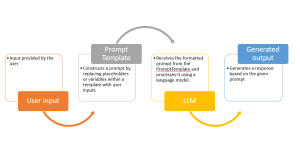

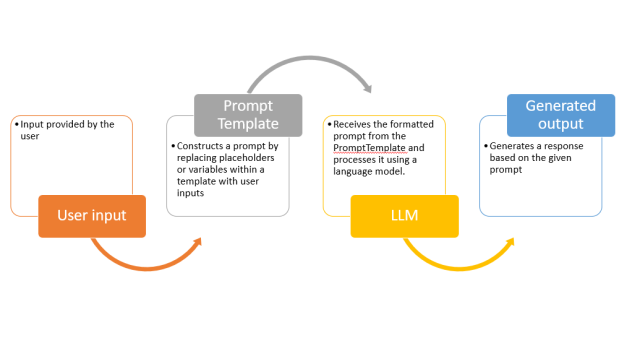

An LLM Chain, short for Large Language Model Chain, is a concept within the LangChain framework that combines different primitives with large language models (LLMs) to create a sequence of operations for natural language processing (NLP) tasks such as completion, text generation, and text classification. It lets you orchestrate a series of steps to process user input, generate prompts (using PromptTemplate), and obtain meaningful outputs from an LLM. The LLM Chain acts as a pipeline (as shown in the diagram), where the output of one step serves as the input to the next step. This chaining mechanism enables you to perform complex operations by breaking them down into smaller, manageable steps.

The following is a representation of how an LLM Chain works:

In the diagram above, the LLM Chain consists of three main components:

- User Input: This represents the input provided by the user. It can be any form of text or information that serves as the basis for generating the desired output. For example, a prompt can be – “Explain machine learning concept in one paragraph”.

- PromptTemplate: The PromptTemplate is a primitive in the LLM Chain. It takes the user input and dynamically constructs a prompt by replacing placeholders or variables within a template. The prompt is designed to elicit the specific information needed for the task.

- LLM: The LLM (large language model) is another component of the LLM Chain. It receives the formatted prompt from the PromptTemplate and generates a response based on that prompt and on the language patterns it learned during training.

By chaining the steps together, we can build powerful NLP applications that go beyond simple API calls to LLMs.
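The three components above can be sketched in plain Python, without LangChain or an API key. This is only an illustration of the idea, not LangChain's implementation: `SimpleTemplate`, `fake_llm`, and `SimpleChain` are hypothetical names, and `fake_llm` simply echoes the prompt instead of calling a real model.

```python
from string import Formatter
from typing import Callable


class SimpleTemplate:
    """Hypothetical stand-in for a prompt template: fills {placeholders}."""

    def __init__(self, template: str) -> None:
        self.template = template
        # Collect the placeholder names that appear in the template text.
        self.input_variables = [
            name for _, name, _, _ in Formatter().parse(template) if name
        ]

    def format(self, **values: str) -> str:
        # Fail early if the caller did not supply every placeholder.
        missing = [v for v in self.input_variables if v not in values]
        if missing:
            raise KeyError(f"Missing values for: {missing}")
        return self.template.format(**values)


def fake_llm(prompt: str) -> str:
    """Assumption: a stub that stands in for a real model call."""
    return f"[model response to: {prompt}]"


class SimpleChain:
    """Feeds the formatted prompt into the LLM, mirroring the pipeline."""

    def __init__(self, llm: Callable[[str], str], prompt: SimpleTemplate) -> None:
        self.llm = llm
        self.prompt = prompt

    def run(self, **values: str) -> str:
        # Step 1: user input -> formatted prompt. Step 2: prompt -> LLM output.
        return self.llm(self.prompt.format(**values))


chain = SimpleChain(
    llm=fake_llm,
    prompt=SimpleTemplate("Explain {concept} in one paragraph."),
)
result = chain.run(concept="machine learning")
print(result)
```

Swapping `fake_llm` for a function that calls a real model would leave the rest of the pipeline unchanged, which is the main appeal of keeping the steps separate.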

LLM Chain OpenAI / ChatGPT Example

In this section, we will learn how to create an LLM Chain, as described in the previous section, to build our application. Before executing the code below, you may want to set up the environment by running the following commands.

```
!pip install langchain==0.0.275
# langchain 0.0.275 predates the openai 1.x client, so pin an older release
!pip install "openai<1"
!pip install azure-core
```

The following code can be used to execute your first LLM Chain. Replace the OpenAI API key with your own key; the code below uses a dummy key.

```python
# Step 1: Import the necessary modules
import os

from langchain.prompts import PromptTemplate
from langchain.llms import OpenAI
from langchain.chains import LLMChain

# Step 2: Set the OpenAI API key
os.environ["OPENAI_API_KEY"] = "abc-cv89le9R94dFGWAGVTwCVxBNH65CVzTdAPmFnlaD2d6baRn"

# Step 3: Get user input
user_input = input("Enter a concept: ")

# Step 4: Define the Prompt Template
prompt = PromptTemplate(
    input_variables=["concept"],
    template="Define {concept} with a real-world example?",
)

# Step 5: Print the Prompt Template
print(prompt.format(concept=user_input))

# Step 6: Instantiate the LLMChain
llm = OpenAI(temperature=0.9)
chain = LLMChain(llm=llm, prompt=prompt)

# Step 7: Run the LLMChain
output = chain.run(user_input)
print(output)
```

When you execute the above code, the following will happen:

- Step 1: Import the necessary modules from the LangChain library.

- Step 2: Set the OpenAI API key by assigning it to the OPENAI_API_KEY environment variable. Make sure to replace the provided API key with your own valid key.

- Step 3: A user input prompt is displayed, and the entered value is stored in the variable user_input using the input function.

- Step 4: Define the Prompt Template using PromptTemplate.

- Step 5: The prompt template is printed by formatting it with the user_input variable. This ensures that the user’s input is used as the value for the “concept” in the prompt template.

- Step 6: Instantiate the LLMChain by initializing an LLM object (e.g., OpenAI) and passing it, along with the prompt template, to the LLMChain constructor. Note the temperature argument passed to the OpenAI constructor. It controls how much randomness the LLM uses when producing its output. The OpenAI API accepts temperature values from 0 to 2. Higher values make the output more varied and creative, while values near 0 make it more focused and deterministic.

- Step 7: The LLMChain is executed by passing the user_input variable to the run method, allowing the LLM to process the specific concept entered by the user.
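To build some intuition for the temperature setting in Step 6, the sketch below shows how temperature is commonly applied during sampling: the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The logits are made-up numbers, and the function illustrates the general technique rather than OpenAI's actual implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    # A temperature of exactly 0 (always pick the top token) is not
    # modeled here, because it would mean dividing by zero.
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    # Subtract the max for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 0.9)

print("temperature=0.2:", [round(p, 3) for p in low])
print("temperature=0.9:", [round(p, 3) for p in high])
```

At the lower temperature almost all of the probability lands on the top-scoring token, so the output is more predictable. At the higher temperature the less likely tokens get a larger share, which is where the extra variety comes from.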

As you execute the code, you will be asked to enter an input. You can enter any concept you like, and the code will dynamically generate the prompt and run the LLMChain accordingly. The response will define the concept with the help of an example. Note that you have now made a reusable prompt that can be used in your Python web app or Flask app.
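One way to reuse the prompt from a web handler is to wrap it in a small function that takes the model call as a parameter. The sketch below is an assumption about how that could look: `define_concept` and `echo_llm` are hypothetical names, and in a real app the `llm` argument would be whatever callable sends the prompt text to your model.

```python
from typing import Callable

PROMPT_TEMPLATE = "Define {concept} with a real-world example?"


def define_concept(concept: str, llm: Callable[[str], str]) -> str:
    """Validate the input, build the prompt, and pass it to the model."""
    cleaned = concept.strip()
    if not cleaned:
        raise ValueError("concept must not be empty")
    prompt = PROMPT_TEMPLATE.format(concept=cleaned)
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Assumption: a stub used here so the sketch runs without an API key."""
    return f"(stub) {prompt}"


# The same function serves any number of requests with different inputs.
for concept in ["overfitting", "  gradient descent  "]:
    print(define_concept(concept, echo_llm))
```

Passing the model call in as an argument keeps the function easy to test, since a stub can replace the real LLM without touching the prompt logic.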

Conclusion

An LLM Chain is a sequence of steps within the LangChain framework that combines primitives and LLMs to process user input, generate prompts, and leverage OpenAI large language models (LLMs) for NLP tasks. It provides a flexible mechanism for building sophisticated language processing applications. By using LLM Chains, we can build on the capabilities of OpenAI LLMs, make our ChatGPT-like applications more interactive, and enable them to provide personalized, context-aware responses to users.
