Tag Archives: generative ai

Machine Learning Definition, Examples, Method, Types

Last updated: 3rd Jan, 2024 Machine learning is a machine’s ability to learn from data. It has been around for decades, but it is now being applied in nearly every industry and job function. In this blog post, we’ll give a detailed introduction to machine learning (ML), including several definitions. We will also look at the different types of machine learning tasks and algorithms, along with real-world examples. What is machine learning & how does it work? Definition 1: Simply put, machine learning can be defined as an approach to modeling our beliefs about real-world events. For example, let’s say a person came to a doctor with a …

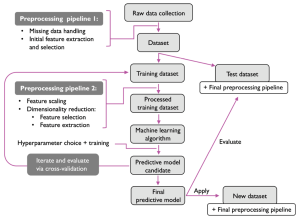

Machine Learning Models Solution Design: Examples

This blog is crafted for data scientists, machine learning (ML) and software engineers, business analysts / product managers, and anyone involved in the ML project lifecycle, aiming to create a reliable solution design and development strategy / plan for successful AI / machine learning project implementation and value realization. The blog revolves around a series of critical solution design questions, meticulously curated to guide teams from the initial conception of a project to its final deployment and beyond. By addressing each of these solution design questions, teams can ensure that they are not only building a model that is technically proficient but also one that aligns seamlessly with business objectives, …

Micro-average, Macro-average, Weighting: Precision, Recall, F1-Score

Last updated: 30th Dec, 2023 In this post, you will learn how to use micro-averaging and macro-averaging when evaluating scoring metrics (precision, recall, F1-score) for multi-class classification problems. You will also learn about weighting, another averaging choice for precision, recall, and F1-score in multi-class classification. The concepts will be explained with Python code examples. What & Why of Micro, Macro-averaging and Weighting metrics? Micro- and macro-averaging are used when evaluating classification models to compute performance metrics like precision, recall, and F1-score. These methods are especially relevant in scenarios involving multi-class or multi-label classification. In case of multi-class classification, …
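To make the three averaging choices concrete, here is a minimal sketch in plain Python for precision only. The function name `averaged_precision` and the toy labels are illustrative assumptions, not code from the post, which may well use a library such as scikit-learn instead.

```python
from collections import Counter

def averaged_precision(y_true, y_pred):
    """Micro-, macro-, and weighted-average precision for single-label multi-class data."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp = Counter(), Counter()
    support = Counter(y_true)  # number of true instances per class
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[p] += 1  # correct prediction of class p
        else:
            fp[p] += 1  # class p predicted, but the true class differs
    # Per-class precision: TP / (TP + FP); 0.0 if the class was never predicted
    per_class = {}
    for c in labels:
        predicted = tp[c] + fp[c]
        per_class[c] = tp[c] / predicted if predicted else 0.0
    # Micro: pool TP and FP over all classes, then compute a single ratio
    micro = sum(tp.values()) / (sum(tp.values()) + sum(fp.values()))
    # Macro: unweighted mean of the per-class scores
    macro = sum(per_class.values()) / len(labels)
    # Weighted: mean of the per-class scores weighted by class support
    weighted = sum(per_class[c] * support[c] for c in labels) / len(y_true)
    return {"micro": micro, "macro": macro, "weighted": weighted}

# Toy, imbalanced example (hypothetical data)
y_true = ["a", "a", "a", "a", "b", "b", "c"]
y_pred = ["a", "a", "a", "b", "b", "c", "c"]
scores = averaged_precision(y_true, y_pred)
print(scores)
```

On imbalanced data the three numbers differ: macro-averaging treats every class equally, while micro-averaging and weighting let the larger classes dominate.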

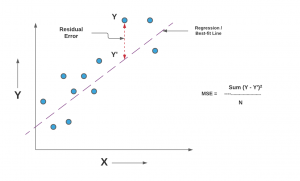

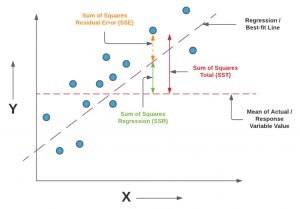

Mean Squared Error or R-Squared – Which one to use?

Last updated: 29th Dec, 2023 As you embark on your journey to understand and evaluate the performance of regression models, it’s crucial to know when to use each of these metrics and what they reveal about your model’s accuracy. In this post, you will learn about the concepts of mean squared error (MSE) and R-squared (R2), the difference between them, and which one to use when evaluating linear regression models. Note that MSE is very closely related to root mean squared error (RMSE), which is also discussed in this blog. You will also work through Python examples to understand the concepts better. For learning the differences between other …
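As a rough illustration of how the three quantities relate, the sketch below computes MSE, RMSE, and R-squared from their standard definitions in plain Python. The function name `regression_metrics` and the sample values are assumptions made for this example.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, and R-squared for paired true and predicted values."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    # Residual sum of squares: error left after the model's predictions
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean of y_true
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "mse": mse,                  # in squared units of the target
        "rmse": math.sqrt(mse),      # in the same units as the target
        "r2": 1 - ss_res / ss_tot,   # unitless, relative to the mean baseline
    }

# Hypothetical values for illustration
metrics = regression_metrics([3, 5, 7, 9], [2, 5, 8, 9])
print(metrics)
```

MSE and RMSE depend on the scale of the target, so they are suited to comparing models on the same dataset, whereas R-squared is scale-free and describes the share of variance explained.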

Data Science Competitions on Different Online Platforms

Data science / machine learning is an ever-evolving field, and competitions provide a great way for beginners and practitioners to hone their skills, solve real-world problems, enhance their resumes / CVs, and even earn rewards. Here’s a roundup of some notable machine learning / data science / AI competition platforms. Each offers unique opportunities and challenges, making them suitable for beginners and expert data scientists alike, at any stage of their careers, to learn, compete, and contribute to a wide array of problems.

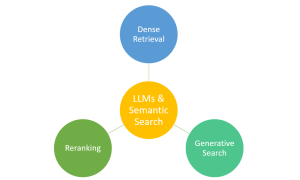

Large Language Models (LLMs) & Semantic Search: Examples

Have you ever marveled at how typing a few words into a search engine yields exactly the information you’re looking for from the vast expanse of the web? This is largely thanks to the advancements in semantic search, bolstered by technologies like Large Language Models (LLMs). Semantic search, which focuses on understanding the intent and contextual meaning behind queries, benefits from LLMs to provide more accurate and relevant results. However, it’s important to note that traditional search engines also rely on a sophisticated mix of algorithms, indexing, and ranking systems. LLMs complement these systems by enhancing their ability to interpret complex queries, making your search experience more intuitive and effective. …

Introducing Our New Data Science & AI Trends Page

We are thrilled to announce the launch of our dedicated Data Science and AI Trends page at VitalFlux.com! This new resource is designed to be a one-stop hub for data scientists, AI enthusiasts, and anyone passionate about staying at the forefront of technological innovation. What You’ll Find Our Data Science & AI Trends page is more than just a collection of articles; it’s a dynamic resource that aggregates the most insightful and current information from various high-impact sources. Here’s a sneak peek at what you can expect: Web Pages Stay informed with our selection of web pages from leading research institutions, tech news outlets, and individual thought leaders in the …

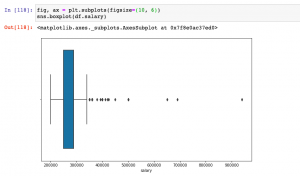

Python – Replace Missing Values with Mean, Median & Mode

Last updated: 18th Dec, 2023 Have you found yourself asking how to deal with missing values at the data analysis stage? When working with Python, have you wondered how to replace missing values in a Pandas data frame? Missing values are common in real-world problems when the data is aggregated over long time stretches from disparate sources, and reliable machine learning modeling demands careful handling of missing data. One strategy is imputing the missing values, and a wide variety of algorithms exist spanning simple interpolation (mean, median, mode), matrix factorization methods like SVD, statistical models like Kalman filters, and deep …
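The simple strategies (mean, median, mode) can be sketched with the standard library alone. The `impute` helper and the `marks` values below are hypothetical; with Pandas, the same idea is typically expressed by passing the computed statistic to `fillna`.

```python
import statistics

def impute(values, strategy="mean"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    estimators = {
        "mean": statistics.mean,
        "median": statistics.median,
        "mode": statistics.mode,
    }
    fill_value = estimators[strategy](observed)
    return [fill_value if v is None else v for v in values]

# Hypothetical column with two missing entries
marks = [10, None, 20, 20, None, 50]
mean_filled = impute(marks, "mean")      # missing entries become 25
median_filled = impute(marks, "median")  # missing entries become 20.0
mode_filled = impute(marks, "mode")      # missing entries become 20
print(mean_filled, median_filled, mode_filled)
```

The mean is sensitive to outliers (here the single value 50 pulls it up), which is one reason the median is often preferred for skewed numeric data and the mode for categorical data.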

Tool – Machine Learning Algorithm Cheat Sheet

Here is a comprehensive and user-friendly tool designed to bridge the gap between complex machine learning concepts and practical understanding. Whether you’re a student, educator, data scientist, or just a curious learner, this tool is your go-to resource for quick insights into some of the most popular and widely used machine learning algorithms. From Linear Regression to more advanced techniques like XGBoost and Principal Component Analysis, the plugin offers a succinct summary of each algorithm, including its definition, typical use cases, and applicable Python and R libraries. Select a Machine Learning Algorithm Select a machine learning algorithm from the drop-down to view and learn the details. Select a Feature Scaling …

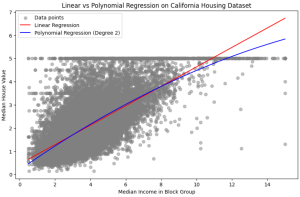

Linear Regression vs. Polynomial Regression: Python Examples

In the realm of predictive modeling and data science, regression analysis stands as a cornerstone technique. It’s essential for understanding relationships in data, forecasting trends, and making informed decisions. This guide delves into the nuances of Linear Regression and Polynomial Regression, two fundamental approaches, highlighting their practical applications with Python examples. What are Linear and Polynomial Regression? In this section, we will learn what linear and polynomial regression are. What is Linear Regression? Linear Regression is a statistical method used in predictive analysis. It’s a straightforward approach for modeling the relationship between a dependent variable (often denoted as y) and one or more independent variables (denoted as x). In …

Linear Regression vs Logistic Regression: Python Examples

Last updated: 15th Dec, 2023 In the ever-evolving landscape of machine learning, two regression algorithms stand out for their simplicity and effectiveness: Linear Regression and Logistic Regression. But what exactly are these algorithms, and how do they differ from each other? At first glance, logistic regression and linear regression might seem very similar – after all, they share the word “regression.” However, the devil, as they say, is in the details. Each method is uniquely tailored to solve specific types of problems, and understanding these subtleties is key to unlocking their full potential. Linear regression and logistic regression are both machine learning algorithms used for modeling relationships between variables but …
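One way to see the contrast is a minimal sketch with a single feature: both models compute the same linear combination, but logistic regression passes it through the sigmoid function so the output can be read as a probability. The function names and the weight and bias values here are illustrative assumptions.

```python
import math

def linear_predict(x, weight, bias):
    """Linear regression: the output is an unbounded real number."""
    return weight * x + bias

def logistic_predict(x, weight, bias):
    """Logistic regression: the same linear term squashed into (0, 1) by the sigmoid."""
    z = weight * x + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical parameters, for illustration only
value = linear_predict(2, weight=3, bias=1)             # a continuous value
probability = logistic_predict(2, weight=1, bias=0)     # a probability-like score
label = 1 if probability >= 0.5 else 0                  # thresholded class label
print(value, probability, label)
```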

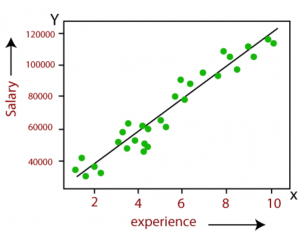

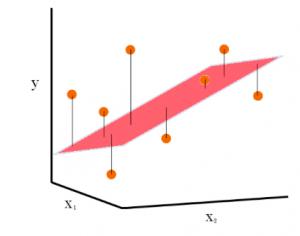

Linear Regression in Machine Learning: Python Examples

Last updated: 15th Dec, 2023 In this post, linear regression concepts in machine learning are explained with multiple real-life examples. Two types of regression models (simple/univariate and multiple/multivariate linear regression) are used as examples. In addition, Python code examples demonstrate the training of simple linear and multiple linear regression models. If you are a machine learning or data science beginner, you may find this post helpful. You may also want to check a detailed post: What is Machine Learning? Concepts & Examples. What is Linear Regression? Linear regression is a machine learning concept that is used to build or train the models …

Random Forest vs XGBoost: Which One to Use? Examples

Understanding the differences between the XGBoost and Random Forest machine learning algorithms is crucial, as it guides the selection of the most appropriate model for a given problem. Random Forest, with its simplicity and parallel computation, is ideal for quick model development and when dealing with large datasets, whereas XGBoost, with its sequential tree building and regularization, excels in achieving higher accuracy, especially in scenarios where overfitting is a concern. This knowledge helps practitioners balance computational efficiency against predictive performance, tailor models to specific data characteristics, and optimize their approach for either rapid prototyping or precision-focused tasks. In this blog, we will learn the difference between Random Forest …

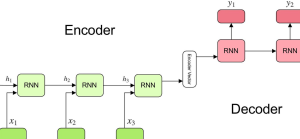

Generative AI Examples, Use Cases, Applications

Last updated: 12th Dec, 2023 Machine learning, particularly in the field of Generative AI or generative modeling, has seen significant advancements recently. Generative AI involves algorithms that create new data samples and is widely recognized for its ability to produce not only coherent text but also highly realistic images, videos, and music. Among the most popular Generative AI applications are Large Language Models (LLMs) like GPT-3 and GPT-4, which are used for tasks like text generation, summarization, and machine translation. This technology has gained immense popularity due to its diverse applications and the impressive realism of the content it generates. As a data scientist, it is crucial to …

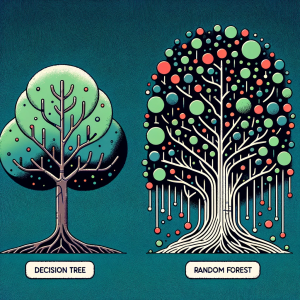

Difference Between Decision Tree and Random Forest

Last updated: 11th Dec, 2023 In machine learning, there are a few different tree-based algorithms that can be used for both regression and classification tasks. Two of the most popular are decision trees and random forests. A decision tree is a basic machine learning model, resembling a flowchart. Random Forest, an advanced technique, combines multiple decision trees to enhance accuracy and reduce overfitting, using averaging or voting for final predictions. Essentially, Random Forest is a collection of decision trees working together. These algorithms have both similarities and differences, and in this blog post, we’ll take a look at the key differences between them. What’s Decision Tree Algorithm? How …
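The "averaging or voting" step can be sketched independently of how the individual trees are trained. In the snippet below, the per-tree outputs are hypothetical inputs and the function names are assumptions made for illustration.

```python
from collections import Counter

def forest_classify(tree_votes):
    """Classification: return the class predicted by the most trees (majority vote)."""
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Regression: return the mean of the individual tree predictions."""
    return sum(tree_outputs) / len(tree_outputs)

# Hypothetical predictions from three trees for one sample
predicted_class = forest_classify(["spam", "ham", "spam"])
predicted_value = forest_regress([2.0, 4.0, 6.0])
print(predicted_class, predicted_value)
```

A single decision tree corresponds to the degenerate case of one vote, which is why an individual tree's mistakes go uncorrected while a forest can outvote them.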

F-test & F-statistics in Linear Regression: Formula, Examples

Last updated: 11th Dec, 2023 In this blog post, we will take a look at the concepts and formula of the F-test and the related F-statistic in linear regression models, and understand how to perform an F-test and interpret the F-statistic with the help of examples. Interpreting the F-test and F-statistic is key if you want to assess whether the linear regression model results in a statistically significant fit to the data overall. An insignificant F-test, determined by comparing the F-statistic against the critical region, implies that there is no evidence of a linear relationship between the predictors and the target variable. We will start by discussing the importance of F-test and f-statistics in linear regression models …
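For the overall significance test, the F-statistic is commonly computed as the explained variation per predictor divided by the residual variation per residual degree of freedom. The sketch below follows that standard formula for a model with an intercept; the function name and the sample sums of squares are assumptions made for this example.

```python
def f_statistic(ss_total, ss_residual, n_samples, n_predictors):
    """Overall F-statistic for a linear regression model with an intercept."""
    df_model = n_predictors                      # degrees of freedom of the model
    df_residual = n_samples - n_predictors - 1   # degrees of freedom of the residuals
    ms_model = (ss_total - ss_residual) / df_model  # mean square explained by the model
    ms_residual = ss_residual / df_residual         # mean square of the residuals
    return ms_model / ms_residual

# Hypothetical values: 4 samples, 1 predictor
f_value = f_statistic(ss_total=20.0, ss_residual=2.0, n_samples=4, n_predictors=1)
print(f_value)
```

The resulting value is then compared with the critical value of the F-distribution for the model and residual degrees of freedom at the chosen significance level.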
