Accounts Payable Machine Learning Use Cases

The market for machine learning in accounts payable is expected to grow from $6.1 million in 2016 to $76.8 million by 2021, at a compound annual growth rate (CAGR) of 53 percent. The software industry is rapidly embracing machine learning for accounts payable. As accounts payable becomes more automated, it also becomes more data-driven. Machine learning is giving accounts payable stakeholders powerful new capabilities in this area. In this blog post, you will learn about machine learning / deep learning / AI use cases for accounts payable. What is Accounts Payable? Accounts payable is a crucial part of the business process because it helps to ensure that businesses have the …

85+ Free Online Books, Courses – Machine Learning & Data Science

This post presents a comprehensive list of 85+ free books/ebooks and courses on machine learning, deep learning, data science, optimization, etc., which are available online for self-paced learning. It should be very helpful for data scientists who are starting to learn, or looking to gain expertise in, machine learning / deep learning. Please feel free to comment or suggest additions if I missed one or more important books that you like and would like to share. Also, sorry for the typos. The books are categorized under the following key areas: Data Science; Pattern Recognition & Machine Learning; Probability & Statistics; Neural Networks & Deep Learning; Optimization; Data Mining; Mathematics. Here is my post …

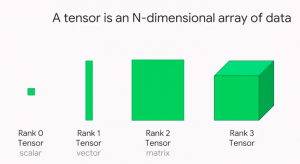

Tensor Explained with Python Numpy Examples

Tensors are a hot topic in the world of data science and machine learning. But what are tensors, and why are they so important? In this post, we will explain the concept of a tensor using simple Python NumPy examples. We will also discuss some of the ways that tensors can be used in data science and machine learning. When starting to learn deep learning, you must get a good understanding of the tensor data structure, as it is widely used as the basic data structure in frameworks such as TensorFlow, PyTorch, Keras, etc. Stay tuned for more information on tensors! What are tensors, and why are …
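As a quick taste of the idea, here is a minimal sketch (not taken from the full post) that treats NumPy arrays as tensors of increasing rank. The variable names are illustrative assumptions.

```python
import numpy as np

# Tensors of increasing rank (number of axes), represented as NumPy arrays.
scalar = np.array(5)                         # rank 0: a single number
vector = np.array([1, 2, 3])                 # rank 1: a list of numbers
matrix = np.array([[1, 2, 3], [4, 5, 6]])    # rank 2: rows and columns
cube = np.zeros((2, 3, 4))                   # rank 3: a stack of matrices

for name, tensor in [("scalar", scalar), ("vector", vector),
                     ("matrix", matrix), ("cube", cube)]:
    # ndim is the rank; shape gives the size along each axis.
    print(name, "ndim =", tensor.ndim, "shape =", tensor.shape)
```

The rank (`ndim`) and `shape` are usually the first two things worth checking when a deep learning framework complains about its input.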

Supplier Relationship Management & Machine Learning / AI

Supplier relationship management (SRM) is the process of managing supplier relationships to develop and maintain a strategic procurement partnership. SRM includes focus areas such as supplier selection, procurement strategy development, procurement negotiation, and performance measurement and improvement. SRM has been around for over 20 years, but we are now seeing new technologies such as machine learning come into play. What exactly do advanced analytics such as artificial intelligence (AI) / machine learning (ML) have to do with SRM? And how will AI/ML technologies transform procurement? What are some real-world machine learning use cases related to supplier relationship management? What are a few SRM KPIs/metrics which can be tracked by leveraging …

Feedforward Neural Network Python Example

A feedforward neural network, also known as a multi-layer perceptron, is composed of layers of neurons that propagate information forward. In this post, you will learn about the concepts of a feedforward neural network along with a Python code example. We will start by discussing what a feedforward neural network is and why it is used. We will then walk through how to code a feedforward neural network in Python. To get a good understanding of deep learning concepts, it is of utmost importance to learn the concepts behind the feedforward neural network clearly. A feedforward neural network learns its weights using the backpropagation algorithm, which will be discussed …
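To make "propagating information forward" concrete, here is a minimal sketch of a forward pass in plain Python. It is not the code from the full post: the layer sizes, the weight values and the sigmoid activation are all assumptions chosen for illustration, and no training (backpropagation) is shown.

```python
import math

def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds neuron j's incoming weights."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each layer in order, front to back."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.3, -0.6, 0.9]], [0.05]),
]

output = forward([1.0, 2.0], layers)
print(output)
```

Each layer only ever reads the output of the layer before it, which is what distinguishes a feedforward network from architectures with loops.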

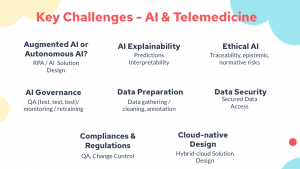

Artificial Intelligence (AI) for Telemedicine: Use cases, Challenges

In this post, you will learn about different artificial intelligence (AI) use cases of Telemedicine / Telehealth, including some of the key implementation challenges pertaining to AI / machine learning. If you are working in the field of data science / machine learning, you may want to go through some of the challenges, primarily AI related, that have arisen in the Telemedicine domain due to the upsurge in demand for reliable Telemedicine services. What is Telemedicine? Telemedicine is the remote delivery of healthcare services, using digital communication technologies. It has the potential to improve access to healthcare, especially in remote or underserved communities. It can be used for a variety of purposes, including …

Digital Healthcare Technology & Innovations: Examples

Digital healthcare technology is making waves in the medical community. It has the potential to change the way we approach healthcare, and it is already starting to revolutionize the way patients are treated. In this blog post, we will explore some of the most exciting digital healthcare technologies, including AI / machine learning and blockchain-based applications, initiatives and innovations. We will also take a look at some real-world examples of how these technologies are being used to improve patient care. Digital health refers to the use of digital technology to improve the delivery of healthcare services. Connected health (also known as i-health) is a term that encompasses all digital …

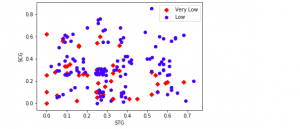

Scatter plot Matplotlib Python Example

If you’re a data scientist, data analyst or a Python programmer, data visualization is a key part of your job. And what better way to visualize all that juicy data than with a scatter plot? Matplotlib is your trusty Python library for creating charts and graphs, and in this blog we’ll show you, with examples, how to use it to create beautiful scatter plots. So dig into your data set, get coding, and see what insights you can uncover! What is a Scatter Plot? A scatter plot is a type of data visualization that is used to show the relationship between two variables. Scatter …

Why AI & Machine Learning Projects Fail?

AI / Machine Learning and data science projects are becoming increasingly popular for businesses of all sizes. Every organization is trying to leverage AI to further automate its business processes and gain a competitive edge by delivering innovative solutions to its customers. However, many of these AI & machine learning projects fail for various reasons. In this blog post, we will discuss some of the reasons why AI / Machine Learning / Data Science projects fail, and how you can avoid them. The following are some of the reasons why AI / Machine learning projects fail: Lack of understanding of business problems / opportunities; Ineffective solution design approaches; Lack …

Weight Decay in Machine Learning: Concepts

Weight decay is a popular technique in machine learning that helps models make more accurate predictions on unseen data. In this post, we’ll take a closer look at what weight decay is and how it works. We’ll also discuss some of the benefits of using weight decay and explore some possible applications. As data scientists, it is important to understand weight decay, as it helps in building machine learning models with better generalization performance. Stay tuned! What is weight decay and how does it work? Weight decay is a regularization technique that is used to limit the size of the weights in machine learning models. Weight …
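One common way to realize this idea is to add a small pull toward zero to every gradient-descent update. The sketch below assumes that L2-style formulation; the function name `sgd_step`, the learning rate and the decay coefficient are hypothetical choices, not values from the full post.

```python
def sgd_step(weights, grads, lr=0.1, weight_decay=0.01):
    """One gradient-descent step with L2-style weight decay.

    Each weight moves against its loss gradient and is additionally
    shrunk toward zero by a term proportional to the weight itself.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

weights = [2.0, -3.0]

# With zero loss gradient, only the decay term acts: every weight
# shrinks by the same factor (1 - lr * weight_decay).
decayed = sgd_step(weights, grads=[0.0, 0.0])
print(decayed)
```

Because the shrinkage is proportional to the weight, large weights are penalized more than small ones, which is what discourages the model from relying too heavily on any single feature.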

Purpose of Dashboard: Advantages & Disadvantages

A dashboard is a visual representation of the most important information needed to achieve a goal. Dashboards form an integral part of analytical solutions. As the demand for data analytics continues to grow, dashboards are well-positioned to become one of the most essential tools in any business toolkit. A dashboard can provide an overview of what is happening in your business and help you make better decisions. While there are many advantages to using a dashboard, there are also some disadvantages that you should be aware of. In this blog post, we will discuss the purpose of the dashboard and its advantages and disadvantages. As a product manager/business analyst and data …

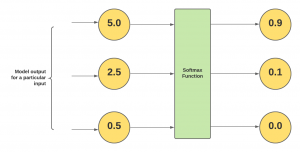

Softmax Regression Explained with Python Example

In this post, you will learn what the Softmax function and Softmax regression are, with Python code examples, and why we need them. As a data scientist or machine learning enthusiast, it is very important to understand the concepts of Softmax regression, as it helps in better understanding algorithms such as neural networks, multinomial logistic regression, etc. Note that the Softmax function is used in various multiclass classification machine learning algorithms such as multinomial logistic regression (thus also called softmax regression), neural networks, etc. Before getting into the concepts of softmax regression, let’s understand what the softmax function is. What is the Softmax function? Simply speaking, the Softmax function converts raw …
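As a minimal sketch of the function itself (not the code from the full post), softmax exponentiates each raw score and divides by the sum of the exponentials, so the outputs are positive and add up to 1. Subtracting the maximum score first is a standard numerical-stability trick; the example scores are made up.

```python
import math

def softmax(scores):
    """Convert a list of raw scores into probabilities that sum to 1."""
    # Shifting by the max leaves the result unchanged but avoids overflow.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

The largest score always receives the largest probability, which is why taking the arg-max of the softmax output gives the predicted class in multiclass classification.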

Information Theory, Machine Learning & Cross-Entropy Loss

What is information theory? How is information theory related to machine learning? These are some of the questions that we will answer in this blog post. Information theory is the study of how much information is present in the signals or data we receive from our environment. AI / machine learning (ML) is about extracting interesting representations/information from data, which are then used for building models. Thus, information theory fundamentals are key to processing information while building machine learning models. In this blog post, we will provide examples of information theory concepts and entropy concepts so that you can better understand them. We will also discuss how concepts of …
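To give the entropy idea a concrete shape, here is a small sketch (not from the full post) of Shannon entropy and cross-entropy measured in bits. The distributions `p` and `q` are made-up examples, and `cross_entropy` assumes `q` is non-zero wherever `p` is non-zero.

```python
import math

def entropy(p):
    """Shannon entropy of distribution p, in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy of predicted distribution q against true distribution p.

    Assumes q[i] > 0 wherever p[i] > 0; otherwise log2 is undefined.
    """
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.5]        # true distribution: a fair coin
q = [0.9, 0.1]        # a model that is overconfident about heads

print(entropy(p))
print(cross_entropy(p, q))
```

Cross-entropy is never smaller than the entropy of the true distribution, and the two are equal only when the prediction matches it, which is why minimizing cross-entropy loss pushes a model's predicted probabilities toward the true ones.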

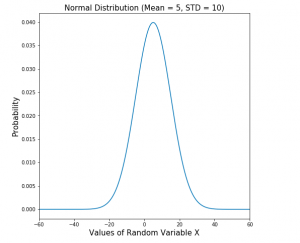

Normal Distribution Explained with Python Examples

What is a normal distribution? It’s a probability distribution that occurs in many real-world cases. In this blog post, you will learn about the concepts of the normal distribution with the help of Python examples. As a data scientist, you must get a good understanding of different probability distributions in statistics in order to understand your data better. The normal distribution is also called the Gaussian distribution or the Laplace-Gauss distribution. Normal Distribution with Python Example The normal distribution is the default probability distribution assumed for many real-world scenarios. It represents a symmetric distribution where most of the observations cluster around the central peak, called the mean of the distribution. A normal distribution can be thought of as a …
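The symmetry and the clustering around the mean can be checked with Python's standard library. This is a minimal sketch using `statistics.NormalDist` (Python 3.8+) on a standard normal distribution; the full post may well use other libraries.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1.
standard = NormalDist(mu=0.0, sigma=1.0)

# Symmetry: the density is the same at equal distances either side of the mean.
left, right = standard.pdf(-1.5), standard.pdf(1.5)

# Clustering: probability mass within one and two standard deviations of the mean.
within_one = standard.cdf(1.0) - standard.cdf(-1.0)
within_two = standard.cdf(2.0) - standard.cdf(-2.0)

print(f"pdf(-1.5) = {left:.4f}, pdf(1.5) = {right:.4f}")
print(f"within 1 sd: {within_one:.4f}, within 2 sd: {within_two:.4f}")
```

Roughly 68% of the probability lies within one standard deviation of the mean and roughly 95% within two, which is the familiar rule of thumb for normally distributed data.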

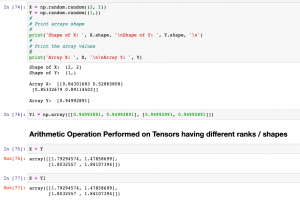

Tensor Broadcasting Explained with Examples

In this post, you will learn about the concepts of tensor broadcasting with the help of Python NumPy examples. Recall that a tensor is a container of data (primarily numerical) and the most fundamental data structure used in Keras and TensorFlow. You may want to check out a related article on tensors, Tensor Explained with Python Numpy Examples. Tensor broadcasting is borrowed from NumPy broadcasting. Broadcasting is a technique used for performing arithmetic operations between NumPy arrays / tensors having different shapes. In this technique, the following is done: As a first step, expand one or both arrays by copying elements appropriately so that after this transformation, the two tensors have the …
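Here is a minimal NumPy sketch of that idea (not the example from the full post). Adding a row of shape `(3,)` to a matrix of shape `(2, 3)` gives the same result as first expanding the row to the matrix's shape and then adding element by element. The array values are made up.

```python
import numpy as np

matrix = np.array([[1, 2, 3],
                   [4, 5, 6]])       # shape (2, 3)
row = np.array([10, 20, 30])         # shape (3,)

# Broadcasting: NumPy conceptually repeats `row` along the first axis.
result = matrix + row

# The same computation with the expansion step written out explicitly.
expanded = np.broadcast_to(row, matrix.shape)   # shape (2, 3), a read-only view
explicit = matrix + expanded

print(result)
print(np.array_equal(result, explicit))
```

In practice NumPy does not physically copy the smaller array; `np.broadcast_to` returns a view, so broadcasting saves memory as well as code.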

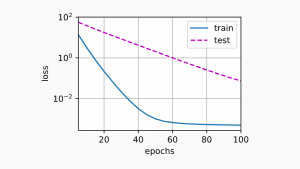

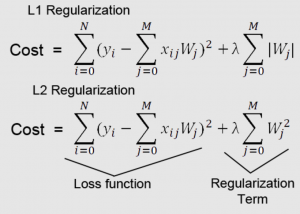

Regularization in Machine Learning: Concepts & Examples

In machine learning, regularization is a technique used to avoid overfitting. Overfitting occurs when a model learns the training data too well and therefore performs poorly on new data. Regularization helps to reduce overfitting by adding constraints to the model-building process. As data scientists, it is of utmost importance that we learn the regularization concepts thoroughly in order to build better machine learning models. In this blog post, we will discuss the concept of regularization and provide examples of how it can be used in practice. What is regularization and how does it work? Regularization in machine learning represents strategies that are used to reduce the generalization or test error of …
