First Principles Thinking using ChatGPT

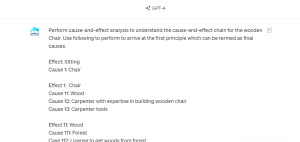

Have you ever wondered why an object such as a chair is shaped the way it is, or why it’s even needed in the first place? What mystery unravels when we dig into the very essence of everyday objects and concepts around us? In a world of well-established beliefs and conventional wisdom, the hunt for innovative answers and for the secrets hidden behind the everyday becomes not just a curiosity but a necessity. This is where first principles thinking comes to the rescue. I have posted a detailed blog on first principles thinking – First principles thinking: Concepts & Examples. In this blog, let’s explore how we can utilize …

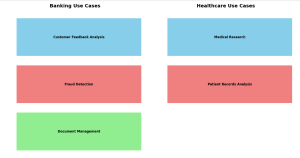

Generative AI Framework for Product Managers: Examples

Ever wondered how you as a product manager can stay ahead in the competitive era fuelled by technological advancements such as generative AI? Are you constantly grappling with the pressure to deliver groundbreaking solutions in line with your business goals? As a product manager, wouldn’t it be revolutionary to have some kind of a playbook that simplifies these challenges? What exactly can generative AI do for modern product managers? Which areas of your daily struggles can it alleviate, and what AI frameworks are best suited for your unique challenges? In this blog, we will dive into the potential of Generative AI vis-a-vis real-life use cases for product managers. Use Cases: …

Problems with Categorical Variables: Examples

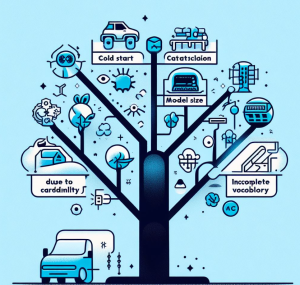

Have you ever encountered unfamiliar words while learning a new language and didn’t know their meanings? Or tried to fit all your belongings into a suitcase, only to realize it’s too full? Or started reading a book series from the third book and felt lost? These scenarios in our daily lives surprisingly resemble some challenges we face with categorical variables in machine learning. Categorical variables, while essential in many datasets, bring with them a unique set of challenges. In this article, we’ll be discussing three major problems associated with categorical features. Let’s explore each with real-life examples and supporting Python code snippets. Incomplete Vocabulary The “Incomplete Vocabulary” problem arises when …

Central Tendency in Machine Learning: Python Examples

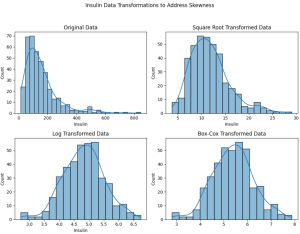

Have you ever wondered why your machine learning model is not performing as expected? Could the “average” behavior of your dataset be misleading your model? How does the “central” or “typical” value of a feature influence the performance of a machine learning model? In this blog, we will explore the concept of central tendency, its significance in machine learning, and the importance of addressing skewness in your dataset. All of this will be demonstrated with the help of Python code examples using a diabetes dataset. We will be working with the diabetes dataset which can be found on Kaggle – Diabetes Dataset. The dataset consists of multiple columns such as …
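To see why the “average” can mislead, here is a minimal sketch comparing the mean and the median on a skewed feature. The values below are made up for illustration and are not taken from the Kaggle diabetes dataset; only the Python standard library is used.

```python
import statistics

# Hypothetical feature values (NOT from the diabetes dataset):
# most readings sit near 100, with one extreme value on the right.
values = [85, 90, 92, 95, 99, 105, 110, 300]

mean_value = statistics.mean(values)      # pulled upward by the outlier
median_value = statistics.median(values)  # middle of the sorted values

print(f"mean={mean_value}, median={median_value}")

# A mean well above the median hints at right (positive) skew.
if mean_value > median_value:
    print("The feature looks right-skewed; the median may be the more typical value.")
```

When the two measures disagree this much, it is usually worth checking the distribution before imputing missing values with the mean.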

Data Analytics for Car Dealers: Actionable Insights

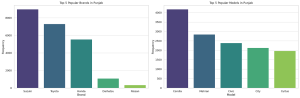

Are you starting a car dealership and wondering how to leverage data to make informed business decisions? In today’s data-driven world, analytics can be the difference between a thriving business and a failing one. This blog aims to provide actionable insights for car dealers, especially those starting a new car dealership, to excel in various business aspects. I will cover inventory management, pricing strategy, marketing and sales, customer service, and risk mitigation, all backed by data analytics. I will continue to update this blog with more methods in time to come. The data used for analysis can be found on Kaggle.com – Ultimate Car Price Prediction Dataset. First and …

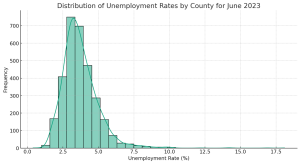

Unemployment Data & Actionable Insights Examples

Unemployment figures often flood the news, painting a broad picture of economic stability or crisis. But have you ever wondered how these rates break down at the local level? Do certain counties (or cities) in different states fare better or worse than the national average, and if so, why? Unemployment is a critical indicator of economic health and social well-being. While national or state-level unemployment rates often make headlines, diving deeper into county-level or city-level data can offer valuable insights for local governments, policymakers, and social organizations. In this blog, we will explore a dataset that provides unemployment rates for various U.S. counties in June 2023. Along the way, …

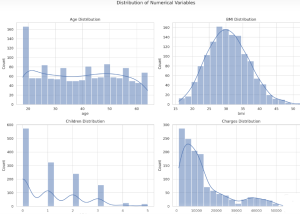

Insurance & Linear Regression Model Example

Ever wondered how insurance companies determine the premiums you pay for your health insurance? Predicting insurance premiums is more than just a numbers game; it’s a task that can impact millions of lives. In this blog, we’ll demystify this complex process by walking you through an end-to-end example of predicting health insurance premium charges using a Python code example. Specifically, we’ll use a linear regression model to predict these charges based on various factors like age, BMI, and smoking status. Whether you’re a beginner in data science or a seasoned professional, this blog will offer valuable insights into building and evaluating regression models. What is Linear Regression? Linear Regression is …

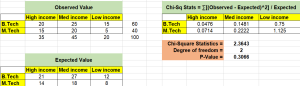

Chi-square test – Formula, Concepts, Examples

Pearson’s Chi-square (χ²) test is a statistical test used to determine whether the distribution of observed data is consistent with the distribution of data expected under a particular hypothesis. The Chi-square test can be used to evaluate the independence of two categorical variables, or to assess the goodness of fit of a given distribution to observed data. In this blog post, we will discuss different types of Chi-square tests, the concepts behind them, and how to perform them using Python / R. As data scientists, it is important to have a strong understanding of the Chi-square test so that we can use it to make informed decisions about …
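As a rough illustration of the test of independence, the sketch below computes the Pearson Chi-square statistic for a small contingency table using only the Python standard library. The table counts are hypothetical, the function name `chi_square_statistic` is introduced here purely for illustration, and no continuity correction is applied.

```python
def chi_square_statistic(observed):
    """Return (statistic, degrees_of_freedom) for a table of observed counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, obs in enumerate(row):
            # Expected count if the row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (obs - expected) ** 2 / expected

    dof = (len(observed) - 1) * (len(observed[0]) - 1)
    return statistic, dof


# Hypothetical 2x2 table: rows are two groups, columns are two outcomes.
table = [[30, 10],
         [20, 40]]

stat, dof = chi_square_statistic(table)
print(f"chi-square={stat:.4f}, dof={dof}")

# 3.841 is the standard critical value for dof=1 at the 0.05 significance level.
reject_independence = stat > 3.841
print("Reject independence at 0.05:", reject_independence)
```

In practice you would likely reach for a statistics library to get the p-value directly; the hand-rolled version is only meant to show where the statistic comes from.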

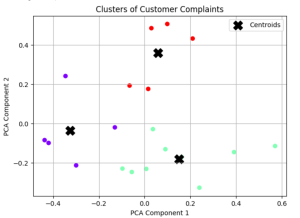

Text Clustering Real-World Applications: Examples

How often have you wondered about the vast amounts of unstructured data around us and its untapped potential? How can businesses sift through thousands of customer reviews, documents, or feedback to derive actionable insights? What if there was a way to automatically group similar pieces of text, helping organizations quickly identify patterns and trends? Enter text clustering. A subset of text analytics, text clustering is an unsupervised machine learning task that divides a set of texts into clusters or groups. This ensures that texts in the same group are more similar to each other than to those in other groups. A powerful tool for deciphering insights from unstructured data, text …

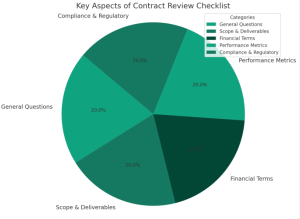

Contract Analysis & Review Checklist: Questions, Examples

Have you ever found yourself knee-deep in contractual jargon, wondering if you’ve missed a critical clause that could cost your organization thousands or even millions? How confident are you that every contract your team signs is optimized for both performance and cost efficiency? If you’re a procurement stakeholder, a category manager, or a contract specialist, these questions are not just hypothetical—they’re the daily challenges you face. In this blog, you will learn about a structured approach to learning, understanding, and reviewing contracts, minimizing risks, and maximizing value based on asking the right kind of questions. We delve into key questions you should be asking, highlight essential clauses to scrutinize, and …

Find Topics of Text Clustering: Python Examples

Have you ever clustered a collection of texts and wondered what predominant topics underlie each group? How can you pinpoint the essence of each cluster comprising a large volume of words? Is there a way to succinctly represent the core topic of each cluster using Python? Text clustering is a powerful technique in natural language processing (NLP) that groups documents into clusters based on their content. Once you’ve clustered your data, a natural follow-up question arises: “What are these clusters about?” In this article, we’ll discuss two different methods to find the dominant topics of text clusters using Python. Meanwhile, check out my post on text clustering – Text Clustering …

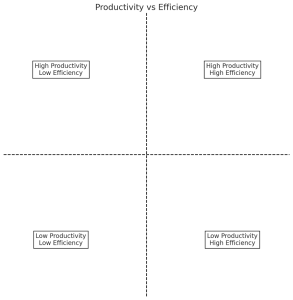

Productivity vs Efficiency: Differences, Examples

If you’ve ever found yourself caught in the whirlwind of tasks and deadlines, you’ve probably asked yourself: “How can I get more done?” or “How can I make better use of my time?” At the core of these questions lie two concepts that are often used interchangeably but are fundamentally different: Productivity and Efficiency. Understanding the nuances between productivity and efficiency can be a game-changer in both your personal and professional life. While both are geared towards improving performance and achieving goals, they focus on different aspects of the work process. Knowing when to prioritize one over the other can mean the difference between spinning your wheels and skyrocketing your …

OpenAI Python API Example for NLP Tasks

Ever wondered how you can leverage the power of OpenAI’s GPT-3 and GPT-3.5 (from Jan 2024 onwards) directly in your Python application? Are you curious about generating human-like text with just a few lines of code? This blog post will walk you through an example Python code snippet that utilizes OpenAI’s Python API for different NLP tasks such as text generation. Check out my other post on how to use the LangChain framework for text generation using OpenAI GPT models. OpenAI Python APIs The OpenAI Python API is an interface that allows you to interact with OpenAI’s language models, including their GPT-3 model. The following are different popular models that you …

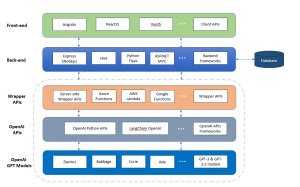

Architecting a Generative AI Platform for GPT-based LLM Apps

Have you ever wondered how to build a scalable Generative AI platform based on OpenAI GPT models that can serve different applications? Are you a data scientist, product manager, or software engineer looking to understand the intricacies of the architecture of such a scalable generative AI platform? This blog aims to demystify the architectural building blocks needed to create a robust GPT-based platform. By the end, you will have a clear roadmap for architecting, designing, and implementing your own platform for GPT-based large language model (LLM) applications. Generative AI Platform Architecture for GPT-based LLM Apps The following is the technology architecture of a generative AI platform which can leverage OpenAI GPT based …

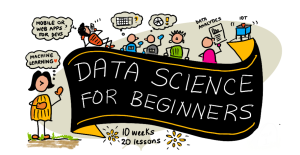

Microsoft’s Free Courses: Data Science, Machine Learning, AI

Are you keen on diving into the world of data science, machine learning, or artificial intelligence? Have you been searching for courses that not only teach the fundamentals but are also free and accessible? Look no further! Microsoft has put together three distinct courses that will cater to your interests and ignite your passion for learning. Data Science for Beginners This course offers an ideal starting point for those new to data science, focusing on the basics and guiding through practical exercises. The course would help you demystify the complex world of data, allowing you to make informed decisions in various fields such as business, healthcare, and more. Each lesson …

Text Clustering Python Examples: Steps, Algorithms

Text clustering has swiftly emerged as a cornerstone in data-driven decision-making across industries. But what exactly is text clustering, and how can it transform the way businesses operate? How does it convert unstructured text into actionable insights? What are the core steps involved in text clustering, and how are they interlinked? What algorithms are pivotal in implementing text clustering effectively? In this blog, we will unravel these questions, diving deep into the systematic steps of text clustering, its underlying algorithms, and real-world examples that bring this technique to life. Whether you’re a product manager seeking to leverage data analytics or a data scientist curious to learn the key steps of text …
