Last updated: 18th Dec, 2023

Among statistical hypothesis tests, the z-test and the t-test are two of the most commonly used. But what is the difference between them, when should you use one rather than the other, and how do the z-statistic and the t-statistic differ? In this blog post, we will answer these questions. We will start by explaining the difference between the z-test and the t-test in terms of their formulas, and then go over some examples so that you can see how each test is used in practice. As a data scientist, it is important to understand the difference so that you can choose the right test for your data. Let’s get started!

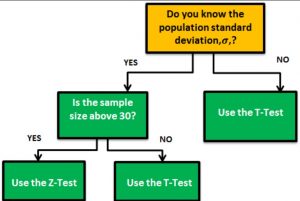

Check out this Z-test vs T-test decision tool which will help you select the most appropriate test out of Z-test and T-test based on your input.

What is Z-Test?

The Z-test is a statistical hypothesis testing technique used to test a null hypothesis in the following scenarios, given that the population standard deviation is known and the data is normally distributed:

Use Z-test: To test whether there is a difference between sample and population

The Z-test can be used to test whether the difference between a sample and a population is statistically significant. This hypothesis is tested using the one-sample Z-test for means, which asks whether the sample plausibly belongs to the population. In this test, the sample mean is compared against the population mean using the sampling distribution of the mean.

For example, suppose a researcher wants to investigate if the average height of students in a particular university differs from the average height of college students across the country. They could collect a random sample of students from the university and calculate the mean height of the sample. They can then conduct a Z-test to determine if the difference between the sample mean and the population mean is statistically significant or not.

The formula for the Z-statistic in the one-sample Z-test for means is given below. The standard error in the formula is the standard deviation of the sampling distribution of the mean, that is, the distribution of all possible sample means that could be obtained from the population. The z-statistic measures how many standard errors the sample mean is from the population mean, and it is used to determine the statistical significance of the difference between the two. Read greater details in this blog, one-sample Z-test for means.

Z = (X̄ – µ) / SE

= (X̄ – µ) / (σ/√n), where SE is the standard error, X̄ is the sample mean, µ is the population mean, σ is the population standard deviation and n is the sample size.
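The formula above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function name `one_sample_z` and the numbers (36 students, mean height 171 cm, national mean 170 cm, known population standard deviation 6 cm) are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z(sample_mean, pop_mean, pop_sd, n):
    """Return the z-statistic and two-tailed p-value for a one-sample z-test."""
    standard_error = pop_sd / sqrt(n)              # SE = sigma / sqrt(n)
    z = (sample_mean - pop_mean) / standard_error  # Z = (x_bar - mu) / SE
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # area in both tails
    return z, p_value


# Hypothetical inputs, not taken from real data.
z, p = one_sample_z(sample_mean=171, pop_mean=170, pop_sd=6, n=36)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 1.00, p = 0.317
```

Here the standard error is 6/√36 = 1, so a 1 cm difference in means corresponds to a z-statistic of 1.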

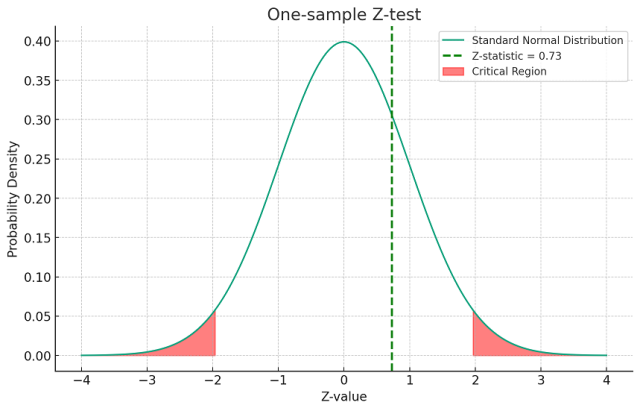

The plot represents a one-sample z-test on sample data, with the following characteristics:

- The red shaded areas show the critical regions at a significance level of 0.05 for a two-tailed test.

- The green dashed line represents the calculated z-statistic, which is approximately 0.73.

- The critical z-value for this two-tailed test is approximately ±1.96.

Since the z-statistic is within the critical values (i.e., it is less than 1.96 and greater than -1.96), we would not reject the null hypothesis based on this z-statistic at the 0.05 significance level.
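The decision described above can be reproduced with the standard library. The helper names `two_tailed_critical_z` and `reject_null` are illustrative, not part of any library; the z-statistic of 0.73 and the 0.05 significance level are the values quoted above.

```python
from statistics import NormalDist


def two_tailed_critical_z(alpha):
    """Critical z-value for a two-tailed test at significance level alpha."""
    return NormalDist().inv_cdf(1 - alpha / 2)


def reject_null(z_stat, alpha=0.05):
    """True if the z-statistic falls in either critical region."""
    return abs(z_stat) > two_tailed_critical_z(alpha)


z_crit = two_tailed_critical_z(0.05)
print(round(z_crit, 2))   # 1.96
print(reject_null(0.73))  # False, so the null hypothesis is not rejected
```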

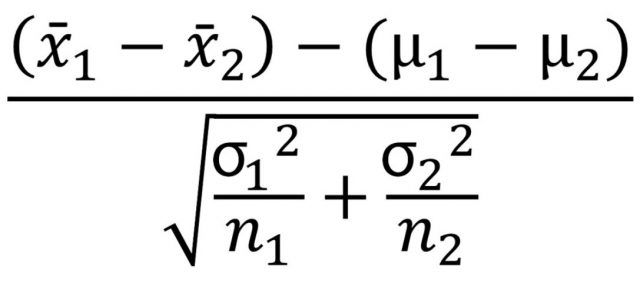

Use Z-Test: To test whether there is difference between two independent samples

The Z-test can be used to test whether the difference between the means of two independent samples is statistically significant. This hypothesis is tested using the two-sample Z-test for means, a statistical test that compares the means of two independent samples. Its null hypothesis states that there is no difference between the means of the two populations from which the samples are drawn. The formula for the Z-statistic is the following. Read further details in this blog, Two-sample Z-test for means.

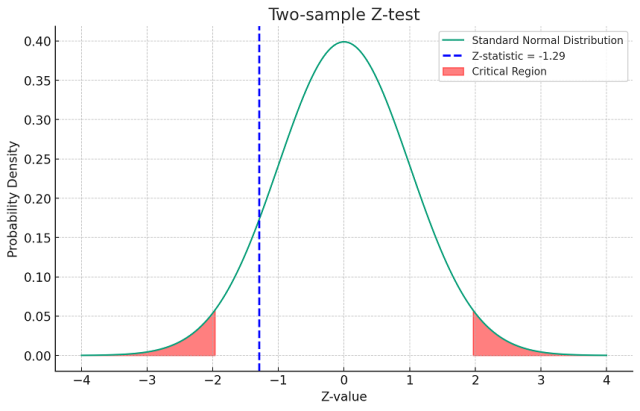

The following plot shows a two-sample z-test, assuming some hypothetical values for these parameters, and marks the critical regions where the null hypothesis would be rejected. We will use a significance level of 0.05 for a two-tailed test.

The plot illustrates a two-sample z-test with the following details:

- The red shaded areas indicate the critical regions for a two-tailed test at a significance level of 0.05.

- The critical z-value for this test is approximately ±1.96.

- The blue dashed line represents the calculated z-statistic for the two-sample test.

Given this z-statistic, we would not reject the null hypothesis since the z-statistic falls outside the critical regions (it is not less than -1.96 or greater than 1.96).
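For readers who prefer code to a plot, here is a minimal sketch of a two-sample z-test. It assumes the standard form of the statistic, the difference in sample means divided by √(σ₁²/n₁ + σ₂²/n₂), with both population standard deviations known. The function name and all input values are hypothetical and are not the values behind the plot.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(mean1, mean2, sd1, sd2, n1, n2):
    """Return the z-statistic and two-tailed p-value for two independent samples.

    sd1 and sd2 are the (known) population standard deviations.
    """
    standard_error = sqrt(sd1**2 / n1 + sd2**2 / n2)
    z = (mean1 - mean2) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical inputs chosen so the arithmetic is easy to check by hand.
z, p = two_sample_z(mean1=52, mean2=50, sd1=8, sd2=6, n1=64, n2=36)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 1.41, p = 0.157
```

With these inputs the z-statistic lies between -1.96 and 1.96, so the null hypothesis would not be rejected at the 0.05 level.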

Use Z-Test: To test Hypotheses related to Proportions

The Z-test can be used to test hypotheses related to proportions as well.

- There is no difference between the sample proportion and the hypothesized population proportion. This hypothesis can be tested using the one-sample Z-test for proportion. Greater details can be read in this blog, one-sample Z-test for proportion.

- There is no difference between the proportions belonging to two different populations. This hypothesis can be tested using the two-sample Z-test for proportions. Greater details can be read in this blog, two-sample Z-test for proportions.
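Both proportion tests can be sketched as follows. The formulas used are the usual textbook ones (standard error from the hypothesized proportion in the one-sample case, and from the pooled proportion in the two-sample case); the function names and the counts are hypothetical.

```python
from math import sqrt


def one_sample_proportion_z(successes, n, p0):
    """z-statistic comparing a sample proportion with a hypothesized proportion p0."""
    p_hat = successes / n
    standard_error = sqrt(p0 * (1 - p0) / n)  # SE under the null hypothesis
    return (p_hat - p0) / standard_error


def two_sample_proportion_z(x1, n1, x2, n2):
    """z-statistic comparing two sample proportions using the pooled proportion."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / standard_error


# Hypothetical counts: 60 successes out of 100 against p0 = 0.5,
# and 60/100 in one group against 50/100 in another.
z_one = one_sample_proportion_z(successes=60, n=100, p0=0.5)
z_two = two_sample_proportion_z(x1=60, n1=100, x2=50, n2=100)
print(round(z_one, 2), round(z_two, 2))
```

Either statistic is then compared with the critical z-value (±1.96 for a two-tailed test at the 0.05 level), exactly as in the z-tests for means.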

What is T-Test?

The T-test is a statistical hypothesis testing technique used to test a null hypothesis in the following scenarios, given that the population standard deviation is unknown and the data is normally distributed. It is typically applied when the sample size is small (less than 30).

Use T-Test: To test whether there is a difference between sample and population

The one-sample t-test for means tests the null hypothesis that there is no difference between the sample mean and the population mean when the population standard deviation is unknown and has to be estimated from the sample. This is very similar to the one-sample Z-test for means. Greater details can be read in this blog, one-sample t-test for means. The formula for the t-statistic looks like the following. Note that the sample mean is compared with the population mean, as in the one-sample Z-test. The difference lies in the standard error, which is calculated as the ratio of the sample standard deviation to the square root of the sample size.

T = (X̄ – μ) / SE

= (X̄ – μ) / (S/√n), where SE is the standard error, X̄ is the sample mean, μ is the population mean, S is the sample standard deviation and n is the sample size. Note the difference between the Z-statistic and the T-statistic: the one-sample Z-test uses the population standard deviation σ, while the one-sample T-test uses the sample standard deviation S.
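A minimal sketch of the one-sample t-statistic, using only the standard library, is shown below. The function name and the data are hypothetical. Because the standard library has no t-distribution, the critical value 2.365 (two-tailed, 0.05 level, 7 degrees of freedom) is taken from a standard t-table rather than computed.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, pop_mean):
    """Return the t-statistic and degrees of freedom for a one-sample t-test."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev() is the sample SD (n - 1 denominator)
    t = (mean(sample) - pop_mean) / standard_error
    return t, n - 1


# Hypothetical measurements tested against a hypothesized mean of 4.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
t, df = one_sample_t(sample, pop_mean=4)

T_CRITICAL = 2.365  # t-table value for df = 7, two-tailed, alpha = 0.05
print(f"t = {t:.2f}, df = {df}, reject H0: {abs(t) > T_CRITICAL}")
# t = 1.32, df = 7, reject H0: False
```

Note that the only change from the z-statistic is the standard deviation: it comes from the sample itself, and the result is compared with a t-distribution critical value that depends on the degrees of freedom.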

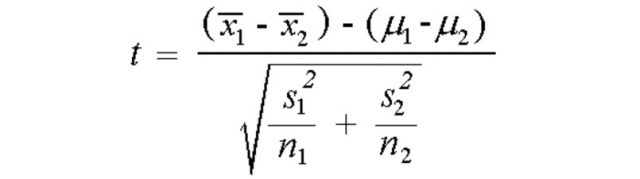

Use T-Test: To test whether there is difference between two independent samples

The two-sample t-test for independent samples tests the null hypothesis that there is no difference between the means of the two samples when the population standard deviations are unknown. Different formulas apply depending on whether the variances of the two populations are equal. When the population variances are assumed equal, the pooled variance is used to calculate the T-statistic; when they are unequal, each sample's own variance is used instead (Welch's t-test). Read further details about the two-sample t-test for independent samples in this blog, two-samples t-test for independent samples: formula and examples. Note the difference between the formulas for the two-sample Z-test for means and the two-sample t-test for means in the respective blogs. The formula for the two-sample t-test for independent samples, given that the population variances are equal, is the following:

T = (X̄₁ – X̄₂) / (Sp × √(1/n₁ + 1/n₂)), where Sp² = ((n₁ – 1)S₁² + (n₂ – 1)S₂²) / (n₁ + n₂ – 2) is the pooled variance, X̄₁ and X̄₂ are the sample means, S₁ and S₂ are the sample standard deviations, and n₁ and n₂ are the sample sizes. The statistic has n₁ + n₂ – 2 degrees of freedom.
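The two variants of the independent two-sample t-test can be sketched side by side. This is an illustration only: the function names and data are hypothetical, the pooled version assumes equal population variances, and the unequal-variance version uses Welch's statistic with the Welch-Satterthwaite approximation for the degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample1, sample2):
    """t-statistic and degrees of freedom assuming equal population variances."""
    n1, n2 = len(sample1), len(sample2)
    s1_sq, s2_sq = variance(sample1), variance(sample2)  # sample variances
    pooled_var = ((n1 - 1) * s1_sq + (n2 - 1) * s2_sq) / (n1 + n2 - 2)
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(sample1) - mean(sample2)) / standard_error, n1 + n2 - 2


def welch_t(sample1, sample2):
    """t-statistic and approximate degrees of freedom for unequal variances."""
    n1, n2 = len(sample1), len(sample2)
    v1, v2 = variance(sample1) / n1, variance(sample2) / n2
    t = (mean(sample1) - mean(sample2)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Tiny hypothetical samples, only to make the arithmetic traceable.
group_a = [5, 7, 9]
group_b = [1, 2, 3]
t_pooled, df_pooled = pooled_t(group_a, group_b)
t_welch, df_welch = welch_t(group_a, group_b)
print(round(t_pooled, 3), df_pooled)
print(round(t_welch, 3), round(df_welch, 2))
```

With equal sample sizes the two statistics coincide, and only the degrees of freedom differ; the gap between the two approaches grows when the sample sizes and variances are both unequal.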

Differences between Z-Test & T-Test

The following represents the differences between Z-Test and T-Test:

| Feature | Z-test | T-test |
|---|---|---|
| Definition | A Z-test is a statistical test used to determine whether a sample mean differs from a population mean, or whether two population means differ, when the variances are known and the sample size is large. | A T-test is a statistical test used to determine whether there is a significant difference between a sample mean and a population mean, or between the means of two groups (independent or paired), when the variances are unknown. |
| Sample Size | Typically used for larger sample sizes, usually over 30. | Often used for smaller sample sizes, less than 30. |
| Population Variance | Known | Unknown |
| Distribution | Normal distribution | T-distribution, which varies depending on the degrees of freedom. |
| Use Case | Used when data is normally distributed and the sample size is large. | Used when the population standard deviation is unknown, especially when the sample size is small, which makes the sample standard deviation a less reliable estimate. |
| Types | One-sample Z-test, Two-sample Z-test. | One-sample T-test, Two-sample T-test, Paired T-test. |
| Standard Error | Uses population standard deviation. | Uses sample standard deviation. |
| Applicability | More suited for hypothesis testing with large sample sizes and known population variances. | More versatile, can be used for both small and large sample sizes with unknown population variances. |
| Statistics | The z-statistic is used. | The t-statistic is used. |
| Confidence intervals | Confidence intervals for large samples can be constructed using Z-scores, which are more precise with larger data. | T-distribution provides a more accurate confidence interval for smaller samples, important in cases like cross-validation where sample sizes are limited. |
| Assumptions | Assumes the sample mean is approximately normally distributed, which holds for normal data or for large samples. | Assumes the data is approximately normally distributed; the test is fairly robust to moderate departures from normality, especially as the sample size grows. |

When to use T-test vs Z-test?

The following decision flow can be used to decide whether to use the Z-test or the T-test:

- Known Population Standard Deviation (σ):

  - If the sample size is above 30 and the data is normally distributed, use the Z-test.

  - If the data is not normally distributed, consider using nonparametric tests.

- Unknown Population Standard Deviation:

  - If the sample size is not large enough for the central limit theorem to apply, use the T-test.

  - If the sample is large, but the central limit theorem cannot be assumed due to skewed distribution or outliers, use the T-test or nonparametric tests.

  - If the data is paired (such as pre-test vs. post-test), use the paired T-test.

  - If the data is not paired and represents two independent samples, use the independent T-test.

The above assumes that other conditions for the tests (like the independence of observations) are met and that nonparametric tests are considered when the distribution assumptions are not satisfied.
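The decision flow can also be written as a small function. This is a rough sketch rather than a definitive rule: the function name `choose_test`, its parameters, and the returned strings are all hypothetical, and the threshold of 30 is the rule of thumb used in this post. Combinations that the flow does not mention are reported as such instead of being guessed.

```python
def choose_test(sigma_known, n, approx_normal, design="one-sample"):
    """Suggest a test following the decision flow described in this post.

    design is one of "one-sample", "two-sample" or "paired" (illustrative labels).
    """
    if sigma_known:
        if not approx_normal:
            return "nonparametric test"
        if n > 30:
            return "z-test"
        # Known sigma with a small normal sample is not listed in the flow.
        return "not covered by the flow"

    # Population standard deviation unknown from here on.
    if n > 30 and not approx_normal:
        return "t-test or nonparametric test"
    if design == "paired":
        return "paired t-test"
    if design == "two-sample":
        return "independent two-sample t-test"
    return "one-sample t-test"


print(choose_test(sigma_known=True, n=200, approx_normal=True))    # z-test
print(choose_test(sigma_known=False, n=25, approx_normal=True))    # one-sample t-test
print(choose_test(sigma_known=False, n=15, approx_normal=True, design="paired"))  # paired t-test
```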

Z-test vs T-test Examples

Understanding when to use a Z-test versus a T-test in statistical analysis is crucial for accurate results. Here are some examples:

- Z-Test Examples:

  - Imagine a large shoe company wants to assess if the average foot size of their adult male customers has changed from the known national average of 10.5 (U.S. size) with a known standard deviation. They sample 200 customers. A Z-test is appropriate here due to the large sample size and known population standard deviation.

  - A researcher is studying the effectiveness of a national literacy program. They have a large sample of 500 students and the national literacy rate’s standard deviation is known. To determine if the literacy rate in the sample significantly differs from the national average, a Z-test is suitable due to the large sample size and known population standard deviation.

- T-Test Examples:

  - A small startup is testing a new energy drink and wants to see if it significantly alters energy levels. They do not have the population standard deviation and conduct a study with 25 participants. Here, a T-test is suitable due to the small sample size and the unknown population standard deviation.

  - A local gym conducts a study with 15 of its members to test the effectiveness of a new workout routine on weight loss. The population standard deviation is not known. A T-test is the best choice here, as it is designed for smaller samples where the population standard deviation is unknown.

Frequently Asked Questions (FAQs)

The following are some of the most common FAQs when dealing with T-test and Z-test:

- When to use t-score or t-statistic vs z-score or z-statistic?

  - Use a t-statistic in hypothesis testing when dealing with small sample sizes (less than 30) and an unknown population standard deviation. Opt for a z-statistic when the sample size is large (30 or more) and the population standard deviation is known. T-tests adapt better to small samples, while z-tests are suitable for larger, normally distributed datasets.

- Which test to use: z-test vs t-test vs chi-square?

  - Choose a Z-test for large samples (over 30) with known population standard deviation. Use a T-test for smaller samples (under 30) or when the population standard deviation is unknown. Opt for a Chi-square test for categorical data to examine the relationship between two variables or the difference between observed and expected frequencies. Here is a detailed blog on this topic: Z-test vs T-test vs Chi-square test

- When to use one sample z-test vs t-test?

  - Use a one-sample Z-test when the sample size is large (over 30) and the population standard deviation is known. Opt for a one-sample T-test with smaller samples (under 30) or when the population standard deviation is unknown. Both tests assess if the sample mean significantly differs from a known mean.

- When to use z-test vs t-test vs f-test?

  - Use a T-test for small samples or unknown population standard deviation to compare means. Choose a Z-test for large samples with known standard deviation. Apply an F-test to compare variances between two or more groups; it is often used in ANOVA to assess if group means are significantly different. A detailed blog will be posted on this topic in the near future.

Summary

The z-test and t-test are statistical hypothesis tests that help determine whether there is a difference between a sample and a population, or between two populations, in terms of their means; the z-test can also be applied to proportions. The z-statistic is used to test the null hypothesis when the population standard deviation is known, the data is normally distributed, and the sample size is large enough (greater than 30). T-tests are used when the population standard deviation is unknown, the data is normally distributed, and the sample size is small (less than 30).

