Category Archives: Machine Learning

Machine Learning – Insurance Applications Use Cases

In this post, you will learn about some of the following insurance use cases where machine learning or AI-powered solutions can be applied: insurance advice to consumers and agents, claims processing, fraud protection, and risk management. AI-powered Insurance Advice to Consumers & Agents. Insurance Advice to Consumers: Machine learning models could be trained on past data to recommend tailor-made products based on consumer profiles and related attributes, such as past queries. Such models could be integrated with chatbot applications (Google Dialogflow, Amazon Lex, etc.) to create intelligent digital agents (bots/apps) which could understand the intent of the user, collect appropriate data from the user (using prompts) …
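One simple way to picture profile-based product recommendation is a k-nearest-neighbours sketch: find past consumers whose profiles are most similar to the new consumer and recommend the product they chose most often. This is an illustrative technique chosen for the example, not necessarily the model the post describes; the feature encoding, product names and historical rows below are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical historical data: (consumer profile, product purchased).
# Features are assumed to be numeric and pre-scaled:
# [age / 100, has_children (0 or 1), annual_income / 100k]
HISTORY = [
    ([0.25, 0.0, 0.40], "term_life_basic"),
    ([0.30, 1.0, 0.60], "family_health_plus"),
    ([0.35, 1.0, 0.80], "family_health_plus"),
    ([0.60, 0.0, 0.90], "retirement_annuity"),
    ([0.65, 0.0, 0.70], "retirement_annuity"),
]


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recommend(profile, history=HISTORY, k=3):
    """Recommend the product bought most often by the k most similar past consumers."""
    neighbours = sorted(history, key=lambda row: euclidean(profile, row[0]))[:k]
    votes = Counter(product for _, product in neighbours)
    return votes.most_common(1)[0][0]


print(recommend([0.32, 1.0, 0.70]))  # family_health_plus
print(recommend([0.62, 0.0, 0.85]))  # retirement_annuity
```

In a chatbot integration, the profile vector would be assembled from the data the bot collects through its prompts before calling the recommender.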

AWS re:Invent – Top 7 New Machine Learning Services

In this post, you will learn about some great new and updated machine learning services which were launched at the AWS re:Invent conference in November 2018. My personal favorite is Amazon Textract. The services covered are Amazon Personalize, Amazon Forecast, Amazon Textract, Amazon DeepRacer, Amazon Elastic Inference, AWS Inferentia, and the updated Amazon SageMaker. Amazon Personalize for Personalized Recommendations: Amazon Personalize is a managed machine learning service from Amazon whose primary goal is to democratize recommendation systems, helping both smaller and larger companies quickly get up and running with a recommendation system and thereby create a great user experience. Here is the link to the Amazon Personalize Developer Guide. The following are some of the highlights: Helps personalize the user experience using some of …

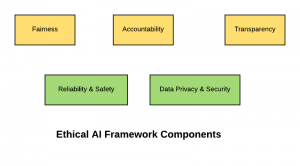

Guidelines for Creating an Ethical AI Framework

In this post, you will learn how to create an Ethical AI Framework which could be used in your organization. If you are looking for an Ethical AI RAG matrix created in Excel, please drop me a message. The following are the key aspects of ethical AI which should be considered when creating the framework: fairness, accountability, transparency, reliability & safety, and data privacy and security. Fairness: AI/ML-powered solutions should be designed, developed and used with respect for fundamental human rights and in accordance with the fairness principle. The model design considerations should include not only the impact on individuals but also the collective impact on groups and on society at large. The following represents some …

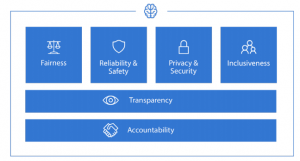

Ethical AI Principles – IBM, Google, Intel, Microsoft

In this post, you will get a quick glimpse of the ethical AI principles of companies such as IBM, Intel, Google, and Microsoft. The following are the ethical AI principles of the companies mentioned above. IBM Ethical AI Principles: The following are IBM's six ethical AI principles: Accountability: AI designers and developers are responsible for considering AI design, development, decision processes, and outcomes. Value alignment: AI should be designed with the norms and values of your user group in mind. Explainability: AI should be designed for humans to easily perceive, detect, and understand its decision process and its predictions/recommendations. This is also, at times, referred to as the interpretability of AI. Simply …

IEEE Bookmarks on Ethical AI Considerations

In this post, you will find bookmarks on ethical AI from the IEEE (Institute of Electrical and Electronics Engineers). Those starting on the journey of ethical AI will find these bookmarks very useful. ML researchers and data scientists would also want to learn about ethical AI practices so that they can apply them while building and testing models. The following are some bookmarks on ethical AI considerations from the IEEE: The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: an IEEE initiative for setting up new standards and solutions, certifications and codes of conduct, and consensus building for the ethical implementation of intelligent technologies to ensure that these technologies are …

AI-powered Project Baseline to Map Human Health

In this post, you will learn about the technologies and data gathering strategy for Project Baseline, an initiative by Google. Project Baseline is an IoT-based, AI-powered initiative to map human health. Different kinds of machine learning algorithms, including deep learning, would be used to understand different aspects of human health and to make predictions for overall health improvements and precautionary measures. This would require a very large volume of data to be gathered and processed before being fed into AI models. The following represents the data gathering strategies for Project Baseline: diagnostic tests covering blood-related tests; specialized tests such as ECG, chest X-ray, and eyesight checks; doctor examinations leading to the collection of data related to health …

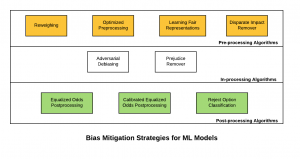

Machine Learning Models – Bias Mitigation Strategies

In this post, you will learn about some of the bias mitigation strategies which could be applied in the ML model development lifecycle (MDLC) to achieve discrimination-aware machine learning models. The primary objective is to achieve a high-accuracy model while ensuring that the model is less discriminatory with respect to sensitive/protected attributes. In simple words, the output of the classifier should not correlate with protected or sensitive attributes. Building such ML models becomes a multi-objective optimization problem. The quality of the classifier is measured by its accuracy and by the discrimination it makes on the basis of sensitive attributes: the more accurate, the better, and the less discriminatory (based on sensitive attributes), the better. The following are some of …
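To make the multi-objective framing concrete, here is a minimal sketch that scores a classifier on both objectives and folds them into a single number. It assumes a binary sensitive attribute (1 and 0), uses the statistical parity difference (the gap in positive-prediction rates between groups) as the discrimination measure, and treats the weight `lam` as a hypothetical trade-off parameter; other discrimination measures and optimization schemes are equally valid.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def discrimination(y_pred, sensitive):
    """Statistical parity difference: |P(pred=1 | s=1) - P(pred=1 | s=0)|."""
    group1 = [p for p, s in zip(y_pred, sensitive) if s == 1]
    group0 = [p for p, s in zip(y_pred, sensitive) if s == 0]
    return abs(sum(group1) / len(group1) - sum(group0) / len(group0))


def fairness_aware_score(y_true, y_pred, sensitive, lam=1.0):
    """Scalarize the two objectives: reward accuracy, penalize discrimination."""
    return accuracy(y_true, y_pred) - lam * discrimination(y_pred, sensitive)


# Hypothetical labels, sensitive attribute and two candidate models' predictions.
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
sensitive = [1, 1, 1, 1, 0, 0, 0, 0]
model_a = [1, 1, 0, 0, 0, 0, 0, 0]  # accurate, but favors group s=1
model_b = [1, 0, 0, 0, 1, 0, 0, 0]  # equally accurate, equal positive rates

print(fairness_aware_score(y_true, model_a, sensitive))  # 0.25
print(fairness_aware_score(y_true, model_b, sensitive))  # 0.75
```

Both candidates have the same accuracy (0.75), so the discrimination term decides: model B is preferred because its positive-prediction rate is the same for both groups.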

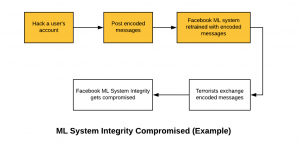

Facebook Machine Learning Tool to Check Terrorist Posts

In this post, you will learn details of Facebook's machine learning tool for containing online terrorist propaganda. The following topics are discussed in this post: the high-level design of Facebook's machine learning solution for blocking inappropriate posts, and the threat model (attack vector) for Facebook's ML-powered solution. ML Solution Design for Blocking Inappropriate Posts: The following is the workflow Facebook uses for handling inappropriate messages posted by terrorist organizations/users. Train/test a text classification ML/DL model to flag posts as inappropriate if they are found to contain words representing terrorist propaganda. In production, block the messages which the model predicts as inappropriate with very high confidence. Flag the messages for processing by data analysts if the …
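The production step of this workflow can be sketched as a routing function over the classifier's output. This is a minimal illustration, assuming the model emits a probability that a post is inappropriate; the threshold values and action names are hypothetical. The excerpt is cut off before it states when messages are flagged for analysts, so the review band below is purely an assumption.

```python
from dataclasses import dataclass

# Hypothetical thresholds. The excerpt only says "very high confidence" for blocking;
# REVIEW_THRESHOLD is an assumed criterion for sending posts to human analysts.
BLOCK_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Decision:
    post_id: str
    score: float  # model's probability that the post is inappropriate
    action: str   # "block", "flag_for_review" or "allow"


def route(post_id: str, score: float) -> Decision:
    """Map the classifier's score for one post to a moderation action."""
    if score >= BLOCK_THRESHOLD:
        action = "block"            # very high confidence: block automatically
    elif score >= REVIEW_THRESHOLD:
        action = "flag_for_review"  # uncertain: queue for data analysts (assumed rule)
    else:
        action = "allow"
    return Decision(post_id, score, action)


for pid, s in [("p1", 0.99), ("p2", 0.70), ("p3", 0.10)]:
    print(route(pid, s))
```

Keeping the thresholds as named constants makes it easy to tune the trade-off between automatic blocking and analyst workload.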

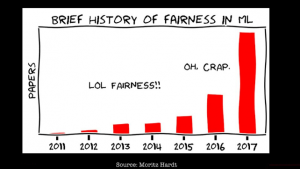

ML Model Fairness Research from IBM, Google & Others

In this post, you will learn details (brief information and related URLs) of some of the research work done on AI/machine learning model ethics and fairness (bias) at companies such as Google, IBM, Microsoft, and others. This post will be updated from time to time to cover the latest projects/research work happening in various companies. You may want to bookmark the page to check for the latest details. Before we go ahead, it may be worth visualizing the great deal of research happening in the field of machine learning model fairness, represented by the cartoon below, which is taken from CS 294: Fairness in Machine Learning, a course taught at UC Berkeley. IBM Research for ML Model Fairness …

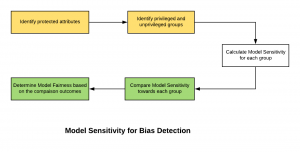

Fairness Metrics – ML Model Sensitivity for Bias Detection

There are many different ways in which the fairness of machine learning (ML) models could be determined. Some of them are statistical parity, the relative significance of features, model sensitivity, etc. In this post, you will learn how model sensitivity could be used to determine model fairness, or the bias of a model towards the privileged or unprivileged group. The following are some of the topics covered in this post: How could model sensitivity be used to determine model bias or fairness? Example – Model Sensitivity & Bias Detection. How could Model Sensitivity determine Model Bias or Fairness? Model sensitivity could be used as a fairness metric to measure model bias towards the privileged or unprivileged group. The higher the …
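As a minimal sketch, assuming "sensitivity" carries its usual classification meaning of true positive rate, TP / (TP + FN), the metric can be computed separately for each group so the gap between the privileged and unprivileged groups can be inspected. Binary labels are assumed, and the group names and data below are hypothetical.

```python
def sensitivity(y_true, y_pred):
    """True positive rate TP / (TP + FN); NaN if there are no actual positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0:
        return float("nan")
    return tp / (tp + fn)


def sensitivity_by_group(y_true, y_pred, groups):
    """Compute sensitivity separately for each distinct group value."""
    result = {}
    for g in set(groups):
        idx = [i for i, x in enumerate(groups) if x == g]
        result[g] = sensitivity([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return result


# Hypothetical data: five instances from each group.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 1]
groups = ["privileged"] * 5 + ["unprivileged"] * 5

by_group = sensitivity_by_group(y_true, y_pred, groups)
print(by_group)  # privileged: 0.75, unprivileged: 0.25
print(by_group["privileged"] - by_group["unprivileged"])  # 0.5
```

Here the model catches 3 of 4 actual positives in the privileged group but only 1 of 4 in the unprivileged group; a gap this large would suggest the model is biased in favor of the privileged group.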

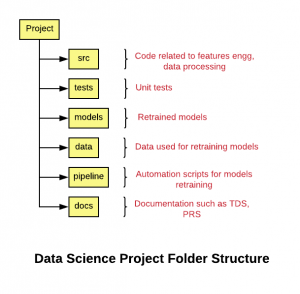

Data Science Project Folder Structure

Have you been looking for a project folder structure or template for storing the artifacts of your data science or machine learning project? Once teams are working on a particular data science project and a need arises for governance and automation of different aspects of the project using a build automation tool such as Jenkins, one would feel the need to store the artifacts in well-structured project folders. In this post, you will learn about a folder structure you could use to store the files/artifacts of your data science projects. Folder Structure of Data Science Project: The following represents the folder structure for your data science project. Note that the project structure is created keeping in mind integration with build and automation jobs. …

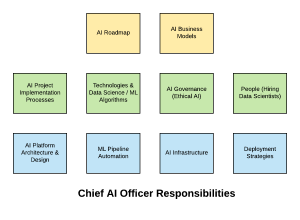

Job Description – Chief Artificial Intelligence (AI) Officer

Whether your organization needs a chief artificial intelligence (AI) officer is a topic on which opinions differ. However, the primary idea is to have someone who heads or leads the AI initiatives across the organization. The designation could be Chief AI Officer, Vice-President (VP) – AI Research, Chief Analytics Officer, Chief Data Officer, AI COE Head, or maybe Chief Data Scientist. One must understand that building AI/machine learning models and deploying them in production is just one part of the whole story. Aspects related to AI governance (ethical AI), automation of the AI/ML pipeline, infrastructure management vis-a-vis the usage of cloud services, unique project implementation methodologies, etc., become of prime importance once you are done hiring data scientists for …

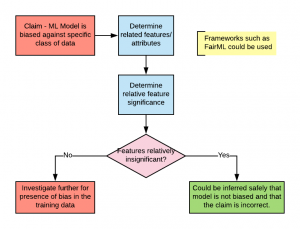

Bias Detection in Machine Learning Models using FairML

Detecting bias in machine learning models has become of great importance in recent times. Bias in a machine learning model is about the model making predictions which tend to place certain privileged groups at a systematic advantage and certain unprivileged groups at a systematic disadvantage. The primary reason for unwanted bias is the presence of biases in the training data, due to either prejudice in labels or under-sampling/over-sampling of data. Especially in the banking & finance and insurance industries, customers/partners and regulators are asking businesses tough questions about the initiatives they have taken to avoid and detect bias. Take the example of a system using a machine learning model to …
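Because unwanted bias is attributed here mainly to the training data (prejudiced labels or under/over-sampling), a quick first check is to compare each group's share of the training set with its rate of favorable labels. This is a minimal sketch in plain Python, not the FairML API; the group names and data are hypothetical, and label 1 is assumed to be the favorable outcome.

```python
from collections import defaultdict


def group_stats(labels, groups):
    """Per group: share of training rows and rate of favorable (1) labels."""
    counts = defaultdict(int)
    positives = defaultdict(int)
    for y, g in zip(labels, groups):
        counts[g] += 1
        positives[g] += y
    n = len(labels)
    return {
        g: {"share": counts[g] / n, "positive_rate": positives[g] / counts[g]}
        for g in counts
    }


# Hypothetical training labels: group "B" is under-sampled (2 of 10 rows)
# and receives the favorable label less often than group "A".
labels = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]
groups = ["A"] * 8 + ["B"] * 2

stats = group_stats(labels, groups)
print(stats)  # A: share 0.8, positive_rate 0.75; B: share 0.2, positive_rate 0.5
```

A small share points to under-sampling, while a large gap in positive rates may indicate prejudice in the labels; both are signals to investigate before training, not proof of bias on their own.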

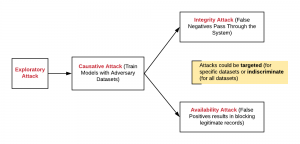

Security Attacks Analysis of Machine Learning Models

Have you wondered what it would be like to have your machine learning (ML) models come under security attack? In other words, what if your machine learning models get hacked? Have you thought through how to check/monitor security attacks on your AI models? As a data scientist/machine learning researcher, it would be good to know some of the scenarios related to security/hacking attacks on ML models. In this post, you will learn about some of the following aspects related to security attacks (hacking) on machine learning models: examples of security attacks on ML models, what hacking machine learning (ML) models means, different types of security attacks, and monitoring security attacks. Examples of Security Attacks on ML Models: Most of …

JupyterLab & Jupyter Notebook Cheat Sheet Commands

Are you starting to create machine learning models (using Python) with JupyterLab or Jupyter Notebook? This post lists some commands which are very useful for a beginner data scientist getting started with JupyterLab notebooks for building machine learning models. Notebook Operations: The following commands help to perform operations on the notebook. Ctrl + S: save the notebook; Ctrl + Q: close the notebook; Enter: while on any cell, press Enter to enter edit mode. Cell Operations: The following commands help with performing operations on cells: J: select the cell below the current cell; this command would be used to go through cells below the …

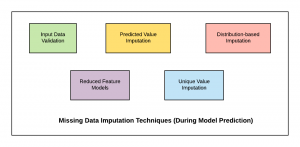

Missing Data Imputation Techniques in Machine Learning

Have you come across the problem of handling missing data/values for features in machine learning (ML) models at prediction time? This is different from handling missing data for features during the training/testing phase of ML models. Data scientists are expected to come up with an appropriate strategy to handle missing data both during the model training/testing phase and at model prediction time (runtime). In this post, you will learn about some of the following techniques which could be used to handle missing data, or replace it with appropriate values, at model prediction time: validating input data before feeding it into the ML model; discarding data instances with missing values; predicted value imputation; distribution-based imputation; unique value imputation; and reduced feature models. Below is the diagram …
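A few of the listed strategies (validation, discarding, unique value imputation and distribution-based imputation) are simple enough to sketch directly. The functions below are a minimal illustration, assuming each incoming instance is a dict mapping feature names to values, with `None` marking a missing value; the sentinel value and the `training_values` lookup are hypothetical, and predicted value imputation and reduced feature models are not shown.

```python
import random

SENTINEL = -999.0  # hypothetical "unique value", assumed never to occur in real data


def has_missing(row):
    """Validation step: True if any feature value is missing (None)."""
    return any(v is None for v in row.values())


def discard_incomplete(rows):
    """Discard instances that have any missing feature."""
    return [r for r in rows if not has_missing(r)]


def unique_value_impute(row, sentinel=SENTINEL):
    """Replace every missing feature with a fixed sentinel value."""
    return {k: (sentinel if v is None else v) for k, v in row.items()}


def distribution_impute(row, training_values, rng=random):
    """Replace each missing feature with a value sampled from that feature's training data."""
    return {k: (rng.choice(training_values[k]) if v is None else v) for k, v in row.items()}


# Hypothetical runtime input and training-time feature values.
rows = [{"age": 42.0, "income": None}, {"age": 35.0, "income": 55000.0}]
training_values = {"age": [30.0, 40.0, 50.0], "income": [50000.0, 60000.0]}

print(discard_incomplete(rows))        # keeps only the complete second row
print(unique_value_impute(rows[0]))    # income replaced by -999.0
print(distribution_impute(rows[0], training_values, random.Random(0)))
```

Note that unique value imputation only makes sense if the model also saw the same sentinel during training; otherwise the sentinel is an out-of-distribution input at prediction time.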
