Category Archives: Generative AI

Procurement Analytics Use Cases Examples

Last updated: 26th Nov, 2023
Procurement analytics applications have seen tremendous growth in the last few years. With so much data available, rapid advances in data analytics and related technologies, and the push for digital transformation across procurement organizations, it’s important to know how procurement analytics can help you make better business decisions. This blog covers procurement analytics and key use case examples from advanced analytics fields such as machine learning, AI, and generative AI. It is aimed at business stakeholders such as category managers, sourcing managers, supplier relationship managers, business analysts/product managers, and data scientists who want to implement these use cases using machine learning. The use cases around data-driven decision …

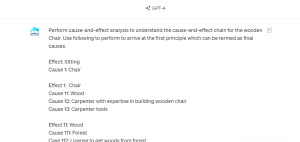

First Principles Thinking using ChatGPT

Have you ever wondered why an object such as a chair is shaped the way it is, or why it’s needed in the first place? What do we discover when we dig into the very essence of everyday objects and concepts around us? In a world full of well-established beliefs and conventional wisdom, searching for innovative answers and uncovering the principles hidden behind everyday things becomes not just a curiosity but a necessity. This is where first principles thinking comes to the rescue. I have posted a detailed blog on the topic, First principles thinking: Concepts & Examples. In this blog, let’s explore how we can utilize …

Generative AI Framework for Product Managers: Examples

Ever wondered how you, as a product manager, can stay ahead in a competitive era fuelled by technological advancements such as generative AI? Are you constantly grappling with the pressure to deliver groundbreaking solutions aligned with your business goals? Wouldn’t it be revolutionary to have a playbook that simplifies these challenges? What exactly can generative AI do for modern product managers? Which of your daily struggles can it alleviate, and which AI frameworks are best suited to your unique challenges? In this blog, we will dive into the potential of generative AI through real-life use cases for product managers. Use Cases: …

OpenAI Python API Example for NLP Tasks

Ever wondered how you can leverage the power of OpenAI’s GPT-3 models (and, from January 2024 onwards, GPT-3.5 models) directly in your Python application? Are you curious about generating human-like text with just a few lines of code? This blog post walks you through an example Python code snippet that uses OpenAI’s Python API for different NLP tasks such as text generation. Check out my other post on how to use the LangChain framework for text generation with OpenAI GPT models. OpenAI Python APIs: The OpenAI Python API is an interface that allows you to interact with OpenAI’s language models, including the GPT-3 models. The following are different popular models that you …
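As a rough illustration of such a call, here is a minimal sketch of a text-generation request. It assumes the official `openai` Python package (v1.x), an `OPENAI_API_KEY` environment variable, and the `gpt-3.5-turbo` chat model; the helper names `build_messages` and `generate_text` are hypothetical, not part of the library. The client is passed in as a parameter so the logic can be exercised without network access.

```python
from typing import Any


def build_messages(task: str, text: str) -> list[dict[str, str]]:
    """Wrap an NLP task instruction and the input text as chat messages."""
    return [
        {"role": "system", "content": "You are a helpful NLP assistant."},
        {"role": "user", "content": f"{task}\n\n{text}"},
    ]


def generate_text(client: Any, task: str, text: str,
                  model: str = "gpt-3.5-turbo", temperature: float = 0.7) -> str:
    """Send one chat completion request and return the generated text.

    `client` is expected to be an `openai.OpenAI` instance (openai>=1.0),
    which reads the API key from the OPENAI_API_KEY environment variable.
    """
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(task, text),
        temperature=temperature,
    )
    # The first choice holds the model's reply.
    return response.choices[0].message.content
```

With a real client, you would run `from openai import OpenAI` and then call something like `generate_text(OpenAI(), "Summarize in one sentence:", some_text)`; swapping the task string lets the same helper cover summarization, classification, or plain text generation.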

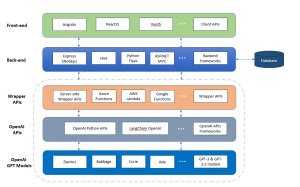

Architecting a Generative AI Platform for GPT-based LLM Apps

Have you ever wondered how to build a scalable generative AI platform based on OpenAI GPT models that can serve different applications? Are you a data scientist, product manager, or software engineer looking to understand the architecture of such a platform? This blog aims to demystify the architectural building blocks needed to create a robust GPT-based platform. By the end, you will have a clear roadmap for architecting, designing, and implementing your own platform for applications built on GPT-based large language models (LLMs). Generative AI Platform Architecture for GPT-based LLM Apps: The following is the technology architecture of a generative AI platform that can leverage OpenAI GPT based …

Encoder Only Transformer Models Quiz / Q&A

Are you intrigued by the revolutionary world of transformer architectures? Have you ever wondered how encoder-only transformer models like BERT, ELECTRA, or DeBERTa have reshaped the landscape of Natural Language Processing (NLP)? The rapid advancement of machine learning has led to the creation of numerous transformer architectures, each with unique features, applications, and underlying mechanics. Whether you’re a data scientist, machine learning engineer, generative AI enthusiast, or a student eager to deepen your understanding, this quiz offers an engaging and informative way to assess your knowledge and sharpen your skills. It can also help you prepare for interviews on this topic. Encoder-only transformer models have become a cornerstone in …

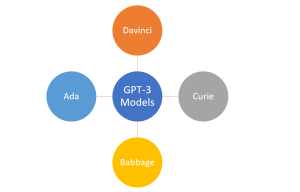

OpenAI GPT-3 Models List: Explained with Examples

In the ever-evolving landscape of natural language processing (NLP), OpenAI’s GPT-3 models have garnered significant attention for their ability to understand and generate human-like text. The GPT-3 models discussed in this blog can be accessed through the OpenAI API and the OpenAI Playground. In this blog post, we will delve into the OpenAI GPT-3 models and provide a comprehensive list, along with explanations and examples of their capabilities. Although GPT-3.5 models are more powerful than their GPT-3 counterparts, at the time of writing only the GPT-3 models were available for fine-tuning. Whether you are an experienced data scientist or a curious generative AI enthusiast, understanding these models is crucial to making the most …

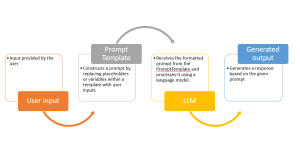

LLM Chain OpenAI Python Example

Have you ever wondered how to fully utilize large language models (LLMs) in your natural language processing (NLP) applications, the way ChatGPT does? Wouldn’t you want to create an application like ChatGPT, where you write a prompt and get back output such as generated text or a summary? While learning to make a direct API call to an OpenAI LLM is a great start, we can go further and build full-fledged applications that serve our end users’ needs. Building prompts that adapt dynamically to user input is one of the most important aspects of an LLM app. That’s where LangChain, a powerful framework, comes in. In this blog, …
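To make the idea of prompts that adapt to user input concrete, here is a plain-Python sketch of a prompt template chained to a model call. It is not LangChain’s API: template filling is approximated with `str.format`, and the `SimpleChain` class and the `echo_llm` function are hypothetical stand-ins for a LangChain chain and an OpenAI model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleChain:
    """A hypothetical, minimal 'chain': fill a prompt template, then call the model."""
    template: str                # e.g. "Summarize this {doc_type}:\n{text}"
    llm: Callable[[str], str]    # any function mapping a prompt to a completion

    def format_prompt(self, **inputs: str) -> str:
        # str.format raises KeyError if a template variable is missing from inputs.
        return self.template.format(**inputs)

    def run(self, **inputs: str) -> str:
        return self.llm(self.format_prompt(**inputs))


def echo_llm(prompt: str) -> str:
    """Stand-in model: returns the first line of the prompt instead of calling an API."""
    return prompt.splitlines()[0]


chain = SimpleChain("Summarize this {doc_type} for a {audience}:\n{text}", echo_llm)
print(chain.run(doc_type="email", audience="manager", text="Meeting moved to 3pm."))
```

In LangChain itself, the equivalent pieces are a prompt template and an LLM wrapper composed into a chain; the exact class names have changed across LangChain versions, so check the documentation for the version you install.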

LangChain ChatGPT Hello World Python Example

Have you ever wondered how to build applications that not only utilize large language models (LLMs) but can also interact with their environment and connect to other data sources? If so, LangChain is the answer! In this blog, we will learn what LangChain is, what its key aspects are, and how it works. We will also quickly review the concepts of prompts, tokens, and temperature when using the OpenAI API. We will then learn to create a ‘Hello World’ Python program using LangChain and OpenAI’s large language models (LLMs) such as the GPT-3 models. What is LangChain Framework? LangChain is a dynamic framework specifically designed for the …
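Temperature, one of the concepts mentioned above, rescales the model’s token scores (logits) before they are turned into probabilities. The sketch below applies the standard softmax-with-temperature formula to made-up logits for three candidate tokens; it illustrates the idea and is not the OpenAI API’s internal implementation.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing each logit by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate next tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top token (more predictable output), while higher temperatures flatten the distribution (more varied output). APIs typically treat a temperature of 0 as near-deterministic selection of the top token; this sketch simply rejects non-positive values.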

Encoder-only Transformer Models: Examples

How can machines accurately classify text into categories? What enables them to recognize specific entities like names, locations, or dates within a sea of words? How is it possible for a computer to comprehend and respond to complex human questions? These remarkable capabilities are now a reality, thanks to encoder-only transformer architectures like BERT. From text classification and Named Entity Recognition (NER) to question answering and more, these models have revolutionized the way we interact with and process language. In the realm of AI and machine learning, encoder-only transformer models like BERT, DistilBERT, RoBERTa, and others have emerged as game-changing innovations. These models not only facilitate a deeper understanding of …

LLMs & Semantic Search Course by Andrew Ng, Cohere & Partners

Andrew Ng, a renowned name in the world of deep learning and AI, has joined forces with Cohere, a pioneer in natural language processing technologies. Alongside him are Jay Alammar, a well-known educator and visualizer of machine learning concepts, and Luis Serrano of Serrano Academy, an educator known for accessible explanations of AI and machine learning. Together, they have launched an insightful course titled “Large Language Models with Semantic Search.” The collaboration combines their expertise to address the growing need for semantic search in a wide range of applications. Keyword search has long dominated the search landscape, but the need for more sophisticated, content-aware search capabilities is becoming increasingly evident. Content-rich platforms like …
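Semantic search ranks documents by how close their embedding vectors are to the query’s embedding, rather than by keyword overlap. The sketch below shows only that ranking step, using cosine similarity; the three-dimensional vectors and document names are made up for illustration, whereas a real system would obtain much longer vectors from a text-embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec: list[float],
                    doc_vecs: dict[str, list[float]],
                    top_k: int = 2) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs for the top_k documents most similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; note "return an item" shares no keywords with the query text.
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "return an item": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # imagined embedding of "how do I get my money back?"
print(semantic_search(query, docs))
```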

Quiz: BERT & GPT Transformer Models Q&A

Are you fascinated by the world of natural language processing and the cutting-edge generative AI models that have revolutionized the way machines understand human language? Two large language models (LLMs), BERT and GPT, stand as pillars of the field, each with its own architecture and capabilities. But how well do you know these models? In this quiz blog, we will challenge your knowledge and understanding of these two groundbreaking technologies. Before you dive into the quiz, let’s start with an overview of BERT and GPT. BERT (Bidirectional Encoder Representations from Transformers): BERT is known for its bidirectional processing of text, allowing it to capture context from both sides of a word …
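One concrete way to see the difference between BERT’s bidirectional processing and GPT’s left-to-right generation is the attention mask each uses. The sketch below builds both masks as plain lists, where `1` means “position i may attend to position j”; it is a simplification that ignores padding and the other details of the real implementations.

```python
def bidirectional_mask(n: int) -> list[list[int]]:
    """BERT-style encoder: every token can attend to every token, left and right."""
    return [[1] * n for _ in range(n)]


def causal_mask(n: int) -> list[list[int]]:
    """GPT-style decoder: token i can attend only to tokens 0..i, never to future tokens."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


tokens = ["The", "cat", "sat"]
for name, mask in (("bidirectional", bidirectional_mask(len(tokens))),
                   ("causal", causal_mask(len(tokens)))):
    print(name)
    for tok, row in zip(tokens, mask):
        print(f"  {tok:>4}: {row}")
```

The causal mask is what lets GPT generate text one token at a time, while the full mask lets BERT use context on both sides of a word.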

Pre-training vs Fine-tuning in LLM: Examples

Are you intrigued by the inner workings of large language models (LLMs) like BERT and GPT series models? Ever wondered how these models manage to understand human language with such precision? What are the critical stages that transform them from simple neural networks into powerful tools capable of text prediction, sentiment analysis, and more? The answer lies in two vital phases: pre-training and fine-tuning. These stages not only make language models adaptable to various tasks but also bring them closer to understanding language the way humans do. In this blog, we’ll dive into the fascinating journey of pre-training and fine-tuning in LLMs, complete with real-world examples. Whether you are a …
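The difference between the two phases shows up clearly in the training data. The sketch below contrasts them under simplifying assumptions: GPT-style pre-training (next-token prediction) creates its own targets from raw, unlabeled text, while fine-tuning uses human-labeled examples for a specific task such as sentiment analysis. Whitespace splitting stands in for a real subword tokenizer, and the labeled examples are made up.

```python
def next_token_pairs(text: str) -> list[tuple[list[str], str]]:
    """Pre-training style: every prefix of the text is used to predict the token after it.

    No human labels are needed; the targets come from the text itself.
    """
    tokens = text.split()  # stand-in for a real subword tokenizer
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Fine-tuning style: a (usually much smaller) set of task-specific labeled examples.
sentiment_examples = [
    ("The delivery was fast and the product works great.", "positive"),
    ("The item arrived broken.", "negative"),
]

for context, target in next_token_pairs("language models learn from text"):
    print(context, "->", target)
```

BERT’s pre-training uses masked-token prediction rather than next-token prediction, but the principle is the same: the pre-training targets are derived from unlabeled text, and labels only enter during fine-tuning.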

Top 5 Books on Generative AI: New Releases on Amazon

Are you fascinated by the potential of generative artificial intelligence (AI)? Are you looking for the latest insights and knowledge in the field of AI and its creative applications? Look no further! In this blog post, we’ll introduce you to the top 5 books on generative AI that have been making waves on Amazon in the last 90 days. These books delve into various aspects of generative AI, offering readers a comprehensive understanding of its implications, applications, and transformative power. 1. The Artificial Intelligence and Generative AI Bible: [5 in 1] The Most Updated and Complete Guide (Author: Alger Fraley; Rating: 4.4). Step into the world of generative AI with …

Prompt Engineering: Core Principles, Examples

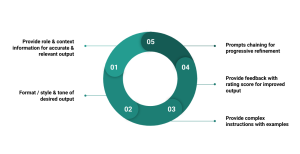

Ever chatted with Siri or Alexa and wondered how they come up with their answers? Or how the latest AI tools seem to “know” just what you’re looking for? For tools built on large language models, a big part of the answer is something called “prompt engineering”. In this blog, we’ll learn the key concepts of prompt engineering: what it is, its core guiding principles, and why it’s a must-know in today’s tech-driven world. Let’s get started! What is Prompt Engineering? Prompt engineering is the art and science of designing, refining, and optimizing prompts to guide the behavior of generative AI models like those built on the GPT architecture. While the underlying AI model might …

GPT Models In-context Learning: Examples

Have you ever wondered how AI models like OpenAI GPT-3 (Generative Pre-trained Transformer 3) can generate impressively human-like text? Enter in-context learning, the capability that gives GPT-3 its conversational abilities and makes it extraordinary. In this blog, we’re going to learn the concepts of in-context learning, its different forms, and how GPT-3 uses it to change the way we interact with AI. What’s In-context Learning? In-context learning is at the heart of these large language models (LLMs), enabling GPT models to understand and generate text that closely resembles human language, based on the instructions and examples provided in the prompt. As the model learns about the context based on the examples provided …
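In-context learning means the model picks up a task from instructions and examples placed in the prompt itself, with no change to its weights. The sketch below assembles such a few-shot prompt; the `build_few_shot_prompt` helper, the `Input:`/`Output:` layout, and the sentiment examples are hypothetical, and the resulting string would be sent to a GPT model as an ordinary prompt.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt.

    An empty `examples` list gives a zero-shot prompt, one example gives a
    one-shot prompt, and several examples give a few-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    # The model is expected to continue the pattern after the final "Output:".
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    [("I love this phone.", "positive"), ("The battery died in an hour.", "negative")],
    "Setup was quick and painless.",
)
print(prompt)
```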
