Ever chatted with Siri or Alexa and wondered how they come up with their answers? Or how the latest AI tools seem to “know” just what you’re looking for? For today’s generative AI tools, a big part of the answer is something called “prompt engineering”. In this blog post, we’ll learn the key concepts of prompt engineering: what it is, its core guiding principles, and why it’s a must-know in today’s techy world. Let’s get started!

What is Prompt Engineering?

Prompt engineering is the art and science of designing, refining and optimizing prompts to guide the behavior of generative AI models like those built on the GPT architecture. While the underlying AI model might be the “engine” of the system, the prompt serves as the “steering wheel,” determining the direction and nature of the output.

Prompt engineering fosters creativity, enabling customized fiction, product ideas, or simulated conversations with historical figures. Well-engineered prompts can improve the accuracy, relevance, and style of a model’s output, as well as how well it follows complex instructions. Learning prompt engineering helps you get the most out of generative models by tailoring their outputs to your specific needs.

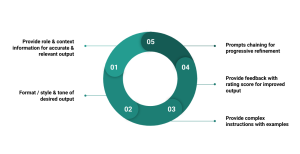

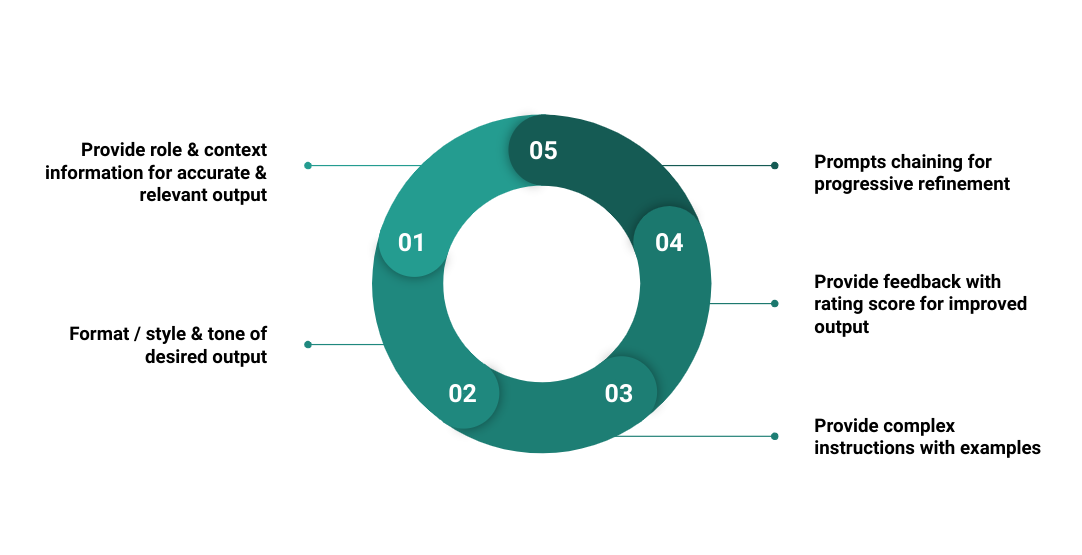

Core Principles of Prompt Engineering

The following are five core principles of prompt engineering that can be followed to create great prompts for LLMs such as ChatGPT, Bard, etc.

Provide Role & Context Information for Accurate & Relevant Output

Guide the model to produce more accurate or relevant results. A popular and easy way to do this is to give the model a role to play and the relevant context as part of the prompt, before asking the question.

Role-playing is a powerful technique in prompt engineering, especially with large language models (LLMs). By asking the model to “act” or “role-play” as a specific character or expert, you provide it with a context or frame of reference. This context often helps the model generate outputs that are more in line with the expected tone, style, or content depth. It’s akin to asking a versatile actor to play a particular role in a movie: the actor’s performance is guided by the character’s persona.

Importance of Role-playing: When you set a role for the LLM, such as “Act as a machine learning expert,” you’re essentially narrowing down its vast knowledge into a specific domain or expertise. This can noticeably improve the accuracy and relevance of the outputs by aligning them with the persona’s expected knowledge and behavior.

Importance of Providing Context: Beyond role-playing, explicitly providing context in the prompt helps anchor the AI’s response. If the model understands the background or the specific scenario you’re referencing, it’s more likely to produce outputs that are on-point and relevant.

Example: Travel Recommendations

- Basic Prompt: “Tell me about travel destinations.”

- Potential Output: A broad overview of popular travel spots from different parts of the world, spanning various travel themes.

- Engineered Prompt with Role-playing and Context: “Act as a travel advisor specializing in Southeast Asia. List the top 5 travel destinations for solo backpackers focusing on budget and culture.”

- Potential Output: A curated list of destinations like Bali, Chiang Mai, Hanoi, Siem Reap, and Luang Prabang, with insights into their cultural highlights and budget-friendly tips.

In this example, the role-playing element (“Act as a travel advisor specializing in Southeast Asia”) steers the AI toward a more specific subset of its knowledge. The context narrows the focus to solo backpackers interested in budget and culture. Together, these elements guide the LLM toward a response that is both accurate and highly relevant to the user’s intent.
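To make the role-and-context idea concrete, here is a minimal Python sketch that assembles the engineered travel prompt as a list of chat messages. It assumes a chat-style interface where a `system` message sets the role and a `user` message carries the context and question, a layout many LLM APIs use; the helper `build_role_prompt` is hypothetical and no real model is called.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_role_prompt(role: str, context: str, question: str) -> List[Message]:
    """Assemble a role-play + context prompt as chat-style messages.

    The role goes in a 'system' message; the context and question go in
    a 'user' message. This layout is an assumption, not a specific API.
    """
    system_msg = {"role": "system", "content": f"Act as {role}."}
    user_msg = {"role": "user", "content": f"{context}\n\n{question}"}
    return [system_msg, user_msg]


messages = build_role_prompt(
    role="a travel advisor specializing in Southeast Asia",
    context="I am a solo backpacker on a tight budget who cares most about local culture.",
    question="List the top 5 travel destinations for me.",
)

# Show what would be sent to a chat model.
for m in messages:
    print(f"[{m['role']}] {m['content']}")
```

Keeping the role separate from the question makes it easy to reuse the same persona across many different questions.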

Set Format / Style & Tone for Desired Output

Elicit specific styles, formats, or tones in the generated content.

Example: Story Writing

- Basic Prompt: “Write a story about a lost cat.”

- Engineered Prompt: “Write a heartwarming story in the style of a classic fairy tale about a lost cat who embarks on a magical journey.”

While the basic prompt might generate a wide variety of stories about a lost cat, the engineered prompt specifically instructs the model to produce a story with a particular tone (heartwarming) and style (classic fairy tale). This ensures that the generated content matches a desired aesthetic or mood.
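The same idea can be sketched as a small Python helper that layers tone, style, and format onto a basic request. The `OutputSpec` class and `apply_output_spec` function are hypothetical illustrations, and the “three short paragraphs” format is an added assumption; the result is simply a prompt string you would send to whichever model you use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OutputSpec:
    """Desired presentation of the output (all fields optional)."""
    tone: Optional[str] = None   # e.g. "heartwarming"
    style: Optional[str] = None  # e.g. "a classic fairy tale"
    fmt: Optional[str] = None    # e.g. "three short paragraphs"


def apply_output_spec(task: str, spec: OutputSpec) -> str:
    """Append tone, style, and format instructions to a basic task prompt."""
    parts = [task.rstrip(".") + "."]
    if spec.tone:
        parts.append(f"Use a {spec.tone} tone.")
    if spec.style:
        parts.append(f"Write it in the style of {spec.style}.")
    if spec.fmt:
        parts.append(f"Format the answer as {spec.fmt}.")
    return " ".join(parts)


basic = "Write a story about a lost cat who embarks on a magical journey"
print(apply_output_spec(basic, OutputSpec()))
print(apply_output_spec(basic, OutputSpec(tone="heartwarming",
                                          style="a classic fairy tale",
                                          fmt="three short paragraphs")))
```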

Provide Complex Instructions with Examples

Help the model understand and follow complex instructions.

Example: Recipe Creation

- Basic Prompt: “Give me a pasta recipe.”

- Engineered Prompt: “Provide a vegan pasta recipe using ingredients commonly found in a pantry, ensuring it takes no longer than 30 minutes to prepare and cook. Additionally, suggest a wine pairing for the dish.”

The basic prompt is open-ended and might result in any kind of pasta recipe. The engineered prompt, however, provides a series of complex instructions: the recipe must be vegan, use pantry staples, be quick to make, and come with a wine pairing recommendation. This level of detail helps the model understand exactly what’s required and produces a more tailored result.
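One way to keep multi-part instructions from getting lost is to list each requirement explicitly. The Python sketch below, built around a hypothetical `build_instruction_prompt` helper, turns the recipe constraints into a numbered list and can optionally prepend worked input/output examples. That few-shot option is an assumption on our part, not something the recipe prompt above requires.

```python
from typing import List, Optional, Tuple


def build_instruction_prompt(
    task: str,
    constraints: List[str],
    examples: Optional[List[Tuple[str, str]]] = None,
) -> str:
    """Turn a task plus a list of constraints into an explicit, numbered prompt.

    `examples` is an optional list of (input, output) pairs placed before
    the task so the model can imitate them (few-shot style).
    """
    lines: List[str] = []
    for i, (ex_in, ex_out) in enumerate(examples or [], start=1):
        lines.append(f"Example {i} input: {ex_in}")
        lines.append(f"Example {i} output: {ex_out}")
    if lines:
        lines.append("")  # blank line between the examples and the task
    lines.append(task)
    lines.append("Requirements:")
    lines.extend(f"{n}. {c}" for n, c in enumerate(constraints, start=1))
    return "\n".join(lines)


prompt = build_instruction_prompt(
    task="Provide a pasta recipe.",
    constraints=[
        "The recipe must be vegan.",
        "Use only ingredients commonly found in a pantry.",
        "Total preparation and cooking time must not exceed 30 minutes.",
        "Suggest a wine pairing for the dish.",
    ],
)
print(prompt)
```

Numbering the requirements also makes it easy to check the model’s answer against each one afterwards.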

Provide Feedback with Rating Score for Improved Output

Enable a feedback mechanism to rate the output of the prompt execution. By sending the model a rating on its last generated content, you create an immediate feedback loop. This feedback loop can be leveraged to prompt the model to “reflect” on its performance and attempt to improve in the subsequent generation. While the model doesn’t possess true self-awareness or emotions, this method uses the rating as a form of dynamic prompt engineering to optimize results.

Example: Marketing Slogan Creation

- Scenario: A brand manager wants to use a generative AI model to come up with a catchy slogan for a new line of eco-friendly shoes.

- Engineered Prompt: “Generate a catchy slogan for our eco-friendly shoe line that emphasizes comfort and sustainability.”

- Initial Output: The model suggests: “Eco-shoes: Step into the Future!”

- Rating Mechanism: The brand manager rates the slogan on a scale of 1 to 10 based on creativity, relevance to the product, and market appeal. Let’s say the slogan receives a rating of 6 — it’s on-topic, but not as catchy as desired.

- Feedback to Model: “The last slogan you provided received a rating of 6 out of 10. We’re looking for something more memorable and directly emphasizing comfort. Try again.”

- Iterative Process: Using the feedback, the model might generate a new slogan like: “Walk Softly, Tread Lightly: Comfort in Every Eco-Step.” The brand manager can continue this process of rating and feedback until a satisfactory slogan is achieved.

This approach of sending the model its rating on the last output effectively creates a pseudo-interactive session in which the AI iteratively refines its output based on user feedback. It can be an effective way to converge quickly on the desired quality of content.
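The rating loop described above can be sketched in Python as follows. It assumes a chat-style model that receives the whole conversation on every call, which is why the earlier slogan and its score are visible to it. The canned replies, the scores, the target of 8, and the `refine_with_ratings` helper are all hypothetical stand-ins for a real model client and a human rater.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def refine_with_ratings(
    prompt: str,
    model: Callable[[List[Message]], str],
    rate: Callable[[str], int],
    target: int = 8,
    max_rounds: int = 5,
) -> List[Message]:
    """Send a 1-10 rating back to the model until a reply meets `target`.

    The full conversation is resent each round, so the model "sees" its
    earlier attempts and their ratings.
    """
    history: List[Message] = [{"role": "user", "content": prompt}]
    for _ in range(max_rounds):
        reply = model(history)
        history.append({"role": "assistant", "content": reply})
        score = rate(reply)
        if score >= target:
            break  # good enough: stop asking for revisions
        history.append({
            "role": "user",
            "content": (f"The last slogan you provided received a rating of "
                        f"{score} out of 10. Make it more memorable and "
                        f"emphasize comfort. Try again."),
        })
    return history


# Hypothetical stand-ins: a canned "model" and a human rater's scores.
canned = iter(["Eco-shoes: Step into the Future!",
               "Walk Softly, Tread Lightly: Comfort in Every Eco-Step."])
scores = {"Eco-shoes: Step into the Future!": 6,
          "Walk Softly, Tread Lightly: Comfort in Every Eco-Step.": 9}

history = refine_with_ratings(
    "Generate a catchy slogan for our eco-friendly shoe line that "
    "emphasizes comfort and sustainability.",
    model=lambda msgs: next(canned),
    rate=lambda slogan: scores[slogan],
)
for m in history:
    print(f"[{m['role']}] {m['content']}")
```

Because the feedback lives only in the conversation history, any improvement applies to that session; the model’s underlying weights are not changed.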

Prompt Chaining for Progressive Refinement

Use chained prompts for progressive refinement of the output.

Chaining prompts involves breaking down a complex request into a series of simpler, sequential prompts. Each prompt builds upon the previous one, guiding the model step-by-step to the desired outcome. This approach can help in progressively refining the content, ensuring that the final output closely aligns with user expectations.

Example: Designing a Comprehensive Workout Plan

- Scenario: A personal trainer wants to use a generative AI model to craft a comprehensive 4-week workout plan for a client who’s training for a marathon.

- Initial Prompt: “Describe the key considerations for a 4-week marathon training plan.”

- Model’s Output: The model lists factors such as weekly mileage, long runs, recovery days, cross-training, and tapering.

- Chained Prompt 1: “Now, based on these considerations, provide a detailed schedule for Week 1, focusing on building a strong foundation.”

- Model’s Output: A detailed 7-day training plan for the first week, emphasizing foundational strength and initial mileage buildup.

- Chained Prompt 2: “For Week 2, increment the mileage and introduce interval training. Draft the schedule.”

- Model’s Output: A 7-day plan for the second week, increasing the mileage and incorporating interval workouts.

- This process can be continued for weeks 3 and 4, chaining prompts to progressively shape the workout plan.

By chaining prompts, the trainer methodically guides the model through the creation process, ensuring each component of the workout plan is crafted thoughtfully and cohesively. This technique is especially useful when dealing with multifaceted tasks that require a systematic approach.
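Here is a minimal Python sketch of the chaining pattern. The key mechanic it illustrates is that each new prompt is sent together with all earlier prompts and replies, so a step like “based on these considerations” has something to refer back to. `run_chain` and `fake_model` are hypothetical; in practice `fake_model` would be replaced by a call to a real LLM client.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_chain(steps: List[str], model: Callable[[List[Message]], str]) -> List[str]:
    """Send each prompt in order, carrying the full conversation forward."""
    history: List[Message] = []
    outputs: List[str] = []
    for step in steps:
        history.append({"role": "user", "content": step})
        reply = model(history)  # the model sees every earlier prompt and reply
        history.append({"role": "assistant", "content": reply})
        outputs.append(reply)
    return outputs


def fake_model(history: List[Message]) -> str:
    """Hypothetical stand-in for an LLM: reports how much context it received."""
    n_prior = (len(history) - 1) // 2  # number of earlier prompt/reply pairs
    return f"reply to '{history[-1]['content']}' (saw {n_prior} earlier exchanges)"


steps = [
    "Describe the key considerations for a 4-week marathon training plan.",
    "Now, based on these considerations, provide a detailed schedule for Week 1, "
    "focusing on building a strong foundation.",
    "For Week 2, increment the mileage and introduce interval training. Draft the schedule.",
]
for out in run_chain(steps, fake_model):
    print(out)
```

Weeks 3 and 4 would simply be further entries in `steps`.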

Why Learn Prompt Engineering?

Here are some of the key reasons why it is worth mastering the core principles of prompt engineering discussed in the previous sections:

- Maximize Utility of Generative Models: As powerful as generative AI models are, they’re not always perfect out of the box. They can sometimes generate outputs that are overly verbose, slightly off-topic, or not quite what the user had in mind. By mastering prompt engineering, users can extract the most value from these models and tailor the outputs to their specific needs.

- Customization & Creativity: Prompt engineering allows for a great deal of creative expression. Whether you’re trying to generate a piece of fiction in a specific style, come up with innovative product ideas, or simulate a conversation with a historical figure, the way you craft your prompt can make all the difference in the results you get.

- Cost & Efficiency: Generative models, especially the larger ones, can be expensive to run. By optimizing prompts, users can often get to the desired result more quickly, saving both time and computational resources.

- Safety & Ethical Considerations: AI models can sometimes produce outputs that are inappropriate, biased, or potentially harmful. Carefully engineered prompts can help mitigate some of these risks by guiding the model away from problematic outputs.

- Competitive Advantage: As more businesses and individuals begin to utilize generative AI in their workflows, being skilled in prompt engineering can provide a competitive edge. It’s akin to knowing the best way to query a search engine; the better you are at it, the more valuable information you can extract.

- A Fundamental Skill in the AI Age: Just as understanding basic programming or web design became crucial skills in previous decades, prompt engineering might become a foundational skill in the age of generative AI. It’s about communicating effectively with one of the most powerful tools of our era.

Conclusion

In the evolving landscape of generative AI, the power of a machine learning model doesn’t depend solely on its underlying architecture or the vast amount of data it has been trained on. The true magic often lies in the deft craft of posing the right questions or prompts. Prompt engineering bridges the gap between raw computational capability and human intent. By mastering the principles discussed here, one can harness the full potential of generative AI, making it an invaluable tool in an array of applications, from creative writing to problem-solving. As we stand on the cusp of an AI-driven era, refining our prompts will be key to unlocking meaningful, relevant, and impactful outputs.
