In this post, you will learn about the three most important challenges or guiding principles that could be used while you are building machine learning models.

The three key challenges which could be adopted while training machine learning models are following:

- The conflict between simplicity and accuracy

- Dimensionality – Curse or Blessing?

- The multiplicity of good models

The Conflict between Simplicity and Accuracy

Before starting on working for training one or more machine learning models, one would need to decide whether one would like to go for simple model or one would want to focus on model accuracy. The simplicity of models could be achieved by using algorithms which help in building interpret-able models. These models are primarily called as data or statistical model. The example of such models are multiple regression models, logistic models, discriminant analysis models. When in the field of healthcare, data models would be most sought after as it helps in model explain-ability thereby ensuring higher simplicity. Model simplicity is also achieve by having lower dimensionality or lesser number of features.

When the focus is to achieve higher predictive accuracy, one would rather want to go for algorithmic models created using algorithms such as decision trees, random forest, neural networks. With algorithmic models, one loose the aspects of simplicity it is difficult to explain what went in to make a prediction.

Dimensionality – Curse or Blessing?

It is of utmost importance to decide on how many features one would require to train the most optimal model. Large number of features with each of them containing some information that could be used for prediction could play important role in attaining high accuracy of the models. However, large number of features also increases the model complexity. In addition, the larger number of features may as well result in model over-fitting. On the other hand, having a very smaller number of features may result in model under-fitting. One would thus require to decide whether to have large number of features or smaller number but important features.

It is seen that the models with fewer parameters is less complex, and because of this, is preferred because it is likely to generalize better on average. Thus, it is key to use the most appropriate features to build the models.

There are different techniques to have the optimal dimensionality. They are some of the following:

- Features deletion: It is recommended that one achieves the maximum accuracy with the training data set. Thereby, start removing the features and take the accuracy measure on each feature deletion on test data set. A point will come when error on test and training data set will be close. This will result in most appropriate features set.

- Feature addition: Start with a very simple model built with fewer number of features. Then, go on adding features one-by-one and determine the model accuracy or error on test data set.

There are several feature selection techniques which could be used to select the most important features.

- Wrapper methods: Wrapper methods is based on creating many models with different subsets of input features and select those features that result in the best performing model according to a performance metric. These methods evaluate multiple models using procedures that add and/or remove predictors to find the optimal combination that maximizes model performance.

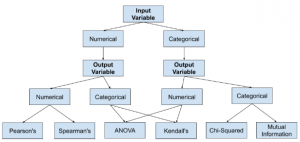

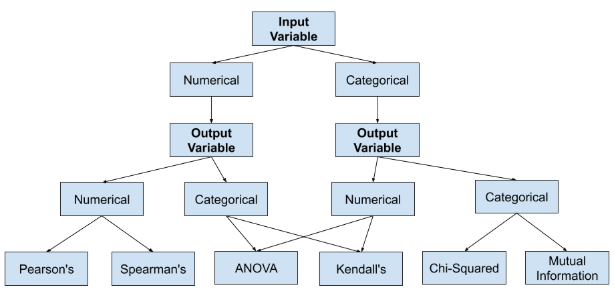

- Filter methods: Filter methods use statistical measures to score the correlation or dependence between input variables that can be filtered to choose the most relevant features.

The diagram below taken from this page displays different feature selection techniques which could be used:

The Multiplicity of Good Models

While training the model on a given input data set, one may end up building several models / functions which has got comparable accuracy or error. The challenge then become as to which model to select. There are several techniques one could adopt in this relation:

- Build ensembles of competing models and make prediction makes on voting

- Use statistical techniques to decide on which one or models to select.

- There are several model selection techniques which could be used for selecting models.

- Probabilistic methods: Probabilistic techniques where the model is scored based on its performance on the training data set and overall complexity. The following represents some of the techniques used, primarily, for data (statistical) models

- Akaike Information Criterion (AIC).

- Bayesian Information Criterion (BIC).

- Minimum Description Length (MDL).

- Structural Risk Minimization (SRM)

- Resampling methods: Resampling techniques is used to estimate the performance of a model on out-of-sample data. It is achieved by splitting the training dataset into training and test data sets, fitting the model on the training data set, and evaluating it on the test set. The process may then be repeated multiple times and the mean performance across each trial is reported. The following are some of the common resampling model selection methods:

- Random train/test split

- Cross validation

- Bootstrap

- Probabilistic methods: Probabilistic techniques where the model is scored based on its performance on the training data set and overall complexity. The following represents some of the techniques used, primarily, for data (statistical) models

References

- Three Approaches to Creating AI Agents: Code Examples - June 27, 2025

- What is Embodied AI? Explained with Examples - May 11, 2025

- Retrieval Augmented Generation (RAG) & LLM: Examples - February 15, 2025

I found it very helpful. However the differences are not too understandable for me