This article represents my thoughts on the steps that may be required to perform regression analysis (simple linear or multiple) in the R programming language on a data set where the response variable is continuous. Remember that continuous variables can take any numeric value within a range, unlike discrete variables, which can take only a limited set of values. Please feel free to comment or suggest if I missed one or more important points. Also, sorry for any typos.

The key steps described in this article are:

- Load the data

- Observe the data

- Clean the data

- Explore the data visually

- Fit the simple linear or multiple regression model

- Analyze the regression model

- Come up with one or more models

- Predict using one of the models

Each step is described in more detail below:

- Load the Data: One could load the data using functions such as read.csv or read.table. A read.table call may look like the following:

housingprice <- read.table(path_to_csv_file, sep = ",", header = TRUE, stringsAsFactors = FALSE)
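As a minimal sketch, assuming a small made-up CSV file written to a temporary path (the values are invented for illustration), read.csv and read.table can be used interchangeably here: read.csv is a wrapper around read.table whose defaults already include header = TRUE and sep = ",".

```r
# Write a small, made-up CSV file to a temporary path for illustration
path_to_csv_file <- tempfile(fileext = ".csv")
writeLines(c("TotalPrice,ValuePerSqFt",
             "500000,250",
             "750000,300"),
           path_to_csv_file)

# read.table needs the separator and header to be stated explicitly
housing_table <- read.table(path_to_csv_file, sep = ",", header = TRUE,
                            stringsAsFactors = FALSE)

# read.csv already defaults to sep = "," and header = TRUE
housing_csv <- read.csv(path_to_csv_file, stringsAsFactors = FALSE)

# Structure of the result: number of rows, columns and their types
str(housing_csv)

# Both calls give the same data frame for this simple file
all.equal(housing_table, housing_csv)
```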

- Observe the Data: One could observe the data in R by simply typing the variable name in the console. Additionally, one could use functions such as head or tail to see the first or last 6 (or n) rows. The commands look like the following:

# Default head command; displays the first 6 rows
head(housingprice)
# head with an explicit row count; displays the first 8 rows
head(housingprice, n = 8)
# Default tail command; displays the last 6 rows
tail(housingprice)
# tail with an explicit row count; displays the last n (here 8) rows
tail(housingprice, n = 8)
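The following sketch uses R's built-in mtcars data set (32 rows) as a stand-in for housingprice, so it runs without any file. It also shows that a negative n drops rows from the other end.

```r
# Built-in mtcars (32 rows) stands in for housingprice here
first_rows  <- head(mtcars)          # first 6 rows by default
first_eight <- head(mtcars, n = 8)   # first 8 rows
last_rows   <- tail(mtcars)          # last 6 rows by default
last_eight  <- tail(mtcars, n = 8)   # last 8 rows

# A negative n drops rows from the end: all but the last 30 rows
all_but_last_30 <- head(mtcars, n = -30)

nrow(first_rows)       # 6
nrow(last_eight)       # 8
nrow(all_but_last_30)  # 2
```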

- Clean the Data: Often, the column names in the header are not readable enough. One could rename the columns using the names() function; the vector assigned must contain one name per column of the data frame. The command looks like the following:

names(housingprice) <- c("TotalPrice", "ValuePerSqFt", "IncomePerSqFtOfNeighbourhood")

- Explore the Data Visually: One of the most important aspects of data analysis is exploring the data visually. For this, you could use the ggplot2 package. Some of the steps are listed below, followed by the corresponding commands.

- Load the plotting package: Load ggplot2.

- Analyze the response variable: Check whether the response variable is approximately normally distributed. A histogram of the response variable shows its frequency distribution and whether it looks normal. Strictly speaking, linear regression assumes that the errors (residuals) are normally distributed rather than the response itself, but a strongly skewed or multimodal response is a useful early warning. If the distribution has multiple modes, one could use facet_wrap(~variableName) to split the histogram into panels by a categorical variable for a more detailed view.

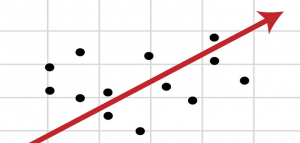

- Explore the relationship between the response and predictor variables: Use a scatterplot to visualize the relationship between the response and each predictor variable. The scatterplot may also reveal outliers, which could then be removed to clean the data further.

- Clean the data: Remove the outliers identified in the previous step.

The corresponding commands are as follows:

# Load ggplot2
library(ggplot2)
# Histogram of the response variable to inspect its frequency distribution
ggplot(housingprice, aes(x = ValuePerSqFt)) + geom_histogram(binwidth = 10) + xlab("Value per Sq Ft")
# If the distribution shows multiple modes, split it into panels by a categorical column
# (varName is a placeholder for such a column in your data)
ggplot(housingprice, aes(x = ValuePerSqFt)) + geom_histogram(binwidth = 10) + xlab("Value per Sq Ft") + facet_wrap(~varName)
# Scatterplot of the response against a predictor (replace SqFt with a predictor column from your data)
ggplot(housingprice, aes(x = SqFt, y = ValuePerSqFt)) + geom_point()
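The article does not prescribe a rule for identifying outliers. As one common option, the sketch below assumes the 1.5 × IQR rule of thumb (values more than 1.5 interquartile ranges beyond the quartiles) and applies it to a small hypothetical data set with two extreme values; whether removing such points is appropriate depends on the data.

```r
# Hypothetical data: value per sq ft with two extreme points (900 and 5)
housing <- data.frame(
  SqFt = c(800, 950, 1100, 1200, 1300, 1450, 1600, 1750, 1900, 2000),
  ValuePerSqFt = c(210, 220, 205, 230, 215, 225, 240, 218, 900, 5)
)

# First and third quartiles of the response, and the interquartile range
q <- unname(quantile(housing$ValuePerSqFt, probs = c(0.25, 0.75)))
iqr <- q[2] - q[1]

# Assumed rule of thumb: keep rows within 1.5 * IQR of the quartiles
lower <- q[1] - 1.5 * iqr
upper <- q[2] + 1.5 * iqr
housing_clean <- subset(housing, ValuePerSqFt >= lower & ValuePerSqFt <= upper)

nrow(housing_clean)  # rows remaining after dropping the outliers
```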

- Fit the simple linear or multiple regression model: In both cases, the central idea is to fit a straight line (or, with several predictors, a plane or hyperplane) through a given set of points. For simple linear regression, the model is:

$$y = bx + c + e$$

where b is the slope, c is the intercept and e is the error term.

One could use the lm() function to fit the simple linear regression model:

lm( responseVariable ~ predictorVariable )
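To see that lm() fits y = bx + c + e by least squares, the sketch below simulates hypothetical data from y = 2x + 5 plus random noise and compares lm()'s estimates with the closed-form least-squares formulas b = cov(x, y) / var(x) and c = mean(y) - b · mean(x).

```r
# Hypothetical data generated from y = 2x + 5 + e with random noise
set.seed(42)
x <- 1:50
y <- 2 * x + 5 + rnorm(50, sd = 3)

# Fit y = b*x + c + e with lm()
fit <- lm(y ~ x)
coef(fit)  # "(Intercept)" is c, "x" is b

# Closed-form least-squares estimates for comparison
b <- cov(x, y) / var(x)
c_hat <- mean(y) - b * mean(x)

# Same coefficients up to floating-point tolerance
all.equal(unname(coef(fit)), c(c_hat, b))
```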

For multiple regression, the model in matrix form is:

$$Y = XB + e$$

where Y is the vector of response values, X is the matrix of predictor variables (with a leading column of 1s for the intercept), B is the vector of coefficients and e is the vector of errors.

One could use the lm() function to fit the multiple regression model as well:

lm( responseVariable ~ predictorVar1 + predictorVar2 + predictorVar3 )
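Similarly, for Y = XB + e the least-squares coefficients solve the normal equations (XᵀX)B = XᵀY. The sketch below, using hypothetical simulated data with two predictors, builds X with model.matrix() (which adds the column of 1s for the intercept) and checks that solving the normal equations gives the same coefficients as lm(). lm() itself uses a QR decomposition, which is numerically more stable than forming XᵀX directly.

```r
# Hypothetical data: two predictors and a response built from known coefficients
set.seed(1)
n <- 100
x1 <- runif(n)
x2 <- runif(n)
y <- 1 + 3 * x1 - 2 * x2 + rnorm(n, sd = 0.5)
dat <- data.frame(y, x1, x2)

# Fit Y = XB + e with lm()
fit <- lm(y ~ x1 + x2, data = dat)

# Build X explicitly: model.matrix() adds a column of 1s for the intercept
X <- model.matrix(~ x1 + x2, data = dat)

# Solve the normal equations (X'X) B = X'Y for B
B <- solve(crossprod(X), crossprod(X, dat$y))

# Both approaches give the same coefficients (up to floating-point tolerance)
all.equal(unname(coef(fit)), as.vector(B))
```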

- Analyze the Regression Model: One could use the summary() function to analyze the regression model (simple or multiple). It reports the distribution of the residuals (minimum, quartiles, maximum), the coefficients (estimate, standard error, t value and p-value), the residual standard error, R-squared and adjusted R-squared, and the F-statistic.
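As a sketch using the built-in mtcars data set (fuel efficiency mpg modelled on weight wt and horsepower hp, chosen here only for illustration), the pieces of the summary can also be extracted programmatically:

```r
# Illustrative model on built-in data: mpg explained by weight and horsepower
fit <- lm(mpg ~ wt + hp, data = mtcars)
s <- summary(fit)

print(s)          # residual quantiles, coefficient table, R-squared, F-statistic
coef(s)           # matrix with columns Estimate, Std. Error, t value, Pr(>|t|)
s$sigma           # residual standard error
s$r.squared       # R-squared
s$adj.r.squared   # adjusted R-squared
s$fstatistic      # F value with numerator and denominator degrees of freedom
```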

- Come up with one or more models: While analyzing your data, you may find that a model does not fit the data well. One way to check is to examine the coefficients and related plots; the coefplot package can be used for this purpose. You could then come up with different models, for example by adding or removing predictors, to improve the fit. The sign of a coefficient (positive or negative) tells the direction of the effect that the predictor variable has on the response variable, and its size tells how much the response changes per unit change in that predictor, holding the other predictors constant.
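The sketch below, again on mtcars and chosen only for illustration, fits two candidate models and inspects their coefficients with coef() and confint(). It then compares them with AIC() and anova(), base-R tools swapped in here rather than the coefplot approach mentioned above; the coefplot call is left commented out in case the package is not installed.

```r
# Two candidate models for fuel efficiency (mpg) on the built-in mtcars data
m1 <- lm(mpg ~ wt, data = mtcars)
m2 <- lm(mpg ~ wt + hp, data = mtcars)

# Coefficients: the sign gives the direction of each effect, the size its magnitude
coef(m1)
coef(m2)

# 95% confidence intervals for the coefficients of the larger model
confint(m2)

# Optional coefficient plot, if the coefplot package is installed
# coefplot::coefplot(m2)

# Compare the models: a lower AIC is preferred
AIC(m1, m2)

# F-test of whether adding hp improves on m1 (m1 is nested in m2)
comparison <- anova(m1, m2)
comparison
```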

- Predict using one of the models: Once you have analyzed the regression models and chosen one, use it to predict the response for new data. One could use the following command:

# se.fit: TRUE or FALSE; interval: "prediction" or "confidence"; level: a value between 0 and 1
predictVar <- predict(modelVar, newdata = dataFrameVar, se.fit = TRUE, interval = "prediction", level = 0.95)

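With an interval requested, predict() returns the predicted values (fit) along with their lower (lwr) and upper (upr) bounds; with se.fit = TRUE, these are returned in the fit element of a list, together with the standard errors. The width of the interval indicates how precise the predictions are: a very wide interval suggests that the model may not be precise enough for the purpose at hand.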
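A sketch with mtcars (the new weights in new_cars are hypothetical) shows the shape of predict()'s output for both interval types, and that prediction intervals are wider than confidence intervals because they also account for the scatter of individual observations around the fitted line.

```r
# Fit a simple model on the built-in mtcars data
fit <- lm(mpg ~ wt, data = mtcars)

# Hypothetical new observations (car weights in 1000 lbs)
new_cars <- data.frame(wt = c(2.5, 3.5))

# 95% prediction interval for individual new cars
pred_int <- predict(fit, newdata = new_cars, interval = "prediction", level = 0.95)
# 95% confidence interval for the mean mpg at those weights
conf_int <- predict(fit, newdata = new_cars, interval = "confidence", level = 0.95)

pred_int  # matrix with columns fit, lwr, upr
conf_int

# With se.fit = TRUE the result is a list; the interval matrix is in $fit
with_se <- predict(fit, newdata = new_cars, interval = "confidence", se.fit = TRUE)
with_se$se.fit  # standard errors of the fitted means

# Prediction intervals are wider than confidence intervals
(pred_int[, "upr"] - pred_int[, "lwr"]) > (conf_int[, "upr"] - conf_int[, "lwr"])
```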

Author: Ajitesh Kumar
