Weight decay is a popular regularization technique in machine learning that helps models generalize better to unseen data. In this post, we’ll take a closer look at what weight decay is and how it works. We’ll also discuss some of its benefits and explore some possible applications. As data scientists, it is important to understand weight decay because it helps in building machine learning models with higher generalization performance. Stay tuned!

What is weight decay and how does it work?

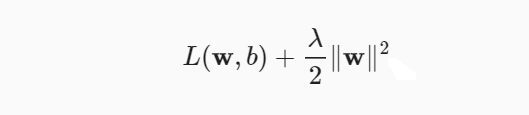

Weight decay is a regularization technique that constrains the size of the weights in a machine learning model. It is one of the most widely used regularization techniques for parametric machine learning models. Weight decay is also known as L2 regularization, because it penalizes weights according to their L2 norm. With weight decay, the original objective of minimizing the prediction loss on the training data is replaced with a new objective: minimizing the sum of the prediction loss and a penalty term that is proportional to the sum of the squares of the weights. This is what the new loss function looks like with weight decay:

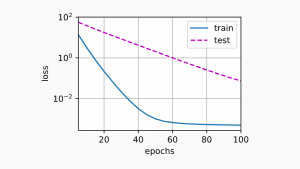
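Written out, a common form of this objective is sketched below. The symbol L_reg and the factor of 1/2 are assumptions made here for illustration (the 1/2 simply cancels when differentiating); the figure may use a different constant.

$$
L_{\text{reg}}(\mathbf{w}, b) = L(\mathbf{w}, b) + \frac{\lambda}{2}\,\lVert \mathbf{w} \rVert_2^2
$$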

In the above equation, L(w, b) represents the original loss function before the L2 norm (weight decay) term is added. When lambda is 0, the objective reduces to the original loss function. When lambda is greater than 0, the optimizer must trade off fitting the training data against keeping the weight vector small, and larger values of lambda penalize large weights more strongly. If the weight vector grows too large, the learning algorithm focuses on minimizing the L2 weight norm rather than the training error. In general, weight decay improves the generalization performance of a machine learning model by reducing overfitting. It does this by penalizing large weights, which encourages the model to learn simpler functions that are less likely to overfit the training data. Weight decay is typically used with stochastic gradient descent and can be applied to any differentiable loss function. It has been shown to improve the generalization performance of many types of machine learning models, including deep neural networks. Weight decay is usually implemented by modifying the update rule so that each weight update depends not only on the gradient of the training loss but also on the weight decay term.
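The modified update rule can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes a linear model without a bias term, a mean squared error loss, full-batch gradient descent, and a penalty of the form (lambda / 2) times the squared L2 norm. The function names, the toy data, and the hyperparameter values are all hypothetical.

```python
def mse_grads(weights, xs, ys):
    """Gradient of the mean squared error for a linear model without bias."""
    n = len(xs)
    grads = [0.0] * len(weights)
    for x, y in zip(xs, ys):
        err = sum(w * xi for w, xi in zip(weights, x)) - y
        for j, xi in enumerate(x):
            grads[j] += 2.0 * err * xi / n
    return grads


def step(weights, grads, lr, weight_decay):
    """One gradient step on loss + (weight_decay / 2) * ||w||^2.

    The penalty contributes weight_decay * w to the gradient of each weight.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


def fit(xs, ys, weight_decay, lr=0.05, steps=500):
    """Run gradient descent from zero-initialized weights."""
    weights = [0.0] * len(xs[0])
    for _ in range(steps):
        weights = step(weights, mse_grads(weights, xs, ys), lr, weight_decay)
    return weights


# Toy data generated by y = 2 * x1 - 1 * x2 (an assumption for illustration).
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
ys = [2.0, -1.0, 1.0, 3.0]

w_plain = fit(xs, ys, weight_decay=0.0)  # lambda = 0: original loss only
w_decay = fit(xs, ys, weight_decay=1.0)  # lambda > 0: weights are pulled toward zero
```

For plain gradient descent, the same step can be rewritten as `w * (1 - lr * weight_decay) - lr * g`, which shows where the name comes from: every step first shrinks ("decays") each weight by a constant factor and then applies the usual gradient update.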

A related technique adds the L1 norm of the weight vector to the loss instead, which is called Lasso regularization. This penalty drives some of the weights to exactly zero, typically those whose magnitude falls below a certain threshold. It is mostly used for feature selection.
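One way to see the thresholding behaviour is the soft-thresholding step that proximal methods use to handle an L1 penalty. The sketch below contrasts it with the multiplicative shrinkage that an L2 penalty produces. The function names and the sample values are hypothetical, and the choice of a proximal step is an assumption made for illustration; other solvers handle the L1 penalty differently.

```python
def soft_threshold(weights, threshold):
    """L1-style step: weights within the threshold become exactly zero,
    all other weights move toward zero by the threshold amount."""
    out = []
    for w in weights:
        if abs(w) <= threshold:
            out.append(0.0)
        elif w > 0:
            out.append(w - threshold)
        else:
            out.append(w + threshold)
    return out


def l2_shrink(weights, lr, weight_decay):
    """L2-style step: every weight is scaled by the same factor,
    so nonzero weights get smaller but do not become exactly zero."""
    return [w * (1.0 - lr * weight_decay) for w in weights]


weights = [0.05, -0.3, 1.0]
l1_result = soft_threshold(weights, threshold=0.1)
l2_result = l2_shrink(weights, lr=0.1, weight_decay=1.0)
```

Here the first weight is removed entirely by the L1-style step, while the L2-style step keeps all three weights nonzero and only scales them down.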

Weight decay is often used in conjunction with other regularization techniques, such as early stopping or dropout, to further improve the model's accuracy on unseen data. It is a relatively simple technique that can be applied to a wide variety of machine learning models.

Weight decay is used with the following kinds of models:

- Linear regression models

- Logistic regression models (binary, multinomial, etc.)

- Artificial neural networks such as multi-layer perceptrons, convolutional neural networks, and recurrent neural networks
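For the linear regression case, the effect of the penalty can be seen directly in a closed-form solution. The sketch below assumes the simplest possible setting: a single feature, no intercept, and the objective sum((w * x - y)^2) + lambda * w^2. The function name and the data are hypothetical.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form minimizer of sum((w * x - y)^2) + lam * w^2 for one feature."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + lam)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2 * x

print(ridge_weight(xs, ys, lam=0.0))   # 2.0 (no penalty recovers the true slope)
print(ridge_weight(xs, ys, lam=14.0))  # 1.0 (the penalty shrinks the slope)
```

Because lambda only appears in the denominator, increasing it always pulls the learned weight closer to zero in this setting.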

What are the benefits of weight decay?

Some benefits of using weight decay include:

- Improved generalization performance: Weight decay is used to reduce the generalization error of a machine learning model by preventing overfitting.

- Reduced complexity: Weight decay can reduce the effective complexity of a machine learning model by shrinking its weights toward zero. Exact sparsity, where weights become zero, is more characteristic of the L1 penalty.

- Reduced overfitting: Weight decay helps reduce overfitting by penalizing large weights. This encourages the model to learn simpler functions that are less likely to overfit the training data.

- Improved convergence: Weight decay can help an optimization algorithm converge by keeping the weights small, which can make the optimization problem better conditioned.

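- Simpler functions: Smaller weights tend to produce smoother functions that are less sensitive to noise in the training data.

- Better deep neural networks: Deep neural networks often generalize better when trained with weight decay.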

- Efficient training: In some cases, weight decay can improve the efficiency of training by reducing the time required to converge on a solution.

What are some possible applications of weight decay in machine learning?

Some possible applications for weight decay include:

- Image classification

- Object detection

- Speech recognition

- Natural language processing

- Regression and classification problems using linear and logistic regression models respectively

Weight decay is a regularization technique that is used in machine learning to reduce the complexity of a model and prevent overfitting. It has been shown to improve the generalization performance of many types of machine learning models, including deep neural networks. Weight decay can be implemented by modifying the update rule for the weights such that the gradient is not only based on the training data but also on the weight decay term. It is a relatively simple technique that can be applied to a wide variety of machine learning models.
