Artificial Intelligence (AI) agents are becoming an integral part of our lives. Imagine asking your virtual assistant whether you need an umbrella tomorrow, or having it remind you of an important meeting. These agents now help us with weather forecasts, daily task management, and much more. But what exactly are AI agents, and how do they work? In this blog post, we’ll break down the inner workings of AI agents using an easy-to-understand framework, exploring the key components of an agent and how they work together to provide weather updates or manage tasks.

What are AI Agents?

AI agents are artificial entities that display intelligent behavior while interacting with their environment, such as recognizing spoken commands, identifying objects in images, or responding to questions in natural language. Like humans, they perceive linguistic and visual inputs from the environment and reason about those inputs. They then plan different sets of actions, select the most appropriate sequence of actions (decision-making), and finally perform the actions. At the foundation of these AI agents are large language models (LLMs) and vision-language models (VLMs). LLMs and VLMs give AI agents human-like characteristics such as linguistic proficiency and visual cognition, as well as cognitive traits like contextual memory, intuitive reasoning, planning, and decision-making.

An AI agent that can perceive environmental inputs in more than one form, such as natural-language text and visuals, is called a multi-modal agent AI (MAA) system. For example, an AI assistant that simultaneously analyzes spoken commands and the corresponding gestures to perform tasks is an MAA system.

The following design principles can guide the creation of an AI agent. They are described in detail in the paper Agent AI: Surveying the Horizons of MultiModal Interaction.

- Leverage existing pre-trained models and pre-training strategies to effectively equip agents with a robust understanding of key modalities, such as text and visual inputs. Additionally, create domain-specific LLMs by fine-tuning these foundational models to cater to specialized applications, ensuring that the agents can deliver highly accurate and context-aware outputs within specific fields. Retrieval-Augmented Generation (RAG) techniques can further enhance these systems by enabling the integration of real-time external knowledge retrieval, ensuring that outputs remain relevant and up-to-date.

- Enable agents to develop sufficient long-term task-planning capabilities.

- Integrate a memory framework that allows learned knowledge to be encoded and retrieved when needed.

- Utilize environmental feedback to train the agent effectively in selecting appropriate actions.

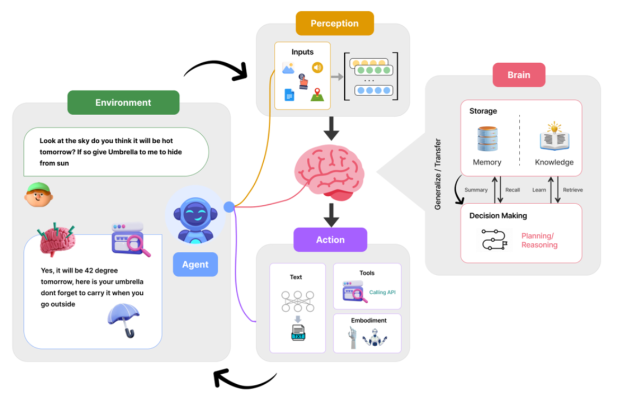

The image above illustrates how AI agents operate: they interact with the environment, process inputs through perception, make decisions using LLM capabilities, and take actions tailored to user needs and context. The sections below walk through each of these stages.

1. The Environment: Where It All Begins

AI agents exist to interact with their environment. The environment includes everything the agent can perceive and act upon, such as:

- A user’s query: For example, “Do you think it will be hot tomorrow? If so, give me an umbrella to hide from the sun.”

- External data sources: Weather APIs, geographical data, or sensory inputs.

The environment acts as the starting point, where the AI agent gathers raw information to begin processing.
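As a rough illustration, the environment described above can be modeled as a small data container. This is only a sketch: the `Environment` class, its field names, and the hard-coded forecast are hypothetical stand-ins for a real user interface and weather API.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Everything the agent can observe: the user's query plus external data."""

    user_query: str
    # Hypothetical stand-in for a weather API response, keyed by day.
    forecasts_celsius: dict[str, float] = field(default_factory=dict)

    def observe(self) -> dict:
        """Return the raw, unprocessed inputs the agent starts from."""
        return {"query": self.user_query, "forecasts": dict(self.forecasts_celsius)}


env = Environment(
    user_query="Do you think it will be hot tomorrow?",
    forecasts_celsius={"tomorrow": 42.0},  # illustrative value from the example above
)
raw_inputs = env.observe()
```

At this stage nothing has been interpreted yet; `raw_inputs` is just the material that perception works on.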

2. Perception: Understanding the Inputs

Once the agent receives input from the environment, its perception system kicks in. The perception phase involves:

- Collecting data: The agent gathers inputs like text, numbers, or even images.

- Processing the data: These inputs are analyzed and converted into meaningful information. For instance, the agent dissects phrases like “hot tomorrow,” mapping them to relevant datasets such as temperature forecasts. It identifies key terms, resolves ambiguities, and links them to actionable insights, such as recognizing that “hot” pertains to high temperatures and “tomorrow” specifies a future timeframe.

Perception is the foundation for the agent’s ability to make sense of the world.
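To make the "hot tomorrow" example concrete, here is a minimal perception sketch. It uses simple keyword tables where a real agent would typically rely on an LLM or a language-understanding model; the table contents and the `perceive` function are assumptions made for illustration.

```python
import re

# Hypothetical keyword tables; a real agent would use an LLM or NLU model here.
CONDITION_KEYWORDS = {
    "hot": "high_temperature",
    "cold": "low_temperature",
    "rain": "precipitation",
}
TIME_KEYWORDS = {"today": 0, "tomorrow": 1}


def perceive(query: str) -> dict:
    """Map raw text to a structured intent: which condition, and how many days ahead."""
    words = re.findall(r"[a-z]+", query.lower())
    condition = next((CONDITION_KEYWORDS[w] for w in words if w in CONDITION_KEYWORDS), None)
    days_ahead = next((TIME_KEYWORDS[w] for w in words if w in TIME_KEYWORDS), None)
    return {"condition": condition, "days_ahead": days_ahead}


percept = perceive("Do you think it will be hot tomorrow?")
# percept == {"condition": "high_temperature", "days_ahead": 1}
```

The structured result is what links "hot" to temperature data and "tomorrow" to a specific forecast day.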

3. The Brain: Decision-Making and Learning

The brain is the AI agent’s powerhouse, where complex processing and advanced decision-making occur. One of the most critical components of this ‘brain’ is a Large Language Model (LLM), which plays a pivotal role in enabling advanced reasoning and decision-making capabilities.

The Role of LLMs as the Brain

- Understanding Context: LLMs, like GPT models, can interpret complex queries by understanding the nuances of language, enabling the AI agent to process human-like conversations accurately.

- Knowledge Representation: LLMs act as a repository of vast knowledge, allowing the agent to reference diverse topics and provide informed responses.

- Reasoning and Inference: These models analyze inputs and infer logical outcomes, such as determining weather patterns or making recommendations.

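- Learning Through Feedback: An agent built on an LLM can improve over time by learning from user interactions, for example by storing feedback in memory or by periodically fine-tuning the model. The model's weights do not change on their own during a conversation.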

For instance, when a user asks, “Do you think it will be hot tomorrow?”, the LLM processes the query, retrieves weather data, and crafts a human-like response such as, “Yes, it will be 42 degrees tomorrow. Here’s your umbrella; don’t forget to carry it when you go outside.”

By serving as the core of the decision-making process, LLMs empower AI agents to deliver highly intelligent and context-aware outputs. They adapt to new contexts by leveraging vast pre-trained knowledge and dynamically updating their responses based on the nuances of user input, ensuring that their outputs remain relevant and accurate across diverse scenarios.

a. Storage

- Memory: Stores previous interactions and relevant data.

- Knowledge: Maintains a database of learned models and rules.

The agent can summarize, recall, learn, and retrieve information from storage to make decisions. For example, it might pull historical weather data to understand patterns.
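The split between memory and knowledge can be sketched as follows. The `Storage` class, its method names, and the threshold entry are hypothetical; production agents often use a vector database or similar store instead of in-process Python objects.

```python
from collections import deque


class Storage:
    """Toy storage: short-term interaction memory plus a longer-lived knowledge table."""

    def __init__(self, max_interactions: int = 100):
        # Memory: the most recent (query, response) pairs; oldest entries drop off.
        self.memory: deque = deque(maxlen=max_interactions)
        # Knowledge: learned facts and rules, keyed by name.
        self.knowledge: dict = {}

    def remember(self, query: str, response: str) -> None:
        self.memory.append((query, response))

    def recall(self, keyword: str) -> list:
        """Retrieve past interactions whose query mentions the keyword."""
        return [(q, r) for q, r in self.memory if keyword.lower() in q.lower()]


store = Storage(max_interactions=2)
store.knowledge["hot_threshold_celsius"] = 30.0  # assumed rule, for illustration only
store.remember("Will it be hot tomorrow?", "Yes, 42 degrees Celsius.")
store.remember("Remind me about my meeting", "Reminder set.")
weather_history = store.recall("hot")
```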

b. Decision-Making

The agent employs planning and reasoning to determine the best course of action. For instance:

- Planning: Analyzing the weather forecast to decide if an umbrella is necessary.

- Reasoning: Concluding that a 42-degree Celsius day warrants the use of an umbrella for sun protection.

The brain’s decision-making capabilities allow the AI agent to handle complex tasks and provide intelligent responses.
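The planning and reasoning steps above can be approximated with a simple rule. In this sketch the 30-degree threshold and the action names are assumptions chosen for illustration; in an LLM-based agent the model itself would usually produce the plan.

```python
HOT_THRESHOLD_CELSIUS = 30.0  # assumed cutoff for "hot"; not taken from any standard


def decide(forecast_celsius: float, threshold: float = HOT_THRESHOLD_CELSIUS) -> dict:
    """Reason about the forecast, then plan the sequence of actions to take."""
    # Reasoning: is the forecast at or above what we consider hot?
    is_hot = forecast_celsius >= threshold
    # Planning: always answer the user; add the umbrella only when it is hot.
    plan = ["text_response"]
    if is_hot:
        plan.append("hand_over_umbrella")
    return {"is_hot": is_hot, "plan": plan}


decision = decide(42.0)
# decision == {"is_hot": True, "plan": ["text_response", "hand_over_umbrella"]}
```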

4. Action: Delivering the Output

After processing and decision-making, the AI agent takes action tailored to the environment and specific user needs, ensuring that its responses and outputs are both relevant and context-aware. Actions can be categorized into:

- Text Responses: Providing answers to user queries, such as, “Yes, it will be 42 degrees tomorrow. Here’s your umbrella; don’t forget to carry it when you go outside.”

- Tool Usage: Calling APIs or using external tools to fetch additional data.

- Embodiment: Performing physical actions, such as a robotic assistant handing over an umbrella.

The agent’s actions complete the interaction loop, delivering value to the user.
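One common way to organize these three kinds of action is a registry that maps action names to handler functions. The sketch below assumes hypothetical handlers; the tool call and the robot command are plain stand-ins that return strings rather than contacting a real API or device.

```python
from typing import Callable


def text_response(context: dict) -> str:
    """Text response: answer the user's question."""
    return f"Yes, it will be {context['temperature_celsius']:.0f} degrees Celsius tomorrow."


def tool_usage(context: dict) -> str:
    """Tool usage: stand-in for an API call (no network request is made here)."""
    return f"fetched forecast for {context['location']}"


def embodiment(context: dict) -> str:
    """Embodiment: stand-in for a physical robot command."""
    return "umbrella handed over"


ACTIONS: dict[str, Callable[[dict], str]] = {
    "text_response": text_response,
    "tool_usage": tool_usage,
    "embodiment": embodiment,
}


def act(plan: list[str], context: dict) -> list[str]:
    """Run each planned action in order and collect the results."""
    return [ACTIONS[name](context) for name in plan]


results = act(
    ["tool_usage", "text_response", "embodiment"],
    {"temperature_celsius": 42.0, "location": "home"},  # illustrative context
)
```

Keeping the handlers behind a registry means new action types can be added without changing the `act` function.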

5. The Feedback Loop: Learning and Improving

AI agents are designed to improve over time. They leverage feedback from their actions and user interactions to refine their processes. This feedback loop enables the agent to:

- Generalize knowledge from past experiences.

- Transfer learned insights to new scenarios.

For example, after repeatedly providing weather-based recommendations, the agent might enhance its understanding of temperature thresholds for different users. Beyond weather, this feedback loop could also apply to tasks like personalizing health tips based on fitness data or optimizing energy usage patterns for smart home devices.
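The temperature-threshold example can be sketched as a small update rule. This is one simple possibility, not the only way to learn from feedback: the `update_threshold` function and its 0.5-degree step size are assumptions for illustration.

```python
def update_threshold(
    threshold: float, temperature: float, felt_hot: bool, step: float = 0.5
) -> float:
    """Nudge a user's personal 'hot' threshold after one piece of feedback."""
    predicted_hot = temperature >= threshold
    if predicted_hot and not felt_hot:
        return threshold + step  # agent called it hot too eagerly: raise the bar
    if not predicted_hot and felt_hot:
        return threshold - step  # agent missed a day the user found hot: lower the bar
    return threshold  # prediction matched the user's experience: no change


threshold = 30.0  # assumed starting value
threshold = update_threshold(threshold, temperature=31.0, felt_hot=False)
# threshold is now 30.5
```

Repeating this update over many interactions lets the threshold drift toward each user's own sense of what counts as hot.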

Bringing It All Together

An AI agent’s workflow is a continuous cycle of:

- Perceiving inputs from the environment.

- Processing and understanding these inputs.

- Using its “brain” for reasoning and decision-making.

- Taking action and delivering output.

- Learning from feedback to improve future interactions.
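The cycle above can be sketched end to end as a toy agent. Everything here is a simplified assumption: the `WeatherAgent` class, the keyword check that stands in for LLM-based perception, the 30-degree starting threshold, and the feedback step size.

```python
class WeatherAgent:
    """Toy agent that runs one perceive -> decide -> act -> learn cycle per query."""

    def __init__(self, hot_threshold_celsius: float = 30.0):
        self.hot_threshold_celsius = hot_threshold_celsius  # assumed starting value
        self.memory: list[tuple[str, str]] = []

    def perceive(self, query: str) -> bool:
        # Crude substring check standing in for real language understanding.
        return "hot" in query.lower()

    def decide(self, asks_about_heat: bool, forecast_celsius: float) -> str:
        if not asks_about_heat:
            return "unknown"
        return "hot" if forecast_celsius >= self.hot_threshold_celsius else "mild"

    def act(self, decision: str, forecast_celsius: float) -> str:
        if decision == "hot":
            return f"Yes, it will be {forecast_celsius:.0f} degrees Celsius. Take an umbrella."
        if decision == "mild":
            return f"No, it will be {forecast_celsius:.0f} degrees Celsius."
        return "Sorry, I can only answer questions about heat."

    def learn(self, decision: str, user_agreed: bool, step: float = 0.5) -> None:
        # Adjust the threshold only when the user disagreed with the decision.
        if user_agreed:
            return
        if decision == "hot":
            self.hot_threshold_celsius += step
        elif decision == "mild":
            self.hot_threshold_celsius -= step

    def run(self, query: str, forecast_celsius: float, user_agreed: bool = True) -> str:
        decision = self.decide(self.perceive(query), forecast_celsius)
        response = self.act(decision, forecast_celsius)
        self.memory.append((query, response))
        self.learn(decision, user_agreed)
        return response


agent = WeatherAgent()
reply = agent.run("Do you think it will be hot tomorrow?", forecast_celsius=42.0)
```

In this sketch the forecast is passed in directly; a fuller version would fetch it through a tool call during the action or decision stage.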

Final Thoughts

Understanding how AI agents work can help us appreciate the technology behind these intelligent systems. This post covered their interaction with the environment, perception of inputs, decision-making powered by LLMs, the actions they take, and the feedback loops that drive continuous improvement. Whether it’s predicting the weather, managing schedules, or assisting with personal tasks, AI agents are here to stay and will only become more capable as they continue to learn and evolve.
