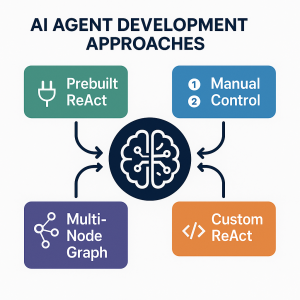

AI agents are autonomous systems that combine three core components: a reasoning engine (powered by an LLM), tools for external actions, and memory to maintain context. Unlike traditional AI-powered chatbots (created using DialogFlow, AWS Lex), agents can plan multi-step workflows, use specialized tools, and make decisions based on previous results while interacting with the end user. In this blog, we will learn about different approaches for building agentic systems. The blog presents Python code examples to explain each approach for creating AI agents. Before getting into the approaches, let's quickly look at the sample design and the setup code that form the basis for the code used in each approach.

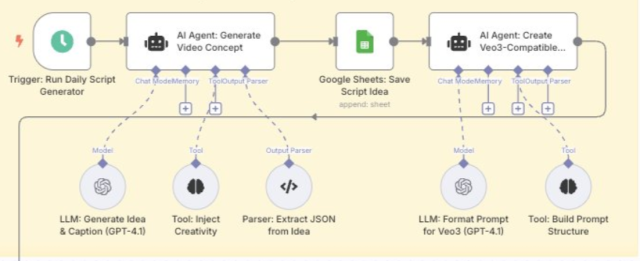

To explain the different approaches, I have used a sample agentic system design that represents a two-stage video production pipeline. The first AI agent generates creative concepts using GPT-4.1, creativity injection tools, and JSON parsers, then saves the results to Google Sheets. The second AI agent transforms these concepts into Veo3-compatible prompts using formatting tools and prompt structure builders. The accompanying picture shows this agentic workflow.

Notice in the picture that both AI agents have three key components: reasoning, memory, and tools.

Python Setup Code for Building Agents

In the code below, note the following:

- AgentState is the shared data structure that flows through every node in your workflow. It applies to the approach that uses a LangGraph workflow to create the AI agent. The key variables are messages and trigger: messages stores the conversation history between the user, agent, and tools, while trigger holds the initial user input that starts the agent workflow.

- The tools are defined as functions (such as inject_creativity and extract_json_structure; refer to the concept-generation AI agent in the picture above) and decorated with @tool. The @tool decorator lets the agent discover the tools and uses each function's docstring and signature as the tool's description and argument schema.

- In this blog, the three agent components (reasoning, tool use, and memory) are implemented using the LangChain and LangGraph libraries.

    import json
    from typing import Sequence

    from langchain.chat_models import init_chat_model
    from langchain_core.messages import BaseMessage, HumanMessage
    from langchain_core.tools import tool
    from langgraph.graph import StateGraph, START, END
    from langgraph.graph.message import add_messages
    from langgraph.prebuilt import create_react_agent
    from typing_extensions import TypedDict, Annotated


    # State definition shared by all approaches
    class AgentState(TypedDict):
        messages: Annotated[Sequence[BaseMessage], add_messages]  # conversation history
        trigger: str  # initial user input that starts the workflow
        video_concept: str
        tool_outputs: dict
        # Intermediate results written by the multi-node graph (Approach 3)
        initial_concept: str
        enhanced_concept: str
        structured_concept: str


    # Define tools using the @tool decorator
    @tool
    def inject_creativity(concept: str) -> str:
        """Enhances video concepts with creative and trending elements"""
        return f"ENHANCED: {concept} + viral hooks + trending elements"


    @tool
    def extract_json_structure(text: str) -> str:
        """Converts concept text into structured JSON format"""
        # json.dumps escapes quotes and newlines in the concept text
        return json.dumps({"concept": text, "duration": "60s", "style": "dynamic"})
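The `Annotated[..., add_messages]` annotation on messages attaches a reducer to that field: updates to it are merged into the existing value, while plain fields such as trigger are simply overwritten. The following is a plain-Python sketch of that idea, not LangGraph's implementation. `append_messages`, `SketchState`, and `apply_update` are hypothetical names used for illustration, and the real add_messages does more than concatenate (for example, it matches messages by ID).

```python
from typing import Annotated, TypedDict, get_type_hints


def append_messages(existing: list, new: list) -> list:
    """Simplified stand-in for add_messages: concatenate old and new messages."""
    return list(existing) + list(new)


class SketchState(TypedDict):
    messages: Annotated[list, append_messages]  # merged through the reducer
    trigger: str                                # plain field: last write wins


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state, honouring per-field reducers."""
    hints = get_type_hints(SketchState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        # Annotated types expose their extra arguments as __metadata__
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and key in merged:
            merged[key] = metadata[0](merged[key], value)
        else:
            merged[key] = value
    return merged


state = {"messages": ["user: make a cat video"], "trigger": "cats"}
state = apply_update(state, {"messages": ["agent: here is a concept"]})
state = apply_update(state, {"trigger": "dogs"})
print(state)
```

After the two updates, messages holds both entries in order, whereas trigger has been replaced by the latest value.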

Approach 1: Prebuilt ReAct Agent

In this approach, the ReAct (Reasoning + Acting) pattern is used, in which the agent automatically alternates between thinking and tool usage. The steps are spelled out in the prompt. The following code creates the AI agent that generates concepts for video generation; refer to the agentic workflow image presented earlier in this blog. This approach is a popular choice that covers most use cases (roughly 90%, as a rule of thumb), because create_react_agent handles the ReAct logic automatically.

    def approach_1_prebuilt_react_agent(state: AgentState) -> AgentState:
        tools = [inject_creativity, extract_json_structure]
        model = init_chat_model("gpt-4o-mini", temperature=0.7)

        # Create agent using LangGraph's prebuilt function
        agent = create_react_agent(
            model=model,
            tools=tools,
            prompt="""You are a creative video concept generator.
            For each request:
            1. Generate an initial creative concept
            2. Use inject_creativity to enhance it
            3. Use extract_json_structure to format the output""",
        )

        result = agent.invoke({
            "messages": [HumanMessage(content=f"Generate concept for: {state['trigger']}")]
        })

        return {**state, "video_concept": result["messages"][-1].content}
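To make the "alternates between thinking and tool usage" idea concrete, here is a minimal plain-Python sketch of the loop that a prebuilt ReAct agent automates. It is an illustration under stated assumptions, not how create_react_agent is implemented. `scripted_model` is a hypothetical stand-in for the LLM that requests one tool call and then answers, and the message dictionaries are a simplified format invented for this sketch.

```python
from typing import Callable


def inject_creativity(concept: str) -> str:
    """Enhances video concepts with creative and trending elements"""
    return f"ENHANCED: {concept} + viral hooks + trending elements"


# Tool registry: a real agent would also show each docstring to the model.
TOOLS: dict[str, Callable[[str], str]] = {"inject_creativity": inject_creativity}


def scripted_model(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM: request a tool once, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"role": "assistant", "tool": "inject_creativity", "input": "cat skateboarding"}
    return {"role": "assistant", "content": f"Final concept: {tool_results[-1]['content']}"}


def react_loop(user_request: str, max_steps: int = 5) -> list[dict]:
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        reply = scripted_model(messages)  # reason: decide the next step
        messages.append(reply)
        if "tool" not in reply:  # no tool requested, so this is the final answer
            break
        result = TOOLS[reply["tool"]](reply["input"])  # act: run the requested tool
        messages.append({"role": "tool", "content": result})  # observe the result
    return messages


history = react_loop("Generate concept for: cats")
print(history[-1]["content"])
```

The `max_steps` cap is a design choice for the sketch: it keeps the loop from running forever if the model never stops asking for tools.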

Approach 2: Manual Orchestration

Manual orchestration represents a choreographed approach to agent development where the developer explicitly defines when, how, and in what sequence tools are executed. Unlike autonomous agents that make decisions about tool usage, this approach follows a predetermined script that ensures predictable, repeatable outcomes. Use this approach when you need guaranteed tool execution order or predictable workflows.

    def approach_2_manual_orchestration(state: AgentState) -> AgentState:
        model = init_chat_model("gpt-4o-mini", temperature=0.7)

        # Step 1: Generate initial concept
        initial_prompt = f"Generate a creative video concept for: {state['trigger']}"
        initial_response = model.invoke([HumanMessage(content=initial_prompt)])
        initial_concept = initial_response.content

        # Step 2: Enhance with creativity tool
        enhanced_concept = inject_creativity.invoke({"concept": initial_concept})

        # Step 3: Structure as JSON
        structured_concept = extract_json_structure.invoke({"text": enhanced_concept})

        # Step 4: Final refinement
        final_prompt = f"Refine this concept: {enhanced_concept}"
        final_response = model.invoke([HumanMessage(content=final_prompt)])

        return {
            **state,
            "video_concept": final_response.content,
            "tool_outputs": {
                "initial": initial_concept,
                "enhanced": enhanced_concept,
                "structured": structured_concept,
            },
        }
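Because the step order is fixed in manual orchestration, each intermediate output can be checked before the next step runs. The sketch below shows one way to validate the output of the JSON structuring step. `validate_structured_concept` and `REQUIRED_KEYS` are hypothetical names, and the required keys are assumed from the shape that extract_json_structure returns.

```python
import json

REQUIRED_KEYS = {"concept", "duration", "style"}  # assumed from the tool's output shape


def validate_structured_concept(raw: str) -> dict:
    """Parse the structuring step's output and fail fast if it is malformed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"structured concept is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("structured concept must be a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"structured concept is missing keys: {sorted(missing)}")
    return data


good = json.dumps({"concept": 'A cat says "hi"', "duration": "60s", "style": "dynamic"})
print(validate_structured_concept(good)["duration"])

try:
    validate_structured_concept('{"concept": "no duration or style"}')
except ValueError as err:
    print(err)
```

A check like this could sit between Step 3 and Step 4, so that a malformed result stops the pipeline at a known point instead of surfacing later.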

Approach 3: Multi-Node Graph

Multi-node graph orchestration transforms a single agent workflow into a graph of specialized nodes, each responsible for a distinct function. Instead of one monolithic agent handling all tasks, you create a network of interconnected processing units that can work in parallel, make conditional decisions, and handle complex branching logic. The agentic workflow is built from the following LangGraph workflow components:

- Nodes: Processing units (LLM calls, tool executions, decision points). Nodes are the functional building blocks of your workflow, each performing one specific task. The single responsibility principle is a good basis for designing nodes.

- Edges: Data flow and execution dependencies. Edges create predetermined pathways between nodes, ensuring consistent execution order.

- State: State acts as the shared memory that flows through your workflow, carrying data between nodes.

- Conditional Routing: Conditional edges enable intelligent routing based on runtime conditions and state values.

LangGraph brings graph-based thinking to agent development. Understanding its core concepts—nodes, edges, state, and conditional routing—is essential for building sophisticated, production-ready AI systems.

    def create_approach_3_multi_node_graph():
        model = init_chat_model("gpt-4o-mini", temperature=0.7)

        # The nodes below read and write initial_concept, enhanced_concept and
        # structured_concept, so AgentState must declare those keys.

        def generate_initial_concept(state: AgentState) -> AgentState:
            prompt = f"Generate a creative video concept for: {state['trigger']}"
            response = model.invoke([HumanMessage(content=prompt)])
            return {**state, "initial_concept": response.content}

        def enhance_with_creativity(state: AgentState) -> AgentState:
            enhanced = inject_creativity.invoke({"concept": state["initial_concept"]})
            return {**state, "enhanced_concept": enhanced}

        def structure_as_json(state: AgentState) -> AgentState:
            structured = extract_json_structure.invoke({"text": state["enhanced_concept"]})
            return {**state, "structured_concept": structured}

        def finalize_concept(state: AgentState) -> AgentState:
            final_prompt = f"Create final concept based on: {state['enhanced_concept']}"
            response = model.invoke([HumanMessage(content=final_prompt)])
            return {**state, "video_concept": response.content}

        # Build the multi-node workflow
        workflow = StateGraph(AgentState)
        workflow.add_node("generate_initial", generate_initial_concept)
        workflow.add_node("enhance_creative", enhance_with_creativity)
        workflow.add_node("structure_json", structure_as_json)
        workflow.add_node("finalize", finalize_concept)

        # Chain them together
        workflow.add_edge(START, "generate_initial")
        workflow.add_edge("generate_initial", "enhance_creative")
        workflow.add_edge("enhance_creative", "structure_json")
        workflow.add_edge("structure_json", "finalize")
        workflow.add_edge("finalize", END)

        return workflow.compile()
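The graph above uses only fixed edges, so conditional routing is worth a small illustration. The following plain-Python sketch shows the idea of a router function that inspects the state and picks the next node. It is a toy runner written for this post, not LangGraph code; in LangGraph the comparable mechanism is `add_conditional_edges` on the StateGraph. The node names, the `attempts` counter, and the word-count rule are all hypothetical.

```python
from typing import Callable, Union

END = "__end__"  # local sentinel for this sketch, not imported from LangGraph


def draft(state: dict) -> dict:
    attempts = state.get("attempts", 0) + 1
    # Hypothetical generator: the draft gets longer on every attempt.
    return {**state, "attempts": attempts, "concept": "cat video " * attempts}


def finalize(state: dict) -> dict:
    return {**state, "video_concept": state["concept"].strip().upper()}


def route_after_draft(state: dict) -> str:
    """Router: retry until the draft has at least four words, capped at three attempts."""
    long_enough = len(state["concept"].split()) >= 4
    return "finalize" if long_enough or state["attempts"] >= 3 else "draft"


NODES: dict[str, Callable[[dict], dict]] = {"draft": draft, "finalize": finalize}
# An edge is either a fixed target (str) or a router function (conditional edge).
EDGES: dict[str, Union[str, Callable[[dict], str]]] = {
    "draft": route_after_draft,
    "finalize": END,
}


def run(start: str, state: dict) -> dict:
    current = start
    while current != END:
        state = NODES[current](state)
        edge = EDGES[current]
        current = edge(state) if callable(edge) else edge
    return state


result = run("draft", {"trigger": "cats"})
print(result["attempts"], result["video_concept"])
```

Here the first draft is too short, so the router sends the workflow back to the draft node once before moving on to finalize. The attempt cap guards against an endless retry loop.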

Conclusion

The choice of approach for creating AI agents depends on your specific business requirements, technical constraints, and organizational priorities. Choose manual orchestration when compliance is non-negotiable (for example, in financial services or healthcare), when you need greater predictability, and when debugging and maintenance are priorities. Choose the multi-node graph approach when the complexity of the workflow calls for modularity, or when different teams need to collaborate while each works on its own agents. Teams often start with manual orchestration and then go on to adopt the other approaches. It is important to note that every approach involves compromises between control and flexibility, speed and reliability, and simplicity and capability.
