Are you interested in using neural networks to solve complex regression problems, but not sure where to start? Sklearn’s MLPRegressor can help you get started with building neural network models for regression tasks. While Keras, TensorFlow, and PyTorch are powerful and widely used in deep learning, Sklearn’s MLPRegressor is still an excellent choice when you are starting out. Recall that Sklearn (scikit-learn) is one of the most popular Python machine learning libraries, and it provides a wide range of algorithms for classification, regression, clustering, dimensionality reduction, and more.

In this blog post, we will be focusing on training a neural network regression model using Sklearn MLPRegressor (Multi-layer Perceptron Regressor). We’ll go over different aspects of Sklearn MLPRegressor and how they work, as well as provide a step-by-step example of a neural network regression using Sklearn MLPRegressor.

Quick Overview – What is a Neural Network?

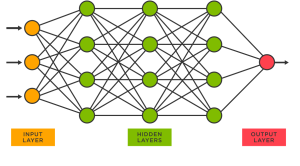

Neural networks are a family of machine learning / deep learning models loosely inspired by the way neurons in the human brain connect. They are composed of multiple layers of processing units (neurons); each neuron computes a weighted sum of its inputs and passes the result through an activation function. A network has an input layer (which receives the data, e.g., numeric features, images, audio, or video), one or more hidden layers (whose artificial neurons transform the data), and an output layer (which produces a result from the processed data). Neural networks are used in many applications, including computer vision, natural language processing, speech recognition, robotics, and more.

Neural network models take inputs at the input layer and produce outputs at the output layer, with one or more hidden layers in between. The connections between neurons carry weights (the strength of each connection), and each neuron has a bias (an offset that shifts the point at which the neuron activates). A network learns by adjusting its weights and biases over time to reduce the error between its outputs and the expected results. This process is known as training; the gradients that drive the adjustments are computed with backpropagation.
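
To make the roles of weights, biases, and backpropagation concrete, here is a minimal NumPy sketch of a network with one hidden layer trained by plain gradient descent. The toy data, layer size, learning rate, and variable names are all assumptions chosen for illustration; this is not how Sklearn implements training internally.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (illustrative only): y = sin(x) plus a little noise.
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X) + 0.1 * rng.normal(size=(200, 1))

# Weights live on the connections between layers, biases on the neurons.
n_hidden = 16
W1 = rng.normal(scale=0.5, size=(1, n_hidden))
b1 = np.zeros((1, n_hidden))
W2 = rng.normal(scale=0.5, size=(n_hidden, 1))
b2 = np.zeros((1, 1))


def forward(X):
    """Forward pass: input -> hidden layer (ReLU) -> linear output."""
    z1 = X @ W1 + b1
    h = np.maximum(z1, 0.0)
    return z1, h, h @ W2 + b2


learning_rate = 0.01  # assumed value, small enough to keep the updates stable
losses = []
for step in range(2000):
    z1, h, y_hat = forward(X)
    err = y_hat - y
    losses.append(float(np.mean(err ** 2)))  # mean squared error

    # Backpropagation: apply the chain rule from the output back to the input.
    grad_out = 2.0 * err / len(X)
    grad_W2 = h.T @ grad_out
    grad_b2 = grad_out.sum(axis=0, keepdims=True)
    grad_h = grad_out @ W2.T
    grad_z1 = grad_h * (z1 > 0)  # derivative of ReLU
    grad_W1 = X.T @ grad_z1
    grad_b1 = grad_z1.sum(axis=0, keepdims=True)

    # Gradient descent: move each parameter against its gradient.
    W1 -= learning_rate * grad_W1
    b1 -= learning_rate * grad_b1
    W2 -= learning_rate * grad_W2
    b2 -= learning_rate * grad_b2

print(f"MSE at start: {losses[0]:.3f}, MSE at end: {losses[-1]:.3f}")
```

The loop separates the two ideas in the paragraph above: backpropagation computes the gradients, and gradient descent uses them to update the weights and biases.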

There are different types of neural network architectures such as feedforward neural networks, convolutional neural networks, recurrent neural networks (including LSTMs), Transformers, and many more. Neural network models can be trained using different packages such as Keras, PyTorch, TensorFlow, and Sklearn. Each package has its own advantages and disadvantages depending on the task at hand and the user’s level of expertise.

Read and learn more about neural networks in my related blogs such as the following:

- Neural network explained with Perceptron example

- Neural network types and real-life examples

- Neural network quiz

Neural Network for Regression – Sklearn MLPRegressor

Sklearn MLPRegressor is a neural network model for regression tasks. It can learn complex, non-linear relationships between input and output variables. MLPRegressor implements a Multi-Layer Perceptron (MLP) trained with backpropagation, which computes the gradients used to adjust the weights between neurons in order to improve prediction accuracy. The weights are optimized by one of three solvers: adam (the default) and sgd, which are stochastic gradient-based methods, or lbfgs, a quasi-Newton method. Several parameters can be used to fine-tune the model’s performance, including the number and size of hidden layers (hidden_layer_sizes), the activation function (activation), the solver (solver), and the L2 regularization strength (alpha).
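
As a rough illustration of how these parameters shape the model, the sketch below fits an MLPRegressor on synthetic data and inspects the learned weight matrices. The dataset from make_regression and the specific layer sizes and max_iter value are assumptions picked for the example, not recommended settings.

```python
from sklearn.datasets import make_regression
from sklearn.neural_network import MLPRegressor

# Synthetic data with 5 input features (illustrative only).
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

model = MLPRegressor(
    hidden_layer_sizes=(32, 16),  # two hidden layers: 32 neurons, then 16
    activation="relu",            # activation used in the hidden layers
    solver="adam",                # optimizer that updates the weights
    max_iter=500,                 # assumed cap on training epochs
    random_state=0,
)
model.fit(X, y)  # may emit a ConvergenceWarning if 500 epochs is too few

# One weight matrix per pair of adjacent layers, one bias vector per layer.
print("Number of layers (input + hidden + output):", model.n_layers_)
print("Weight matrix shapes:", [w.shape for w in model.coefs_])
print("Bias vector shapes:", [b.shape for b in model.intercepts_])
```

Each entry in hidden_layer_sizes adds one hidden layer, so the tuple length sets the depth and each value sets the width of that layer.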

One advantage of MLPRegressor is that it can learn from data with many features, capturing interactions between inputs that a linear model would miss unless they were engineered by hand. Given enough training data and properly scaled inputs, it can provide accurate predictions on datasets containing hundreds of features. This makes it a candidate for applications such as predicting sales figures and customer satisfaction ratings.

Another benefit of MLPRegressor is that its hidden layers learn useful combinations of the input features during training, which reduces the need for manual feature engineering. Note, however, that it does not handle missing values or select features for you: fitting fails on inputs that contain NaN, so missing data points must be imputed or dropped first, and irrelevant or redundant features still take part in training. When dealing with large numbers of input variables, imputation, feature scaling, and any feature selection are best handled as preprocessing steps before the model.

One of the main disadvantages of using MLPRegressor over other regression algorithms is its lack of interpretability. This means that it can be difficult to understand why a particular model has made certain predictions and how different parameters have affected the outcome. As such, it can be challenging to explain to stakeholders why a certain decision was made and how reliable it is. Another major limitation of MLPRegressor is its propensity for overfitting. Since this type of algorithm works by building complex nonlinear relationships between inputs and outputs, it can be prone to memorizing the training data which leads to poor generalization on unseen data.
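
Two MLPRegressor parameters are commonly used to limit overfitting: alpha, which sets the strength of the L2 penalty on the weights, and early_stopping, which holds out part of the training data and stops training once the validation score stops improving. The sketch below is one possible configuration on synthetic data; the dataset and all parameter values are assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

# Synthetic noisy data (illustrative only).
X, y = make_regression(n_samples=400, n_features=10, noise=20.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

model = MLPRegressor(
    hidden_layer_sizes=(64, 64),
    alpha=0.01,               # L2 penalty: larger values shrink the weights more
    early_stopping=True,      # hold out validation data and monitor its score
    validation_fraction=0.1,  # share of the training data used for validation
    n_iter_no_change=10,      # stop after 10 epochs without improvement
    max_iter=1000,
    random_state=0,
)
model.fit(X_train, y_train)

train_r2 = model.score(X_train, y_train)
test_r2 = model.score(X_test, y_test)

# A large gap between the two scores is a typical sign of overfitting.
print("Epochs run:", model.n_iter_)
print("Train R^2:", train_r2)
print("Test R^2:", test_r2)
```

Comparing the training and test scores is a quick check on generalization; cross-validation gives a more reliable estimate when the dataset is small.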

Sklearn MLPRegressor Python Code

The following Sklearn Python code trains a regression model using MLPRegressor on the California housing dataset.

    from sklearn.neural_network import MLPRegressor
    from sklearn.model_selection import train_test_split
    from sklearn.datasets import fetch_california_housing

    # Load California housing data set
    housing = fetch_california_housing()
    X = housing.data
    y = housing.target

    # Create training/test data split
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)

    # Instantiate MLPRegressor
    nn = MLPRegressor(
        activation='relu',
        hidden_layer_sizes=(10, 100),
        alpha=0.001,
        random_state=20,
        early_stopping=False
    )

    # Train the model
    nn.fit(X_train, y_train)
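
The model above is trained on unscaled features. Multi-layer perceptrons are sensitive to feature scaling, and the California housing features span very different ranges, so standardizing the inputs is usually worth trying. The sketch below shows one way to do that with a pipeline. It uses synthetic data with deliberately mismatched feature scales as a stand-in so that it runs without downloading anything; the scale factors and max_iter value are assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Stand-in data: 8 features, rescaled to mimic columns in different units.
X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=1)
X = X * np.array([1, 10, 100, 1000, 1, 10, 100, 1000])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1
)

# The scaler is fitted on the training data only, then applied before the MLP.
scaled_nn = make_pipeline(
    StandardScaler(),
    MLPRegressor(
        activation="relu",
        hidden_layer_sizes=(10, 100),
        alpha=0.001,
        random_state=20,
        max_iter=1000,  # assumed value; the default of 200 may stop too early
    ),
)
scaled_nn.fit(X_train, y_train)

scaled_r2 = scaled_nn.score(X_test, y_test)
print("Test R^2 with scaling:", scaled_r2)
```

Putting the scaler inside the pipeline keeps test data out of the scaling statistics, and the same transformation is applied automatically at prediction time.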

The code below prints the R-squared value and RMSE of the trained regression model on the test set.

    import numpy as np
    from sklearn.metrics import mean_squared_error

    # Make prediction
    pred = nn.predict(X_test)

    # Calculate accuracy and error metrics
    test_set_rsquared = nn.score(X_test, y_test)
    test_set_rmse = np.sqrt(mean_squared_error(y_test, pred))

    # Print R_squared and RMSE value
    print('R_squared value: ', test_set_rsquared)
    print('RMSE: ', test_set_rmse)
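
To see what these two metrics measure, the sketch below computes RMSE and R-squared by hand on a few made-up values and checks them against Sklearn’s own functions. The numbers in y_true and y_pred are invented purely for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Made-up targets and predictions (illustrative only).
y_true = np.array([3.0, 2.5, 4.0, 5.5])
y_pred = np.array([2.8, 2.7, 4.4, 5.0])

residuals = y_true - y_pred

# RMSE: square root of the mean squared residual, in the units of the target.
rmse = np.sqrt(np.mean(residuals ** 2))

# R-squared: 1 minus the ratio of residual variation to total variation.
ss_res = np.sum(residuals ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot

# Both hand-computed values should agree with Sklearn's implementations.
print("RMSE matches:", np.isclose(rmse, np.sqrt(mean_squared_error(y_true, y_pred))))
print("R^2 matches:", np.isclose(r2, r2_score(y_true, y_pred)))
```

The score method of MLPRegressor returns this same R-squared value, so a score near 1 means the predictions explain most of the variation in the target, while RMSE reports the typical error size in the target’s own units.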

Conclusion

Neural networks are powerful tools for solving complex problems involving large amounts of data and non-linear relationships. They have become increasingly popular due to their ability to provide accurate predictions, although they are typically harder to interpret and tune than other machine learning algorithms like decision trees or support vector machines. With Sklearn’s MLPRegressor class, you can quickly build a neural network for regression tasks without much prior knowledge of neural networks themselves. This post provided an overview of how you can get started building your own neural network regression models using just Python code!

