This article presents guidelines for deciding whether to use logistic regression or an SVM with kernels when working on a classification problem. I gathered these guidelines from one of Andrew Ng's videos on SVMs in his machine learning course on Coursera.org. I wanted a place I could quickly refer to in the future when working on a classification problem and choosing between logistic regression and SVM, so I decided to blog it here. Please feel free to comment or make suggestions if I have missed one or more important points. Also, sorry for the typos.

Key Criteria for Using Logistic Regression vs SVM

The following scenarios, defined by the number of training examples and the number of features, indicate when one could try logistic regression or an SVM with or without a kernel:

- Large number of features and a smaller number of training examples: When the number of features is large relative to the number of training examples, one could use either logistic regression or an SVM without a kernel (that is, with a linear kernel). Take text classification as an example. In a typical spam email classifier, each word indicative of spam could become a feature and each email could become a training example. Thus, there could be 10,000 or more features (words treated as representative of spam) with only 10 to 1,000 emails (training examples) available to the learning algorithm. In cases like these, it is typically recommended to use logistic regression or an SVM with a linear kernel, as there is not enough training data to fit a complicated non-linear function such as the one learned by an SVM with a Gaussian kernel.

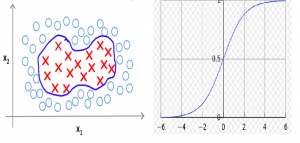

- Smaller number of features and a large, but not very large, number of training examples: When the number of features is small relative to the number of training examples, and the number of training examples is not too large, one may use an SVM with a Gaussian kernel. In such scenarios, the number of features could range from 1 to 1,000 and the number of training examples from 10 up to about 50,000, but not more than that.

- Smaller number of features and a very large number of training examples: When the number of training examples is much larger than the number of features, one could create or add more features and then use logistic regression or an SVM without a kernel (linear kernel), since an SVM with a Gaussian kernel tends to be slow to train at this scale. In such scenarios, the number of training examples could be 100,000 or even in the millions.
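The three scenarios above can be condensed into a small helper. This is only a rough sketch: the function name `suggest_classifier` and the returned labels are made up for illustration, the cut-offs (1,000 features, 50,000 examples) are the approximate figures quoted above rather than hard limits, and the final fallback covers a case the guidelines do not discuss.

```python
LINEAR = "logistic regression or SVM with linear kernel"
GAUSSIAN = "SVM with Gaussian kernel"
ADD_FEATURES_THEN_LINEAR = (
    "add more features, then logistic regression or SVM with linear kernel"
)


def suggest_classifier(n_features: int, n_examples: int) -> str:
    """Return a rough suggestion based on the three scenarios above."""
    # Scenario 1: at least as many features as training examples.
    if n_features >= n_examples:
        return LINEAR
    # Scenario 2: few features, moderate number of examples.
    if n_features <= 1_000 and n_examples <= 50_000:
        return GAUSSIAN
    # Scenario 3: few features, very large number of examples.
    if n_features <= 1_000:
        return ADD_FEATURES_THEN_LINEAR
    # Not covered by the guidelines (assumption): start with the simpler model.
    return LINEAR


print(suggest_classifier(10_000, 1_000))    # spam-style text classification
print(suggest_classifier(100, 5_000))       # small feature set, moderate data
print(suggest_classifier(100, 1_000_000))   # small feature set, huge data
```

Treat the result as a starting point; in practice one would still compare the candidates on a validation set.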

Note that logistic regression and an SVM without a kernel can generally be used interchangeably, as they are similar algorithms. The strength of the SVM lies in its use of kernel functions, such as the Gaussian kernel, for complex non-linear classification problems.
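To make the similarity concrete, here is a minimal sketch comparing the per-example cost of logistic regression with the hinge loss of a linear SVM for a positive example, plus the Gaussian kernel itself. The function names and the `sigma` parameter are my own choices for illustration, and the formulas are the standard textbook forms rather than anything specific to this post.

```python
import math


def logistic_loss(z: float) -> float:
    """Cost of a positive example under logistic regression: -log(sigmoid(z))."""
    return math.log1p(math.exp(-z))


def hinge_loss(z: float) -> float:
    """Cost of a positive example under a linear SVM: max(0, 1 - z)."""
    return max(0.0, 1.0 - z)


def gaussian_kernel(x, landmark, sigma=1.0):
    """Similarity exp(-||x - l||^2 / (2 * sigma^2)); equals 1.0 when x == l."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, landmark))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


# z stands for the linear score theta^T x of a positive example.
for z in (-2.0, 0.0, 1.0, 3.0):
    print(f"z={z:+.1f}  logistic={logistic_loss(z):.3f}  hinge={hinge_loss(z):.3f}")

# Similarity falls off quickly as a point moves away from the landmark.
print(gaussian_kernel([1.0, 2.0], [1.0, 2.0]))
print(gaussian_kernel([0.0, 0.0], [3.0, 4.0]))
```

Both losses shrink as the score `z` grows and penalise confident mistakes roughly linearly, which is why the two linear models tend to behave alike. The kernel is what lets the SVM build non-linear decision boundaries from similarities to landmarks.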

