When building a Retrieval-Augmented Generation (RAG) application, effective indexing plays a pivotal role. RAG applications pair the text-generation capabilities of Large Language Models (LLMs) with an information-retrieval step, which makes them well suited to tasks such as question answering, summarization, and domain-specific problem-solving. Indexing determines what the retrieval step can find: done well, it ensures that only the most contextually relevant data is retrieved and fed into the LLM, improving the quality and accuracy of the generated responses. It also reduces noise, optimizes token usage, and directly affects how efficiently the application handles large datasets. This blog walks you through the indexing workflow step by step.

The Indexing Workflow

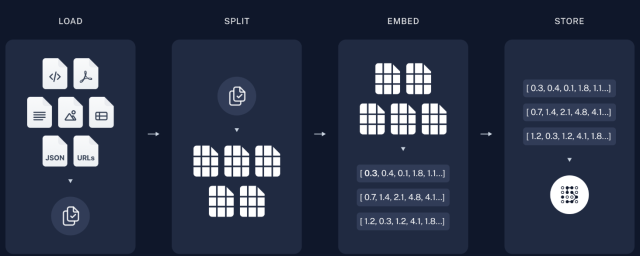

Indexing in RAG applications typically follows four key stages: Load, Split, Embed, and Store. Each stage contributes uniquely to ensuring efficient and accurate retrieval of information. Let’s break these stages down:

1. Load: Preparing the Data

The first step is to gather the data that your application will access during retrieval. This data can include:

- Text Files (e.g., .txt, .md)

- Documents (e.g., .pdf, .docx)

- Tables (e.g., .csv, .xlsx)

- Images with Text (via OCR)

- JSON files or API endpoints

- Web URLs

The goal here is to load the raw data into the pipeline. Whatever the source format, everything is pre-processed into a standardized format that can be indexed. For example, PDFs might be converted to plain text, while images require OCR to extract their text.
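A minimal sketch of the Load stage, assuming Python 3.9+ and only the standard library: each supported file is turned into a standardized record of plain text plus metadata about where it came from. The `Document` class and the `load_file` / `load_directory` helpers are hypothetical names for illustration, not part of any RAG framework. PDF, .docx, OCR, and URL loading need third-party libraries and are deliberately left out.

```python
import csv
import json
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    # Hypothetical standardized record: plain text plus metadata about its origin.
    text: str
    metadata: dict = field(default_factory=dict)


def load_file(path: Path) -> list[Document]:
    """Convert one file into Document records, based on its extension."""
    suffix = path.suffix.lower()
    if suffix in {".txt", ".md"}:
        # Plain text: the whole file becomes one document.
        return [Document(path.read_text(encoding="utf-8"), {"source": str(path)})]
    if suffix == ".csv":
        # Tables: each row becomes one "column: value; column: value" line.
        with path.open(newline="", encoding="utf-8") as f:
            rows = list(csv.DictReader(f))
        return [
            Document(
                "; ".join(f"{k}: {v}" for k, v in row.items()),
                {"source": str(path), "row": i},
            )
            for i, row in enumerate(rows)
        ]
    if suffix == ".json":
        # JSON: serialize to text so it can be split and embedded like prose.
        data = json.loads(path.read_text(encoding="utf-8"))
        return [Document(json.dumps(data, ensure_ascii=False), {"source": str(path)})]
    # PDFs, .docx, images (OCR) and URLs need third-party libraries; not covered here.
    raise ValueError(f"Unsupported file type: {suffix}")


def load_directory(directory: str) -> list[Document]:
    """Load every supported file in a directory (sorted by name) into one list."""
    docs: list[Document] = []
    for path in sorted(Path(directory).iterdir()):
        if path.is_file():
            try:
                docs.extend(load_file(path))
            except ValueError:
                continue  # skip formats this sketch does not handle
    return docs
```

Keeping the `source` (and the row number for tables) in the metadata is what later lets the application link a retrieved chunk back to its original data.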

2. Split: Breaking Data into Chunks

Once the data is loaded, it must be divided into manageable chunks. Whole documents are usually too long to embed or to pass to the model in one piece, because LLMs (including GPT-based models) and embedding models have token limits. By splitting data into smaller pieces:

- Retrieval becomes more efficient.

- Each chunk can be processed and referenced individually.

How is the data split?

- By length: Splitting text into chunks of a fixed number of characters or tokens.

- By structure: Dividing based on headings, paragraphs, or semantic boundaries.

- By context: Ensuring that chunks maintain coherence and relevance to avoid incomplete information.
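The first two strategies can be sketched as follows, again as hypothetical standard-library helpers rather than any library's API. `split_by_length` cuts fixed-size character windows with an `overlap`, so that text near a boundary appears in both neighbouring chunks; this is one simple way to work toward the "by context" goal. `split_by_structure` splits on blank lines (paragraphs) and merges short paragraphs up to a size limit. Sizes are measured in characters here; token-based splitting would need a tokenizer and is left out.

```python
def split_by_length(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Fixed-size character chunks; consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


def split_by_structure(text: str, max_chars: int = 200) -> list[str]:
    """Split on blank lines (paragraphs), then merge short paragraphs up to max_chars."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate  # still fits: keep merging
        else:
            if current:
                chunks.append(current)
            current = para  # a single paragraph longer than max_chars is kept whole
    if current:
        chunks.append(current)
    return chunks


print(split_by_length("abcdefghij", chunk_size=4, overlap=1))
```

Length-based splitting is predictable but can cut mid-sentence; structure-based splitting keeps paragraphs intact at the cost of uneven chunk sizes.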

3. Embed: Converting Data to Vectors

To enable efficient retrieval, each chunk is transformed into a numerical representation, called an embedding, using an embedding model (e.g., OpenAI’s embedding models or Hugging Face embedding models).

- These embeddings encode the semantic meaning of the chunks in a high-dimensional vector space.

- Semantically similar chunks will have embeddings close to each other in this vector space.
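To make the shape of the Embed step concrete without calling an external service, the sketch below uses a hashing-trick bag-of-words vectorizer as a stand-in for a real embedding model. The function `toy_embed` and its `dim` parameter are hypothetical. It maps any text to a fixed-length, L2-normalized vector, but it only captures shared words: unlike a learned embedding model, it would not place “climate change” near “global warming”.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hashing-trick bag-of-words vector, L2-normalized. NOT a semantic model."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # Stable hash (Python's built-in hash() is salted per process).
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # Normalize to unit length; an empty text stays the zero vector.
    return [v / norm for v in vec] if norm else vec


chunk_vectors = [toy_embed(c) for c in ["Climate change raises sea levels.",
                                         "Quarterly revenue grew 5%."]]
```

In a real pipeline you would replace `toy_embed` with a call to your chosen embedding model, and embed every chunk (and, later, every query) with that same model.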

For example:

- A chunk discussing “climate change” might have an embedding like [0.3, 0.4, 0.1, 1.8, 1.1...].

- Another chunk, about “global warming”, could have a vector like [0.7, 1.4, 2.1, 4.8, 4.1...].

Because semantically related chunks end up near each other in the vector space, relevant information can be found by comparing vectors rather than by matching exact keywords.
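How close two embeddings are is commonly measured with cosine similarity, which compares the angle between vectors rather than their length. The sketch below applies it to the two example vectors above, treating the five components shown as complete toy 5-dimensional vectors. That is an assumption: the real vectors are elided and would typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(a, b) = (a . b) / (|a| |b|); 1.0 means the vectors point the same way."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Toy 5-dimensional versions of the example embeddings (remaining components elided).
climate_change = [0.3, 0.4, 0.1, 1.8, 1.1]
global_warming = [0.7, 1.4, 2.1, 4.8, 4.1]
print(round(cosine_similarity(climate_change, global_warming), 3))
```

The result is roughly 0.95, close to the maximum of 1.0, even though the second vector is about three times as long as the first: cosine similarity ignores that difference in magnitude and looks only at direction.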

4. Store: Saving the Indexed Data

Finally, the embeddings are stored in a vector database such as Pinecone, Weaviate, or Vespa. A vector database is designed to store and manage high-dimensional vectors efficiently, which makes it well suited to embeddings. This database:

- Facilitates fast similarity searches to retrieve the most relevant chunks.

- Links each embedding to its original data, allowing the application to present the full context.

During retrieval, the user’s question or input is converted into an embedding with the same model used for the chunks, and the application queries the vector database with that vector. The database returns the closest matches based on embedding similarity, and the LLM uses those chunks as context to generate a relevant response.
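The store-and-retrieve loop can be sketched with a hypothetical `InMemoryVectorStore` class that keeps each embedding next to its original chunk and metadata, and answers a query with a brute-force cosine-similarity search for the top `k` matches. This is a stand-in for illustration only; vector databases such as Pinecone, Weaviate, or Vespa typically use approximate nearest-neighbour indexes to scale to millions of vectors. The 3-dimensional vectors in the usage lines are made up.

```python
import math
from dataclasses import dataclass, field


def _cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


@dataclass
class InMemoryVectorStore:
    """Hypothetical minimal stand-in for a vector database (brute-force search)."""
    vectors: list[list[float]] = field(default_factory=list)
    records: list[dict] = field(default_factory=list)  # original chunk + metadata

    def add(self, vector: list[float], text: str, **metadata) -> None:
        # Keep each embedding linked to the chunk it came from.
        self.vectors.append(vector)
        self.records.append({"text": text, **metadata})

    def search(self, query_vector: list[float], k: int = 3) -> list[tuple[float, dict]]:
        """Return (score, record) pairs for the k most similar stored chunks."""
        scored = [(_cosine(query_vector, v), r) for v, r in zip(self.vectors, self.records)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
store.add([0.9, 0.1, 0.0], "Climate change raises sea levels.", source="a.txt")
store.add([0.8, 0.3, 0.1], "Global warming affects agriculture.", source="b.txt")
store.add([0.0, 0.2, 0.9], "Quarterly revenue grew 5%.", source="c.csv")

# In a real pipeline the query vector comes from the same embedding model as the chunks.
for score, record in store.search([1.0, 0.2, 0.0], k=2):
    print(f"{score:.2f}  {record['text']}  ({record['source']})")
```

Returning the stored record rather than just the vector is what lets the application hand the LLM the original text, along with its source.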

Benefits of Indexing for RAG

- Scalability: Handles large datasets by enabling fast searches across millions of chunks.

- Accuracy: Improves retrieval precision, ensuring the LLM works with high-quality, relevant information.

- Efficiency: Minimizes token consumption by the LLM, as only the most relevant chunks are passed to it.

Conclusion

Indexing is the backbone of RAG applications, bridging the gap between static datasets and the dynamic responses of LLMs. By following the Load → Split → Embed → Store pipeline, you ensure that your application retrieves the right data at the right time, enhancing its overall performance.

Understanding and implementing indexing will improve your RAG application, whether you’re building a Q&A bot or a domain-specific assistant.
