Are you curious about how machines not only learn from data but also create it? Have you ever been puzzled while choosing between Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) for your project, or unsure when to use one rather than the other? Well, you’re not alone!

In this blog post, we’re going to learn about two key technologies in generative modeling, GANs and VAEs, comparing their strengths, weaknesses, and everything in between. We will dive into real-life scenarios, showing when you might want to pull out GANs to generate high-quality, realistic images, and when you’d prefer the control that VAEs provide over the features of your outputs. Whether you’re an experienced data scientist, a product manager, a forward-thinking business leader, or simply a tech enthusiast, it helps to know the key differences and similarities between GANs and VAEs so that you can apply each to the most appropriate use cases.

GANs vs VAEs – Key Differences & Similarities

Let’s dive straight into the key differences and similarities between VAEs and GANs.

| Topics | Generative Adversarial Networks (GANs) | Variational Autoencoders (VAEs) |

|---|---|---|

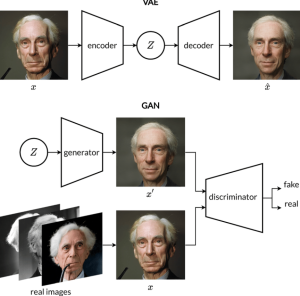

| Functionality | Composed of two models (a generator and a discriminator) that compete with each other. The generator creates fake samples and the discriminator attempts to distinguish between real and fake samples. | Composed of an encoder and a decoder. The encoder maps inputs to a latent space, and the decoder maps points in the latent space back to the input space. |

| Output Quality | Can generate high-quality, realistic outputs. Known for generating images that are hard to distinguish from real ones. | Generally produces less sharp or slightly blurrier images compared to GANs. However, this may depend on the specific implementation and problem domain. |

| Latent Space | Often lacks structure, making it hard to control or interpret the characteristics of the generated samples. | Creates a structured latent space which can be more easily interpreted and manipulated. |

| Training Stability | Training GANs can be challenging and unstable, due to the adversarial loss used in training. | Generally easier and more stable to train because they use a likelihood-based objective function. |

| Use Cases | Great for generating new, creative content. Often used in tasks like image generation, text-to-image synthesis, and style transfer. | Useful when there’s a need for understanding the data-generating process or controlling the attributes of the generated outputs. Often used in tasks like anomaly detection, denoising, or recommendation systems. |

| Activation function | In generator network, the final layer typically uses a Tanh activation function, mapping the output to a range that matches the preprocessed input data, usually from -1 to 1. For intermediate layers, GANs commonly employ activation functions like ReLU or LeakyReLU, effectively circumventing the vanishing gradient problem. This allows GANs to learn and propagate more diverse gradients back through the network. The discriminator network uses a Sigmoid activation function in its output layer | The encoder network in a VAE transforms the input data into two components: a mean and a standard deviation. Since the mean can span any real value and the standard deviation needs to be positive, these outputs typically forego activation functions. However, much like GANs, VAEs use activation functions such as ReLU or LeakyReLU in intermediate layers to introduce non-linearity and mitigate the vanishing gradient problem. The decoder, which maps points from the latent space back to the data space, can employ various activation functions in its final layer. |
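To make the activation-function row more concrete, here is a minimal sketch in plain Python (no deep learning framework) of what each output activation does to raw network values. The function names are hypothetical, the LeakyReLU slope of 0.2 is just a common choice, and the log-variance convention for the VAE encoder is an assumption about a typical implementation rather than a requirement.

```python
import math

def leaky_relu(x, slope=0.2):
    """Hidden-layer activation: keeps positives, scales negatives by `slope`."""
    return x if x > 0 else slope * x

def sigmoid(x):
    """Squashes any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def generator_output(pre_activation):
    """Tanh maps each value into (-1, 1), matching images scaled to [-1, 1]."""
    return [math.tanh(v) for v in pre_activation]

def discriminator_output(logit):
    """Sigmoid turns a raw score into the probability that a sample is real."""
    return sigmoid(logit)

def encoder_std(log_var):
    """Assumed VAE convention: predict log-variance, exponentiate to get std > 0."""
    return [math.exp(0.5 * lv) for lv in log_var]

raw = [-3.0, 0.0, 2.5]  # made-up pre-activation values
print(generator_output(raw))          # every value lies strictly between -1 and 1
print(discriminator_output(1.2))      # a probability between 0 and 1
print(encoder_std([-4.0, 0.0, 1.0]))  # always positive, whatever the input sign
```

The point of the sketch is that the output activation is chosen to match the range the output must live in, while the VAE mean needs no such constraint.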

What are the similarities between GANs and VAEs?

Despite their differences, Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) also share a number of similarities. Here are some of them:

- Generative Models: Both GANs and VAEs are generative models. This means they learn the underlying distribution of the training data so as to generate new data points with similar characteristics.

- Neural Network-Based: Both GANs and VAEs are based on neural networks. GANs consist of two neural networks, a generator and a discriminator, while VAEs consist of an encoder and a decoder.

- Use of Latent Space: Both models generate outputs from a latent space that is usually lower-dimensional than the data. A VAE learns an encoder that maps inputs into this space, whereas a standard GAN has no encoder and simply samples latent vectors from a fixed prior, such as a Gaussian. In both cases, the latent space can be used to explore and manipulate the generated data.

- Backpropagation and Gradient Descent: Both GANs and VAEs are trained using backpropagation and gradient descent. This involves defining a loss function and iteratively updating the model parameters to minimize this loss.

- Ability to Generate New Samples: Both GANs and VAEs can generate new samples that were not part of the original training set. These models are often used to generate images, but they can also be applied to other types of data.

- Use of Non-linear Activation Functions: Both models use non-linear activation functions such as ReLU in their hidden layers, which enable them to model complex data distributions.
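The loss functions mentioned in the list above can be sketched in a few lines. This is only an illustration in plain Python for a single sample, not a training loop: it assumes the common non-saturating GAN generator loss and a squared-error reconstruction term for the VAE, and all function names are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0)

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    return -(target * math.log(prob + EPS) + (1 - target) * math.log(1 - prob + EPS))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) close to 1 and D(fake) close to 0."""
    return bce(d_real, 1) + bce(d_fake, 0)

def generator_loss(d_fake):
    """Non-saturating variant: the generator wants D(fake) close to 1."""
    return bce(d_fake, 1)

def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO: reconstruction error plus KL(q(z|x) || N(0, I))."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    kl = -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, log_var))
    return recon + kl

# A confident, correct discriminator has a lower loss than an undecided one.
print(discriminator_loss(0.9, 0.1), discriminator_loss(0.5, 0.5))
# A perfect reconstruction with a posterior equal to the prior costs nothing.
print(vae_loss([1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [0.0, 0.0]))
```

Note the structural difference: a GAN has two opposing losses that are minimized in turn, while a VAE minimizes one combined objective.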

VAEs vs GANs: When to Use Which One?

The choice between Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) often comes down to the specific needs and context of the problem you’re trying to solve. Here are a few real-world examples to illustrate this:

When to use GANs: Here are some real-world examples of when you would want to use GANs:

- Creation of high-quality images for banners, logos, etc: Picture running a digital advertising company that needs to design a wide variety of banners, logos, and ad layouts. However, hiring graphic designers for the sheer volume of designs could be expensive and time-consuming. Here, GANs might be your saving grace. Known for their ability to produce sharp and visually appealing synthetic images, GANs could be used to automatically generate diverse and creative design elements. These designs can then be fine-tuned as needed, significantly reducing the time and cost associated with the design process.

- Suppose you’re in a business that requires high-quality, realistic image generation, such as a fashion e-commerce company looking to generate virtual models wearing different clothes. In this case, you might lean towards using GANs. GANs have shown remarkable proficiency in generating high-resolution, sharp, and realistic images, thanks to their adversarial training process. They’re particularly known for their ability to generate ‘new’ instances of training data – for instance, creating images of clothes that don’t yet exist, but still look plausible and attractive to potential customers.

When to use VAEs: Here are some real-world examples of when you would want to use VAEs:

- Synthetic data generation: Consider a healthcare analytics company that needs to classify and analyze different types of cells or bacteria from microscope image data, but lacks sufficient labelled data for standard supervised learning approaches. VAEs, in this case, would be an excellent choice. VAEs could be used to learn the underlying structure of the data in an unsupervised manner, and then generate new synthetic samples to augment the training dataset. This helps improve the performance of subsequent supervised learning tasks, such as classification or anomaly detection.

- Let’s say you run a movie streaming service, and you’re interested in building a recommendation system that provides users with personalized movie recommendations. Here, VAEs might be a better fit. Unlike GANs, VAEs are excellent at learning the underlying structure of the data and generating data that’s not just similar, but also diverse. They’re able to capture the variance in the data, making them particularly suited to tasks like recommendation systems where you’re looking to provide a broad set of personalized suggestions. Additionally, VAEs provide a smooth and well-structured latent space, which makes it easy to navigate the recommendation space and avoid recommending outliers.

Here are some of the reasons why you might prefer VAEs over GANs in certain cases:

- Smooth Latent Space: VAEs ensure a continuous, smooth latent space, making them well-suited for tasks that require interpolation or exploration of variations along certain dimensions in the data. This is useful for applications like morphing, where one image is gradually transformed into another.

- Stochasticity: VAEs are inherently stochastic, which means they generate diverse outputs even for the same input, contributing to a wider variety of generated images. This is particularly useful when diversity is preferred over the pinpoint accuracy of the output.

- Ease of Training: GANs can be notoriously hard to train due to issues like mode collapse, vanishing gradients, and the difficulty of finding equilibrium during the adversarial process. VAEs, on the other hand, have a more straightforward training process, based on maximizing a single well-defined objective function (the evidence lower bound, or ELBO).

- Reconstruction Ability: VAEs have a strong ability to reconstruct the input data from the encoded latent representation. This can be beneficial in tasks like denoising or super-resolution, where a ‘clean’ or ‘high-resolution’ version of an input image is to be generated.
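Two of the properties above, stochasticity and a smooth latent space, can be illustrated with a short sketch. It assumes the usual reparameterization trick for sampling and simple linear interpolation between latent vectors; the encoder outputs `mu` and `log_var` are made-up numbers, and in a real model each interpolated point would be passed through the decoder.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def interpolate(z_start, z_end, steps):
    """Evenly spaced points on the straight line from z_start to z_end (steps >= 2)."""
    path = []
    for i in range(steps):
        t = i / (steps - 1)
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [-2.0, -2.0]  # hypothetical encoder outputs for one input

# Stochasticity: the same input yields a different latent code on each draw.
z_a = sample_latent(mu, log_var, rng)
z_b = sample_latent(mu, log_var, rng)
print(z_a, z_b)

# Smooth latent space: decoding each point along this path would morph
# one output gradually into the other.
for z in interpolate([0.0, 0.0], [1.0, 2.0], steps=5):
    print(z)
```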

However, it’s worth mentioning that while VAEs are powerful, they tend to produce blurrier images compared to GANs. This is because VAEs often model the pixel-wise mean of the data, which leads to averaging out details, especially in regions of the data distribution where there is a lot of variation. GANs, thanks to their adversarial training process, generally produce sharper, more detailed images, making them more suitable when high visual quality is a priority.
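The averaging effect is easy to see on a toy example. The sketch below assumes a squared-error reconstruction loss and uses two made-up one-dimensional "images" that are equally plausible targets; it is an illustration of the argument, not a measurement on a real model.

```python
def pixelwise_mean(images):
    """Mean image: the single prediction that minimizes total squared error."""
    n = len(images)
    return [sum(pixels) / n for pixels in zip(*images)]

def total_squared_error(prediction, images):
    """Sum of squared pixel differences between one prediction and all targets."""
    return sum((p - x) ** 2 for img in images for p, x in zip(prediction, img))

# Two equally likely sharp images: the same edge at two nearby positions.
edge_left = [0.0, 0.0, 1.0, 1.0, 1.0]
edge_right = [0.0, 0.0, 0.0, 1.0, 1.0]
targets = [edge_left, edge_right]

blurred = pixelwise_mean(targets)
print(blurred)  # the disputed pixel becomes 0.5: a soft ramp instead of a step

# The blurred average scores better under squared error than either sharp image.
print(total_squared_error(blurred, targets))
print(total_squared_error(edge_left, targets))
```

A model trained only to minimize this kind of error is therefore rewarded for the soft ramp, whereas a GAN discriminator would penalize it for not looking like either real image.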

Conclusion

Both GANs and VAEs stand as powerful tools in the realm of generative models. GANs, with their innovative adversarial mechanism, excel at producing sharp, realistic images. Their application shines particularly in fields requiring high-fidelity and visually appealing results, such as fashion e-commerce, digital advertising, game development, and architectural visualization. VAEs, on the other hand, are known for their ability to capture the intricate structure of the data, generate a diverse range of outputs, and offer a well-structured, smooth latent space. This makes VAEs ideal for tasks demanding diversity and structured exploration, seen in areas such as personalized recommendation systems, financial risk assessment, healthcare analytics, and music personalization.

Moreover, both models typically use ReLU or LeakyReLU in their hidden layers and differ mainly at their outputs: a GAN generator usually ends in Tanh and its discriminator in Sigmoid, while a VAE encoder typically leaves its mean and variance outputs without an activation. Despite these differences, both share common ground, particularly in being unsupervised learning models utilizing neural networks, leveraging the power of deep learning to generate novel data that capture the intricacies of their training sets. The choice between GANs and VAEs, as we’ve seen, comes down to the specifics of the use case, the type of data at hand, and the desired attributes of the generated data. It’s a nuanced decision involving a balance between the quality and diversity of output, the structure and interpretability of the learned representations, and the complexity of the training process. By understanding the strengths and limitations of GANs and VAEs, we can better navigate the landscape of generative models and harness their power to create impactful solutions across a wide array of industries.
