Deep learning is a powerful tool for solving complex problems, but it can be difficult to get started. In this blog post, we’ll provide a checklist of things to keep in mind when training and evaluating deep learning models and deciding whether they are suitable to deploy in production. Following this checklist helps to make sure that your models are well-trained and ready to tackle real-world tasks.

Validation of data distribution

The distribution of data can have a significant impact on the performance of deep learning models. When training a model, it is important to ensure that the training data is representative of the data the model will see in production. If it is not, the model may look good during training and still perform poorly in production. A held-out validation set is the usual starting point, but a random split of the training data shares the training distribution, so on its own it cannot reveal a mismatch with production. To check representativeness, compare the training and validation data against a sample of real production data, for example by comparing summary statistics, class frequencies, or per-feature histograms. If the distributions are significantly different, it may be necessary to collect more representative data and retrain the model. Validating the data distribution before and during training helps to ensure that deep learning models are robust and generalize well to new data.

Checking the data distribution also makes overfitting and underfitting easier to diagnose, because a gap between training and validation performance can then be attributed to the model rather than to a mismatch in the data. Overfitting occurs when the model fits too closely to the training data and does not generalize well to new data. Underfitting occurs when the model does not fit even the training data well, and therefore does not generalize to new data either.
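One rough way to put a number on the difference between two distributions is the two-sample Kolmogorov-Smirnov statistic, the largest gap between the empirical distribution functions of a feature in the training data and in a production sample. The sketch below is a minimal illustration for a single numeric feature; the function name and the sample values are made up for this example, and a real check would cover every feature and use a proper significance test.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest gap between the empirical CDFs of two 1-D samples.

    Returns a value between 0 (identical empirical CDFs) and 1 (no overlap).
    """
    a, b = sorted(sample_a), sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    # The largest gap between two step functions occurs at an observed value.
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in a + b
    )


# Hypothetical values of one numeric feature (made-up numbers).
train_feature = [0.9, 1.1, 1.0, 1.2, 0.8, 1.0]
production_feature = [1.6, 1.8, 1.7, 1.9, 1.5, 1.7]

drift = ks_statistic(train_feature, production_feature)
print(f"KS statistic between training and production: {drift:.2f}")
```

A value close to 0 suggests the feature looks similar in both data sets, while a value close to 1 suggests the training data does not cover what the model will see in production.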

Ensuring that training loss has converged

Training loss is the main signal of whether optimization is making progress. If the loss has not converged, training takes more time and computing resources, which increases cost, and the resulting model is likely to be less accurate than it could be. When training deep learning models, it is important to check that the training loss has converged before moving on to testing or deployment. There are a few reasons for this. First, convergence means that the model is no longer improving on the training data, so additional training with the same setup is unlikely to improve performance. Second, convergence indicates that the model has fit the training data as well as the current setup allows; it does not by itself show that the model generalizes, which has to be checked on validation data. Third, a loss that refuses to converge, or that plateaus at a high value, points to a problem worth investigating, such as an unsuitable learning rate, a model with too little capacity, or noisy labels. Finally, convergence can be used as a stopping criterion, although stopping on validation loss is usually safer because it also guards against overfitting. In summary, training loss convergence is an important quantity to track when training deep learning models.
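Convergence can be checked programmatically as well as by eye. The sketch below treats the loss as converged when the average of the most recent epochs differs from the average of the epochs before them by less than a small relative tolerance. The function name, the window size, and the tolerance are assumptions chosen for illustration, not recommended values.

```python
from statistics import mean


def has_converged(losses, window=5, rel_tol=0.01):
    """True when the mean loss of the last `window` epochs differs from the
    mean of the previous `window` epochs by less than `rel_tol` (relative)."""
    if len(losses) < 2 * window:
        return False  # not enough history to judge
    previous = mean(losses[-2 * window:-window])
    recent = mean(losses[-window:])
    if previous == 0:
        return recent == 0
    return abs(previous - recent) / abs(previous) < rel_tol


# Made-up loss histories for illustration.
still_improving = [1 / (epoch + 1) for epoch in range(10)]
plateaued = still_improving + [0.1] * 10

print(has_converged(still_improving))
print(has_converged(plateaued))
```

Averaging over a window makes the check less sensitive to the epoch-to-epoch noise that is typical of mini-batch training.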

Overfitting Check

Overfitting is a problem that can occur when training deep learning models. It occurs when the model fits the training data too closely, including its noise, and as a result does not generalize well to new data. The usual symptom is a model that performs much better on the training set than on the validation set. Overfitting leads to poor performance on unseen data, which makes it a major problem when deploying a model in production. There are a few ways to reduce overfitting, such as using more training data, using regularization methods, or stopping training early. Checking for overfitting before deploying a deep learning model helps to ensure that the model will perform well on new data.
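Early stopping is the easiest of these remedies to sketch in a few lines. The example below scans a list of validation losses and reports the epoch at which training would stop, along with the epoch whose weights would be kept. The function name, the `patience` and `min_delta` parameters, and the loss values are assumptions for illustration; deep learning frameworks ship their own early stopping utilities.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch  # never triggered: ran to the end


# Made-up validation losses: improvement stalls after epoch 2.
val_losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 1.0]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(f"stop at epoch {stop_epoch}, keep weights from epoch {best_epoch}")
```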

Accuracy comparison to a zero-rule baseline

Before trusting a model’s accuracy, compare it to a zero-rule baseline. A zero-rule baseline is a model that ignores the input and simply predicts the majority class for every example. This matters because accuracy on its own can be misleading, especially on imbalanced data: if 90% of the examples belong to one class, the zero-rule baseline already achieves 90% accuracy without learning anything. If your model’s accuracy is significantly higher than the zero-rule baseline, the model has learned something beyond the class frequencies. If it is not much higher, the model is doing little better than always predicting the most common class, however impressive the raw number looks. Comparing against this baseline therefore gives a much better idea of how well the model is actually performing.
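The baseline takes only a few lines to compute. In the sketch below, the majority class is taken from the training labels and scored on the evaluation labels; the function name, the label values, and the model accuracy of 0.91 are made up for illustration.

```python
from collections import Counter


def zero_rule_accuracy(train_labels, eval_labels):
    """Accuracy of always predicting the most frequent training label."""
    majority_class, _ = Counter(train_labels).most_common(1)[0]
    hits = sum(1 for label in eval_labels if label == majority_class)
    return hits / len(eval_labels)


# Made-up, imbalanced labels: 90% "negative", 10% "positive".
train_labels = ["negative"] * 90 + ["positive"] * 10
eval_labels = ["negative"] * 18 + ["positive"] * 2

baseline = zero_rule_accuracy(train_labels, eval_labels)
model_accuracy = 0.91  # hypothetical accuracy reported for a trained model
print(f"baseline: {baseline:.2f}, model: {model_accuracy:.2f}")
```

With these numbers, a model accuracy of 0.91 is only one point above a baseline that has learned nothing, which is a very different story from the raw figure.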

Confusion matrix plotting

A confusion matrix is a table used to evaluate the performance of a classification model. It is also known as an error matrix or a classification matrix. It is created by taking the known labels for a data set and the labels predicted by the model and tabulating them in a grid, so that each cell counts how often a given actual class was predicted as a given class. A single matrix covers all classes: the diagonal cells hold the correct predictions, and the off-diagonal cells show which classes are confused with each other. In the plot, the x-axis represents the actual labels and the y-axis represents the predicted labels; some libraries use the opposite orientation, so label the axes explicitly.

Plotting the confusion matrix makes it easy to see how well the model classifies data set members into the correct classes and where it fails, for example when a rare class is mostly predicted as the majority class. Comparing the confusion matrices for the training data and the validation data can also help to determine whether a deep learning or classical machine learning model is overfitting or underfitting.
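The tabulation itself is simple. The sketch below builds the grid as a list of lists and derives per-class recall from it. It assumes rows are actual labels and columns are predicted labels, which is only one of the two possible orientations; the function names and the example labels are made up for illustration.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Count matrix where matrix[i][j] is the number of examples whose
    actual label is labels[i] and whose predicted label is labels[j]."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of each actual class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


labels = ["cat", "dog"]
y_true = ["cat", "cat", "dog", "dog", "dog"]
y_pred = ["cat", "dog", "dog", "dog", "cat"]

matrix = confusion_matrix(y_true, y_pred, labels)
for label, row in zip(labels, matrix):
    print(label, row)
print(per_class_recall(matrix))
```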

Training & validation loss / accuracy curves check

When training machine learning models, it is important to keep track of both the training loss and the validation loss, ideally as curves plotted over epochs. The training loss measures how well the model fits the training data. The validation loss measures how well the model generalizes to new, unseen data. If the training loss keeps falling while the validation loss stays much higher or starts to rise, the model is overfitting to the training data. If both losses remain high, the model is underfitting. If both losses are low and close to each other, the model is learning well and should be able to generalize well. The same reading applies to accuracy curves, with the directions reversed. Checking both curves helps us to evaluate how well our machine learning models are doing.
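A few summary numbers can back up what the curves show. The sketch below reports the epoch with the lowest validation loss, the final gap between validation and training loss, and how far the validation loss has risen since its best point. The function name, the dictionary keys, and the loss values are assumptions for illustration.

```python
def summarize_curves(train_losses, val_losses):
    """Summarize a pair of per-epoch loss curves of equal length."""
    if not val_losses or len(train_losses) != len(val_losses):
        raise ValueError("curves must be non-empty and of equal length")
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    return {
        "best_val_epoch": best_epoch,
        # Large positive gap: the model fits training data better than new data.
        "final_gap": val_losses[-1] - train_losses[-1],
        # Positive value: validation loss got worse after its best epoch.
        "val_rise_since_best": val_losses[-1] - val_losses[best_epoch],
    }


# Made-up curves: training loss keeps falling, validation loss turns upward.
train_losses = [1.0, 0.7, 0.5, 0.3, 0.2]
val_losses = [1.1, 0.8, 0.7, 0.8, 0.9]

summary = summarize_curves(train_losses, val_losses)
for name, value in summary.items():
    print(name, round(value, 3))
```

In this made-up run the validation loss is lowest at epoch 2 and rises afterwards while the training loss keeps falling, which is the overfitting pattern described above.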

Prediction error evaluation / check

Prediction error is the difference between the model’s prediction and the actual output value, whether the task is classification or regression. In supervised learning, this error is what the training process minimizes. It is also important to examine prediction errors when evaluating deep learning models, so that you can identify where the model is not performing well, for example on particular classes, input ranges, or data sources, and make improvements accordingly. Checking prediction errors in this way shows whether an aggregate metric is hiding systematic failures.
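For a regression task, a prediction error check might look like the sketch below: two aggregate metrics (mean absolute error and root mean squared error) plus the indices of the worst predictions, which are the examples worth inspecting by hand. The function names and the values are made up for illustration.

```python
import math


def regression_errors(y_true, y_pred):
    """Return (MAE, RMSE) for paired actual and predicted values."""
    residuals = [pred - actual for actual, pred in zip(y_true, y_pred)]
    mae = sum(abs(r) for r in residuals) / len(residuals)
    rmse = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    return mae, rmse


def worst_predictions(y_true, y_pred, k=3):
    """Indices of the k examples with the largest absolute error."""
    by_error = sorted(
        range(len(y_true)),
        key=lambda i: abs(y_pred[i] - y_true[i]),
        reverse=True,
    )
    return by_error[:k]


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.5, 3.0, 2.0]

mae, rmse = regression_errors(y_true, y_pred)
print(f"MAE: {mae:.3f}, RMSE: {rmse:.3f}")
print("inspect examples:", worst_predictions(y_true, y_pred, k=2))
```

An RMSE that is much larger than the MAE, as here, suggests that a few large errors dominate, which is a cue to look at those examples individually.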

Test with different optimizers

As anyone who has tried to train a deep learning model knows, finding the right optimizer can be a challenge. There are many optimizers, such as SGD with momentum, RMSprop, and Adam, and each one has its own strengths and weaknesses. Some may converge quickly with little tuning, while others may generalize better once carefully tuned. Trying different optimizers is important for a few reasons. First, different optimizers may work better with different types of data. Second, different optimizers may be better suited to different types of tasks. Finally, the default settings for an optimizer may not be optimal for a given problem, so tweaking its hyperparameters, above all the learning rate, can often improve its performance. By trying different optimizers under the same training budget and comparing them on the validation set, one can find an optimizer that works well for the data and the task at hand.
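The comparison can be illustrated on a toy problem. The sketch below minimizes the one-dimensional loss f(w) = (w - 3)^2 with plain gradient descent and with gradient descent plus momentum, using the same number of steps for both. The toy loss, the function names, and the hyperparameter values are assumptions for illustration; on a real model the same comparison would be made with a framework’s optimizers and a validation metric.

```python
def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)


def run_gradient_descent(steps, lr=0.1, w=0.0):
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def run_momentum(steps, lr=0.1, beta=0.9, w=0.0):
    velocity = 0.0
    for _ in range(steps):
        # Accumulate a decaying sum of past gradients, then step along it.
        velocity = beta * velocity + grad(w)
        w -= lr * velocity
    return w


# Same step budget for every optimizer, so the comparison is fair.
results = {
    "gradient descent": run_gradient_descent(steps=200),
    "momentum": run_momentum(steps=200),
}
for name, w in results.items():
    print(f"{name}: w = {w:.4f}, loss = {(w - 3.0) ** 2:.2e}")
```

The important design choice is holding everything except the optimizer fixed: the same starting point, the same budget, and the same evaluation.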

Test with different regularization techniques

Deep learning models are complex, and getting them to generalize can be a challenge. Regularization helps to prevent overfitting and improve generalization, typically by penalizing large weights (L1 or L2 regularization), randomly dropping units during training (dropout), augmenting the data, or stopping training early. However, there is no one-size-fits-all approach to regularization, and it can be difficult to know which technique will work best for a given problem. This is why it is important to try different regularization techniques when training deep learning models. By experimenting with different approaches and strengths, and comparing them on the validation set, we can gain a better understanding of what works best for a particular problem and learn to optimize our models more effectively.
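The effect of regularization strength is easiest to see on a model small enough to solve by hand. The sketch below fits a single slope to data with an L2 penalty, for which the minimizer of sum((y - w*x)^2) + strength * w^2 has the closed form w = sum(x*y) / (sum(x^2) + strength). The function name, the data, and the strengths tried are made up for illustration, and a deep network would be regularized through its framework rather than in closed form.

```python
def fit_slope(xs, ys, l2_strength=0.0):
    """Slope w that minimizes sum((y - w * x)^2) + l2_strength * w^2."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + l2_strength)


# Made-up data that follows y = 2x exactly.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

for strength in (0.0, 1.0, 14.0):
    slope = fit_slope(xs, ys, l2_strength=strength)
    print(f"l2_strength = {strength}: slope = {slope:.3f}")
```

Stronger penalties pull the slope toward zero, which illustrates why the strength has to be tuned: too little leaves overfitting unchecked, and too much prevents the model from fitting the signal.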

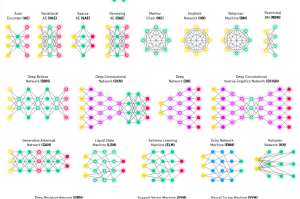

Test with different neural architectures

When it comes to training deep learning models, it is important to try out different neural architectures, because each architecture has its own strengths and weaknesses. For example, convolutional networks are well suited to images, while recurrent networks and transformers are well suited to sequences such as text. Some architectures are better at recognizing patterns, while others are better at handling input with a lot of noise. Some architectures are also more data-efficient, meaning they require less training data to achieve the same level of accuracy. As a result, it’s important to try different architectures, and different sizes of the same architecture, to see which one works best for your specific task. This can be time-consuming, but it’s worth it in the end to get the best results.
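When comparing candidate architectures, it helps to know how large each one is, since the parameter count drives memory use, training time, and the risk of overfitting. The sketch below counts the parameters of fully connected networks described by their layer widths. The function name and the two candidate configurations are made up for illustration.

```python
def count_mlp_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given
    layer widths, listed from input to output."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical candidates for a 784-input, 10-class problem.
candidates = {
    "small": [784, 128, 10],
    "deep": [784, 256, 128, 10],
}
for name, sizes in candidates.items():
    print(f"{name}: {count_mlp_parameters(sizes):,} parameters")
```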

Try running weightwatcher

By running weightwatcher, one can check whether the layers have individually converged to a good alpha and exhibit no rank collapse or correlation traps. weightwatcher is an open-source diagnostic tool for trained neural networks. It is not a training or optimization algorithm: it does not compute gradients or update weights, and it needs neither training data nor test data. Instead, it inspects the weight matrices of a trained model layer by layer, roughly as follows:

- Compute the eigenvalue spectrum of each layer’s weight matrix.

- Fit a power law to the tail of that spectrum and report its exponent, alpha, for the layer.

- Flag layers whose metrics look problematic, such as an alpha outside the expected range, rank collapse, or correlation traps.

According to the tool’s documentation, well-trained layers typically have an alpha roughly between 2 and 6, and larger values suggest that a layer is under-trained. Layers flagged in this way are candidates for closer inspection, further training, or a change in regularization before the model is deployed.
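To give a feel for what alpha measures, the sketch below estimates a power-law exponent from a list of positive values using the standard maximum-likelihood formula alpha = 1 + n / sum(ln(x / x_min)) over the n values at or above x_min. This is not weightwatcher’s implementation, which also chooses x_min and performs further checks; the function name, the fixed x_min, and the eigenvalues are assumptions for illustration.

```python
import math


def power_law_alpha(values, x_min):
    """Maximum-likelihood exponent of a continuous power law fitted to the
    values at or above `x_min`; smaller values are ignored."""
    if x_min <= 0:
        raise ValueError("x_min must be positive")
    tail = [v for v in values if v >= x_min]
    log_sum = sum(math.log(v / x_min) for v in tail)
    if log_sum == 0:
        raise ValueError("need at least one value above x_min")
    return 1.0 + len(tail) / log_sum


# Hypothetical eigenvalues of one layer's weight correlation matrix.
eigenvalues = [0.2, 0.5, 1.1, 1.4, 2.0, 3.5, 7.0]
alpha = power_law_alpha(eigenvalues, x_min=1.0)
print(f"estimated alpha: {alpha:.2f}")
```

A heavier tail, meaning more very large eigenvalues, gives a smaller alpha, which is why the exponent can serve as a per-layer summary of the spectrum.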

We’ve outlined a few key points to keep in mind when evaluating deep learning models. However, this is not an exhaustive list and there are many other factors that could be taken into account. If you would like to know more about our evaluation process or have any questions, please don’t hesitate to reach out. We would be happy to discuss your specific needs and see how we can help.
