Category Archives: LangChain

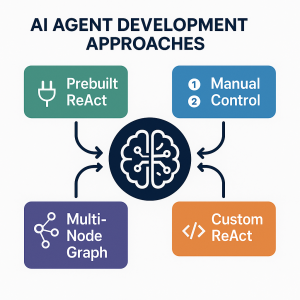

Three Approaches to Creating AI Agents: Code Examples

AI agents are autonomous systems that combine three core components: a reasoning engine (powered by an LLM), tools for external actions, and memory to maintain context. Unlike traditional AI-powered chatbots (created using DialogFlow, AWS Lex), agents can plan multi-step workflows, use specialized tools, and make decisions based on previous results while interacting with end users. In this blog, we will learn about different approaches for building agentic systems. The blog presents Python code examples to explain each of the approaches for creating AI agents. Before getting into the blog, let's quickly look at the setup code that forms the basis for the code used in the approaches. To explain different approaches, …
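The three components named in the excerpt above (reasoning engine, tools, memory) can be illustrated with a minimal sketch. This is not the post's own code and does not use LangChain: `toy_reasoner` is a hypothetical, hard-coded stand-in for an LLM call, and `TOOLS` and `run_agent` are names invented for this illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# An action is (tool name, first argument, second argument).
Action = Tuple[str, float, float]


def add(a: float, b: float) -> float:
    return a + b


def multiply(a: float, b: float) -> float:
    return a * b


# Tools: plain functions the agent can call to act outside the "model".
TOOLS: Dict[str, Callable[[float, float], float]] = {
    "add": add,
    "multiply": multiply,
}


def toy_reasoner(memory: List[float]) -> Optional[Action]:
    """Hypothetical stand-in for an LLM: picks the next step of (2 + 3) * 4.

    The choice depends on previous results stored in memory, which is the
    part a real agent would delegate to the language model.
    """
    if not memory:
        return ("add", 2.0, 3.0)
    if len(memory) == 1:
        return ("multiply", memory[-1], 4.0)
    return None  # nothing left to do


def run_agent(max_steps: int = 5) -> List[float]:
    """Loop: ask the reasoner for an action, run the tool, store the result."""
    memory: List[float] = []  # memory: results of earlier steps
    for _ in range(max_steps):
        action = toy_reasoner(memory)
        if action is None:
            break
        name, a, b = action
        memory.append(TOOLS[name](a, b))
    return memory


if __name__ == "__main__":
    print(run_agent())
```

In a real agent the reasoner would be an LLM call that receives the goal, the tool descriptions, and the memory, and returns the next action; the loop structure stays the same.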

How to Set Up a MEAN App with LangChain.js

Hey there! As I venture into building agentic MEAN apps with LangChain.js, I wanted to take a step back and revisit the core concepts of the MEAN stack. LangChain.js brings AI-powered automation and reasoning capabilities, enabling the development of agentic AI applications such as intelligent chatbots, automated customer support systems, AI-driven recommendation engines, and data analysis pipelines. Understanding how it integrates into the MEAN stack is essential for leveraging its full potential in creating these advanced applications. So, I put together this quick learning blog to share what I’ve revisited. The MEAN stack is a popular full-stack JavaScript framework that consists of MongoDB, Express.js, Angular, and Node.js. Each component plays …

Creating a RAG Application Using LangGraph: Example Code

Retrieval-Augmented Generation (RAG) is an innovative generative AI method that combines retrieval-based search with large language models (LLMs) to enhance response accuracy and contextual relevance. Unlike traditional retrieval systems that return existing documents, or generative models that rely solely on pre-trained knowledge, the RAG technique dynamically retrieves information related to the query and supplies it as context for the LLM's output. LangGraph, an advanced extension of LangChain, provides a structured workflow for developing RAG applications. This guide will walk through the process of building a RAG system using LangGraph with example implementations. Setting Up the Environment To get started, we need to install the necessary dependencies. The following commands will ensure that all required LangChain …
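The retrieve-then-generate idea described in the excerpt above can be sketched without any framework. This is an illustration only, not the post's LangGraph code: the sample `DOCS`, the word-overlap scoring, and the function names are assumptions made for this example, and the final LLM call is left out (the sketch stops at building the prompt).

```python
import re
from typing import List, Set

# Hypothetical sample corpus, used only for this illustration.
DOCS: List[str] = [
    "LangGraph structures LLM workflows as graphs of nodes and edges.",
    "MongoDB is a document database used in the MEAN stack.",
    "RAG retrieves relevant text and passes it to the model as context.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank documents by word overlap with the query and return the top k.

    Real systems typically use embedding similarity; word overlap is a
    simple substitute that keeps the sketch dependency-free.
    """
    query_words = tokenize(query)
    ranked = sorted(
        docs,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What does RAG pass to the model?"
    print(build_prompt(question, retrieve(question, DOCS)))
```

The prompt produced here is what would be sent to the LLM; in a LangGraph application, retrieval and generation would usually be separate nodes in the workflow.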

Building a RAG Application with LangChain: Example Code

The combination of Retrieval-Augmented Generation (RAG) and powerful language models enables the development of sophisticated applications that leverage large datasets to answer questions effectively. In this blog, we will explore the steps to build an LLM RAG application using LangChain. Prerequisites Before diving into the implementation, ensure you have the required libraries installed. Execute the following command to install the necessary packages: Setting Up Environment Variables LangChain integrates with various APIs to enable tracing and embedding generation, which are crucial for debugging workflows and creating compact numerical representations of text data for efficient retrieval and processing in RAG applications. Set up the required environment variables for LangChain and OpenAI: Step …

Building an OpenAI Chatbot with LangChain

Have you ever wondered how to use OpenAI APIs to create custom chatbots? With advancements in large language models (LLMs), anyone can develop intelligent, customized chatbots tailored to specific needs. In this blog, we’ll explore how LangChain and OpenAI LLMs work together to help you build your own AI-driven chatbot from scratch. Prerequisites Before getting started, ensure you have Python (version 3.8 or later) installed and the required libraries. You can install the necessary packages using the following command: Setting Up OpenAI API Key To use OpenAI’s services, you need an API key, which you can obtain by signing up at OpenAI’s website and generating a key from the …

I found it very helpful. However, the differences are not very clear to me.