Here are the top web pages and videos for learning the back propagation algorithm, which is used to compute the gradients in a neural network. I will update this page with more tutorials as I dive deeper into back propagation. Whether you are a beginner or an experienced data scientist / machine learning enthusiast, these tutorials should prove helpful.

Before exploring back propagation through these pages, let's quickly review the key components of neural network training:

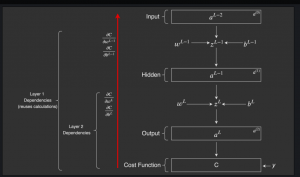

- Feed forward algorithm: The feed forward algorithm describes how input signals travel through the neurons in successive layers, as weighted sums followed by activations, to produce an output / prediction. A key element of the feed forward algorithm is the activation function. You may want to check out this post, which illustrates activation functions using animation – Different types of activation functions illustrated using animation.

- Back propagation algorithm: The back propagation algorithm describes how the gradients of the error are calculated, starting at the output and moving backwards through each neuron, one layer at a time, using the chain rule. The goal is to determine the changes that need to be made to the weights to bring the neural network output closer to the actual output. Note that back propagation is only used to calculate the gradients; it does not update the weights itself.

- Optimization algorithm (Optimizer): Once the gradients are determined, the final step is to use an appropriate optimization algorithm, such as gradient descent, to update the weights using the gradients calculated by back propagation.
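To make the three components concrete, here is a minimal sketch in plain Python. Everything in it is an assumption chosen for illustration and does not come from any of the tutorials listed on this page: a tiny network with 2 inputs, 2 hidden neurons and 1 output, sigmoid activations, a squared-error loss, plain gradient descent as the optimizer, and the hypothetical function names `forward`, `backward` and `sgd_step`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, params):
    """Feed forward: weighted sums followed by activations."""
    w1, b1, w2, b2 = params
    a1 = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
          for row, b in zip(w1, b1)]
    y_hat = sigmoid(sum(w * a for w, a in zip(w2, a1)) + b2)
    return a1, y_hat


def backward(x, y, a1, y_hat, params):
    """Back propagation: gradients of L = 0.5 * (y_hat - y)^2 via the chain rule."""
    _, _, w2, _ = params
    # dL/dz2 = dL/dy_hat * dy_hat/dz2 (the sigmoid derivative is y_hat * (1 - y_hat))
    delta2 = (y_hat - y) * y_hat * (1.0 - y_hat)
    grad_w2 = [delta2 * a for a in a1]
    grad_b2 = delta2
    # Push the error signal back through w2 and the hidden sigmoid
    delta1 = [delta2 * w * a * (1.0 - a) for w, a in zip(w2, a1)]
    grad_w1 = [[d * xi for xi in x] for d in delta1]
    grad_b1 = list(delta1)
    return grad_w1, grad_b1, grad_w2, grad_b2


def sgd_step(params, grads, lr):
    """Optimizer: plain gradient descent, w <- w - lr * gradient."""
    w1, b1, w2, b2 = params
    gw1, gb1, gw2, gb2 = grads
    new_w1 = [[w - lr * g for w, g in zip(row, grow)]
              for row, grow in zip(w1, gw1)]
    new_b1 = [b - lr * g for b, g in zip(b1, gb1)]
    new_w2 = [w - lr * g for w, g in zip(w2, gw2)]
    new_b2 = b2 - lr * gb2
    return new_w1, new_b1, new_w2, new_b2


def loss(x, y, params):
    _, y_hat = forward(x, params)
    return 0.5 * (y_hat - y) ** 2


random.seed(0)
params = (
    [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)],  # w1
    [0.0, 0.0],                                                     # b1
    [random.uniform(-1, 1) for _ in range(2)],                      # w2
    0.0,                                                            # b2
)
x, y = [0.5, -1.0], 1.0  # a single made-up training example

loss_before = loss(x, y, params)
for _ in range(100):
    a1, y_hat = forward(x, params)             # 1. feed forward
    grads = backward(x, y, a1, y_hat, params)  # 2. back propagation
    params = sgd_step(params, grads, lr=0.5)   # 3. optimizer update
loss_after = loss(x, y, params)

print("loss before:", loss_before, "loss after:", loss_after)
```

Note how `backward` only returns gradients and never touches the weights; the update happens in `sgd_step`, which could be swapped for a different optimizer without changing the back propagation code.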

To understand back propagation better, check out these top web tutorial pages on the back propagation algorithm.

- Implementing a neural network from scratch (Python): Provides a Python implementation of a neural network. Very helpful post. Explains the feed forward / back propagation implementation step by step.

- Calculus on computational graphs: Back propagation

- Neural network back propagation algorithm (Stanford CS page)

- Neural networks: Feedforward and Backpropagation explained & Optimization: Great explanation of the back propagation algorithm, I must say.

Here are some great YouTube videos on the back propagation algorithm.

- Lex Fridman tutorial on back propagation

- Great lecture on back propagation by Andrej Karpathy (Stanford CS231n)

