The following key points are covered in this article and the next one (part 2):

- Technology architecture building blocks

- Technology architecture description

- Technology team and required skillsets

In this article, we shall look into the key technology architecture building blocks. In the second part of the series, we shall look into the steps needed to configure continuous delivery of microservices containers into the AWS cloud, along with the technology architecture diagram.

Key Technology Architecture Building Blocks

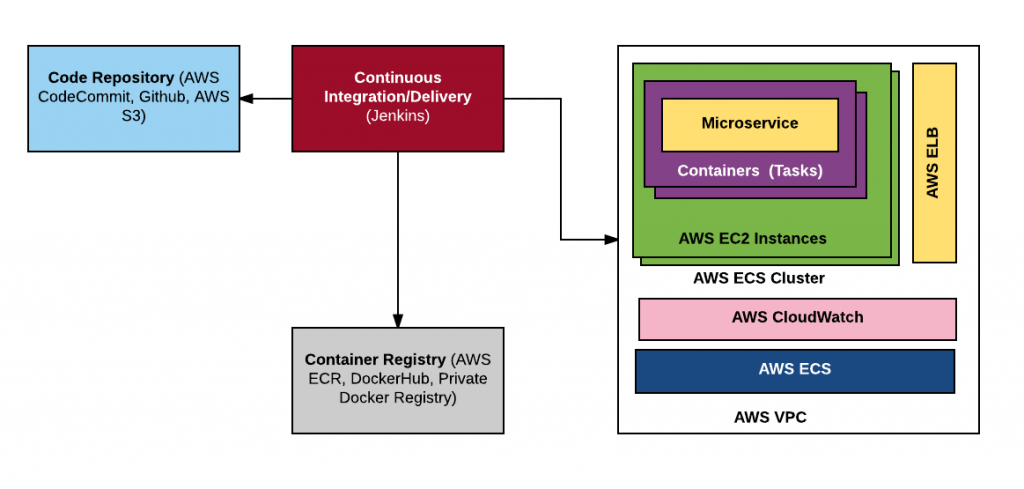

The following high-level technology architecture diagram represents the key building blocks of the system.

AWS Cloud Microservices Containers Technology Architecture

Below are the details of the key technologies that make up the technology architecture.

- Code repository: One needs a code repository to commit and work with code. Git is my favorite version control system. If one wants to use an AWS service, one could use AWS CodeCommit, a version control service hosted by AWS. One can use CodeCommit to privately store and manage assets such as source code, documents, and binaries in the cloud. The primary advantage of AWS CodeCommit is that it keeps one's code close to the other production resources in the AWS cloud. It is also integrated with AWS IAM (Identity and Access Management). See the AWS CodeCommit page for further details.
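As a small illustration of how a CodeCommit repository is addressed, the sketch below builds its HTTPS and SSH clone URLs from a region and a repository name, following the `git-codecommit.<region>.amazonaws.com/v1/repos/<name>` pattern CodeCommit uses. The repository name `orders-service` and the region are hypothetical examples, and authentication (Git credentials or SSH keys tied to an IAM user) still has to be configured separately.

```python
def codecommit_clone_urls(repo_name: str, region: str) -> dict[str, str]:
    """Return the HTTPS and SSH clone URLs for an AWS CodeCommit repository."""
    host = f"git-codecommit.{region}.amazonaws.com"
    path = f"/v1/repos/{repo_name}"
    return {
        "https": f"https://{host}{path}",
        "ssh": f"ssh://{host}{path}",
    }


if __name__ == "__main__":
    # Hypothetical repository and region, used only for illustration
    urls = codecommit_clone_urls("orders-service", "us-east-1")
    print("git clone", urls["https"])
```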

- Continuous Integration (CI)/Continuous Delivery (CD): This is the most critical component of this architecture. Jenkins is the most popular choice in this category. It has a wide range of plugins that make CI and CD easy to set up. It helps achieve the following objectives:

- Check out the latest code from the code repository.

- Run the build and unit tests, and create deployable artifacts.

- Create container images and push them to the container registry.

- Trigger the deployment steps: create a new TaskDefinition, update the AWS ECS service with the new task definition and the desired count, and start new tasks on the AWS ECS cluster, resulting in a blue/green-style deployment on the AWS cloud. Note that AWS ECS is a highly scalable, high-performance container management service that supports Docker containers and allows one to run applications easily on a managed cluster of Amazon EC2 instances.

The first three points are taken care of by a continuous integration (CI) server such as Jenkins. The last point represents the continuous delivery aspect of the build/delivery pipeline; Jenkins acts as a continuous delivery server as well.
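The three CI steps above can be sketched as the shell commands a Jenkins job might execute. This is a minimal sketch under stated assumptions: the Maven build (`mvn -B clean package`), the `workspace` directory (assumed to contain the Dockerfile), the account ID, and the image name are hypothetical placeholders, and Amazon ECR is assumed as the target registry. The function only assembles the commands; a Jenkins `sh` step or a similar runner would execute them.

```python
import shlex


def ci_commands(repo_url: str, account_id: str, region: str,
                image_name: str, tag: str) -> list[str]:
    """Return the CI steps (checkout, build/test, image build/push) as shell commands.

    Assumptions: a Maven project and an Amazon ECR registry. The deployment (CD)
    step is not included here.
    """
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    image = f"{registry}/{image_name}:{tag}"
    return [
        # 1. Check out the latest code from the code repository
        f"git clone {shlex.quote(repo_url)} workspace",
        # 2. Run the build and unit tests and produce the deployable artifact
        #    (hypothetical Maven build; 'package' runs the unit tests first)
        "cd workspace && mvn -B clean package",
        # 3. Authenticate Docker to ECR, build the image and push it
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"docker build -t {image} workspace",
        f"docker push {image}",
    ]


if __name__ == "__main__":
    for cmd in ci_commands(
        "https://git-codecommit.us-east-1.amazonaws.com/v1/repos/orders-service",
        "123456789012", "us-east-1", "orders-service", "42",
    ):
        print(cmd)
```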

- Containers: Containers are the deployable units that go into production along with the microservices they host. Docker containers are the most popular choice. Note that microservices and containers (such as Docker) are central to cloud-native architectures.

- Microservices: As per the Wikipedia page, “Microservices is a specialisation of an implementation approach for service-oriented architectures (SOA) used to build flexible, independently deployable software systems… A central microservices property that appears in multiple definitions is that services should be independently deployable.” The benefit of distributing the system's responsibilities across smaller services is that it increases cohesion and decreases coupling. Sam Newman's principles of microservices can be found on the Principles of Microservices page. In short, the following are the 8 principles of microservices:

- Modelled around the business domain: concepts such as “Bounded Contexts” are relevant here.

- Culture of automation

- Hide implementation details

- Decentralize all the things, including teams (two-pizza teams)

- Deploy independently. Easier said than done :). Some of the patterns that help make it happen are “Event Sourcing” and “Command Query Responsibility Segregation” (CQRS).

- Consumer first

- Isolate failure. Patterns like “Circuit Breakers” help make it happen.

- Highly Observable
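To make the “Isolate failure” principle concrete, here is a minimal circuit breaker sketch. It is not the API of any particular library (such as Netflix Hystrix); the failure threshold, reset timeout, and class names are hypothetical choices. After `failure_threshold` consecutive failures the breaker opens and fails fast; once `reset_timeout` seconds have passed it lets one trial call through (half-open), closing again on success and re-opening on failure.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay open before a trial call
        self.clock = clock                          # injectable so tests need not sleep
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            # Fail fast instead of calling a dependency that is known to be failing
            raise CircuitOpenError("circuit is open; failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Open after too many consecutive failures, or re-open after a failed trial call
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count
        self.failures = 0
        self.opened_at = None
        return result
```

Injecting the clock keeps the breaker testable without real waiting; a caller would typically catch `CircuitOpenError` and return a fallback response so the failure of one microservice does not cascade to its consumers.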

- Code Pipeline: A code pipeline such as AWS CodePipeline builds, tests, and deploys the code every time there is a code change, based on the release process models one defines. The following diagram represents the code pipeline:

Code Pipeline

The following are the different stages of the code pipeline:

- Code Commits: In this stage, developers commit changes into a code repository, as described in the code repository point above. Code repositories such as AWS CodeCommit, Amazon S3, and GitHub are used.

- Build/CI: This stage is used to build the code artifacts and run tests, as described earlier under continuous integration/continuous delivery. Tools such as AWS CodeBuild, Jenkins, and TeamCity are used.

- Staging/Code testing: In this stage, code is deployed and tested in QA/pre-prod environments. Tools such as BlazeMeter and Runscope are used.

- Code deployment into production: In this stage, code is moved into production. In the context of this article, the microservice is moved into production and becomes accessible to other applications/microservices. Tools such as AWS CodeDeploy, AWS CloudFormation, AWS OpsWorks Stacks, and AWS Elastic Beanstalk are used.
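The stage-by-stage flow above can be modelled as a simple runner that executes stages in order and stops at the first failure, so a change that fails staging tests never reaches production. This models the idea only; it is not the AWS CodePipeline API, and the stage names and lambdas are hypothetical stand-ins for real build, test, and deploy actions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    action: Callable[[], bool]  # returns True when the stage succeeds


def run_pipeline(stages: list[Stage]) -> list[tuple[str, str]]:
    """Run stages in order and stop at the first failure (a simple release gate)."""
    results: list[tuple[str, str]] = []
    for stage in stages:
        ok = stage.action()
        results.append((stage.name, "succeeded" if ok else "failed"))
        if not ok:
            break  # later stages, such as production deployment, never run
    return results


if __name__ == "__main__":
    pipeline = [
        Stage("code-commit", lambda: True),
        Stage("build-ci", lambda: True),
        Stage("staging-tests", lambda: False),  # simulate a failing QA run
        Stage("production", lambda: True),
    ]
    print(run_pipeline(pipeline))
```

Stopping at the first failed stage is the key design choice: the production stage only runs for changes that passed every earlier gate.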

- Containers Registry: Once the build and unit tests run successfully, the next stage is to push a new Docker image of the container hosting the microservice into the container registry. The following container registries can be used:

- AWS EC2 Container Registry (ECR): Amazon EC2 Container Registry (ECR) is a fully managed Docker container registry that makes it easy for developers to store, manage, and deploy Docker container images. The advantage of hosting Docker images in AWS ECR is that it is integrated with Amazon EC2 Container Service (ECS), which simplifies the development-to-production workflow.

- Docker private registry: One could use tools such as JFrog Artifactory or Sonatype Nexus as an on-premises Docker private registry to host Docker images.

- Docker public registries such as Docker Hub
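Whichever registry is chosen, the pipeline tags and pushes the image under a reference whose host part identifies the registry. The helpers below sketch the three reference formats; the account ID, region, host name, port, namespace, and repository names in the usage example are hypothetical.

```python
def ecr_image(account_id: str, region: str, repository: str, tag: str) -> str:
    """Amazon ECR reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def private_registry_image(host: str, repository: str, tag: str) -> str:
    """Self-hosted registry (e.g. Nexus or Artifactory); host may include a port."""
    return f"{host}/{repository}:{tag}"


def docker_hub_image(namespace: str, repository: str, tag: str) -> str:
    """Docker Hub reference; Docker assumes docker.io when no registry host is given."""
    return f"{namespace}/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical values; the pipeline would run `docker push <reference>` for one of these
    print(ecr_image("123456789012", "us-east-1", "orders-service", "42"))
    print(private_registry_image("registry.example.com:5000", "orders-service", "42"))
    print(docker_hub_image("mycompany", "orders-service", "42"))
```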

- Containers/Microservices Deployment: Once the Docker container images are pushed into the container registry, the next step is to deploy the containers onto production servers or a cluster of servers. When working with the AWS cloud, this part is handled by the continuous delivery server. With Jenkins, it is as simple as executing scripts (after the container images are pushed into the registry) that do the following:

- Create a new TaskDefinition. A TaskDefinition is a JSON document describing one or more containers that form the application. An instance of a TaskDefinition is called a “Task”. The AWS ECS service is responsible for creating instances of a TaskDefinition (Tasks) on the AWS ECS cluster of EC2 machines.

- Update the service with the new task definition and the desired number of tasks (containers hosting the microservice) to run in production.

- Start the new task on the ECS cluster.

The above steps assume that the following setup has already been done prior to the continuous delivery run:

- Create the TaskDefinition template with substitutable fields.

- Create a new ECS IAM role. Briefly, AWS IAM (Identity and Access Management) is a web service that enables Amazon Web Services (AWS) customers to manage users and user permissions in AWS.

- Create an AWS Elastic Load Balancing (ELB) load balancer to be used in the ECS service definition.

- Create the ECS service definition specifying the task definition, task count, ELB and other details.
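The deployment steps and the templated TaskDefinition can be sketched together in Python. This is a minimal sketch under stated assumptions: the template fields (`FAMILY`, `CONTAINER`, `IMAGE`), the CPU/memory values, the container port, and the cluster and service names are hypothetical. `deploy` expects a boto3 ECS client (`boto3.client("ecs")`) or any object exposing the same `register_task_definition` and `update_service` methods, which makes it possible to exercise the logic with a stub instead of a real AWS account.

```python
import json
from string import Template

# Hypothetical TaskDefinition template; ${...} marks the substitutable fields.
TASK_DEF_TEMPLATE = Template("""
{
  "family": "${FAMILY}",
  "containerDefinitions": [
    {
      "name": "${CONTAINER}",
      "image": "${IMAGE}",
      "cpu": 256,
      "memory": 512,
      "essential": true,
      "portMappings": [{"containerPort": 8080}]
    }
  ]
}
""")


def render_task_definition(family: str, container: str, image: str) -> dict:
    """Fill in the template; substitute() raises KeyError if a field is missing."""
    rendered = TASK_DEF_TEMPLATE.substitute(FAMILY=family, CONTAINER=container, IMAGE=image)
    return json.loads(rendered)


def deploy(ecs, cluster: str, service: str, task_def: dict, desired_count: int) -> str:
    """Register a new TaskDefinition revision and point the ECS service at it."""
    # Step 1: create a new TaskDefinition revision
    response = ecs.register_task_definition(**task_def)
    arn = response["taskDefinition"]["taskDefinitionArn"]
    # Step 2: update the service with the new revision and the desired task count.
    # Step 3 follows from this: the ECS service scheduler starts the new tasks and
    # replaces the old ones according to the service's deployment configuration.
    ecs.update_service(
        cluster=cluster,
        service=service,
        taskDefinition=arn,
        desiredCount=desired_count,
    )
    return arn
```

Against AWS, one would call something like `deploy(boto3.client("ecs"), "prod-cluster", "orders-service", render_task_definition(...), 2)` with IAM permissions that allow both calls. Using `Template.substitute` rather than `safe_substitute` makes a forgotten field fail loudly before anything reaches ECS.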

- Containers Orchestration: Once the task is executed, the microservices go live. The availability requirements of the microservices, in terms of scaling the containers hosting them up and down, are met with the help of the ECS service scheduler, AWS CloudWatch alarms, and AWS ELB. The ECS service scheduler allows one to distribute traffic across the containers using Elastic Load Balancing (ELB), and AWS ECS automatically registers and deregisters the containers with the associated load balancer. Recall that the ECS service definition was configured with the AWS ELB load balancer, so the tasks it runs can be reached through the ELB once they are moved into production. Note that AWS CloudWatch is a monitoring service for AWS cloud resources and the applications one runs on AWS.
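The scale-out/scale-in behaviour driven by CloudWatch alarms can be illustrated with a simplified decision function. In practice, ECS service auto scaling is configured through Application Auto Scaling policies attached to CloudWatch alarms rather than written by hand; the CPU thresholds, the step size of one task, and the minimum/maximum counts here are hypothetical.

```python
def next_desired_count(current: int, cpu_percent: float, *,
                       scale_out_at: float = 75.0, scale_in_at: float = 25.0,
                       minimum: int = 2, maximum: int = 10) -> int:
    """Return the service's next desired task count for an observed average CPU %."""
    if cpu_percent >= scale_out_at:      # high-CPU alarm: add a task
        proposed = current + 1
    elif cpu_percent <= scale_in_at:     # low-CPU alarm: remove a task
        proposed = current - 1
    else:                                # between thresholds: no change
        proposed = current
    # Keep the count within the configured bounds
    return max(minimum, min(maximum, proposed))
```

The gap between the two thresholds keeps the service from oscillating, and the minimum count keeps at least two tasks (ideally in different Availability Zones) behind the load balancer.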

- Containers Clusters: Last but not least, we also need to set up the AWS ECS cluster that will run these microservices containers. The following are some of the key components of the ECS cluster setup:

- ECS Console as starting point: One needs to log in to the ECS console to get started with the ECS cluster setup.

- Amazon Virtual Private Cloud (Amazon VPC): Amazon VPC lets one provision a logically isolated section of the Amazon Web Services (AWS) cloud where one can launch AWS resources in a self-defined virtual network. By default, the ECS cluster creation wizard creates a new VPC with two subnets in different Availability Zones, and a security group open to the Internet on port 80. This basic setup works well for an HTTP service. More details can be found on the Creating a Cluster page. However, when working with ECS using the AWS CLI, one needs to create the VPC and security groups separately.

- AWS EC2 machines: During the cluster creation process, one chooses the EC2 instance type for the container instances and configures the number of EC2 instances to launch into the cluster.

- Container IAM role: This is the IAM role used by the container instances; the ECS container agent running on each instance uses it to call the ECS API on the instance's behalf.
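When choosing the instance type and count, a rough capacity estimate helps. The sketch below assumes tasks are limited only by CPU units (1,024 per vCPU in ECS) and memory, and ignores port conflicts, placement constraints, and spreading across Availability Zones; the task sizes and instance capacity in the example are hypothetical. For the instance memory, use what the container instance actually registers with ECS, which is less than the nominal amount because the OS and the ECS agent reserve some.

```python
import math


def instances_needed(desired_tasks: int, task_cpu: int, task_memory_mib: int,
                     instance_cpu: int, instance_memory_mib: int) -> int:
    """Estimate how many container instances are needed to place all tasks."""
    # Tasks per instance are bounded by whichever resource runs out first
    per_instance = min(instance_cpu // task_cpu, instance_memory_mib // task_memory_mib)
    if per_instance == 0:
        raise ValueError("a single task does not fit on this instance type")
    return math.ceil(desired_tasks / per_instance)


if __name__ == "__main__":
    # Hypothetical: 10 tasks of 256 CPU units / 512 MiB on instances that register
    # 2048 CPU units and 3900 MiB. Memory allows 7 tasks per instance, so 2 instances.
    print(instances_needed(10, 256, 512, 2048, 3900))
```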

Stay tuned for my next blog, where I will take you through the technology architecture diagram and the setup for delivering microservices containers to the AWS cloud based on continuous delivery principles.


Excellent blog Ajitesh.

Thanks Sravan