Have you ever heard of the term “Python Pickle”? If not, don’t feel bad: it can be a confusing concept. However, it is a powerful tool that data scientists, Python programmers, and web application developers should understand. In this article, we’ll break down what pickling is, why it matters, and how to use it in your projects.

What is Python Pickle?

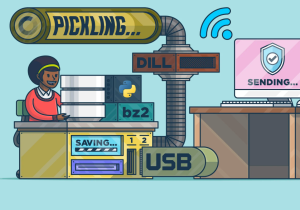

In its simplest form, pickling is the process of converting a Python object into a byte stream (a sequence of bytes). This byte stream can then be transmitted over a network or stored in a file for later use. It’s like putting the object into an envelope and sending it off! This is particularly useful when you need to save complex objects (such as nested lists or dictionaries) without losing any data. Most built-in types and instances of ordinary classes can be pickled, but objects tied to live runtime state, such as open file handles or generators, cannot.
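As a minimal sketch of the idea, the snippet below turns a nested dictionary into bytes with pickle.dumps and then shows that a generator is rejected. The record dictionary and the count_up function are made up for illustration.

```python
import pickle

# A nested structure built only from standard Python types (illustrative data).
record = {
    "name": "iris",
    "classes": ["setosa", "versicolor", "virginica"],
    "shape": (150, 4),
}

# dumps() returns the pickled representation as a bytes object.
payload = pickle.dumps(record)
print(type(payload).__name__, len(payload))


def count_up():
    """Hypothetical generator function used only to show a failure case."""
    yield 1


# Generators hold live execution state, so pickle refuses to serialize them.
try:
    pickle.dumps(count_up())
    generator_pickled = True
except (TypeError, pickle.PicklingError) as exc:
    generator_pickled = False
    print("not picklable:", exc)
```

The bytes in payload are what would be written to a file or sent over a network.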

Pickling is Python’s native form of serialization. It is provided by pickle, a module in the standard library that serializes Python objects into byte streams and de-serializes those byte streams back into objects. The serialized byte streams are called pickles; they can be created by your own code or received from other Python programs. The format is specific to Python, so a pickle is meant to be read by Python code. Because unpickling can execute arbitrary code, you should only load pickles that come from a source you trust.

The pickle module lets you take an existing object, such as a tuple, a list, a dictionary, or an instance of a custom class, and convert it into a self-contained representation that records its type, structure, and contents. This representation can be transmitted over a network or saved to a file. When you de-serialize it with the pickle module, you get back a new object in the same state as the original at the moment it was serialized. This makes pickle a convenient tool for storing Python data and moving it between programs and machines. Because pickle ships with Python, the receiving program only needs a compatible Python version and access to the definitions of any custom classes stored in the pickle.
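The round trip described above can be sketched as follows. The Flower class and the sample values are hypothetical and exist only for this illustration; the pickle.dumps and pickle.loads calls are the standard library functions.

```python
import pickle


class Flower:
    """Hypothetical custom class used only for this illustration."""

    def __init__(self, species, petal_length):
        self.species = species
        self.petal_length = petal_length

    def __eq__(self, other):
        # Two flowers are equal when all of their attributes match.
        return isinstance(other, Flower) and vars(self) == vars(other)


original = {
    "labels": ("setosa", "versicolor"),
    "counts": [50, 50],
    "sample": Flower("setosa", 1.4),
}

# Serialize to bytes, then rebuild a new object from those bytes.
restored = pickle.loads(pickle.dumps(original))

print(restored == original)   # compares contents
print(restored is original)   # checks whether it is the very same object
```

The restored dictionary has the same contents as the original but is a separate object. Note that the pickle stores a reference to the Flower class rather than its code, so the program that loads the pickle must be able to import or define that class.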

Why Should I Use Python Pickle?

The main benefit of pickle is that it lets you store complex objects and load them again later without rebuilding them each time you need them. For example, say you are working on an AI project where you train a model on images and labels. If you want to reuse this model in the future, pickling lets you reload the trained model quickly instead of training it again from scratch. In other words, pickle serializes Python object structures to a compact byte stream, so we can save a machine learning model in its current state and reload it later to classify new, unlabeled examples (in the case of supervised learning models) without making the model learn from the training data all over again.

How Do I Use Python Pickle?

Using pickle is fairly straightforward. First, import the pickle module into your program. Next, create an object (or a list of objects) that you would like to save for later use. Finally, call pickle.dump to convert the object into a byte stream and write it to a file opened in binary mode. To get the object back later, call pickle.load on the same file.
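Here is a small sketch of those steps using only the standard library. The settings dictionary, the temporary directory, and the file name settings.pkl are assumptions chosen for the example.

```python
import os
import pickle
import tempfile

# The object to keep for later (a hypothetical settings dictionary).
settings = {
    "n_estimators": 5,
    "criterion": "gini",
    "features": ["petal length", "petal width"],
}

# Write it to disk. Pickle data is binary, so the file is opened with 'wb'.
path = os.path.join(tempfile.mkdtemp(), "settings.pkl")  # hypothetical location
with open(path, "wb") as f:
    pickle.dump(settings, f)

# Later, read it back. The file is opened in binary mode again, with 'rb'.
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded == settings)
```

Using with blocks ensures the file is closed, and the data flushed to disk, as soon as the write or read is finished.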

The code below covers the following steps:

- Build a classifier model using the Random Forest algorithm

- Write the pickle file to Google Drive or to a local drive

- Read the pickle file and make predictions

Train the RandomForest Classifier

The code below can be used to train a machine learning classification model using the Random Forest algorithm:

    from sklearn import datasets
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import accuracy_score
    from sklearn.ensemble import RandomForestClassifier

    # Load the Iris data set; keep only petal length and petal width (columns 2 and 3)
    iris = datasets.load_iris()
    X = iris.data[:, 2:]
    y = iris.target

    # Create a stratified 70/30 training/test split
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=1, stratify=y)

    # Create an instance of the Random Forest classifier
    forest = RandomForestClassifier(criterion='gini',
                                    n_estimators=5,
                                    random_state=1,
                                    n_jobs=2)

    # Fit the model
    forest.fit(X_train, y_train)

    # Measure model performance on the test set
    y_pred = forest.predict(X_test)
    print('Accuracy: %.3f' % accuracy_score(y_test, y_pred))

Write the machine learning model as a pickle file to Google Drive

Using the following code, we mount Google Drive and create an rf_classifier directory in it where the pickle files will be stored. Within rf_classifier, a pkl_objects subdirectory is created to hold the serialized Python objects (here, the “forest” model trained in the code above). The dump function of the pickle module serializes the trained random forest classifier, and the result is written to the file forest.pkl.

The dump function takes the object to be pickled as its first argument. The second argument is an open file object to which the pickled bytes are written. The wb argument to open opens the file for writing in binary mode, which pickle requires. Setting protocol=4 selects pickle protocol 4, which was added in Python 3.4 and can be read by Python 3.4 or newer. It is not the newest protocol: protocol 5 was added in Python 3.8.

    # Mount Google Drive (Google Colab only) so the pickle file can be written to it
    from google.colab import drive
    drive.mount('/content/gdrive')

    # Write the pickle file to the folder rf_classifier/pkl_objects
    import pickle
    import os

    dest = os.path.join('/content/gdrive/MyDrive', 'rf_classifier', 'pkl_objects')
    os.makedirs(dest, exist_ok=True)  # create the folder if it does not exist yet

    # Open the file in binary write mode; the with block closes it afterwards
    with open(os.path.join(dest, 'forest.pkl'), 'wb') as f:
        pickle.dump(forest, f, protocol=4)

The following code can be used to write the pickle file to a local folder:

    # Write the pickle file to the folder rf_classifier/pkl_objects
    import pickle
    import os

    dest = os.path.join('rf_classifier', 'pkl_objects')
    os.makedirs(dest, exist_ok=True)  # create the folder if it does not exist yet

    # Open the file in binary write mode; the with block closes it afterwards
    with open(os.path.join(dest, 'forest.pkl'), 'wb') as f:
        pickle.dump(forest, f, protocol=4)
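To see what the protocol argument does, here is a small sketch that pickles an illustrative dictionary (not the trained model) with protocol 4 and inspects the first two bytes of the result.

```python
import pickle

# Illustrative data standing in for the object being saved.
data = {"model": "forest", "n_estimators": 5}

# Pickle the object with an explicit protocol number.
as_protocol_4 = pickle.dumps(data, protocol=4)

# For protocol 2 and above, a pickle starts with the PROTO opcode (0x80)
# followed by one byte holding the protocol number.
print(as_protocol_4[0] == 0x80, as_protocol_4[1])

# The highest protocol this interpreter supports, and the one it uses by default.
print(pickle.HIGHEST_PROTOCOL, pickle.DEFAULT_PROTOCOL)

# Loading needs no protocol argument; the reader detects it from the data.
round_tripped = pickle.loads(as_protocol_4)
print(round_tripped == data)
```

Choosing a lower protocol such as 4 trades the newest features for compatibility with older Python versions that may need to read the file.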

Load the Machine Learning Model by reading pickle file

The following code can be used to load the pickle file and make predictions. Note that the load function of the pickle module reads the pickle file, which is opened in binary mode, and returns the stored Python object.

    # Load the random forest classifier model from Google Drive
    model_path = os.path.join('/content/gdrive/MyDrive', 'rf_classifier',
                              'pkl_objects', 'forest.pkl')
    with open(model_path, 'rb') as f:
        forest_loaded = pickle.load(f)

    # Measure the performance of the reloaded model
    y_pred = forest_loaded.predict(X_test)
    print('Accuracy: %.3f' % accuracy_score(y_test, y_pred))

Conclusion

Python pickles are a useful tool for any programmer who works with complex data structures. By converting objects into byte streams and storing them for future use, pickles let us reload pre-trained models or other objects quickly instead of recreating them from scratch every time they are needed. So if you are looking for an easy way to store complex Python objects without losing any data along the way, pickle should definitely be on your radar!
