In this post, you will learn about different aspects of building a highly reliable machine learning system. Reliability is one of the key software quality attributes defined in the ISO/IEC 25000 SQuaRE series of standards.

Have you put measures in place to ensure high reliability of your machine learning systems? This post covers the following:

- What is the reliability of machine learning systems?

- Why bother about the reliability of machine learning models?

- Who should take care of the ML systems reliability?

What is the Reliability of Machine Learning Systems?

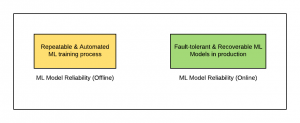

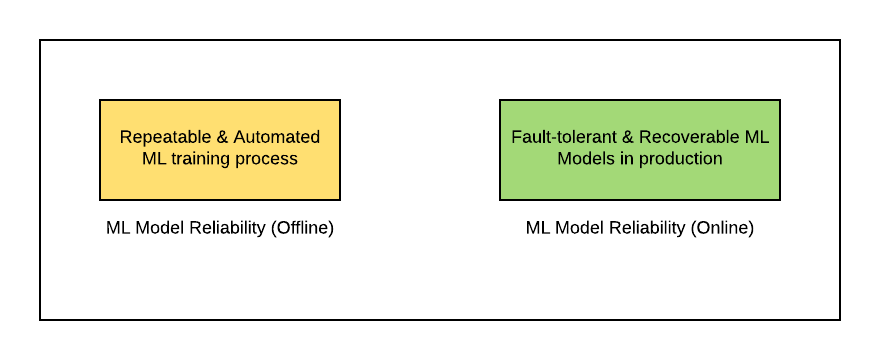

As with software applications, the reliability of machine learning systems relates primarily to the fault tolerance and recoverability of the system in production. In addition, the reliability of ML systems is also related to how reliable the training process of the ML models is. Let's look at both aspects in detail:

Fig: ML Model Reliability

Fault-tolerance/Recoverability of ML Systems in Production

Fault tolerance of an ML system can be defined as how the system behaves when model performance degrades beyond acceptable limits. Ideally, the system falls back either to one of the last best-performing serving models or to a simple heuristic model built from rules.
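The fallback behavior can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `FallbackRouter` class, the rolling-accuracy window, and the `min_accuracy` threshold are hypothetical names and values, not part of any particular serving framework, and the models are assumed to be plain callables.

```python
from collections import deque
from typing import Callable, Deque, Sequence

# Assumption: a model is any callable that maps a feature vector to a label.
Predictor = Callable[[Sequence[float]], int]


class FallbackRouter:
    """Serve the primary model until its recent accuracy drops too low."""

    def __init__(self, primary: Predictor, fallback: Predictor,
                 min_accuracy: float = 0.8, window: int = 50) -> None:
        self.primary = primary
        self.fallback = fallback          # last good model or rule-based heuristic
        self.min_accuracy = min_accuracy  # hypothetical acceptable limit
        self.outcomes: Deque[bool] = deque(maxlen=window)

    def record_outcome(self, was_correct: bool) -> None:
        """Store whether a past prediction matched the observed label."""
        self.outcomes.append(was_correct)

    def rolling_accuracy(self) -> float:
        """Accuracy over the most recent outcomes (1.0 when nothing is recorded)."""
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def is_degraded(self) -> bool:
        return self.rolling_accuracy() < self.min_accuracy

    def predict(self, features: Sequence[float]) -> int:
        """Route the request to the fallback while the primary is degraded."""
        model = self.fallback if self.is_degraded() else self.primary
        return model(features)
```

In a real system the fallback would typically be the previously deployed model or a hand-written rule set, and the accuracy signal would come from delayed ground-truth labels rather than immediate feedback.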

A key aspect of recoverability is recording the feature values and the corresponding predictions so that the data and related metrics can be monitored. These records help in building alternate models that could provide greater accuracy if the model performance starts degrading.
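As a minimal sketch of such recording, the snippet below appends one JSON line per prediction to a text sink. The function names `log_prediction` and `read_predictions`, the record fields, and the example feature names are assumptions made for illustration; a production system would more likely write to a feature store or a logging pipeline.

```python
import io
import json
import time
from typing import Any, Dict, IO, List


def log_prediction(sink: IO[str], model_version: str,
                   features: Dict[str, Any], prediction: Any) -> None:
    """Append one JSON line holding the features and the prediction made."""
    record = {
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
    }
    sink.write(json.dumps(record, sort_keys=True) + "\n")


def read_predictions(source: IO[str]) -> List[Dict[str, Any]]:
    """Load logged records back for monitoring or for retraining."""
    return [json.loads(line) for line in source if line.strip()]


# Usage with an in-memory buffer standing in for a log file.
buffer = io.StringIO()
log_prediction(buffer, "v1", {"age": 42, "income": 55000.0}, 1)
log_prediction(buffer, "v1", {"age": 23, "income": 18000.0}, 0)
buffer.seek(0)
records = read_predictions(buffer)
```

Keeping the model version next to each record makes it possible to compare the behavior of different models on the same logged inputs later.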

Reliability of ML training process

The reliability of the ML training process depends on how repeatable the model training process is. The goal is to detect problems with models and prevent faulty models from moving into production. One of the goals of operationalizing the training/testing process is to automate it end to end. As part of automating model training, the following would need to be achieved:

- Automated data extraction from disparate data sources

- Feature extraction

- Model training/testing

- Evaluating models

- Model selection

- Storing model evaluation metrics along with information such as the hyperparameters and the data used for training the models
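The last two steps above, model selection and storing run information, can be sketched as follows. The `TrainingRun` record, the `fingerprint_rows` helper, and the metric names are hypothetical and only illustrate what might be stored; a dedicated experiment tracking tool would normally take this role.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict, Iterable, List


def fingerprint_rows(rows: Iterable[Dict[str, Any]]) -> str:
    """Hash the training rows so a run can be tied to the exact data it used."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


@dataclass
class TrainingRun:
    """What to keep for every automated training run (hypothetical schema)."""
    model_name: str
    hyperparameters: Dict[str, float]
    data_fingerprint: str
    metrics: Dict[str, float]

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def select_best(runs: List[TrainingRun], metric: str) -> TrainingRun:
    """Model selection: pick the run with the highest value of the given metric."""
    return max(runs, key=lambda run: run.metrics[metric])


# Usage: two runs trained on the same data with different hyperparameters.
rows = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
data_id = fingerprint_rows(rows)
runs = [
    TrainingRun("logreg", {"C": 1.0}, data_id, {"accuracy": 0.81}),
    TrainingRun("logreg", {"C": 0.1}, data_id, {"accuracy": 0.86}),
]
best = select_best(runs, "accuracy")
```

Storing the data fingerprint next to the hyperparameters is what makes a run repeatable: the same inputs and settings can be looked up and replayed later.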

To prevent bad models from moving into production, different forms of quality checks would need to be performed on different aspects of ML models, such as the following:

- Data (data poisoning, data quality, data compliance)

- Features (feature thresholds, feature importance, unit tests)

- Models (fairness, stability, online-offline metrics)

- ML pipeline (pipeline security)
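One way to picture these checks is as a gate that a candidate model must pass before promotion. The sketch below is an assumption-laden illustration: the check names, the report keys, and every threshold are hypothetical and would need to be chosen for each project.

```python
from typing import Callable, Dict, List

Report = Dict[str, float]
Check = Callable[[Report], bool]

# Hypothetical checks; each returns True when the candidate passes.
CHECKS: Dict[str, Check] = {
    # Data: too many missing values suggests a data quality problem.
    "data_quality": lambda r: r["missing_value_ratio"] <= 0.05,
    # Features: every feature should carry at least some importance.
    "feature_importance": lambda r: r["min_feature_importance"] >= 0.01,
    # Models: offline and online metrics should not diverge much.
    "online_offline_gap": lambda r: abs(r["offline_accuracy"] - r["online_accuracy"]) <= 0.03,
}


def failed_checks(report: Report, checks: Dict[str, Check] = CHECKS) -> List[str]:
    """Return the names of all checks the candidate model did not pass."""
    return [name for name, check in checks.items() if not check(report)]


def can_promote(report: Report, checks: Dict[str, Check] = CHECKS) -> bool:
    """A model moves to production only when no check fails."""
    return not failed_checks(report, checks)


# Usage: a candidate whose training data has too many missing values.
candidate = {
    "missing_value_ratio": 0.20,
    "min_feature_importance": 0.02,
    "offline_accuracy": 0.91,
    "online_accuracy": 0.90,
}
blocked_by = failed_checks(candidate)
```

Returning the names of the failed checks, rather than a bare boolean, gives the team a concrete reason whenever a model is held back.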

Container technology along with workflow tools could be used to achieve a repeatable model training process.

Why bother about ML model reliability?

Reliability is one of the key characteristics of software product quality, as per the ISO/IEC 25000 SQuaRE standards for evaluating software product quality. Ensuring the reliability of models makes them more trustworthy, which leads to greater adoption by end users.

Who should take care of the ML systems reliability?

The following roles share responsibility for creating and monitoring a reliable ML model training/testing system:

- ML researchers/data scientists: Help design test cases (related to data, features, models, and pipeline) for testing the reliability of ML models.

- Quality assurance engineers: Play an important role in checking the outcomes of QA tests related to data, features, models, and the ML pipeline.

- Operations engineers: Play an important role in automating the ML pipeline.

Summary

In this post, you learned about different aspects of the reliability of a machine learning system. Creating a reliable ML system requires assuring both the reliability of the model in production and the reliability of the model training process. Reliability in production relates to the fault tolerance and recoverability of models, while training reliability means the repeatability of the ML training/testing process, which is achieved by automating it. As a data scientist or ML researcher, you have a key role in laying out guidelines for achieving reliability of ML systems.

