In this post, you will learn how Facebook uses machine learning to contain online terrorist propaganda. The following topics are discussed:

- High-level design of Facebook's machine learning solution for blocking inappropriate posts

- Threat model (attack vector) on Facebook's ML-powered solution

ML Solution Design for Blocking Inappropriate Posts

The following is the workflow Facebook uses to handle inappropriate messages posted by terrorist organizations or users.

- Train and test a text classification (ML/DL) model that flags a post as inappropriate if the post is found to contain words representing terrorist propaganda.

- In production, block the messages that the model predicts as inappropriate with very high confidence.

- Flag the messages for review by data analysts if the confidence level is not very high. These messages are prioritized based on the estimated likelihood of their being inappropriate.
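
The routing described in the workflow above can be sketched as follows. This is only an illustration, not Facebook's implementation: the threshold values, the lower cut-off below which a post is simply allowed, and the names `route_post` and `build_review_queue` are all assumptions made for the example. The post itself only says "very high confidence".

```python
# Hypothetical thresholds; the source only says "very high confidence".
BLOCK_THRESHOLD = 0.95   # assumed: at or above this score, block automatically
REVIEW_THRESHOLD = 0.50  # assumed: at or above this score, send to analysts


def route_post(score: float) -> str:
    """Map a model score (estimated probability of being inappropriate) to an action."""
    if score >= BLOCK_THRESHOLD:
        return "block"
    if score >= REVIEW_THRESHOLD:
        return "review"
    return "allow"


def build_review_queue(scored_posts):
    """Return IDs of posts needing analyst review, most likely inappropriate first."""
    to_review = [(post_id, score) for post_id, score in scored_posts
                 if route_post(score) == "review"]
    to_review.sort(key=lambda item: item[1], reverse=True)
    return [post_id for post_id, _ in to_review]


# Toy scores, invented for illustration.
scored = [("p1", 0.99), ("p2", 0.70), ("p3", 0.10), ("p4", 0.85)]
decisions = {post_id: route_post(score) for post_id, score in scored}
queue = build_review_queue(scored)
print(decisions)
print(queue)
```

With these toy scores, `p1` is blocked outright, `p3` is allowed, and `p4` is reviewed before `p2` because its score is higher.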

Threat Model (Attack Vector) on Facebook ML-powered Solution

The following describes a threat model under which terrorists could attack the ML model that Facebook claims to use, compromising both the system's integrity and its availability. The assumption here is that ISIS representatives are trying to subvert the ML-powered system.

- The hackers (hypothetically speaking, the users categorized as terrorists) get control of a user account belonging to a non-Islamic country. Alternatively, a hacker from a non-Islamic country opens an account on Facebook.

- The hacker starts posting encoded messages with the words of interest interleaved, making it hard for the data analyst to flag the posts as inappropriate.

- The ML model gets trained on the encoded messages, which were not flagged as inappropriate.

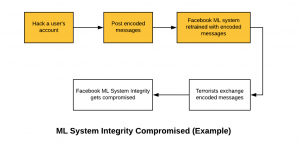

- In the future, the hackers (terrorists) start exchanging messages, compromising the ML system's integrity because the inappropriate messages pass through the system undetected. This is a classic example of false negatives. The following diagram represents the same.

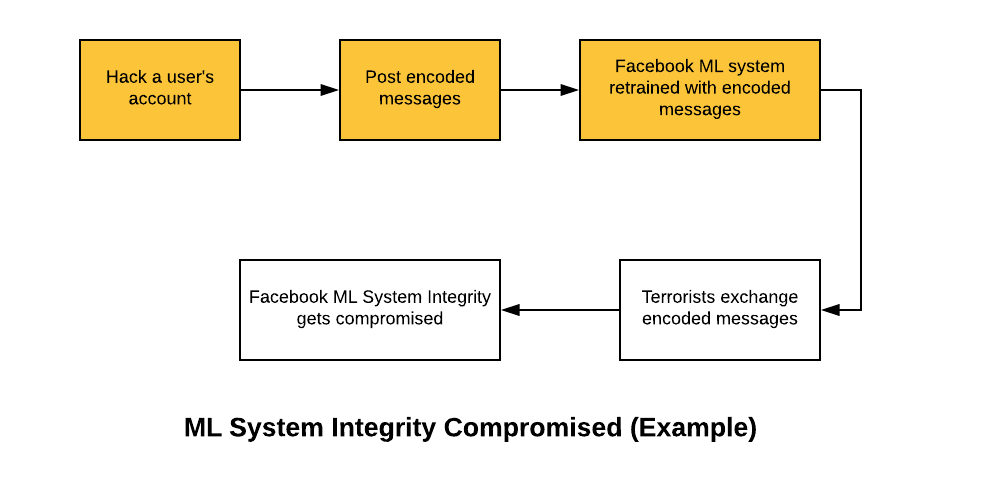

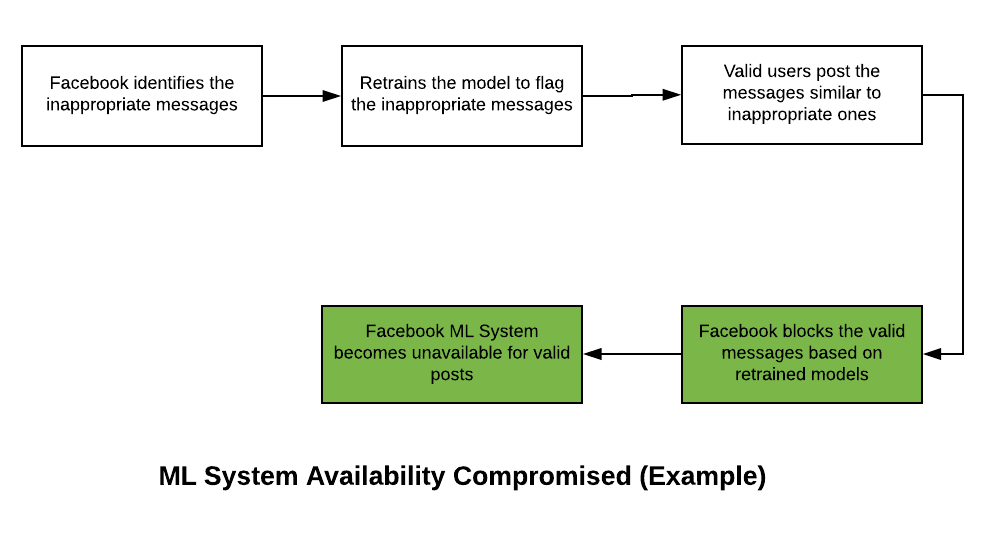

- Let's say Facebook later finds out about the encoded messages.

- It is then difficult to block these encoded messages, primarily because valid messages would also get blocked. This would compromise the ML system's availability. In simpler words, the Facebook ML system would make the platform unavailable to users posting valid messages. The following diagram represents the same.
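
The two failure modes in the threat model above map onto two standard error rates: missed inappropriate posts (false negatives, the integrity problem) and wrongly blocked valid posts (false positives, the availability problem). The sketch below computes both. The labels and predictions are invented toy data, and the helper name `error_rates` is hypothetical.

```python
def error_rates(y_true, y_pred):
    """Return (false_negative_rate, false_positive_rate).

    Labels: 1 = inappropriate post, 0 = valid post.
    """
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    positives = sum(y_true)
    negatives = len(y_true) - positives
    fnr = false_neg / positives if positives else 0.0
    fpr = false_pos / negatives if negatives else 0.0
    return fnr, fpr


# Toy ground truth: four inappropriate posts followed by four valid posts.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]

# Assumed scenario 1: the model was trained on encoded messages,
# so two encoded inappropriate posts slip through.
poisoned_pred = [1, 0, 0, 1, 0, 0, 0, 0]

# Assumed scenario 2: the encoded words are blocked outright,
# so two valid posts that use the same words are blocked too.
overblocking_pred = [1, 1, 1, 1, 1, 1, 0, 0]

print(error_rates(y_true, poisoned_pred))      # integrity: false negatives rise
print(error_rates(y_true, overblocking_pred))  # availability: false positives rise
```

Tracking both rates together makes the trade-off visible: suppressing the encoded messages lowers the false negative rate only by raising the false positive rate for legitimate users.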

Summary

In this post, you learned how Facebook went about designing a machine learning solution to contain online terrorist propaganda. The challenge is to make sure that the model is retrained appropriately and to look for adversarial data sets entered into the system by hackers (the terrorist organization) to train the model inappropriately.

