In this post, you will learn about data quality assessment frameworks / techniques in relation to machine learning and why one needs to assess data quality for building high-performance machine learning models? As a data science architect or development manager, you must get a sense of the importance of data quality in relation to building high-performance machine learning models. The idea is to understand what is the value of data set. The goal is to determine whether the value of data can be quantised. This is because it is important to understand whether the data contains rich information which could be valuable for building models and inform stakeholders on data collection strategy and other aspects.

Here are the top 3 data assessment frameworks which can be considered for assessing or evaluating data quality when training models using supervised learning algorithms:

- Data Shapley

- Leave-one-out (LOO) technique

- Data valuation using Reinforcement Learning (DVRL)

Here are some of the topics which will be covered in this post:

- Why one needs to assess data quality?

- Data quality assessment frameworks for machine learning

What’s the need for assessing data quality?

In order to train machine learning models having high performance, it is of utmost importance to make sure that the data used for training the models is of very high quality. As per one statistics gathered from a survey, 43% respondent said that data quality is the biggest machine learning barrier.

What is low-quality dataset?

The following represents scenarios in which the quality of the data used for training machine learning models can said to be low:

- Incorrectly labeled data set: This can be data with incorrect labels.

- Low-quality data set: This can be data with missing values or wrong values. In case of images, this could be unclear image. Here is an example of the low-quality data:

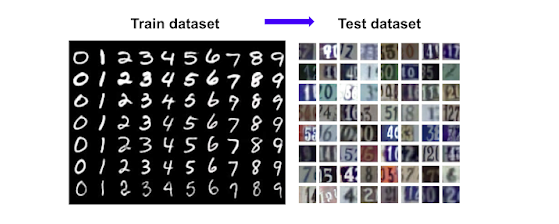

- Data samples mis-match in the training and test data: Here is an example of the scenario when the training and the test data set do not match:

Why assess the Data Quality?

Here are couple of reasons why you must assess the data quality while training one or more machine learning models:

- Achieve models having high performance: Many a times, model trained with corrupted, low-quality or incorrectly labels data samples impact the model performance in the sense the model fails to generalize for the population. In such scenarios, removing low-quality or incorrectly labeled data can often result in increase in the model performance. In addition, the scenario in which the training and test data samples do not match, one can achieve higher model performance by restricting the data samples in the training data which matches to the test data set.

- Assess whether machine learning models can be enabled in SaaS settings: In SaaS-enabled solution offerings where the models depend upon the customers’ dataset, it can only be asserted based on the quality of data whether a machine-learning based solution offerings can be provided to SaaS customers.

- Interesting use cases: Assessing data quality using some sort of quantification referring high, medium, low, very low quality data can lead to some interesting use cases.

- Determine data collection strategy: Many a times, quantifying data quality results in putting strategy for data collection for different data sources.

Data Quality Assessment Frameworks for Machine Learning

Here are some of the interesting data quality assessment frameworks which can be used to quantify the data quality in order to realise the benefits of having high quality dataset for training ML models as mentioned in previous section.

Data Valuation using Reinforcement Learning (DVRL)

Google has tested a framework called as data value estimator framework which applies reinforcement learning (instead of gradient descent methods) to estimate data values and selects the most valuable samples to train the predictor model. It is a novel meta learning framework for data valuation which determines how likely each training sample will be used in training of the predictor model. The model training process includes both the data value estimator along with the model training algorithm. With data value estimator, more valuable training samples are used time and again to train the model than less valuable samples. The animation below represents the aspect of using data value estimator being used with the predictive model. Pay attention to how data value estimator, sample and predictive model along with loss computation is used to find the data points having high value.

It is found the Google DVRL framework performs the best out of the three data quality valuation framework (such as Data Shapley, LOO & DVRL) listed in this post in terms of determining the value of each data point.

Data Shapley

Data Shapley is an equitable framework which is used to quantify the value of individual training sources. The Data Shapley framework uniquely satisfies three natural properties of equitable data valuation which related to the following:

- If adding a data point to the training data set does not change the model performance, the value of the data point is zero.

- If adding two different values does not change the model performance, then both the data points will have equal value.

Data Shapley provides a metric to evaluate each training data point with respect to the machine learning model performance.

Here is an example Python Jupyter notebook of how to use Data Shapley to evaluate the value of the data. The related paper can be found here – What is your data worth? Equitable valuation of data

Leave One Out (LOO) Technique

Leave one out method is one of the most common approach of evaluating the model performance based on the training data set. This method is most widely used method to find the value of each of the data points. In this method, the value of every data points is found out by removing each data point from the training data set and measure the model performance before and after. The value of the removed data point is calculated by subtracting the model performance before and after removing data point. In LOO method, the idea is to evaluate the model performance after removing the data point.

LOO method has the limitations in terms of computational infeasibility to re-train and re-evaluate the model on each data point or single datum.

Other limitations with LOO method is that equitable valuation conditions listed in previous section (Data Shapley) are not satisfied.

Conclusions

Here is the summary of what you learned in this post about evaluating or assessing the value of data points using different frameworks thereby determining the data quality:

- It is important to determine data quality as it helps in achieving model of high performance by removing data of low quality.

- At times, quantifying the value of data points also enable to find out interesting use cases.

- Quantifying the value of data will also help in data collection strategy.

- In SaaS based AI offerings, it is of utmost importance to determine quality of data coming from different customers and train the model appropriately or call out why the models of optimal performance can’t be achieved.

- Data Shapley can be used for determining value of data points.

- Leave one out method can also be used for determining value of data points. However, there are several limitations of using LOO method such as computational infeasibility in case of large data sets, equitable conditions being not met etc.

- Data valuation estimation framework using reinforcement learning by Google can also be used to evaluate the value of data points. Unlike other techniques, DVRL framework uses data value estimator and predictive models in conjunction to determine the value of data points.

- The Watermelon Effect: When Green Metrics Lie - January 25, 2026

- Coefficient of Variation in Regression Modelling: Example - November 9, 2025

- Chunking Strategies for RAG with Examples - November 2, 2025

I found it very helpful. However the differences are not too understandable for me