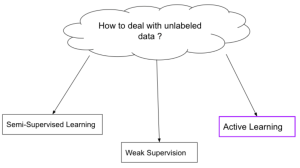

Supervised learning is a type of machine learning in which an algorithm learns from a labeled dataset and generalizes to new data. The labels act as supervisors: they provide the feedback the algorithm needs to learn a mapping from input data to the correct output labels. In this blog post, we’ll focus on weakly supervised learning, a family of approaches that train models when labels are limited, noisy, or imprecise rather than complete and accurate. We’ll cover some of the most common weak supervision techniques and provide examples of each.

What is Weakly Supervised Learning?

Weakly supervised learning is a way of training machine learning models when only limited, noisy, or incomplete labels are available. This contrasts with fully (or strongly) supervised learning, where the learner receives a large number of accurate labels. For example, an image classifier trained on only a handful of labeled dog photos, or on photos whose labels are sometimes wrong, will have a harder time learning the concept of “dog” than one trained on many correctly labeled photos. Weak supervision is useful when there is not enough clean labeled data to train a fully supervised model. Combining several independent weak sources can also produce more robust models that do not rely on a single source of labels.

In many real-world applications, weak labels are much easier to obtain than strong labels. For example, weak labels can be generated by heuristic rules, crowdsourcing, or transfer learning from related tasks. Weak supervision has been shown to be effective in a variety of tasks, such as image classification, text classification, and object detection.
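
As a minimal sketch of weak labels produced by heuristic rules, the Python below assumes a toy sentiment task. The three rules (`lf_contains_great`, `lf_contains_terrible`, `lf_exclamation`), the label constants, and the majority-vote combiner `weak_label` are hypothetical illustrations, not any particular library’s API. Dedicated weak supervision tools usually learn how much to trust each rule instead of counting votes equally.

```python
from collections import Counter

# Hypothetical label constants for a toy sentiment task.
POSITIVE, NEGATIVE, ABSTAIN = 1, 0, -1


# Each hypothetical "labeling function" is a cheap heuristic that votes or abstains.
def lf_contains_great(text: str) -> int:
    return POSITIVE if "great" in text.lower() else ABSTAIN


def lf_contains_terrible(text: str) -> int:
    return NEGATIVE if "terrible" in text.lower() else ABSTAIN


def lf_exclamation(text: str) -> int:
    # Deliberately noisy rule: an exclamation mark often (not always) signals enthusiasm.
    return POSITIVE if text.endswith("!") else ABSTAIN


LABELING_FUNCTIONS = [lf_contains_great, lf_contains_terrible, lf_exclamation]


def weak_label(text: str) -> int:
    """Combine the rules by simple majority; abstain if no rule fires or on a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return ABSTAIN  # the rules disagree evenly, so emit no label
    return counts[0][0]


if __name__ == "__main__":
    docs = [
        "Great phone, works well",
        "Terrible battery life",
        "Terrible service!",
        "Arrived on Tuesday",
    ]
    for doc in docs:
        print(doc, "->", weak_label(doc))
```

Here "Great phone, works well" gets a positive label and "Terrible battery life" a negative one, while "Terrible service!" stays unlabeled because the two rules that fire disagree. That disagreement is exactly the kind of noise weak supervision has to cope with.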

Put another way, in weakly supervised learning the learner receives only partial or imperfect feedback about the target function, whereas a fully supervised learner is given complete, accurate labels. The key difference is that weak supervision must tolerate label noise, so it can be used when the training labels are not completely accurate. The trade-off is a greater risk that the model fits the noise instead of the true signal. Weak supervision is usually cheaper than full supervision because it requires far less hand-labeled data. However, it is important to consider carefully whether weak supervision suits a given problem, because systematic errors in the weak labels can introduce significant bias into the model.

Common Weak Supervision Techniques

There are a few weak supervision techniques that are commonly used in machine learning. These techniques extract labels from otherwise unlabeled data, which is extremely helpful when labeled data is expensive or difficult to obtain. Some of the most common techniques are listed below:

- Bootstrapping

- Co-training

- Multi-instance learning

Bootstrapping

Bootstrapping (often called self-training) is a weak supervision technique for training data that is not fully labeled. A model is trained on a small set of labeled data and then used to predict labels for the unlabeled data. Its most confident predictions are added to the training set, and the process repeats until the entire dataset is labeled or no confident predictions remain. Bootstrapping is effective because it lets the model learn from much more data without manual labeling. Keeping only high-confidence predictions helps limit label noise, but errors that slip in early can be reinforced in later rounds, so the confidence threshold matters. For these reasons, bootstrapping is a popular weak supervision technique.
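
The loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: 1-D numeric features, a nearest-centroid classifier as the model, and a distance margin as the confidence score. The function names and the `min_margin` threshold are hypothetical choices, and the seed set must contain at least two classes.

```python
from statistics import mean


def fit_centroids(labeled):
    """Nearest-centroid 'model': the mean feature value of each class."""
    classes = {label for _, label in labeled}
    return {c: mean(x for x, label in labeled if label == c) for c in classes}


def predict_with_confidence(centroids, x):
    """Return (label, margin): how much closer x is to its nearest centroid
    than to the runner-up. A larger margin means a more confident prediction.
    Assumes at least two classes."""
    ranked = sorted(centroids, key=lambda c: abs(x - centroids[c]))
    best, runner_up = ranked[0], ranked[1]
    margin = abs(x - centroids[runner_up]) - abs(x - centroids[best])
    return best, margin


def self_train(labeled, unlabeled, min_margin=1.0, max_rounds=10):
    """Bootstrapping loop: repeatedly pseudo-label only the confident points."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        if not unlabeled:
            break
        centroids = fit_centroids(labeled)  # retrain on everything labeled so far
        confident, uncertain = [], []
        for x in unlabeled:
            label, margin = predict_with_confidence(centroids, x)
            if margin >= min_margin:
                confident.append((x, label))
            else:
                uncertain.append(x)
        if not confident:
            break  # nothing clears the threshold; stop instead of guessing
        labeled.extend(confident)  # only high-confidence pseudo-labels are kept
        unlabeled = uncertain
    return labeled, unlabeled


if __name__ == "__main__":
    seed = [(0.0, "A"), (10.0, "B")]
    pool = [1.0, 2.0, 4.0, 5.0, 8.0, 9.0]
    labeled, remaining = self_train(seed, pool)
    print(sorted(labeled))
    print("still unlabeled:", remaining)
```

With seed points 0.0 (A) and 10.0 (B), the first round pseudo-labels the five clear-cut points. The second round stops and leaves 5.0 unlabeled, because its margin stays below the threshold even after the centroids move.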

Co-training

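Co-training is a weak supervision technique in which two models are trained on different views of the same data, that is, different subsets of the features (for example, the text of a web page and the text of the links pointing to it). Each model labels the unlabeled examples it is most confident about, and those examples are added to the shared training data for the next round, so each model learns from labels the other one supplied. This works well when unlabeled data is abundant, each view is informative enough on its own to predict the label, and the two views make reasonably independent errors.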
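
The sketch below illustrates the co-training loop, assuming each example is a pair of 1-D features (one per view) and using a nearest-centroid classifier per view. The names `fit_view`, `predict_view`, and `co_train` are hypothetical. As a simplification, each classifier adds its top `per_round` most confident examples per round, rather than a fixed number per class as in the original formulation.

```python
from statistics import mean

# Each example is a pair (view_0_feature, view_1_feature): two separate
# descriptions of the same object.


def fit_view(labeled, view):
    """Nearest-centroid classifier that looks at only one view (0 or 1)."""
    classes = {label for _, label in labeled}
    return {c: mean(x[view] for x, label in labeled if label == c) for c in classes}


def predict_view(centroids, x, view):
    """Return (label, margin) using only the given view of x (needs two or more classes)."""
    ranked = sorted(centroids, key=lambda c: abs(x[view] - centroids[c]))
    best, runner_up = ranked[0], ranked[1]
    margin = abs(x[view] - centroids[runner_up]) - abs(x[view] - centroids[best])
    return best, margin


def co_train(labeled, unlabeled, per_round=1, rounds=5):
    """Each round, each view's classifier labels its most confident examples,
    which join the shared labeled pool that both classifiers train on."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(rounds):
        for view in (0, 1):
            if not unlabeled:
                return labeled, unlabeled
            centroids = fit_view(labeled, view)
            scored = [(predict_view(centroids, x, view), x) for x in unlabeled]
            scored.sort(key=lambda item: item[0][1], reverse=True)  # most confident first
            for (label, _margin), x in scored[:per_round]:
                labeled.append((x, label))
                unlabeled.remove(x)
    return labeled, unlabeled


if __name__ == "__main__":
    seed = [((0.0, 0.0), "A"), ((10.0, 10.0), "B")]
    pool = [(1.0, 5.0), (5.0, 9.0)]
    labeled, rest = co_train(seed, pool)
    print(labeled[2:], rest)
```

In this toy run, (1.0, 5.0) is ambiguous in view 1 but clear in view 0, and (5.0, 9.0) is the reverse. The view-0 classifier labels the first point as A, and the view-1 classifier then labels the second as B, so each view supplies a label the other could not.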

Multi-instance learning

Multi-instance learning is a type of weakly supervised learning that has been shown to be effective in a variety of settings. Instead of labeling individual instances, annotators label collections of instances, called bags. Under the standard assumption, a bag is labeled positive if at least one of its instances is positive and negative if none are. Bag-level labels are cheaper to obtain than instance-level labels, which makes this approach attractive when resources are limited. The challenge is that the learner must work out which instances within each bag are responsible for the bag’s label. Multi-instance learning algorithms have been successfully applied to tasks such as object detection and image classification, and have the potential to be applied to other settings as well.

In multi-instance learning, a training example is not a single feature vector but a bag of feature vectors, and the label is attached to the bag rather than to each vector. This makes it possible to train models that would normally require instance-level annotations using only bag-level annotations. Multi-instance learning can be used in a number of different ways, but it is most commonly used in computer vision and text classification. When only bag-level labels are available, multi-instance algorithms have been reported to outperform traditional supervised algorithms that simply copy each bag’s label to all of its instances, which makes them an important tool for practitioners of weakly supervised learning.
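
To show how a model can be trained from bag labels alone, here is a deliberately tiny sketch. It assumes each instance is reduced to one numeric score and relies on the standard assumption (a bag is positive if any instance is positive). The learner picks the instance-score threshold with the fewest bag-level errors. The names `bag_label`, `predict_bag`, and `fit_threshold` are hypothetical, and real multi-instance methods learn much richer instance models.

```python
def bag_label(instance_labels):
    """Standard MIL assumption: a bag is positive (1) iff any instance is positive."""
    return int(any(instance_labels))


def predict_bag(bag, threshold):
    # Call an instance positive when its score exceeds the threshold, then
    # derive the bag label from the standard MIL assumption.
    return bag_label(score > threshold for score in bag)


def fit_threshold(bags, labels):
    """Choose the instance-score threshold with the fewest bag-level errors.
    Only bag labels are used; no instance is ever individually labeled."""
    maxima = sorted({max(bag) for bag in bags})
    # Candidate thresholds: below every bag maximum, between neighbours, and at the top.
    candidates = [maxima[0] - 1.0]
    candidates += [(lo + hi) / 2 for lo, hi in zip(maxima, maxima[1:])]
    candidates.append(maxima[-1])

    def bag_errors(threshold):
        return sum(predict_bag(bag, threshold) != y for bag, y in zip(bags, labels))

    return min(candidates, key=bag_errors)


if __name__ == "__main__":
    bags = [
        [0.1, 0.3, 0.9],  # positive bag: one instance looks positive
        [0.2, 0.8],       # positive bag
        [0.1, 0.2, 0.4],  # negative bag: no instance is positive
        [0.3, 0.35],      # negative bag
    ]
    labels = [1, 1, 0, 0]
    t = fit_threshold(bags, labels)
    print(f"threshold = {t:.2f}")
    # The bag-trained threshold can now label individual instances as well.
    print([score > t for score in bags[0]])
```

On these four bags the learned threshold is about 0.6, which separates the bags with no errors. Applied to the first bag, it flags only the 0.9 instance as positive, even though no instance label was ever provided.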

Conclusion

Weakly supervised learning algorithms provide a way to train models when traditional labeled datasets are either unavailable or too noisy to be useful. These algorithms have applications in many different fields, including computer vision and natural language processing tasks such as text classification. If you’re working with limited or noisy labels, consider using one of the weak supervision techniques described above to train your model.
