In this post, you will learn the concepts behind generative adversarial networks (GANs), along with examples. The idea is to bring together key concepts and some interesting examples from across the industry to give a perspective on the problems GANs can solve. As data scientists or machine learning engineers, we need a solid understanding of GAN concepts to apply them to real-world problems, and this is where GAN examples prove helpful.

What is Generative Adversarial Network (GAN)?

Let's try to understand the concepts of GANs with the help of a real-life example.

Imagine that you’re working for a major bank and have been tasked with improving its fraud detection system. The system is designed to distinguish fraudulent transactions from genuine ones, and over time you’ve trained it to identify fraudulent activity quite accurately.

However, cybercriminals are continuously trying to bypass your fraud detection system. When they realize that their fraudulent transactions are being detected, they change their methods, trying to mimic genuine transactions as closely as possible.

To tackle this, you further refine your fraud detection system by continuously learning from these new types of fraudulent transactions. Your system gets better and better at detecting even the most sophisticated attempts to mimic genuine transactions.

On the other hand, when the cybercriminals realize their new methods are also being detected, they adjust their tactics again, making their fraudulent transactions look even more like genuine transactions.

This dynamic mirrors the two networks that make up a Generative Adversarial Network (GAN). The fraud detection system is like the discriminator in a GAN, trying to identify which transactions are real and which are fake, while the cybercriminals act like the generator, trying to produce fraudulent transactions that look like real ones.

How does the training process of GAN work?

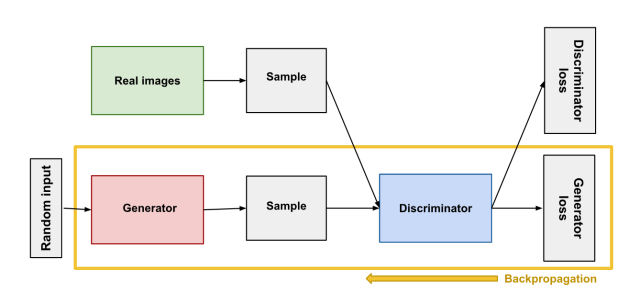

Training a GAN is an iterative, competitive process that improves both the quality of the fraudulent transactions (making them look more like genuine ones) and the quality of the fraud detection system. The generator tries to create data that imitates the real data, while the discriminator gets better at distinguishing real data from fake data. The picture above (Courtesy: Google) represents the GAN training process. Two networks are trained: the discriminator network and the generator network. The orange box represents the training of the generator.

In the initial stages, the generator (think of it as our cybercriminals) produces transactions that are obviously fraudulent, much like the noisy images a standard GAN produces early in training. At the same time, the discriminator (analogous to our bank’s fraud detection system) makes predictions that can be well off the mark, because it is still learning to distinguish real transactions from fraudulent ones. Recall that the generator in a GAN plays the same role as the decoder in a Variational Autoencoder (VAE): it transforms a vector in the latent space into an image.
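To make the decoder analogy concrete, here is a minimal sketch of a generator as a function from a latent vector to a small image. It assumes a single dense layer with untrained, randomly initialised weights and a `tanh` output (a common choice when pixel values are scaled to [-1, 1]). `make_generator` is a hypothetical helper; a real generator would stack several learned (de)convolutional layers.

```python
import math
import random


def make_generator(latent_dim, height, width, seed=0):
    """Return a toy generator mapping a latent vector to a height x width image.

    Hypothetical sketch: one dense layer with random, untrained weights.
    """
    rng = random.Random(seed)
    n_pixels = height * width
    # weights[i][j] connects latent coordinate j to output pixel i
    weights = [[rng.gauss(0.0, 0.5) for _ in range(latent_dim)] for _ in range(n_pixels)]
    biases = [0.0] * n_pixels

    def generate(z):
        if len(z) != latent_dim:
            raise ValueError(f"expected a latent vector of length {latent_dim}")
        # dense layer followed by tanh, so every pixel lies in [-1, 1]
        flat = [math.tanh(sum(w * zj for w, zj in zip(row, z)) + b)
                for row, b in zip(weights, biases)]
        # reshape the flat pixel list into image rows
        return [flat[r * width:(r + 1) * width] for r in range(height)]

    return generate


# Usage: sample a latent vector and decode it into a 4x4 "image"
sampler = random.Random(42)
generator = make_generator(latent_dim=8, height=4, width=4)
z = [sampler.gauss(0.0, 1.0) for _ in range(8)]
image = generator(z)
```

Different latent vectors decode to different images; training would adjust `weights` and `biases` so that the decoded images resemble the real data.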

The crux of GANs, however, lies in the way training alternates between the two networks. As the cybercriminals (our generator) get better at mimicking genuine transactions to fool the fraud detection system (the discriminator), the detection system must adapt and evolve to keep flagging fraudulent transactions accurately.
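The alternating schedule can be sketched on a deliberately tiny problem. All of the following are illustrative assumptions, not part of any specific GAN: the real data are 1-D samples from N(4, 1), the generator is G(z) = a*z + b, and the discriminator is a logistic regression D(x) = sigmoid(w*x + c). Each iteration first updates the discriminator with the generator frozen, then updates the generator with the discriminator frozen, using the non-saturating generator loss -log D(G(z)) suggested in the original GAN paper. Gradients are derived by hand so the sketch needs only the standard library. It illustrates the structure of the loop, not convergence: with a discriminator this simple, the parameters may oscillate around the equilibrium rather than settle.

```python
import math
import random


def sigmoid(s):
    # numerically stable logistic function
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=32, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on a 1-D toy problem."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + c)
    history = []
    for _ in range(steps):
        # 1) Discriminator step (generator frozen):
        #    minimise -log D(real) - log(1 - D(fake))
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x
            gc += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # 2) Generator step (discriminator frozen):
        #    minimise the non-saturating loss -log D(G(z))
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            d = sigmoid(w * (a * z + b) + c)
            ga += (d - 1.0) * w * z
            gb += (d - 1.0) * w
        a -= lr * ga / batch
        b -= lr * gb / batch

        history.append((a, b, w, c))
    return history


history = train_toy_gan()
print("final a, b, w, c:", history[-1])
```

In practice, GANs are trained with frameworks such as PyTorch or TensorFlow, which compute these gradients automatically; the two-phase structure of each iteration stays the same.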

While training a GAN, this cycle of improvement in creation and discrimination continues until an equilibrium is reached: the fraudulent transactions are so similar to the genuine ones that the fraud detection system can hardly tell them apart, and further changes to the cybercriminals’ tactics no longer raise their success rate. In a GAN, this is the point where the generator creates outputs nearly indistinguishable from the real data, and the discriminator is correct only about 50% of the time, no better than random guessing.
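The 50% figure follows from the original GAN formulation (Goodfellow et al., 2014), in which the two networks play a minimax game over a value function V(D, G). For a fixed generator whose outputs follow the distribution p_g, the optimal discriminator has a closed form, and it outputs exactly 1/2 everywhere once p_g matches the data distribution p_data:

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

D^{*}_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
\quad\Longrightarrow\quad
D^{*}_G(x) = \tfrac{1}{2} \ \text{ for all } x \text{ when } p_g = p_{\text{data}}
```

In the fraud analogy, p_data is the distribution of genuine transactions and p_g is the distribution of the criminals’ forgeries; once the two coincide, the best possible detector can do no better than a coin flip.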

GAN Examples

Here are some examples of how GANs are used:

- Text to image translation

- Image editing / manipulation

- Creating images (2-dimensional images)

- Recreating images of higher resolution

- Creating 3-dimensional objects

Text to image translation / conversion

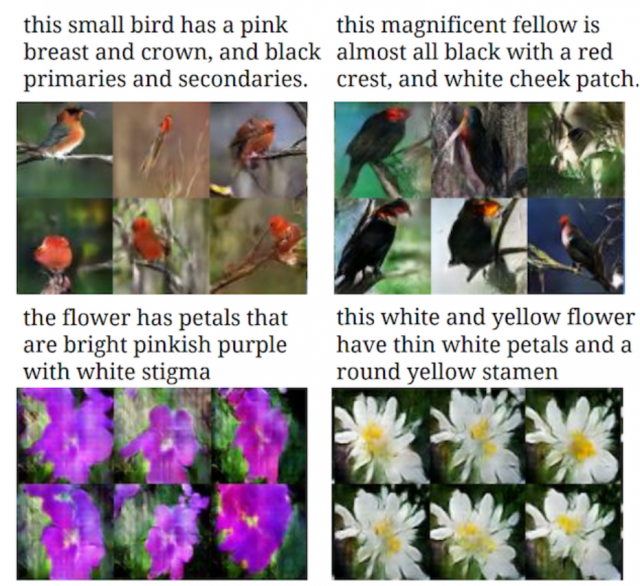

GANs can be used to convert (translate) text into images, as in the example above. There are several papers listed on this page in relation to text-to-image translation. Frameworks such as StackGAN can be used to generate photo-realistic images from text descriptions.
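A common way to make a generator depict a particular description is to condition it on the text: the generator input is the random noise vector concatenated with an embedding of the description. The sketch below shows only that input construction. `embed_text` is a hypothetical stand-in (a hashed bag of words) for the learned text encoder a real system would use, and neither function reproduces StackGAN itself.

```python
import random
import zlib


def embed_text(text, dim=16):
    """Hypothetical stand-in for a learned text encoder: a hashed bag of words."""
    vec = [0.0] * dim
    words = text.lower().split()
    for word in words:
        # crc32 is deterministic across runs, unlike Python's salted hash()
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    n = max(len(words), 1)
    return [v / n for v in vec]


def conditional_generator_input(text, noise_dim=8, text_dim=16, seed=None):
    """Concatenate random noise with a text embedding to form the generator input."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + embed_text(text, text_dim)


x = conditional_generator_input("a small bird with a red head", seed=0)
print(len(x))  # 24 = 8 noise values + 16 text features
```

Keeping the noise separate from the text means one description can still yield many different images: changing `seed` changes the noise part while the text part stays fixed.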

Image editing / manipulation

GANs can be used for image editing. Here is a good read on using GANs for image editing, and here is another paperswithcode page on using GANs for image manipulation. The picture below shows how the place would have looked in the winter season.

Recreate Photographs / Images

This is a very interesting use case: GANs can be used to recreate different photographs from the same image. Here is a great read on creating photographs using GANs.

Creating images of higher resolution

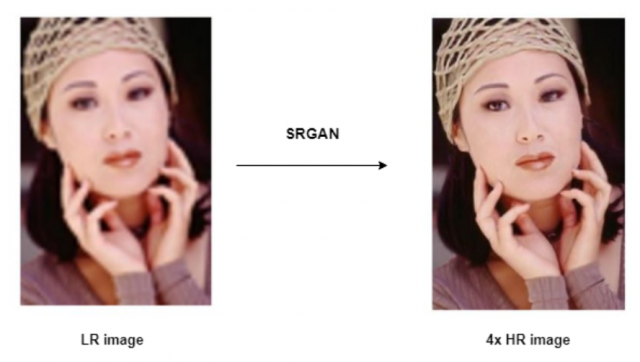

GANs can be used to create higher-resolution images, for example with a Super Resolution GAN (SRGAN), which upscales images to much higher resolutions. An SRGAN combines the adversarial nature of GANs with deep neural networks to learn how to generate upscaled images (up to four times the resolution of the original). The photo above shows a high-resolution image produced using SRGAN.
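In the SRGAN paper (Ledig et al., 2017), the generator is trained with a perceptual loss: a content loss plus the adversarial loss weighted by 10^-3. The sketch below shows only that weighting. It assumes a plain pixel-wise MSE as the content loss (the paper’s main variant computes the content loss on VGG feature maps, which is not reproduced here), represents images as flat lists of pixel values, and treats `d_scores` as hypothetical discriminator probabilities D(G(I_LR)) that each super-resolved image is real. Both function names are illustrative.

```python
import math


def pixel_mse(sr, hr):
    """Mean squared error between super-resolved (sr) and high-res (hr) pixels."""
    if len(sr) != len(hr):
        raise ValueError("images must have the same number of pixels")
    return sum((s - h) ** 2 for s, h in zip(sr, hr)) / len(hr)


def srgan_style_generator_loss(sr, hr, d_scores, adv_weight=1e-3):
    """Content loss plus a down-weighted adversarial loss, as in SRGAN's perceptual loss.

    Assumption: pixel-wise MSE replaces the paper's VGG feature-space content loss.
    """
    content = pixel_mse(sr, hr)
    # adversarial term: sum of -log D(G(I_LR)) over the super-resolved images
    adversarial = sum(-math.log(d) for d in d_scores)
    return content + adv_weight * adversarial


loss = srgan_style_generator_loss([0.2, 0.4, 0.6], [0.25, 0.4, 0.5], d_scores=[0.3])
```

The small adversarial weight keeps the output faithful to the ground truth, while the adversarial term pushes the generator toward sharper, more natural-looking detail than a content loss alone tends to produce.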

3D Object Generation

GANs can be used to create 3-dimensional objects. One novel framework, the 3D Generative Adversarial Network (3D-GAN), generates 3D objects from a probabilistic space by leveraging recent advances in volumetric convolutional networks and generative adversarial nets.
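In the 3D-GAN paper, the generator maps a 200-dimensional latent vector to a 64×64×64 voxel cube, where each cell holds the probability that the cell is occupied. To make that voxel representation concrete without a trained network, the sketch below swaps the generator for a hypothetical hand-written function of a 2-dimensional latent vector on an 8×8×8 grid, then thresholds the probabilities into a solid shape. `toy_voxel_generator` and the meaning of its latent coordinates are illustrative assumptions, not the 3D-GAN architecture.

```python
import math


def toy_voxel_generator(z, size=8):
    """Hypothetical stand-in for a trained 3D generator.

    Maps a 2-D latent vector to a size x size x size grid of occupancy
    probabilities: z[0] controls the object's radius and z[1] squashes it
    along the second axis (illustrative choices only).
    """
    radius = 1.5 + abs(z[0])
    squash = 1.0 + abs(z[1])
    centre = (size - 1) / 2.0
    grid = []
    for i in range(size):
        plane = []
        for j in range(size):
            row = []
            for k in range(size):
                dist = math.sqrt((i - centre) ** 2 + ((j - centre) * squash) ** 2 + (k - centre) ** 2)
                # logistic occupancy: above 0.5 inside the radius, below 0.5 outside
                row.append(1.0 / (1.0 + math.exp(dist - radius)))
            plane.append(row)
        grid.append(plane)
    return grid


def to_voxels(grid, threshold=0.5):
    """Threshold occupancy probabilities into a boolean voxel grid (True = filled)."""
    return [[[p > threshold for p in row] for row in plane] for plane in grid]


def count_filled(voxels):
    return sum(v for plane in voxels for row in plane for v in row)


small = count_filled(to_voxels(toy_voxel_generator([0.0, 0.0])))
large = count_filled(to_voxels(toy_voxel_generator([1.0, 0.0])))
```

Moving through the latent space changes the generated shape (here, a larger `z[0]` fills more voxels), which is the property a trained 3D-GAN exploits to produce varied objects.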
