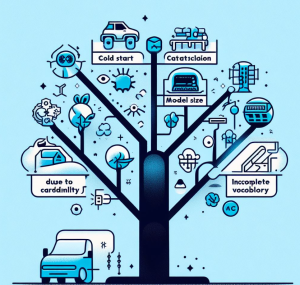

Have you ever encountered unfamiliar words while learning a new language and didn’t know their meanings? Or tried to fit all your belongings into a suitcase, only to realize it’s too full? Or started reading a book series from the third book and felt lost? These scenarios in our daily lives surprisingly resemble some challenges we face with categorical variables in machine learning. Categorical variables, while essential in many datasets, bring with them a unique set of challenges. In this article, we’ll be discussing three major problems associated with categorical features:

- Incomplete Vocabulary

- Model Size due to Cardinality

- Cold Start

Let’s explore each with real-life examples and supporting Python code snippets.

Incomplete Vocabulary

The “Incomplete Vocabulary” problem arises when the categories a model sees at test (or prediction) time do not match those it saw during training: a categorical variable in the testing set contains categories that were never present in the training set. Because the model has never encountered these unseen categories or learned any patterns associated with them, it is uncertain about them at best and outright unable to make predictions for them at worst.

The following are some implications of the incomplete vocabulary problem:

- Model Failure: Pipelines that rely on strict categorical encoding (for example, a one-hot encoder whose columns were fixed from the training categories) might throw errors when they encounter categories not seen during training.

- Misleading Predictions: In cases where the model doesn’t fail, it might produce unreliable or misleading predictions for unseen categories, as it’s essentially making a “blind guess” without having been trained on those particular categories.

Imagine you’re building a model to predict the popularity of music genres. Your training dataset contains observations related to genres such as “Rock”, “Pop”, and “Jazz”. If you then have a testing dataset that includes a genre like “Reggae”, which was not present in the training dataset, the model will face the “Incomplete Vocabulary” problem. It won’t know how to interpret or make predictions for “Reggae”, since it has never seen data related to it before.
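To make the failure mode concrete, here is a minimal sketch of a strict one-hot encoder whose vocabulary is built only from the training genres. The genre list and the `one_hot_strict` helper are illustrative assumptions, not a specific library's API. Library encoders can behave the same way: for example, scikit-learn's `OneHotEncoder` with its default `handle_unknown='error'` raises a `ValueError` on categories it did not see during fitting.

```python
# Vocabulary learned from the training data only (illustrative genres)
train_genres = ["Rock", "Pop", "Jazz", "Pop", "Rock"]
vocab = sorted(set(train_genres))  # ['Jazz', 'Pop', 'Rock']
index = {genre: i for i, genre in enumerate(vocab)}


def one_hot_strict(genre):
    """One-hot encode a genre; raises KeyError for genres absent from training."""
    vec = [0] * len(vocab)
    vec[index[genre]] = 1  # KeyError here if the genre was never seen in training
    return vec


print(one_hot_strict("Pop"))  # [0, 1, 0]

try:
    one_hot_strict("Reggae")  # "Reggae" appears only in the testing data
except KeyError as err:
    print("Unseen category:", err)
```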

Solutions to Incomplete Vocabulary Problem

The following are some of the solutions you can adopt to deal with the problem of incomplete vocabulary:

- Regular Updates: Regularly updating and retraining the model as new categories emerge can help ensure the model’s vocabulary remains comprehensive.

- Fallback Mechanisms: Implementing mechanisms to handle unknown categories, like treating them as a special “unknown” category or using content-based methods to infer their properties, can mitigate the impact of the problem.

- Hierarchical Categorization: If possible, categorize items into broader categories to minimize the impact of new, unseen subcategories. For example, “Reggae” could fall under a broader “Music” category, which the model is familiar with.
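The “unknown” fallback can be sketched by reserving one extra slot in the vocabulary for any category not seen in training. The `<UNK>` token name and the `build_vocab`/`encode` helpers are hypothetical choices for illustration, not a particular library's API.

```python
UNKNOWN = "<UNK>"  # hypothetical name for the reserved out-of-vocabulary slot


def build_vocab(categories):
    """Map each training category to a column index; index 0 is reserved for unknowns."""
    vocab = [UNKNOWN] + sorted(set(categories))
    return {category: i for i, category in enumerate(vocab)}


def encode(category, index):
    """One-hot encode, routing any unseen category to the <UNK> column instead of failing."""
    vec = [0] * len(index)
    vec[index.get(category, index[UNKNOWN])] = 1
    return vec


index = build_vocab(["Rock", "Pop", "Jazz"])
print(encode("Jazz", index))    # [0, 1, 0, 0]
print(encode("Reggae", index))  # [1, 0, 0, 0]
```

On its own, the reserved slot only keeps the pipeline from crashing. For the model to learn a sensible weight for it, one common option is to also map rare training categories to `<UNK>`, so that the slot actually appears in the training data.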

Model Size due to Cardinality Problem with Categorical Variables

When dealing with categorical variables in machine learning, the “Model Size due to Cardinality” problem stems from the number of unique values (or categories) a variable can take. Cardinality refers to the number of unique categories in a categorical variable; high cardinality means the variable can take on a very large number of unique values. Encoding such a variable, for example with one-hot encoding, can vastly increase the dataset’s dimensionality. The wider input in turn requires more model parameters, making the model bulky, potentially less interpretable, and more susceptible to overfitting.

Consider a feature “UserID” that holds a unique ID for each user. One-hot encoding such a feature produces as many columns as there are users. The following Python code shows that one-hot encoding 10,000 unique user IDs yields 10,000 columns. This explosion in the number of columns, caused by the high cardinality of the “UserID” feature, can hurt the model’s performance and efficiency.

```python
import pandas as pd

# Sample data with high cardinality: 10,000 unique user IDs
data = {"UserID": list(range(1, 10001))}
df = pd.DataFrame(data)

# One-hot encoding creates one column per unique UserID
one_hot = pd.get_dummies(df, columns=["UserID"])

print("Number of columns after one-hot encoding:", one_hot.shape[1])  # 10000
```
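The link between cardinality and model size can be made concrete with a quick parameter count. This sketch assumes the one-hot columns feed a single fully connected layer with 64 units (an arbitrary, hypothetical width); the same linear growth applies to, say, a linear model, which learns one weight per column.

```python
def dense_params(n_inputs, n_units):
    """Number of weights plus biases in one fully connected layer."""
    return n_inputs * n_units + n_units


hidden_units = 64  # hypothetical width of the first hidden layer

for n_users in (100, 10_000, 1_000_000):
    # With one-hot encoding, the input width equals the number of unique UserIDs
    params = dense_params(n_users, hidden_units)
    print(f"{n_users:>9,} users -> {params:>11,} parameters")
```

For the 10,000 users in the example above, that is 640,064 parameters in the first layer alone, and the count grows linearly as new users are added.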

Solutions to the High Cardinality Problem

The following are some of the solutions to deal with the high cardinality problem of categorical variables:

- Feature Engineering: Consider techniques like binning, where similar categories are grouped together, reducing the overall cardinality.

- Embeddings: For neural network-based models, embeddings can represent high-cardinality categorical variables efficiently. An embedding maps each category to a dense vector of fixed size, so downstream layers see a small, fixed number of inputs instead of one column per category. Note that the embedding table itself still stores one vector per category.

- Hashing: The hashing trick applies a hash function to each category and uses the result, modulo a fixed number of buckets, as its column index, which caps the dimensionality. Different categories can collide in the same bucket, so some information is lost, but it can be an effective technique for very high-cardinality features.
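The hashing trick can be sketched in plain Python as below. It uses MD5 from `hashlib` as the hash function because Python's built-in `hash()` for strings is randomized between processes, which would send the same category to different columns on different runs. The bucket count of 32 and the helper names are arbitrary, hypothetical choices for illustration; production libraries (for example, scikit-learn's `FeatureHasher`) implement the same idea with faster non-cryptographic hash functions.

```python
import hashlib


def hash_bucket(category, n_buckets):
    """Map any category to one of n_buckets column indices using a stable hash."""
    digest = hashlib.md5(str(category).encode("utf-8")).hexdigest()
    return int(digest, 16) % n_buckets


def hashed_one_hot(category, n_buckets=32):
    """One-hot vector over a fixed number of hashed columns."""
    vec = [0] * n_buckets
    vec[hash_bucket(category, n_buckets)] = 1
    return vec


# 10,000 user IDs now share 32 columns instead of needing 10,000
user_ids = range(1, 10001)
buckets = [hash_bucket(uid, 32) for uid in user_ids]

print("Columns after hashing:", len(hashed_one_hot(42)))  # 32
print("Distinct buckets used:", len(set(buckets)))
```

Because every value, including one never seen during training, hashes to some bucket, this encoder never fails on new categories; the model's learned weight for that bucket, however, reflects whichever training categories happened to collide there.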

Cold Start Problem of Categorical Variables

Remember starting a book series from a middle installment and feeling utterly lost? That’s akin to the cold start problem in machine learning. When a model encounters a new category for which it has no prior information, it has nothing to base its decision on. The cold start problem refers to a model’s inability to handle new data entities (like new hospitals or physicians) that it did not see during training. Let’s break down the problem using the scenario of new hospitals and physicians:

Assume a machine learning model has been trained to make predictions about hospital and physician performance, using historical data from existing hospitals and physicians. This model, based on its training data, has learned patterns and intricacies about the hospitals and physicians it knows about.

However, after the model is placed into production:

- New Hospitals: If a new hospital is built, this hospital will not have historical data comparable to the existing hospitals. The model, having never seen this hospital during training, might struggle to make accurate predictions about its performance.

- New Physicians: Similarly, new physicians hired in hospitals might not have a track record (in the context of the data the model was trained on). The model will not know how to predict their performance or behavior accurately.

The crux of the cold start problem here is the model’s lack of exposure to these new entities during training. When presented with new hospitals or physicians, the model might either fail to make predictions at all (if its categorical encoding rejects unseen values) or make highly uncertain or inaccurate predictions.

Solutions to the Cold Start Problem of Categorical Variables

The following are some of the solutions to deal with the cold start problem of categorical variables:

- Separate Serving Infrastructure: A separate infrastructure might be set up specifically to handle predictions for these new entities. This infrastructure could:

  - Use different models that are designed to handle new entities, possibly relying more on external information or metadata.

  - Allow for manual inputs or overrides. For instance, expert opinions on new hospitals or physicians could be factored in initially until sufficient data is available.

- Fallback Strategies: For new hospitals or physicians, the system could revert to simpler prediction models or heuristics. For example, it could use average performance metrics of similar hospitals or physicians until enough data is accumulated for the new entity.

- Retraining and Continuous Learning: Continually update and retrain the model with new data. As soon as a new hospital starts generating data or a new physician has some performance metrics, include that data in the training set and retrain the model. This makes the model more adaptive to changes.

- Explicit Feedback: Allow for a mechanism to gather explicit feedback or ratings about new hospitals or physicians. This can help in quickly accumulating some data about them.
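The fallback strategy can be sketched as follows. This is a minimal illustration, not a production design: the hospital names, the scores, the `region` attribute used to define “similar hospitals”, and the `prior_strength` parameter are all hypothetical. For hospitals with some history, the sketch blends the hospital's own average with its group average (a simple shrinkage estimate, swapped in here to model “until enough data is accumulated”), so the prediction moves gradually from the fallback toward the hospital's own data.

```python
from statistics import mean

# Hypothetical history: hospital -> (region, observed performance scores)
history = {
    "General North": ("north", [0.82, 0.79, 0.85]),
    "St. Mary": ("north", [0.74, 0.78]),
    "Lakeside": ("south", [0.66, 0.70, 0.68]),
}

# Pool scores by region to get group-level averages for the fallback
pooled = {}
for region, scores in history.values():
    pooled.setdefault(region, []).extend(scores)
region_means = {region: mean(scores) for region, scores in pooled.items()}
global_mean = mean(s for _, scores in history.values() for s in scores)


def predict(hospital, region, prior_strength=5):
    """Predict performance, falling back to group averages for unseen hospitals.

    prior_strength (hypothetical) controls how many observations a hospital
    needs before its own average outweighs the group average.
    """
    group_mean = region_means.get(region, global_mean)  # unknown region -> global average
    own_scores = history[hospital][1] if hospital in history else []
    n = len(own_scores)
    if n == 0:
        return group_mean  # cold start: no history for this hospital yet
    weight = n / (n + prior_strength)  # approaches 1 as data accumulates
    return weight * mean(own_scores) + (1 - weight) * group_mean


print(predict("New Clinic", "south"))     # new hospital, known region
print(predict("New Clinic", "east"))      # new hospital, unknown region
print(predict("General North", "north"))  # existing hospital, blended estimate
```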

Conclusion

In the intricate landscape of machine learning, categorical variables stand out as both invaluable and challenging. As we’ve unraveled, issues like incomplete vocabulary, model size due to high cardinality, and the notorious cold start problem can pose significant hurdles. But acknowledging these challenges is half the battle. By understanding their nuances, we can devise strategies to mitigate their impact, ensuring our models remain robust and efficient. As with many facets of machine learning, continuous learning and adaptability are key. So, as you navigate your data science journey, remember to approach categorical variables with both caution and curiosity.
