In this post, you will learn about some of the research work done on AI/machine learning model ethics and fairness (bias) at companies such as Google, IBM, Microsoft, and others, with brief descriptions and related links. This post will be updated from time to time to cover the latest projects and research in this area, so you may want to bookmark the page and check back for the latest details.

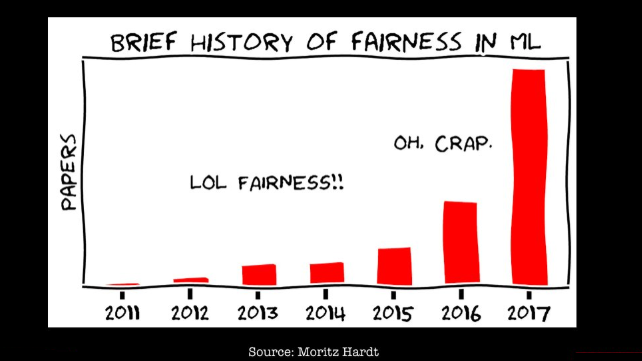

Before we go further, it is worth looking at the cartoon below, taken from the CS 294: Fairness in Machine Learning course taught at UC Berkeley, which captures how much research is happening in the field of machine learning model fairness.

IBM Research for ML Model Fairness

- AI Fairness 360 – AIF360: The AIF360 toolkit aims to help data scientists not only detect bias at different points in the machine learning pipeline (training data, classifier, and predictions) but also apply bias mitigation strategies to handle any bias they discover. See the AIF360 portal for details.

- Trusted AI Research: Lists research publications and related work on trusted AI.

- AI Fairness Tutorials: Presents tutorials based on the following use cases:

- Credit scoring

- Medical expenditure

- Gender classification of face images

- AI model fairness research papers on which the AIF360 toolkit is based.
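To give a feel for what "detecting" and "mitigating" bias means in practice, here is a minimal plain-Python sketch of two common group-fairness metrics, statistical parity difference and disparate impact, plus the reweighing pre-processing technique (Kamiran & Calders). AIF360 provides its own implementations of these ideas; this sketch does not use or reproduce the AIF360 API. The function names, the toy data, and the choice of group "a" as privileged are hypothetical, for illustration only.

```python
from collections import Counter
from typing import Sequence


def selection_rate(labels: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Fraction of favorable outcomes (label == 1) within one group."""
    outcomes = [y for y, g in zip(labels, groups) if g == group]
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(labels, groups, unprivileged, privileged) -> float:
    """P(y=1 | unprivileged) - P(y=1 | privileged); 0.0 means parity."""
    return (selection_rate(labels, groups, unprivileged)
            - selection_rate(labels, groups, privileged))


def disparate_impact(labels, groups, unprivileged, privileged) -> float:
    """P(y=1 | unprivileged) / P(y=1 | privileged); 1.0 means parity."""
    return (selection_rate(labels, groups, unprivileged)
            / selection_rate(labels, groups, privileged))


def reweighing_weights(labels, groups) -> dict:
    """Reweighing (Kamiran & Calders): w(g, y) = P(g) * P(y) / P(g, y).

    (group, label) cells that are under-represented relative to independence
    get weights > 1; over-represented cells get weights < 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in joint_counts.items()
    }


def weighted_selection_rate(labels, groups, weights, group) -> float:
    """Selection rate for one group after applying per-(group, label) weights."""
    pairs = [(y, weights[(g, y)]) for y, g in zip(labels, groups) if g == group]
    return sum(w for y, w in pairs if y == 1) / sum(w for _, w in pairs)


if __name__ == "__main__":
    # Hypothetical toy data: group "a" is treated as privileged, "b" as unprivileged.
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(statistical_parity_difference(labels, groups, "b", "a"))  # -0.5
    print(disparate_impact(labels, groups, "b", "a"))  # about 0.33
    w = reweighing_weights(labels, groups)
    # After reweighing, both groups have (approximately) the same selection rate, 0.5.
    print(weighted_selection_rate(labels, groups, w, "a"),
          weighted_selection_rate(labels, groups, w, "b"))
```

A disparate impact well below 1.0 (here about 0.33) signals that the unprivileged group receives the favorable outcome far less often. Reweighing leaves the data itself untouched and instead assigns each (group, label) cell the weight that would make group membership and label independent, so both groups end up with the same weighted selection rate.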

Google Research/Courses on ML Model Fairness

Here are some links related to machine learning model fairness.

- Machine learning fairness

- Google Machine Learning Crash Course – Fairness module: The module covers the following topics:

- Types of bias: The module discusses the following types of bias:

- Selection bias (coverage bias, non-response bias, sampling bias)

- Group attribution bias (in-group bias, out-group homogeneity bias)

- Implicit bias (confirmation bias, experimenter’s bias)

- Identifying bias: Topics discussed for identifying bias include the following:

- Missing feature values

- Unexpected feature values

- Data skew

- Evaluating bias: A confusion matrix, along with metrics derived from it such as precision and recall (sensitivity), can be computed separately for each group to evaluate bias.


- Interactive visualization: Attacking discrimination with smarter machine learning
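The "Evaluating bias" point above can be made concrete by building a confusion matrix separately for each group and comparing the metrics derived from it. The sketch below is plain Python, not code from the crash course; the `ConfusionMatrix` class, the `confusion_by_group` helper, the toy labels, and the group names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class ConfusionMatrix:
    tp: int = 0  # predicted 1, actually 1
    fp: int = 0  # predicted 1, actually 0
    fn: int = 0  # predicted 0, actually 1
    tn: int = 0  # predicted 0, actually 0

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total if total else 0.0

    @property
    def precision(self) -> float:
        # Of the examples predicted positive, the fraction that really are positive.
        predicted_pos = self.tp + self.fp
        return self.tp / predicted_pos if predicted_pos else 0.0

    @property
    def recall(self) -> float:
        # Sensitivity: of the truly positive examples, the fraction the model caught.
        actual_pos = self.tp + self.fn
        return self.tp / actual_pos if actual_pos else 0.0


def confusion_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                       groups: Sequence[str]) -> Dict[str, ConfusionMatrix]:
    """Build one confusion matrix per group value (binary labels 0/1)."""
    matrices: Dict[str, ConfusionMatrix] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        cm = matrices.setdefault(g, ConfusionMatrix())
        if p == 1 and t == 1:
            cm.tp += 1
        elif p == 1:
            cm.fp += 1
        elif t == 1:
            cm.fn += 1
        else:
            cm.tn += 1
    return matrices


if __name__ == "__main__":
    # Hypothetical predictions for two groups, "a" and "b", of 8 examples each.
    y_true = [1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 1, 1, 0, 0, 0] + [1, 1, 0, 0, 0, 0, 0, 0]
    groups = ["a"] * 8 + ["b"] * 8
    for g, cm in sorted(confusion_by_group(y_true, y_pred, groups).items()):
        print(f"{g}: accuracy={cm.accuracy:.3f} "
              f"precision={cm.precision:.3f} recall={cm.recall:.3f}")
    # a: accuracy=0.875 precision=0.800 recall=1.000
    # b: accuracy=0.875 precision=1.000 recall=0.667
```

Both groups have the same accuracy, yet the model misses a third of group b's true positives (recall 0.667 vs 1.000). A single aggregate accuracy figure would hide that gap, which is why the metrics are compared per group.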

Microsoft Research on Model FATE

- FATE: Covers research initiatives in the following areas:

- Fairness

- Accountability

- Transparency

- Ethics

- Kate Crawford – The Rise of Autonomous Experimentation: Technical, Social, and Ethical Implications of AI. Details and several videos can be found on Kate Crawford's website.

- Hanna Wallach – Work on FATE

Summary

In this post, you learned about courses and research initiatives in machine learning model fairness at companies such as Google, IBM, and Microsoft.
