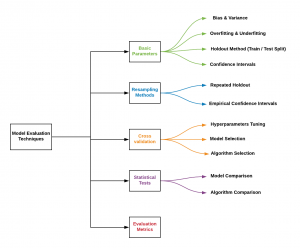

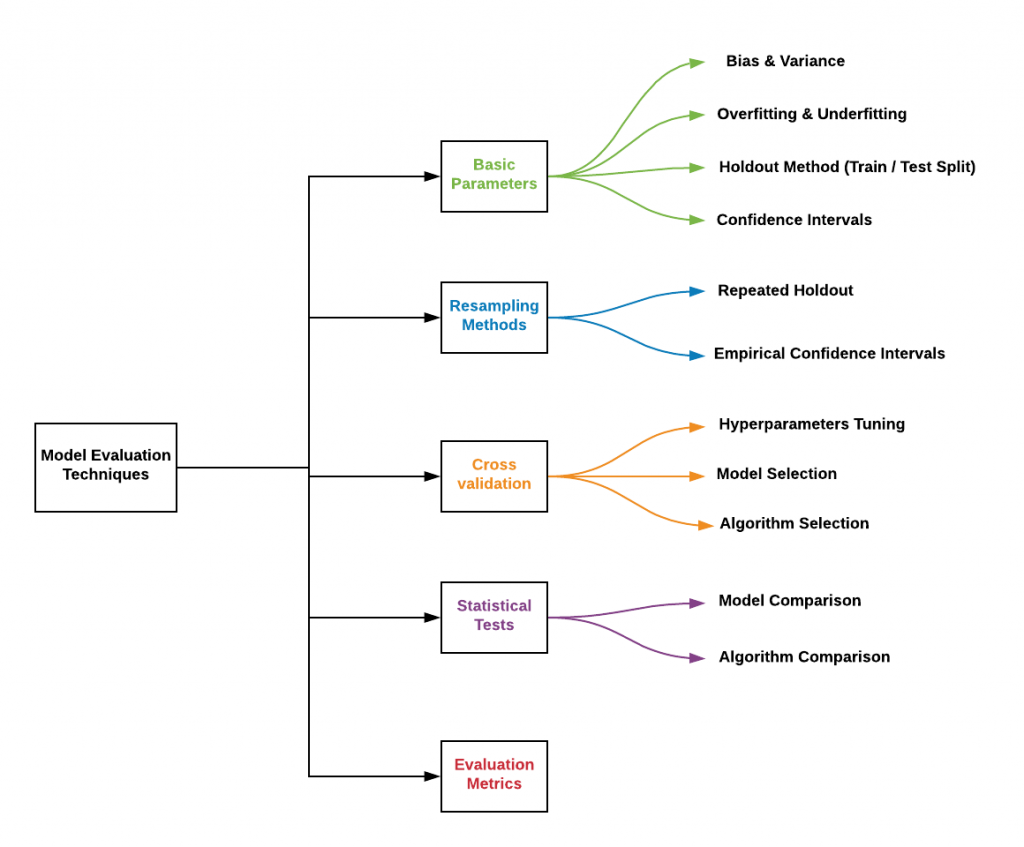

In this post, you will get access to a self-explanatory infographic (diagram) that represents the different aspects and techniques to consider when evaluating machine learning models. Here is the infographic:

In the above diagram, you will notice the following aspects that need to be considered once the models are trained. Evaluating them this way is what lets you select one model out of the many candidate models that get trained.

- Basic parameters: consider the following when evaluating a model:
  - Bias & variance
  - Overfitting & underfitting
  - Holdout method
  - Confidence intervals

- Resampling methods: adopt the following techniques for evaluating models:
  - Repeated holdout
  - Empirical confidence intervals

- Cross-validation: perform cross-validation to achieve the following:
  - Hyperparameter tuning
  - Model selection
  - Algorithm selection

- Statistical tests: perform statistical tests for the following:
  - Model comparison
  - Algorithm comparison

- Evaluation metrics

The image is adapted from this page.
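
To make the *holdout method* and *confidence intervals* items from the list above more concrete, here is a minimal pure-Python sketch. It assumes a toy 1-D binary dataset and a deliberately simple threshold classifier; `make_data`, `holdout_split`, `fit_threshold`, and `normal_ci` are hypothetical helper names, and the interval uses the normal approximation to the binomial, which is only reasonable when the test set is not tiny.

```python
import math
import random


def make_data(n, seed=0):
    """Hypothetical toy dataset: class 1 centred at x = +1, class 0 at x = -1."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y = rng.randint(0, 1)
        rows.append((rng.gauss(1.0 if y else -1.0, 1.0), y))
    return rows


def holdout_split(rows, test_frac=0.3, seed=0):
    """Shuffle once, then reserve test_frac of the rows as an untouched test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def fit_threshold(train):
    """Hypothetical 'model': a threshold halfway between the two class means."""
    xs0 = [x for x, y in train if y == 0]
    xs1 = [x for x, y in train if y == 1]
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2


def accuracy(threshold, rows):
    """Fraction of rows where the prediction (x > threshold) matches the label."""
    return sum((x > threshold) == bool(y) for x, y in rows) / len(rows)


def normal_ci(acc, n, z=1.96):
    """Normal-approximation ~95% CI for an accuracy measured on n test examples."""
    half_width = z * math.sqrt(acc * (1 - acc) / n)
    return max(0.0, acc - half_width), min(1.0, acc + half_width)


data = make_data(500)
train_rows, test_rows = holdout_split(data)
threshold = fit_threshold(train_rows)
test_acc = accuracy(threshold, test_rows)
ci_low, ci_high = normal_ci(test_acc, len(test_rows))
print(f"holdout accuracy = {test_acc:.3f}, 95% CI = [{ci_low:.3f}, {ci_high:.3f}]")
```

This interval only reflects sampling noise in one particular test split; it does not show how much the score would move under a different split, which is the gap the resampling methods try to close.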
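
The *resampling methods* can be sketched in the same kind of toy setting (the data generator and threshold model are hypothetical and are repeated here so the block runs on its own). *Repeated holdout* re-runs the split with different random shuffles and looks at the spread of scores. For the *empirical confidence interval*, this sketch assumes an out-of-bag bootstrap: train on a sample drawn with replacement, score on the rows that were not drawn, and read the interval off the percentiles of those scores. The 20 repeats, 200 bootstrap rounds, and simple percentile indexing are illustrative choices, not recommendations.

```python
import random
import statistics


def make_data(n, seed=0):
    """Hypothetical toy dataset: class 1 centred at x = +1, class 0 at x = -1."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y = rng.randint(0, 1)
        rows.append((rng.gauss(1.0 if y else -1.0, 1.0), y))
    return rows


def fit_threshold(train):
    """Hypothetical 'model': a threshold halfway between the two class means."""
    xs0 = [x for x, y in train if y == 0]
    xs1 = [x for x, y in train if y == 1]
    return (statistics.mean(xs0) + statistics.mean(xs1)) / 2


def accuracy(threshold, rows):
    """Fraction of rows where the prediction (x > threshold) matches the label."""
    return sum((x > threshold) == bool(y) for x, y in rows) / len(rows)


def repeated_holdout(rows, repeats=20, test_frac=0.3):
    """Repeat the holdout split with a different shuffle each time; return all scores."""
    scores = []
    for seed in range(repeats):
        shuffled = rows[:]
        random.Random(seed).shuffle(shuffled)
        n_test = int(len(shuffled) * test_frac)
        test_part, train_part = shuffled[:n_test], shuffled[n_test:]
        scores.append(accuracy(fit_threshold(train_part), test_part))
    return scores


def bootstrap_ci(rows, rounds=200, alpha=0.05, seed=0):
    """Out-of-bag bootstrap percentile interval for accuracy."""
    rng = random.Random(seed)
    n = len(rows)
    scores = []
    for _ in range(rounds):
        drawn = [rng.randrange(n) for _ in range(n)]  # indices sampled with replacement
        in_bag = set(drawn)
        out_of_bag = [rows[i] for i in range(n) if i not in in_bag]
        if not out_of_bag:
            continue  # practically never happens for realistic n
        model = fit_threshold([rows[i] for i in drawn])
        scores.append(accuracy(model, out_of_bag))
    scores.sort()
    low = scores[int(alpha / 2 * len(scores))]
    high = scores[int((1 - alpha / 2) * len(scores)) - 1]
    return low, high


data = make_data(500)
scores = repeated_holdout(data)
print(f"repeated holdout: mean = {statistics.mean(scores):.3f}, sd = {statistics.stdev(scores):.3f}")
boot_low, boot_high = bootstrap_ci(data)
print(f"out-of-bag bootstrap 95% interval: [{boot_low:.3f}, {boot_high:.3f}]")
```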
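
For *cross-validation* used in hyperparameter tuning and model selection, a minimal k-fold sketch might look like the following. The model is a hypothetical 1-D k-nearest-neighbours classifier whose number of neighbours is the hyperparameter being tuned; the toy data, the candidate values, and the 5-fold setting are assumptions chosen for illustration.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy dataset: class 1 centred at x = +1, class 0 at x = -1."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y = rng.randint(0, 1)
        rows.append((rng.gauss(1.0 if y else -1.0, 1.0), y))
    return rows


def knn_predict(train, x, n_neighbors):
    """Hypothetical model: majority vote among the n_neighbors closest training points."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:n_neighbors]
    votes_for_1 = sum(y for _, y in nearest)
    return 1 if 2 * votes_for_1 > n_neighbors else 0


def k_fold_indices(n, n_folds=5, seed=0):
    """Shuffle row indices once and deal them into n_folds disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::n_folds] for i in range(n_folds)]


def cv_accuracy(rows, n_neighbors, folds):
    """Mean validation accuracy: each fold is held out once, the rest is training data."""
    fold_scores = []
    for fold in folds:
        held_out = set(fold)
        train = [rows[i] for i in range(len(rows)) if i not in held_out]
        val = [rows[i] for i in fold]
        correct = sum(knn_predict(train, x, n_neighbors) == y for x, y in val)
        fold_scores.append(correct / len(val))
    return sum(fold_scores) / len(fold_scores)


data = make_data(300)
folds = k_fold_indices(len(data), n_folds=5)
# Hyperparameter tuning: score every candidate setting on the same folds.
cv_scores = {k: cv_accuracy(data, k, folds) for k in (1, 5, 15, 31)}
best_k = max(cv_scores, key=cv_scores.get)
print(cv_scores, "-> selected n_neighbors =", best_k)
```

In a fuller workflow you would keep a separate test set outside this loop, refit the selected setting on all training data, and score it on that test set once, because the best cross-validation score tends to be an optimistic estimate of performance.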
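
For the *statistical tests* used in model comparison, one common choice (an assumption here, since the infographic does not name a specific test) is McNemar's test on two models' predictions over the same test set. The sketch below uses the chi-square statistic with continuity correction; the short label and prediction lists are made-up inputs arranged so that only model A is right on 10 examples and only model B is right on 2.

```python
import math


def mcnemar_test(y_true, pred_a, pred_b):
    """McNemar's test for two classifiers scored on the same examples.

    Returns (chi2, p_value) using the continuity-corrected statistic
    (|b - c| - 1)^2 / (b + c), where b counts examples only model A gets
    right and c counts examples only model B gets right.
    """
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    if b + c == 0:
        return 0.0, 1.0  # the models never disagree on correctness
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Made-up example: 10 rows only A gets right, 2 only B gets right, 8 both get right.
y_true = [1] * 20
pred_a = [1] * 10 + [0] * 2 + [1] * 8
pred_b = [0] * 10 + [1] * 2 + [1] * 8
chi2, p_value = mcnemar_test(y_true, pred_a, pred_b)
print(f"chi2 = {chi2:.3f}, p = {p_value:.4f}")
```

McNemar's test compares two already-trained models on one test set; for comparing learning *algorithms*, procedures that also resample the training data (for example, the 5x2cv paired t-test) are often suggested instead.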
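
The *evaluation metrics* item covers the numbers being compared in the first place. As a hedged example for binary classification, the sketch below derives accuracy, precision, recall, and F1 from confusion-matrix counts; `binary_metrics` is a hypothetical helper, the label lists are made up, and which metric matters most depends on the problem.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 computed from confusion-matrix counts."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```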

Author: Ajitesh Kumar

