In this post, you will learn how to create an ethical AI framework that could be used in your organization. If you are looking for an Ethical AI RAG matrix created in Excel, please drop me a message.

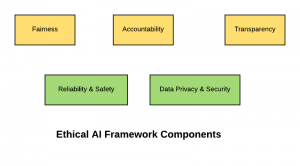

The following are the key aspects of ethical AI that should be considered when creating the framework:

- Fairness

- Accountability

- Transparency

- Reliability & Safety

- Data privacy and security

Fairness

AI/ML-powered solutions should be designed, developed, and used with respect for fundamental human rights and in accordance with the fairness principle. Model design considerations should include not only the impact on individuals but also the collective impact on groups and on society at large.

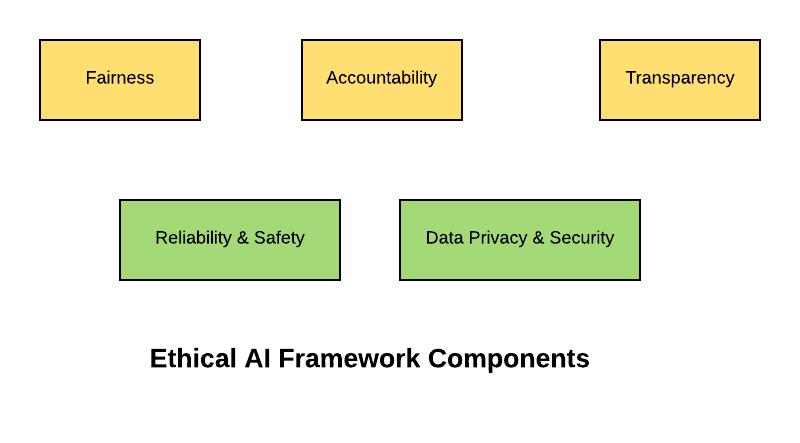

The following are some guidelines for creating practices and processes that ensure the fairness of AI-powered solutions:

- Quality control guidelines for bias detection: Guidelines should be published for performing quality control checks that detect both intentional and unintentional (implicit human) biases. These checks could use metrics related to model predictions (such as model sensitivity, statistical parity, disparate impact ratio, and equal opportunity tests) as well as fairness tests related to features and other aspects of the training process.

- Bias mitigation strategies in the model training process: Guidelines on adopting different bias mitigation strategies during model training should be published for data scientists. These could cover strategies for handling bias during the data processing stage as well as the choice of appropriate algorithms.

- Fairness metrics for bias detection: Different types of fairness metrics should be defined and published to the data science team so that they can test the models for fairness. The idea is to test early and test often to avoid bias-related issues. The following are some of the metrics:

  - Relative feature significance

  - Statistical parity

  - Sensitivity tests (equal opportunity tests)

  - Disparate impact ratio

- Regular (manual) review sessions for detecting unintentional biases: Guidelines should be published for regular team reviews aimed at identifying biases, both intentional and unintentional. Reviews should cover the data and features during the model training process as well as the results and predictions, so that bias-related issues can be addressed quickly. The idea is to ensure diversity and inclusiveness by involving team members with diverse experience, perspectives (cultural and technical), and domain expertise (primarily functional) in reviewing the model to minimize bias.

- Training & awareness sessions for stakeholders: Training on ML model fairness and related aspects should be provided to different stakeholders such as product managers/business analysts, data scientists, ML researchers, and executive management. One or more awareness sessions should also be conducted for partners and end customers to inform them about user-identified biases and related issues.
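
Three of the prediction-based metrics listed above (statistical parity, disparate impact ratio, and the equal opportunity test) can be computed directly from a binary classifier's predictions. The following is a minimal Python sketch, assuming binary labels where 1 is the favourable outcome and a single protected attribute split into one privileged group and everyone else. The function name `fairness_metrics` and the toy data are illustrative only; libraries such as IBM's AI Fairness 360 and Fairlearn provide maintained implementations of these and other metrics.

```python
from typing import Dict, Hashable, Sequence


def _rate(values: Sequence[int]) -> float:
    """Fraction of 1s in a sequence of binary values (NaN if the sequence is empty)."""
    return sum(values) / len(values) if values else float("nan")


def fairness_metrics(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    group: Sequence[Hashable],
    privileged: Hashable,
) -> Dict[str, float]:
    """Compare the unprivileged group against the privileged group (hypothetical helper)."""
    rows = list(zip(y_true, y_pred, group))
    priv = [(t, p) for t, p, g in rows if g == privileged]
    unpriv = [(t, p) for t, p, g in rows if g != privileged]

    # Selection rate: P(prediction = 1 | group)
    sr_priv = _rate([p for _, p in priv])
    sr_unpriv = _rate([p for _, p in unpriv])

    # True positive rate: P(prediction = 1 | label = 1, group), used by the equal opportunity test
    tpr_priv = _rate([p for t, p in priv if t == 1])
    tpr_unpriv = _rate([p for t, p in unpriv if t == 1])

    return {
        # 0.0 means both groups receive favourable predictions at the same rate
        "statistical_parity_difference": sr_unpriv - sr_priv,
        # 1.0 means parity; values below 0.8 are often flagged (the "four-fifths rule")
        "disparate_impact_ratio": sr_unpriv / sr_priv if sr_priv > 0 else float("nan"),
        # 0.0 means truly positive members of both groups are equally likely to be selected
        "equal_opportunity_difference": tpr_unpriv - tpr_priv,
    }


if __name__ == "__main__":
    # Toy data: group "A" is treated as privileged purely for illustration
    y_true = [1, 0, 1, 1, 0, 1, 0, 1]
    y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
    group = ["A", "A", "A", "A", "B", "B", "B", "B"]
    metrics = fairness_metrics(y_true, y_pred, group, privileged="A")
    print({name: round(value, 3) for name, value in metrics.items()})
    # → {'statistical_parity_difference': -0.5, 'disparate_impact_ratio': 0.333, 'equal_opportunity_difference': -0.5}
```

A negative difference (or a ratio below 1) indicates that the unprivileged group receives favourable predictions less often; which thresholds should trigger a review is a policy decision that the published guidelines would need to define.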

Accountability

The primary goal is to maintain continued attention to, and vigilance over, the potential effects and consequences of artificial intelligence systems.

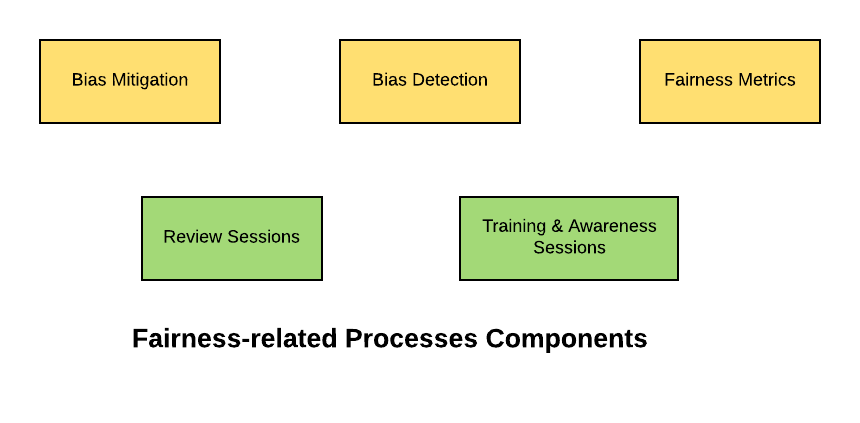

The following are some guidelines for creating processes to ensure the accountability of AI-powered solutions:

- Org-wide AI Policy Document: An AI policy document should be created based on the national and international laws, regulations, and guidelines within which AI systems may have to operate. Stakeholders such as product managers and data scientists should be regularly mentored to adhere to these AI policies.

- AI Project Implementation Lifecycle Standard Guidelines: A standard guidelines document should be created for managing the data science project lifecycle. It should include processes, diagrams, and templates that could be used during project implementation. The following are some sample templates:

  - Product requirement specifications (PRS)

  - Technical design specifications (TDS)

  - Performance metrics reporting

  - Quality assurance (QA) reporting

- Individual Project Artifacts Management: Detailed records of the design process and decision making (documentation including product requirement specifications, technical design specifications, etc.) should be maintained and updated at regular intervals as appropriate. A record-keeping strategy should be defined for the design and development process to encourage best practices and iteration.

- Document repository: A standard document repository should be maintained so that policies, standard guidelines, and project artifacts are easily accessible across the organization.

- Guidelines on AI decision-making process: It must be carefully determined and documented how the AI system is going to be embedded in a human decision-making process. Will it make decisions on its own, or will it be hybrid, e.g., making recommendations for humans who then make the decision? The chosen approach must be documented along with the reasoning behind it.

- Regular awareness sessions: All stakeholders need to understand how AI systems work, even if they are not personally developing and monitoring the underlying algorithms. Apart from product management and the data science team, this includes stakeholders such as customers, partners, executive management, and project sponsors.

- Guidelines on validation & verification of ethical issues: Guidelines should be set for stakeholders to consult domain experts, sociologists, linguists, behaviorists, and other professionals to understand ethical issues in a holistic context, and to document the outcome for record-keeping purposes.

- Trackable & Measurable: All documents and artifacts should be versioned so that changes to them can be tracked as and when required.
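
Version control systems such as Git already provide this kind of tracking for text-based artifacts. To illustrate the underlying idea, the following minimal Python sketch records a new version of an artifact only when its content changes, using a SHA-256 fingerprint to detect changes. `ArtifactRegistry` and `ArtifactVersion` are hypothetical names, and the in-memory storage is an assumption made to keep the example self-contained.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ArtifactVersion:
    name: str         # e.g. "PRS", "TDS", "QA report"
    version: int      # increases by one for each changed revision of the artifact
    sha256: str       # fingerprint of the content, so any change is detectable
    author: str
    recorded_at: str  # UTC timestamp in ISO 8601 format


@dataclass
class ArtifactRegistry:
    """Hypothetical in-memory registry; a real setup would typically use Git or a document management system."""
    history: dict = field(default_factory=dict)

    def record(self, name: str, content: bytes, author: str) -> ArtifactVersion:
        digest = hashlib.sha256(content).hexdigest()
        versions = self.history.setdefault(name, [])
        # Do not create a new version when the content is unchanged
        if versions and versions[-1].sha256 == digest:
            return versions[-1]
        entry = ArtifactVersion(
            name=name,
            version=len(versions) + 1,
            sha256=digest,
            author=author,
            recorded_at=datetime.now(timezone.utc).isoformat(),
        )
        versions.append(entry)
        return entry

    def latest(self, name: str) -> ArtifactVersion:
        return self.history[name][-1]


if __name__ == "__main__":
    registry = ArtifactRegistry()
    registry.record("PRS", b"Initial requirements", author="product manager")
    registry.record("PRS", b"Initial requirements", author="product manager")  # unchanged, no new version
    latest = registry.record("PRS", b"Requirements with fairness criteria", author="data scientist")
    print(latest.name, latest.version, latest.author)  # → PRS 2 data scientist
```

Storing the author and timestamp alongside each fingerprint is what makes the history auditable: a reviewer can see who changed an artifact and when, and verify that a shared copy matches a recorded version.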

Transparency

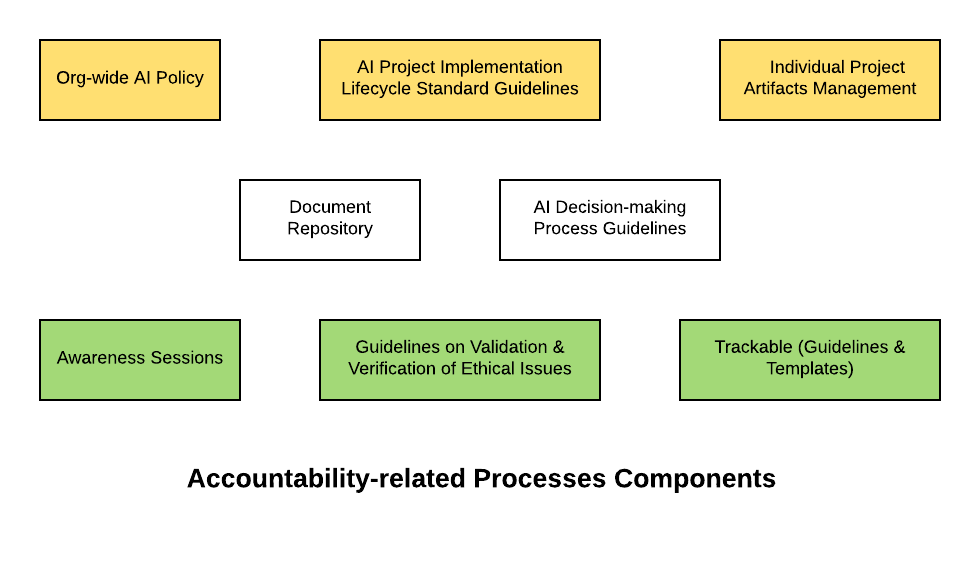

Transparency means that stakeholders should be able to ask and understand how an AI system came to a given conclusion or recommendation. Organizations should be able to explain aspects related to the data, training process, algorithms, and predictions that went into providing the recommendations or making the decisions. The primary goal is to improve the transparency and intelligibility of AI systems in a consistent and sustained manner.

The following are some guidelines for creating processes to ensure transparency around AI-powered solutions:

- Explainable & Reviewable/Auditable Predictions (Appropriate Product Solution Design): Individual predictions made by ML models should be explainable and reviewable or auditable by customers, partners, auditors, and other stakeholders. Stakeholders could ask for the contribution of each feature to a given prediction; the goal is to understand the decision-making mechanism behind every prediction. To achieve this, the recommendation is to use inherently explainable algorithms, for which linear models, generalized linear models such as logistic regression, or decision trees could be the best choice. Product managers should design the specifications with this requirement in mind. Thus, for every prediction, there should be a way for end users to get additional details such as how the decision/prediction was made and the contribution of each feature.

- Auditable decision-making process (Document Templates): Stakeholders could ask to review the ML model training and testing process and the details of the algorithms used. Organizations should be able to have these processes reviewed by sharing the appropriate details, which may require document templates created for this purpose. The data scientist (architect or lead) may be responsible for owning this template and sharing the details with stakeholders as and when asked.

- Auditable data coverage (Document Templates): Stakeholders could ask to review the data used for training the model. Organizations should be able to share details about the data in an agreed format without exposing the data itself, and a well-defined template would help achieve this. Data scientists (architect or lead) and product managers/business analysts may jointly own this template and share the details with stakeholders as and when asked.
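
For a logistic regression model, per-prediction feature contributions can be read directly from the model: each feature adds its coefficient times its value to the log-odds. The following minimal Python sketch assumes the coefficients and intercept have already been learned (for example, from the `coef_` and `intercept_` attributes of a fitted scikit-learn `LogisticRegression`) and that features are standardized, since a linear contribution is measured relative to a feature value of zero. The function `explain_prediction`, the feature names, and the numbers are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def explain_prediction(
    coefficients: Dict[str, float],
    intercept: float,
    features: Dict[str, float],
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the predicted probability and each feature's contribution to the log-odds."""
    # Contribution of a feature = learned weight * (scaled) feature value
    contributions = {name: weight * features[name] for name, weight in coefficients.items()}
    log_odds = intercept + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) function
    # Rank features by how strongly they pushed the prediction up or down
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


if __name__ == "__main__":
    # Hypothetical credit-approval model with made-up weights, for illustration only
    coefficients = {"income": 0.5, "debt_ratio": -1.25, "years_employed": 0.25}
    applicant = {"income": 1.5, "debt_ratio": 0.5, "years_employed": 2.0}
    probability, ranked = explain_prediction(coefficients, intercept=-0.25, features=applicant)
    print(f"approval probability: {probability:.3f}")
    for name, contribution in ranked:
        print(f"{name:>15}: {contribution:+.3f}")
```

Showing the ranked contributions next to each prediction is one way to give end users the "how was this decided" details described above. For models that are not inherently interpretable, post-hoc attribution methods such as SHAP are commonly used for the same purpose.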

I will be covering the details related to other aspects of ethical AI in part 2 of this post.

Summary

In this post, you learned about guidelines for creating an ethical AI framework for your organization. The key aspects to be covered as part of the framework are fairness, accountability, transparency, reliability & safety, and data privacy & security. This post only covers FAT (fairness, accountability, and transparency) and will be updated with more details over time. In part 2, I will cover the two remaining aspects of ethical AI. Please feel free to share your thoughts or comments.
