Graph neural networks (GNNs) are a relatively new area in the field of deep learning. They draw on graph theory and machine learning, where a graph is a mathematical structure that models pairwise relations between objects. Because GNNs learn from the structure of a graph and not only from the features of individual items, they are a good fit for many real-world problems such as social network analysis or financial risk prediction. This post covers some of the key concepts behind graph neural networks with the help of multiple examples.

What are graph neural networks (GNNs)?

Graphs are data structures used to model complex real-life problems. Examples include learning molecular fingerprints, modeling physical systems, controlling traffic networks, and recommending friends in social media networks. However, these tasks involve non-Euclidean graph data that contains rich relational information between elements and cannot be handled well by traditional deep learning models such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs). This is where graph neural networks fit in.

A graph neural network is a type of deep learning model that operates on graph-structured data: the items to be analyzed are the nodes, and the connections between them are the edges. GNNs conceptually build on graph theory and deep learning. They are a family of models that combine the feature information of the nodes with the graph structure to learn better representations, via feature propagation and aggregation: each node repeatedly collects features from its neighbors and merges them into its own representation. GNNs are often used in representation learning tasks, where the graph carries domain knowledge that is not explicit in its structure but can be learned from examples. In simpler words, graph neural networks are a way to get more out of the data with fewer labels. It is important to note that while graph neural networks may be new to the industry, they are not a new concept. The graph neural network model was introduced by Gori et al. (2005) and developed further by Scarselli et al. (2009).
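To make the idea of nodes, edges, and feature aggregation concrete, here is a minimal NumPy sketch. The four-node graph, the feature values, and the helper name `propagate_mean` are made up for illustration, and averaging over neighbors is only one of several possible aggregation rules.

```python
import numpy as np

# Toy undirected graph with 4 nodes (edges chosen for illustration only).
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
num_nodes = 4

# Adjacency matrix: A[i, j] = 1 when nodes i and j are connected.
A = np.zeros((num_nodes, num_nodes))
for i, j in edges:
    A[i, j] = 1.0
    A[j, i] = 1.0

# One feature vector per node (2 arbitrary features each).
X = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [0.0, 0.0]])

def propagate_mean(A, X):
    """Replace each node's features with the mean over itself and its neighbors."""
    A_hat = A + np.eye(A.shape[0])             # self-loops keep a node's own features
    degree = A_hat.sum(axis=1, keepdims=True)  # number of nodes averaged per row
    return (A_hat @ X) / degree

H = propagate_mean(A, X)
print(H)
```

After one step, node 3 (which started with all-zero features) has picked up information from its only neighbor, node 2. A real GNN layer would also multiply by a learned weight matrix and apply a nonlinearity.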

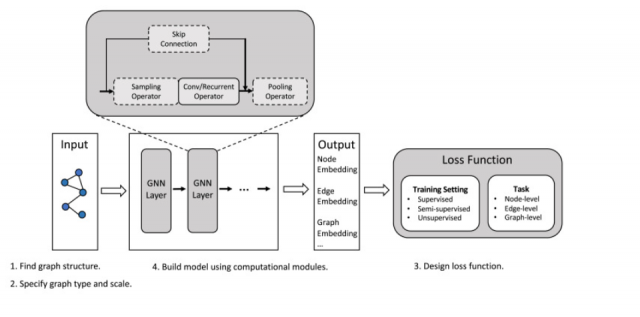

Here is how a graph neural network looks like:

Graph Neural Networks have a number of advantages over regular neural networks:

- GNNs can be trained on any dataset that has both input data and pairwise relationships between items. An important advantage over regular deep learning models is that GNNs capture the graph structure of the data, which is often very rich.

- GNNs can be used to classify data or make predictions. This includes regression, where the output is a real-valued number instead of a discrete value (like cat/dog); node regression and graph regression are both standard GNN tasks.

- GNNs can be parameter-efficient: the same weights are reused at every node, so the number of parameters does not grow with the size of the graph.

- GNNs can often be trained with relatively few labels, because the graph structure lets information from labeled nodes spread to unlabeled ones.

What are different types of graph neural networks?

There are two main types of graph neural network architectures: feed-forward graph neural networks and graph recurrent networks.

- In a feed-forward graph neural network, input data is propagated through the graph in a fixed number of layers, and each layer applies a transfer function to the values passed along the edges. The steps are as follows:

  - Apply feed-forward propagation on the graph nodes that receive input.

  - Apply the graph transfer function along the edges to propagate the output of each node to its neighbors.

  - Backpropagate through the weights based on the output gradients.

- Graph recurrent networks also operate on a graph structure. However, unlike feed-forward networks, where data is propagated in one direction, they feed each node's state back into the update, so data flows in both directions along the edges. The steps are as follows:

  - Apply standard graph propagation on the graph nodes that receive input.

  - Apply the graph transfer function along the edges to propagate the output of each node back into the graph nodes, so that each node's new state depends on its previous state and on the states of its neighbors.

  - Backpropagate through the weights based on the output gradients. This is in contrast to feed-forward graph neural networks, where a node's earlier state is not fed back into its current state.
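The two architectures above can be contrasted in a short NumPy sketch of the forward pass only (no training). It rests on several assumptions that are not in the text: mean aggregation with self-loops, a `tanh` transfer function, randomly drawn weights, and a recurrent update that is iterated until the node states stop changing. The function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def row_normalize(A):
    """Add self-loops and scale each row to sum to 1 (mean aggregation)."""
    A_hat = A + np.eye(A.shape[0])
    return A_hat / A_hat.sum(axis=1, keepdims=True)

def feed_forward_gnn(A, X, weights):
    """Fixed stack of layers; each layer has its own weight matrix."""
    P = row_normalize(A)
    H = X
    for W in weights:
        H = np.tanh(P @ H @ W)
    return H

def recurrent_gnn(A, X, W_state, W_in, max_steps=100, tol=1e-6):
    """Apply one shared update repeatedly until node states stop changing."""
    P = row_normalize(A)
    H = np.zeros((X.shape[0], W_state.shape[0]))
    for step in range(1, max_steps + 1):
        # New state depends on the neighbors' previous states and the input.
        H_next = np.tanh(P @ H @ W_state + X @ W_in)
        if np.max(np.abs(H_next - H)) < tol:
            return H_next, step
        H = H_next
    return H, max_steps

# Path graph 0-1-2-3 with 3 random input features per node.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.standard_normal((4, 3))

# Feed-forward: two layers, two different weight matrices.
layer_weights = [rng.standard_normal((3, 4)), rng.standard_normal((4, 2))]
H_ff = feed_forward_gnn(A, X, layer_weights)

# Recurrent: one shared state matrix, scaled so its largest absolute
# row sum is 0.5, which makes the repeated update settle to a fixed point.
W_state = rng.standard_normal((4, 4))
W_state *= 0.5 / np.abs(W_state).sum(axis=1).max()
W_in = rng.standard_normal((3, 4))
H_rec, steps = recurrent_gnn(A, X, W_state, W_in)

print(H_ff.shape, H_rec.shape, steps)
```

The design difference is in the loop: the feed-forward version walks through a list of different weight matrices once, while the recurrent version reuses the same matrices and lets each state feed the next.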

Graphs themselves come in several forms: they may contain self-loops, nodes may have multiple incoming and outgoing connections, and edges may carry different weights.

There are several variants of the graph neural network, including the following:

- Graph convolutional networks

- Graph recurrent networks

- Graph attention networks

- Graph residual networks
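As an illustration of the first variant in the list, here is a sketch of a single graph convolutional layer using the commonly cited propagation rule with symmetric normalization, H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W). The function name `gcn_layer`, the three-node path graph, and the identity features and weights are illustrative choices.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One graph convolution: normalize, aggregate neighbors, transform, ReLU."""
    A_tilde = A + np.eye(A.shape[0])         # adjacency with self-loops
    d = A_tilde.sum(axis=1)                  # degree of each node (incl. self-loop)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    A_norm = D_inv_sqrt @ A_tilde @ D_inv_sqrt
    return np.maximum(A_norm @ H @ W, 0.0)   # ReLU nonlinearity

# Path graph 0-1-2. Identity features and weights make the output
# equal to the normalized adjacency matrix, which is easy to inspect.
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
H0 = np.eye(3)
W = np.eye(3)

H1 = gcn_layer(A, H0, W)
print(np.round(H1, 3))
```

The symmetric normalization keeps high-degree nodes from dominating the sum. The other variants in the list replace this fixed weighting with, for example, learned attention scores or skip connections.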

Here are some great research papers on graph neural networks (GNNs) – https://github.com/thunlp/GNNPapers

How are graph neural networks (GNNs) different from standard artificial neural networks (ANNs)?

Graph Neural Networks (GNNs) are similar to standard neural networks in that data flows through layers of neurons in an iterative fashion and the weights are adjusted based on the training examples.

What is different in GNNs is the graph transfer function. Here the computation does not only run between neurons: it also follows the edges of the graph, so each node is updated using the values of its neighbors. This is very helpful when there is overlapping data or missing data points, since missing values can be informed by other connected nodes through the graph transfer function, and the network learns to use this graph structure.

Graph Neural Networks also differ in how they execute: a GNN layer updates every node from its neighbors in the graph, whereas a standard deep learning network processes each data point independently of the others. A GNN does not need its own set of parameters for each node or edge. The same parameters are shared across the whole graph, which is why one model can handle graphs of different sizes.

Graph Neural Networks use the graph structure itself as part of the computation, which is very different from standard deep learning networks, where each neuron applies real-valued weights to a fixed set of inputs followed by a nonlinearity. This makes the graph transfer function more complex than in regular deep learning models, since a single data point can involve many graph nodes and graph edges.
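One way to see how a GNN layer differs from a standard dense layer is to check two properties in code. The sketch below assumes a simple mean-aggregation layer with a single weight matrix `W` shared by all nodes (the names and random values are illustrative). It shows that the same `W` works on graphs of different sizes, and that relabeling the nodes only reorders the output rows.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer(A, X, W):
    """Mean-aggregate over each node's neighborhood, then apply shared weights."""
    A_hat = A + np.eye(A.shape[0])
    P = A_hat / A_hat.sum(axis=1, keepdims=True)
    return np.tanh(P @ X @ W)

# One weight matrix, shared by every node of every graph.
W = rng.standard_normal((3, 2))

# A triangle (3 nodes) and a path (5 nodes), each with 3 features per node.
A_tri = np.array([[0, 1, 1],
                  [1, 0, 1],
                  [1, 1, 0]], dtype=float)
A_path = np.zeros((5, 5))
for i in range(4):
    A_path[i, i + 1] = 1.0
    A_path[i + 1, i] = 1.0
X_tri = rng.standard_normal((3, 3))
X_path = rng.standard_normal((5, 3))

out_tri = layer(A_tri, X_tri, W)      # one output row per node
out_path = layer(A_path, X_path, W)   # same W, larger graph

# Relabel the nodes of the path graph with a permutation matrix.
perm = np.array([3, 0, 4, 1, 2])
P_mat = np.eye(5)[perm]
out_relabelled = layer(P_mat @ A_path @ P_mat.T, P_mat @ X_path, W)

# The output rows are permuted in the same way as the nodes.
equivariant = np.allclose(out_relabelled, P_mat @ out_path)
print(out_tri.shape, out_path.shape, equivariant)
```

A dense layer applied to the flattened graph would need a different weight shape for each graph size and would change its output if the nodes were listed in a different order.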

How do we train a graph neural network model?

Graph neural network models can be trained in three settings: supervised, semi-supervised, and unsupervised learning. In the semi-supervised setting, a small number of labeled nodes and a large number of unlabeled nodes are used for training.
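In the semi-supervised setting, a common approach is to compute predictions for every node but evaluate the loss only on the labeled ones. The sketch below assumes a cross-entropy loss and a boolean `train_mask` marking the labeled nodes. The logits are hand-written stand-ins for the output of a GNN, and all names are illustrative.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def masked_cross_entropy(logits, labels, train_mask):
    """Average cross-entropy over the labeled nodes only."""
    probs = softmax(logits)
    picked = probs[np.arange(len(labels)), labels]  # probability of the true class
    losses = -np.log(picked)
    return losses[train_mask].mean()

# Stand-in GNN outputs for 4 nodes and 2 classes.
logits = np.array([[2.0, 0.0],
                   [0.0, 2.0],
                   [0.0, 0.0],
                   [1.0, -1.0]])
# Only nodes 0 and 1 are labeled; the other entries are placeholders.
labels = np.array([0, 1, 0, 0])
train_mask = np.array([True, True, False, False])

loss = masked_cross_entropy(logits, labels, train_mask)
print(loss)
```

The unlabeled nodes still matter during training: they take part in the propagation that produces the logits of the labeled nodes, even though they contribute nothing to the loss directly.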

There are three different types of learning tasks with GNNs:

- Node-level tasks: Node-level tasks focus on nodes and include node classification, node regression, node clustering, etc.

- Edge-level tasks: Edge-level tasks are edge classification and link prediction, which require the model to classify edge types or predict whether an edge exists between two given nodes.

- Graph-level tasks: Graph-level tasks include graph classification, graph regression, and graph matching, all of which need the model to learn graph representations.

The loss function needs to be designed based on the learning task and on the kind of supervision available (supervised, semi-supervised, or unsupervised).
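The three task levels differ mainly in what is done with the node embeddings once the GNN has produced them. The sketch below assumes the embeddings `H` are already computed (the values are made up) and shows one simple output head per level: a linear map per node, a dot-product score per node pair, and mean pooling over the graph. These heads are common choices, not the only ones.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Hypothetical node embeddings from a GNN: 3 nodes, 2 dimensions.
H = np.array([[1.0, 0.0],
              [0.5, 0.5],
              [0.0, 1.0]])

def node_logits(H, W_out):
    """Node-level head: one score vector per node."""
    return H @ W_out

def edge_probability(H, i, j):
    """Edge-level head: link score from the dot product of two embeddings."""
    return sigmoid(H[i] @ H[j])

def graph_embedding(H):
    """Graph-level head: pool all node embeddings into a single vector."""
    return H.mean(axis=0)

W_out = np.array([[1.0, -1.0],
                  [-1.0, 1.0]])

print(node_logits(H, W_out))      # shape (3, 2): per-node class scores
print(edge_probability(H, 0, 1))  # score for a possible edge 0-1
print(edge_probability(H, 0, 2))  # score for a possible edge 0-2
print(graph_embedding(H))         # shape (2,): one vector for the whole graph
```

Each head would then be paired with a matching loss, for example cross-entropy on the node logits, binary cross-entropy on the edge probabilities, or a regression loss on a value predicted from the graph embedding.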

What are some real-world use cases where graph neural networks can be used?

Graph neural networks (GNNs) are one of the more recent deep learning approaches to solving complex real-world problems. They are used to study many different problems, and what these problems have in common is that the data has a graph structure. GNNs have applications in a wide range of sectors, and the possibilities may grow even more as they are integrated into other industrial use cases. They can be applied to graph partitioning, graph clustering, entity resolution in graph databases, dynamic graph labeling, or the identification of specific nodes within a larger network that would be difficult to find through traditional information retrieval methods. Some other common use cases include the following:

- Pattern classification using graphs (e.g., size distribution graphs)

- Graph filtering (e.g., graph-based data integration)

- Graph visualization

- Graph analytics (including graph database management, Facebook prediction, financial risk prediction, market basket analysis, etc.)

- Social network analysis

- Financial asset price prediction: Graph neural networks can be used to predict the price of financial assets by using historical data from stock market prices and other variables such as volume traded or volatility.

With graph neural networks, data scientists can solve complex problems that relate to graphs, from graph partitioning and graph clustering to entity resolution in graph databases. If you would like to learn more about graph neural networks, do stay tuned for my upcoming blog posts on this topic.

