If you are a data scientist or machine learning engineer, you have probably come across the Gradient Boosting Algorithm (GBA), one of the most powerful and widely used techniques for making predictions from data. On many problems, especially those involving structured, tabular data, it produces more accurate predictions than a single model trained on the same data, which is why it has become increasingly popular among data scientists. Let’s take a closer look at GBA and explore how it works with an example.

What is a Gradient Boosting Algorithm?

The gradient boosting algorithm is a machine learning technique for building predictive models. It creates an ensemble of weak learners: it combines many small, simple models (most often shallow decision trees) to obtain a more accurate prediction than any individual model would produce. The models are trained one after another, and each new weak learner is fit to the errors of the ensemble built so far. More precisely, at each iteration the algorithm computes the negative gradient of a loss function with respect to the current predictions and fits the next weak learner to it. Adding that learner, scaled by a small learning rate, moves the model one step downhill on the loss. This is why gradient boosting is often described as gradient descent in function space: instead of adjusting a fixed set of parameters, each step adds a new function to the model. The approach has been applied to problems ranging from data analysis to text processing, and it is particularly strong on structured, tabular data.
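The "gradient descent in function space" idea can be written compactly. The following is a simplified sketch of the standard formulation, assuming a differentiable loss L, a class H of weak learners (for example, shallow trees), M boosting iterations, and a learning rate ν; these symbols are introduced here for illustration. It omits the per-step line search that some formulations add.

```latex
\begin{aligned}
F_0(x) &= \arg\min_{c} \sum_{i=1}^{n} L(y_i, c) \\
r_{im} &= -\left[ \frac{\partial L(y_i, F(x_i))}{\partial F(x_i)} \right]_{F = F_{m-1}}, \quad i = 1, \dots, n \\
h_m &= \arg\min_{h \in \mathcal{H}} \sum_{i=1}^{n} \bigl( r_{im} - h(x_i) \bigr)^2 \\
F_m(x) &= F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M, \quad 0 < \nu \le 1
\end{aligned}
```

For squared-error loss L(y, F) = (y - F)^2 / 2, the pseudo-residual r_im reduces to the ordinary residual y_i - F_{m-1}(x_i), which is why gradient boosting is often described as fitting each new tree to the residuals.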

Gradient boosting is an effective machine learning method for supervised learning problems such as classification and regression. It is also referred to as gradient tree boosting, gradient boosted decision trees (GBDT), and gradient boosting machines (GBM).

How Does Gradient Boosting Algorithm Work?

GBA starts with a baseline prediction (for squared-error regression, typically the mean of the target) and then adds weak learners one at a time. Each new learner corrects part of the error left by the current ensemble, so the predictions on the training data become more accurate. The process stops after a fixed number of learners or, with early stopping, once performance on held-out validation data no longer improves. It’s important to note that each additional learner must add real value: learners added past that point mostly fit noise in the training data and can make predictions on new data worse rather than better.

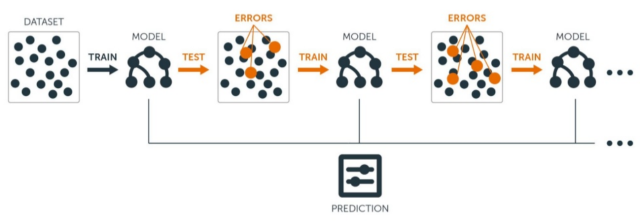
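One common way to implement "stop when no further improvement can be made" is early stopping on a held-out validation set. The sketch below assumes scikit-learn's `GradientBoostingRegressor` and a synthetic dataset from `make_regression`; the parameter values are illustrative choices, not recommendations.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic regression data, used only for illustration.
X, y = make_regression(n_samples=1000, n_features=10, noise=20.0, random_state=0)

model = GradientBoostingRegressor(
    n_estimators=1000,        # upper bound on the number of weak learners
    learning_rate=0.1,
    max_depth=3,              # shallow trees act as weak learners
    validation_fraction=0.2,  # hold out 20% of the training data to monitor improvement
    n_iter_no_change=10,      # stop if the validation score has not improved for 10 stages
    tol=1e-4,                 # minimum improvement that counts as "improved"
    random_state=0,
)
model.fit(X, y)

# n_estimators_ is the number of trees actually built before stopping.
print(f"trees built: {model.n_estimators_}")
```

After fitting, `n_estimators_` is at most the `n_estimators` upper bound; the gap between the two shows how many learners early stopping decided were not worth adding.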

The gradient boosting algorithm combines weak learners (such as decision trees) into an ensemble that makes more accurate predictions than any single one of them. It minimizes a loss function by training a sequence of models iteratively, adding one new tree at each stage. Each new tree is fit to the residual errors (more generally, the negative gradients of the loss) of the whole ensemble built so far, not just of the previously trained tree, and its predictions, scaled by a learning rate, are added to those of the ensemble. This process is repeated until a stopping criterion is met or no further improvement can be found. The process is depicted in the following diagram:

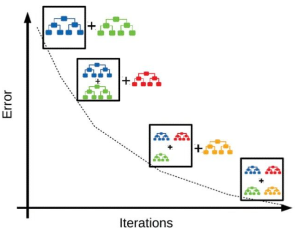

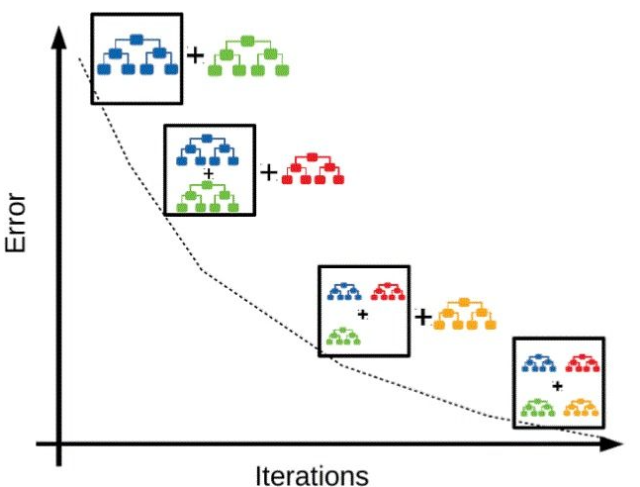
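To make the residual-fitting step concrete, here is a minimal from-scratch sketch in plain Python. It assumes squared-error loss, a single numeric feature, and depth-1 trees (stumps) as the weak learners; `fit_stump`, `stump_predict`, `fit_gradient_boosting`, and `predict` are hypothetical helpers written for this illustration, not a library API.

```python
def fit_stump(x, r):
    """Fit a least-squares regression stump (depth-1 tree) to targets r on one feature x.

    Returns (threshold, left_value, right_value); each leaf value is the mean
    of the targets that fall into that leaf.
    """
    pairs = sorted(zip(x, r))
    n = len(pairs)
    total = sum(t for _, t in pairs)
    best = None
    left_sum = 0.0
    for i in range(1, n):
        left_sum += pairs[i - 1][1]
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # no threshold can separate equal feature values
        left_mean = left_sum / i
        right_mean = (total - left_sum) / (n - i)
        # Maximizing this score is equivalent to minimizing the stump's squared error.
        score = i * left_mean ** 2 + (n - i) * right_mean ** 2
        if best is None or score > best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (score, threshold, left_mean, right_mean)
    if best is None:  # every x is identical: a single leaf predicting the mean
        mean = total / n
        return float("inf"), mean, mean
    return best[1], best[2], best[3]


def stump_predict(stump, xi):
    threshold, left_value, right_value = stump
    return left_value if xi <= threshold else right_value


def fit_gradient_boosting(x, y, n_estimators=100, learning_rate=0.1):
    """Hypothetical helper: gradient boosting with squared-error loss and stumps."""
    f0 = sum(y) / len(y)  # baseline: the constant that minimizes squared error
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_estimators):
        # The negative gradient of (y - F)^2 / 2 is the residual of the current ensemble.
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        # Add the new learner, shrunk by the learning rate; earlier stumps stay fixed.
        pred = [pi + learning_rate * stump_predict(stump, xi) for xi, pi in zip(x, pred)]
    return f0, learning_rate, stumps


def predict(model, x):
    f0, learning_rate, stumps = model
    return [f0 + learning_rate * sum(stump_predict(s, xi) for s in stumps) for xi in x]


# Toy data for illustration: y = x^2 on x = 0..9.
x_demo = [float(i) for i in range(10)]
y_demo = [xi ** 2 for xi in x_demo]
demo_model = fit_gradient_boosting(x_demo, y_demo)
print([round(p, 1) for p in predict(demo_model, x_demo)])
```

Libraries build on this same loop but add deeper trees, many features, and regularization such as subsampling.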

Each iteration of gradient boosting consists of two steps. The first step computes the current residuals, the discrepancies between actual and predicted values (more generally, the negative gradient of the loss). The second step fits a new base learner to those residuals and adds it to the ensemble, scaled by the learning rate. The learners added in earlier iterations are kept fixed; only the new learner is trained, using what the earlier ones got wrong. This iterative process continues until the model reaches its maximum number of estimators or an early-stopping criterion is met. The picture below represents how the error decreases as training progresses and more weak learners are added.
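The error reduction described above can be observed directly. This sketch assumes scikit-learn: `GradientBoostingRegressor.staged_predict` yields the ensemble's predictions after each boosting stage, so the training error can be recorded as weak learners are added. The dataset is synthetic.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error

# Synthetic regression data, used only for illustration.
X, y = make_regression(n_samples=500, n_features=5, noise=10.0, random_state=0)

model = GradientBoostingRegressor(
    n_estimators=200, learning_rate=0.1, max_depth=3, random_state=0
)
model.fit(X, y)

# One training-MSE value per stage: after 1 tree, after 2 trees, and so on.
train_errors = [mean_squared_error(y, pred) for pred in model.staged_predict(X)]
print(f"after 1 tree: {train_errors[0]:.1f}, after {len(train_errors)} trees: {train_errors[-1]:.1f}")
```

With squared-error loss, a learning rate of at most 1, and each leaf predicting the mean residual of its samples, the training error cannot increase from one stage to the next. The error on unseen data behaves differently: it can start rising once the model overfits, which is why stopping decisions are based on validation data.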

Real-World Examples of Gradient Boosting Algorithm

Let’s say we have a dataset containing information about customers and their spending habits over time, and we want to use the gradient boosting algorithm to predict how much money each customer will spend next month. The standard algorithm starts from a constant baseline, such as the average spend, but we can instead start from a simple regression model such as linear regression. We then add weak learners, typically shallow decision trees, each fit to the errors that remain. (A random forest is itself a strong ensemble rather than a weak learner, so it is not the usual choice at this step.) As we add more weak learners, the model fits the training data more closely; we stop once accuracy on held-out data no longer improves, because further learners would mainly fit noise.
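The customer-spend example can be sketched with scikit-learn, whose `GradientBoostingRegressor` accepts an `init` estimator that supplies the baseline prediction. Everything about the data here is hypothetical: `past_spend`, `visits`, and `next_spend` are synthetic stand-ins generated with NumPy, with a step effect in `visits` that a linear model alone cannot capture.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000
past_spend = rng.uniform(0, 500, n)  # hypothetical: last month's spend
visits = rng.integers(0, 20, n)      # hypothetical: visits last month
# Synthetic target: linear in past spend, plus a jump for frequent visitors, plus noise.
next_spend = 0.8 * past_spend + 40 * (visits > 12) + rng.normal(0, 10, n)
X = np.column_stack([past_spend, visits])

X_train, X_test, y_train, y_test = train_test_split(X, next_spend, random_state=0)

# Baseline alone: plain linear regression.
linear = LinearRegression().fit(X_train, y_train)

# Boosting on top of a linear baseline: trees are fit to what the linear model misses.
boosted = GradientBoostingRegressor(
    init=LinearRegression(),
    n_estimators=100,
    learning_rate=0.1,
    max_depth=2,
    random_state=0,
).fit(X_train, y_train)

print("linear only:", mean_squared_error(y_test, linear.predict(X_test)))
print("linear + boosted trees:", mean_squared_error(y_test, boosted.predict(X_test)))
```

On this synthetic data the boosted model should reach a lower test error than the linear baseline, because the trees pick up the jump in `visits` that a straight line cannot represent. On real customer data, the size of that gap depends entirely on the data.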

While gradient boosting can be applied to a wide array of tasks, several real-world applications stand out. Gradient boosted trees, for example, have been applied to complex problems such as weather prediction and medical diagnosis. Gradient boosted decision trees (GBDT) are also used to detect fraudulent credit card transactions, and boosting methods have been used in face detection systems. Because gradient boosting can capture nonlinear relationships among many features, it has also been used in attempts to predict stock market trends. These uses show that gradient boosting can be a powerful tool for solving intricate problems and improving predictive accuracy.

In marketing, gradient boosting is used to help allocate budget across channels so as to maximize return on investment (ROI). It has also been widely used in search ranking (learning to rank), where the goal is to find the most relevant documents among a long list of candidates. In medicine, it has been used for risk prediction, such as estimating the risk of cancer relapse or heart attack. Other examples include fraud detection for loan applications and churn analysis for customer retention initiatives. As these applications show, gradient boosting algorithms are valuable tools for accurate prediction and analytics.
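Fraud detection and churn analysis are binary classification problems. As a hedged sketch, scikit-learn's `GradientBoostingClassifier` can be trained on synthetic, imbalanced data from `make_classification` standing in for such labels; no real fraud or churn data is used, and the parameter values are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced labels (about 10% positives) standing in for fraud or churn.
X, y = make_classification(
    n_samples=2000, n_features=10, n_informative=5, weights=[0.9, 0.1], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

# With the default log-loss, each tree is fit to the pseudo-residuals y - p,
# where p is the ensemble's current predicted probability of the positive class.
clf = GradientBoostingClassifier(
    n_estimators=200, learning_rate=0.1, max_depth=3, random_state=0
)
clf.fit(X_train, y_train)

scores = clf.predict_proba(X_test)[:, 1]  # probability of the positive class
print("ROC AUC:", roc_auc_score(y_test, scores))
```

ROC AUC is used here rather than plain accuracy because, with only about 10% positives, a model that always predicts the majority class would already be about 90% accurate.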

Conclusion

In conclusion, gradient boosting algorithms are powerful tools for making accurate predictions from data and have proven effective in many applications. By combining multiple weak learners into one strong model, GBAs can reach high accuracy on a wide range of tasks, especially when the number of learners and the learning rate are tuned to avoid overfitting. By understanding how GBAs work and using them effectively, you can gain valuable insight from your datasets and make better decisions based on your findings!
