In this post, you will get a quick overview of the ethical AI principles published by companies such as IBM, Intel, Google, and Microsoft.

The ethical AI principles of each of these companies are listed below:

- IBM Ethical AI Principles: The following represents five ethical AI principles of IBM:

- Accountability: AI designers and developers are responsible for considering AI design, development, decision processes, and outcomes.

- Value alignment: AI should be designed to align with the norms and values of your user group.

- Explainability: AI should be designed so that humans can easily perceive, detect, and understand its decision process and its predictions or recommendations. This is also sometimes referred to as the interpretability of AI. Simply put, users have every right to ask for details about the predictions made by AI models, such as which features contributed to a prediction and to what extent. Every prediction made by an AI model should be reviewable.

- Fairness: AI must be designed to minimize bias and promote inclusive representation.

- User data rights: AI must be designed to protect user data and preserve the user’s power over access and uses.
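As a minimal sketch of what "which features contributed to a prediction and to what extent" can look like, consider a simple linear scoring model, where each feature's contribution is just its weight multiplied by its value. The function name `linear_contributions`, the weights, and the applicant values below are all hypothetical and chosen only for illustration; they do not come from IBM's guidance, and more complex models would need dedicated explanation techniques.

```python
def linear_contributions(weights, bias, features):
    """Return the model score and each feature's additive contribution to it.

    Assumes a linear model: score = bias + sum(weight * value).
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical loan-scoring weights and applicant, for illustration only.
weights = {"income": 0.5, "debt": -2.0, "years_employed": 1.0}
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 3.0}

score, contributions = linear_contributions(weights, bias=1.0, features=applicant)

# List features from most to least influential (by absolute contribution).
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

A per-prediction breakdown like this is one way to make an individual decision reviewable, since a user can see which inputs pushed the score up or down.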

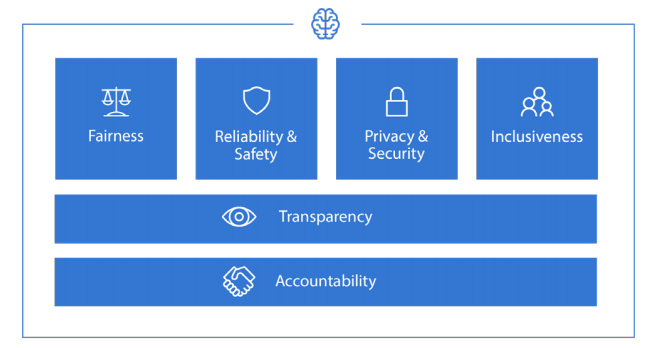

- Microsoft Ethical AI Principles: The following represents six ethical AI principles of Microsoft:

Fig: Microsoft Ethical AI Principles – Image Courtesy (Microsoft)

- Fairness: AI systems should treat everyone in a fair and balanced manner and not affect similarly situated groups of people in different ways. For example, when AI systems provide guidance on medical treatment, loan applications or employment, they should make the same recommendations for everyone with similar symptoms, financial circumstances or professional qualifications.

- Accountability: The people and companies who design and deploy AI systems must be accountable for how their systems operate.

- Transparency: AI systems should be understandable. When AI systems are used to help make decisions that impact people’s lives, it is particularly important that people, including ML developers and end users, understand how those decisions were made.

- Inclusivity: AI systems should empower everyone and engage people.

- Reliability & safety: The quality and suitability of the data and models used to train and operate AI-based products and services should be evaluated systematically at regular intervals. Potential inadequacies in the training data should also be examined and reported at regular intervals. It must be clearly laid out when and how an AI system should seek human input or fall back to alternate models or rules during critical situations.

- Privacy & security: AI systems should be secure and respect privacy.
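One simple way to decide when an AI system should seek human input is a confidence threshold: predictions below the threshold are routed to a person instead of being acted on automatically. The sketch below assumes the model returns a probability per label; the function name `route_prediction`, the labels, and the 0.8 threshold are hypothetical choices for illustration, not something prescribed by Microsoft.

```python
def route_prediction(probabilities, threshold=0.8):
    """Return (label, "auto") when the top probability meets the threshold,
    otherwise (None, "human_review") so a person makes the decision."""
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    if confidence >= threshold:
        return label, "auto"
    return None, "human_review"


# Hypothetical model outputs for two loan applications.
confident_case = route_prediction({"approve": 0.95, "deny": 0.05})
uncertain_case = route_prediction({"approve": 0.55, "deny": 0.45})

print(confident_case)
print(uncertain_case)
```

In practice the threshold would be chosen per use case, and the "human_review" branch could equally trigger a simpler rule-based fallback.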

- Google Ethical AI Principles: The following represents seven ethical AI principles of Google. The details can be found on this page, Ethical AI: Lessons from Google AI Principles.

- Be socially beneficial

- Avoid creating or reinforcing unfair bias (Fairness)

- Be built and tested for safety (Safety)

- Be accountable to people (Accountability)

- Incorporate privacy design principles (Privacy and transparency in the use of data for training)

- Uphold high standards of scientific excellence (Share AI knowledge by publishing educational materials, best practices, and research that enable more people to develop useful AI applications.)

- Be made available for uses that accord with these principles.

- Intel Ethical AI Principles: The following represents ethical AI principles of Intel:

- Liberate data responsibly: The following represents key aspects of using data responsibly:

- Create reliable data sets at regular intervals for testing the models

- Work with stakeholders across departments to provide access to data in an appropriate manner (for example, encrypted). This could also be termed federated access to data.

- Rethink privacy

- Require Accountability for Ethical Design and Implementation


Common (Top) Ethical AI Principles

Based on the above, let’s look at some of the common AI principles which could become part of the code of ethics of artificial intelligence:

- Fairness: AI systems (models) should treat everyone in a fair and balanced manner and not affect similarly situated groups of people in different ways. Appropriate fairness metrics should be presented to stakeholders so that they can evaluate model fairness.

- Accountability: Those involved in AI design and development (architects and developers) are accountable for considering the AI system’s impact on end users, as are the companies invested in the creation of AI-powered solutions.

- Transparency: AI systems (models) should be explainable and understandable. The decision process (including the models used for training and testing) and the predictions should be reviewable. End users and stakeholders should be informed about the use of AI and allowed to ask how the predictions are made (which features contributed, and to what extent).

- Data privacy & security

- Reliability & safety
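To make the idea of a fairness metric concrete, here is a minimal sketch of one common choice, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The function names, predictions, and group labels are hypothetical and only for illustration; which metric is appropriate depends on the use case, and this is just one of several that could be presented to stakeholders.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Return the gap between the highest and lowest group selection rate.

    A value of 0 means every group receives positive predictions at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions (1 = approved) for two groups, "a" and "b".
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)
print(gap)
```

A large gap does not by itself prove unfair treatment, but it is the kind of number that can be reviewed alongside other evidence when evaluating a model.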

Summary

In this post, you learned about the ethical AI principles recommended by companies such as IBM, Google, Intel, and Microsoft.
