Data science and machine learning careers have primarily been associated with building models that make numerical or class-related predictions. This is unlike conventional software development, which involves both developing and testing the software, with corresponding career profiles: software developers/engineers and test engineers/QA professionals. In machine learning, however, the main career profile is the data scientist. The word “testing”, when used in relation to machine learning models, mostly refers to evaluating model performance in terms of metrics such as accuracy or precision. In other words, “testing” means different things in conventional software development and in machine learning model development.

Machine learning models also need to be tested from a quality assurance perspective, just as conventional software is. Techniques such as blackbox and whitebox testing therefore apply to machine learning models as well, as a way of performing quality control checks on them. There appears to be a career for test engineers/QA professionals in the field of artificial intelligence.

In this post, you will learn about different blackbox testing techniques for machine learning (ML) models. The following topics are covered:

- A quick introduction to Machine Learning

- What is Blackbox testing?

- Challenges with Blackbox testing for machine learning models

- Blackbox testing techniques for ML models

- Skills needed for Blackbox testing of ML models

One of the key objectives of blackbox testing of machine learning models is to ensure the quality of the models in a sustained manner.

A Quick Introduction to Machine Learning

If you are new to machine learning, here is a quick introduction.

Simply put, machine learning models are a class of software that learns from a given data set and then makes predictions on new data based on what it has learned. In other words, a machine learning model is trained with an existing data set in order to make predictions on a new data set. For example, a model could be trained with patients’ diagnostic reports, labelled by whether the patient has a cardiac disease, and then used to predict whether a new patient is suffering from the disease when his diagnostic data is fed into the model. This class of learning algorithms is called supervised learning.
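The train-then-predict idea can be sketched in a few lines of Python. This is only an illustration: the 1-nearest-neighbour approach, the feature choice (resting heart rate, cholesterol) and every data value below are made up for the example and are not taken from any real diagnostic data set.

```python
# Made-up training data: (resting heart rate, cholesterol) -> diagnosis label.
TRAINING_DATA = [
    ((60, 180), "healthy"),
    ((62, 190), "healthy"),
    ((95, 260), "cardiac disease"),
    ((100, 280), "cardiac disease"),
]


def train(examples):
    """'Training' a 1-nearest-neighbour model just stores the labelled examples."""
    return list(examples)


def predict(model, features):
    """Return the label of the stored example closest to `features`."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(model, key=lambda example: squared_distance(example[0], features))
    return label


model = train(TRAINING_DATA)
print(predict(model, (98, 270)))  # prediction for a new, unseen patient
```

A real model would use many more features and a proper learning algorithm, but the shape is the same: labelled data goes in during training, and a label comes out for new data.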

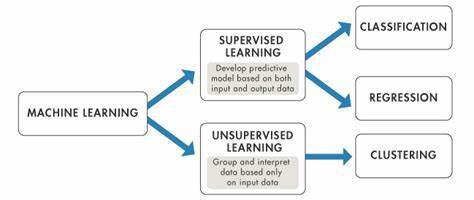

The following represent different classes of machine learning algorithms:

- Supervised learning

  - Regression: Regression models are used to make numerical predictions. For example, what would be the price of the stock on a given day?

  - Classification: Classification models are used to predict the class of a given data point. For example, whether a person is suffering from a disease or not.

- Unsupervised learning

  - Clustering: Clustering models are used to learn different groups (clusters) from a given data set without being fed any label information, unlike supervised learning. Once the model is learned, it is used to predict the cluster of new data. For example, grouping news articles into different clusters and then associating labels with the learned clusters.

- Reinforcement learning

The following diagram represents the supervised and unsupervised learning aspects of machine learning:

Fig. Supervised & Unsupervised Learning

What is Blackbox Testing?

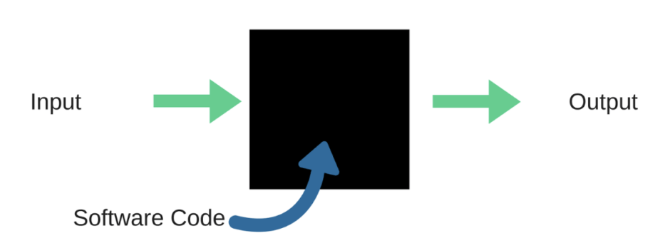

Blackbox testing is testing the functionality of an application without knowing the details of its implementation, including its internal program structure, data structures, etc. Test cases for blackbox testing are created based on the requirement specifications, so it is also called specification-based testing. The following diagram represents blackbox testing:

Fig 1. Blackbox testing

When applied to machine learning models, blackbox testing would mean testing machine learning models without knowing the internal details such as features of the machine learning model, the algorithm used to create the model etc. The challenge, however, is to identify the test oracle which could verify the test outcome against the expected values known beforehand. This is discussed in the following section.

Challenges with Blackbox Testing of Machine Learning Models

Machine learning models have been categorized as non-testable, which makes blackbox testing of ML models a challenge. Let’s try to understand why ML models are termed non-testable and how they could be made testable.

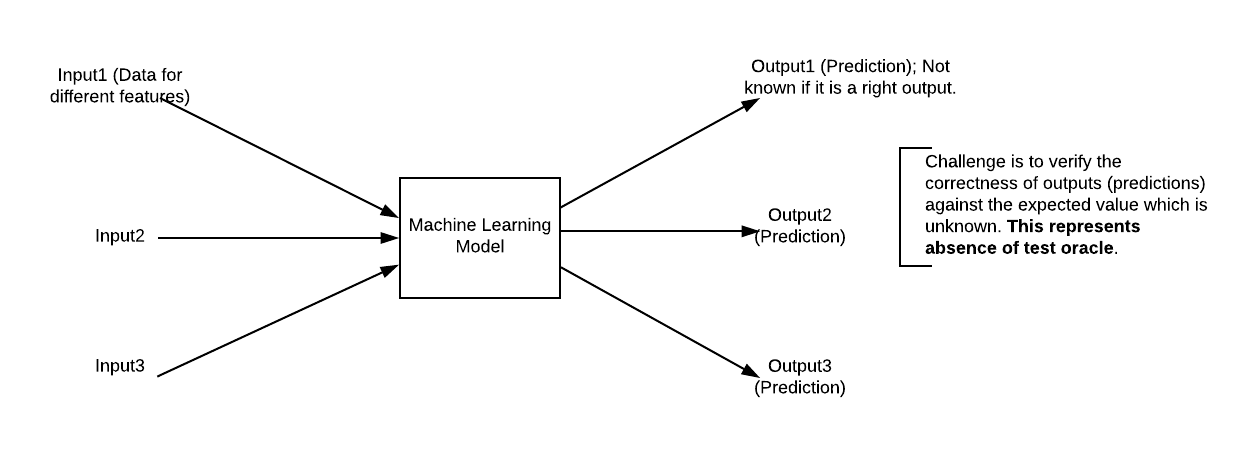

In conventional software development, a frequently invoked assumption is the presence of a test oracle: a human tester/test engineer, or some testing mechanism such as a test program, that can verify the output of the program against an expected value known beforehand. In the case of machine learning models, there are no expected values known beforehand, because the output of an ML model is a prediction. It is therefore not easy to verify a prediction against an expected value. That said, during the development (model building) phase, data scientists test model performance by comparing the model outputs (predicted values) with the actual values. This is not the same as testing the model on arbitrary inputs for which the expected value is not known.

Fig 2. The absence of Test Oracle

In cases where test oracles are not available, the concept of pseudo oracles is introduced. A pseudo oracle covers the scenario in which the outputs for a given set of inputs can be compared with each other to determine correctness. Consider the following scenario: a program for solving a problem is coded using two different implementations, one of which is chosen as the main program. The same input is passed through both implementations. If the outputs are the same or comparable (falling within a given range, or above/below a predetermined value), the main program can be said to be working as expected. The goal is to find such techniques to test, or perform quality control checks on, machine learning models from a quality assurance perspective.
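The two-implementation scenario can be sketched as follows. This is a minimal illustration, not a prescribed method: `mean_main`, `mean_reference` and the tolerance value are hypothetical names and choices introduced for the example, with a simple mean calculation standing in for "a program for solving a problem".

```python
import math


def mean_main(values):
    """Main implementation under test: incremental (running) mean."""
    mean = 0.0
    for count, value in enumerate(values, start=1):
        mean += (value - mean) / count
    return mean


def mean_reference(values):
    """Independent second implementation that acts as the pseudo oracle."""
    return math.fsum(values) / len(values)


def agrees_with_pseudo_oracle(values, tolerance=1e-9):
    """True when both implementations give comparable outputs for the same input."""
    return math.isclose(
        mean_main(values), mean_reference(values), rel_tol=0.0, abs_tol=tolerance
    )


print(agrees_with_pseudo_oracle([1.0, 2.0, 3.0, 4.0]))
```

Neither implementation is known to be correct in advance; agreement within the tolerance only raises confidence in the main program, while disagreement flags an input worth investigating.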

Blackbox Testing Techniques for Machine Learning Models

The following are some of the techniques that could be used to perform blackbox testing on machine learning models:

- Model performance

- Metamorphic testing

- Dual coding

- Coverage guided fuzzing

- Comparison with simplified, linear models

- Testing with different data slices

Model Performance

Testing model performance is about running the model on test data or new data sets and comparing its performance, in terms of metrics such as accuracy and recall, against the pre-determined values for the model that was built and moved into production. This is the simplest of the techniques that could be used for blackbox testing.
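A performance check of this kind might look like the sketch below. The stand-in `threshold_model`, the test data, the baseline accuracy of 0.80 and the allowed drop of 0.02 are all assumptions made for illustration; in practice the baseline would come from the model already in production.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the actual labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def check_model_performance(predict, inputs, actual, baseline_accuracy, max_drop=0.02):
    """Pass when accuracy on new data stays within `max_drop` of the baseline."""
    predictions = [predict(x) for x in inputs]
    observed = accuracy(actual, predictions)
    return observed >= baseline_accuracy - max_drop, observed


def threshold_model(x):
    """Hypothetical stand-in for the deployed model (treated as a black box)."""
    return 1 if x >= 0.5 else 0


passed, observed = check_model_performance(
    threshold_model,
    inputs=[0.1, 0.4, 0.6, 0.9, 0.45],
    actual=[0, 0, 1, 1, 1],
    baseline_accuracy=0.80,
)
print(passed, observed)
```

The same structure works for recall or any other metric: compute it on fresh data and compare it with the agreed baseline.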

Metamorphic Testing

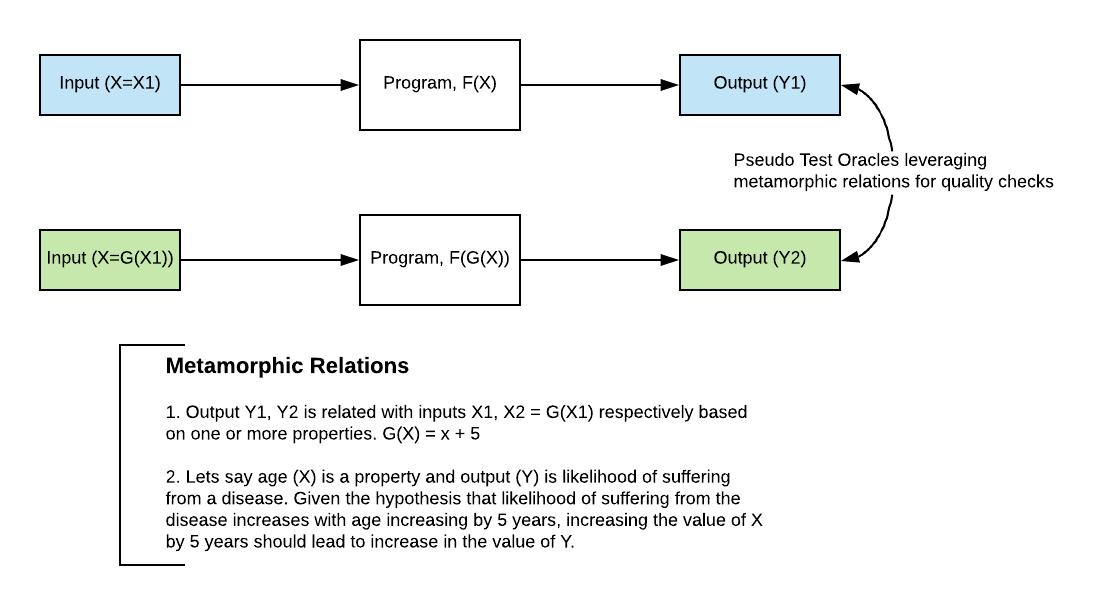

In metamorphic testing, one or more properties are identified that represent a metamorphic relationship between input-output pairs. For example, suppose an ML model is built that predicts the likelihood of a person suffering from a particular disease based on predictor variables such as age, smoking habit, gender, and exercise habits. Based on detailed analysis, it is derived that if the person is a male smoker, the likelihood of his suffering from the disease increases by 5% when his age increases by 3 years. This can be used for metamorphic testing, because the property, age, represents a metamorphic relationship between inputs and outputs. The following diagram represents metamorphic testing:

Fig 3 – Metamorphic testing of machine learning models

In metamorphic testing, the test cases that result in success lead to another set of test cases which could be used for further testing of machine learning models. The following represents a sample test plan:

- Given the person is a male and a smoker, determine the likelihood of the person suffering from the disease when his age is 30 years.

- Increase the age by 5 years. The likelihood should increase by more than 5%.

- Increase the age by 10 years. The likelihood should increase by more than 15% but less than 20%.

Test cases such as the above can be executed until all of them succeed or one of them fails. If one of the test cases fails, a defect could be logged, which could then be dealt with by data scientists.
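The sample test plan can be sketched in code as follows. The `standin_model` is a hypothetical placeholder for the real model, built so that the likelihood rises by 5 percentage points per 3 years of age for male smokers; the sketch also assumes the percentages in the plan are percentage-point increases over the likelihood at age 30.

```python
def standin_model(age, is_male, is_smoker):
    """Hypothetical model: returns disease likelihood in [0, 1]."""
    likelihood = 0.10
    if is_male and is_smoker:
        likelihood += (0.05 / 3.0) * (age - 30)
    return min(max(likelihood, 0.0), 1.0)


def run_metamorphic_plan(predict, base_age=30):
    """Run the steps in order; stop at the first failure, as in the plan above."""
    baseline = predict(base_age, True, True)
    steps = [
        ("age +5: increase above 5 points", 5, lambda d: d > 0.05),
        ("age +10: increase between 15 and 20 points", 10, lambda d: 0.15 < d < 0.20),
    ]
    results = []
    for name, years, relation_holds in steps:
        increase = predict(base_age + years, True, True) - baseline
        passed = relation_holds(increase)
        results.append((name, passed))
        if not passed:
            break  # a failed step would be logged as a defect
    return results


for name, passed in run_metamorphic_plan(standin_model):
    print(name, "PASS" if passed else "FAIL")
```

Note that no expected likelihood is needed for any single input; only the relationship between the outputs is checked.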

Further details could be found on this blog, metamorphic testing of machine learning models.

Dual Coding

With the dual coding technique, the idea is to build different models based on different algorithms and compare the predictions from each of these models for a particular input data set. Let’s say a classification model is built with different algorithms such as random forest, SVM, and neural network. All of them demonstrate a comparable accuracy of 90% or so, with random forest showing an accuracy of 94%. This results in the selection of random forest. However, when testing the model for quality control checks, all of the above models are preserved and the input is fed into all of them. For inputs where the majority of the remaining models give a prediction that does not match that of the random forest model, a bug/defect could be raised in the defect tracking system. These bugs could later be prioritized and dealt with by data scientists.
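The majority-disagreement check can be sketched as below. The three models are hypothetical threshold functions standing in for the random forest, SVM, and neural network; only the comparison logic is the point of the example.

```python
def find_disagreements(primary, others, inputs):
    """Return inputs where most of the other models contradict the primary model."""
    defects = []
    for x in inputs:
        primary_prediction = primary(x)
        other_predictions = [model(x) for model in others]
        disagreeing = sum(p != primary_prediction for p in other_predictions)
        if disagreeing > len(others) / 2:
            defects.append((x, primary_prediction, other_predictions))
    return defects


# Hypothetical stand-ins for the selected model and the preserved alternatives.
def random_forest_model(x):
    return int(x > 0.50)


def svm_model(x):
    return int(x > 0.40)


def neural_network_model(x):
    return int(x > 0.45)


defects = find_disagreements(
    random_forest_model,
    [svm_model, neural_network_model],
    inputs=[0.2, 0.42, 0.48, 0.7],
)
for x, primary_prediction, other_predictions in defects:
    print(f"input={x} primary={primary_prediction} others={other_predictions}")
```

Each entry in `defects` is a candidate for the defect tracking system; it does not prove the selected model is wrong, only that the input deserves a closer look.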

Coverage Guided Fuzzing

Coverage guided fuzzing is a technique in which the data fed into the machine learning model is planned such that all of the feature activations get tested. Take, for instance, models built with neural networks, decision trees, random forests, etc. Let’s say the model is built using a neural network. The idea is to come up with data sets (test cases) that result in the activation of each of the neurons present in the network. This technique sounds more like whitebox testing. However, it becomes part of blackbox testing because of the feedback obtained from the model, which is then used to guide further fuzzing; hence the name, coverage guided fuzzing. This is a work in progress. I will be posting a detailed write-up on this along with sample test cases.
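Until that write-up is available, the feedback loop can be illustrated with a toy sketch. Everything here is an assumption made for the example: the four-neuron layer and its weights are invented, and the model is assumed to report which hidden units fired, which is the feedback that steers the fuzzer.

```python
import random

# Hypothetical tiny layer: 2 inputs -> 4 hidden ReLU units. Weights are made up.
WEIGHTS = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
BIASES = [-1.0, -1.0, -1.0, -1.0]


def active_neurons(x):
    """Return the indices of hidden units whose pre-activation is positive for x."""
    active = set()
    for index, (weights, bias) in enumerate(zip(WEIGHTS, BIASES)):
        if weights[0] * x[0] + weights[1] * x[1] + bias > 0:
            active.add(index)
    return active


def fuzz(seed_input, iterations=500, step=2.0, rng_seed=0):
    """Mutate corpus inputs at random; keep a mutant only if it adds coverage."""
    rng = random.Random(rng_seed)
    corpus = [seed_input]
    covered = set(active_neurons(seed_input))
    for _ in range(iterations):
        parent = rng.choice(corpus)
        child = tuple(value + rng.uniform(-step, step) for value in parent)
        newly_covered = active_neurons(child) - covered
        if newly_covered:  # coverage feedback guides which inputs are kept
            corpus.append(child)
            covered |= newly_covered
    return corpus, covered


corpus, covered = fuzz((0.0, 0.0))
print(f"{len(corpus)} inputs kept, neurons covered: {sorted(covered)}")
```

The inputs kept in `corpus` form a test set that exercises every neuron the fuzzer managed to reach; they can then be fed to the other techniques described above.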

Skills Needed for Blackbox Testing of ML Models

Given the testing techniques mentioned earlier, the following are some of the skills that test engineers or QA professionals would need in order to play a vital role in performing quality control checks on AI or machine learning models:

- Introductory knowledge of machine learning concepts: the different kinds of learning tasks (such as regression and classification) and related algorithms.

- Knowledge of terminologies such as features, feature importance etc.

- Knowledge of terminologies related to model performance (Precision, recall, accuracy etc)

- General data analytics skills

- Scripting knowledge in one or more scripting languages.


Summary

If you are a QA professional or test engineer working in the QA organization/department of your company, you could explore a career in data science/machine learning, testing and performing quality control checks on machine learning models from a QA perspective. Blackbox testing of machine learning models is one of the key activities you would perform when assuring the quality of ML models. Please feel free to comment, make suggestions, or ask for clarifications.
