Today, as organizations aim to become data-driven, it is imperative that their data science and product management teams understand the importance of using A/B testing to validate or support their decisions. A/B testing is a powerful technique that allows product management and data science teams to test changes to their products or services with a small group of users before implementing them on a larger scale. In data science projects, A/B testing can help measure the impact of machine learning models, the content driven by their predictions, and other data-driven changes.

This blog explores the principles of A/B testing and its applications in data science. Product managers and data scientists can learn how to design effective experiments, calculate statistical significance, and avoid common pitfalls. By the end of this blog, you’ll have a solid understanding of how A/B testing can help optimize your data science projects and drive growth for your business.

A/B Testing in Data Science Projects

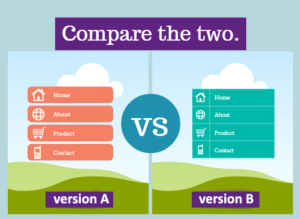

A/B testing is a technique used to compare two different versions of a product, webpage, or marketing campaign to determine which one performs better. It involves randomly dividing a sample of users into two groups, typically of equal size, called the control group and the treatment group. Users in the control group experience the existing version (version A) of the product, webpage, or marketing campaign, while users in the treatment (or experimental) group experience the new version (version B). By analyzing metrics from both groups, product teams and business owners can determine which version performs better and make a data-driven decision about which version to use in production.
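To make the random split concrete, here is a minimal sketch that shuffles a list of user IDs and cuts it in half. The `split_users` helper and the `user_i` IDs are hypothetical, and the fixed `seed` is an assumption added only so the split is reproducible. When the number of users is odd, the treatment group receives the extra user.

```python
import random

def split_users(user_ids, seed=42):
    """Randomly split user IDs into control (version A) and treatment (version B) groups."""
    shuffled = list(user_ids)      # copy so the caller's list is not modified
    rng = random.Random(seed)      # seeded generator for a reproducible split
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}

# Hypothetical user IDs
groups = split_users([f"user_{i}" for i in range(10)])
print(len(groups["control"]), len(groups["treatment"]))  # 5 5
```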

Here’s an example of A/B testing in the real world. Imagine an e-commerce website that wants to improve the conversion rate of its checkout page. The website’s product team decides to test two versions of the page: one with a green “Buy Now” button and one with a blue “Buy Now” button. They randomly assign half of their users (the control group) to see the green button (version A) and the other half (the treatment group) to see the blue button (version B). After a period of time, they compare the conversion rates of both groups and find that the group that saw the green button had a higher conversion rate, and that the difference is statistically significant. As a result, the product team decides to use the green “Buy Now” button on the checkout page for all users.
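One common way to check whether the difference in conversion rates is statistically significant is a two-proportion z-test. The sketch below uses only the Python standard library; the visitor and conversion counts are made up for illustration and are not results from a real test.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: green button (A, control) vs blue button (B, treatment)
z, p = two_proportion_z_test(conv_a=520, n_a=10_000, conv_b=450, n_b=10_000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A negative `z` means version B converted less often than version A. A p-value below the chosen significance level (often 0.05) indicates that a gap this large would be unlikely if the two buttons truly performed the same.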

Designing an A/B test for a data science project involves the following key steps. Product managers, business analysts, and data scientists would together play a key role in designing the A/B test plan.

- Identify the specific change that we want to test. For example, this could be a new machine learning model and its related personalized content strategy, a marketing campaign, etc. It’s important to define the change as precisely as possible to ensure that the experiment is focused and meaningful.

- Once we’ve identified the change we want to test, the next step is to define the treatment (experimental) and control groups of users. The treatment group receives the change we want to test, while the control group does not. It’s important to ensure that users are randomly assigned to the groups and that the sample size is large enough to detect a meaningful difference with statistical significance. It may also be a good idea to segment (stratify) the groups based on relevant factors such as user demographics or behavior, so that these factors are balanced between the groups.

- We’ll then need to define the metrics that will be used to evaluate the impact of the change. These metrics should be specific, measurable, and relevant to our business goals. Common metrics include click-through rate, conversion rate, and user engagement.

- Finally, after the metrics are gathered, we’ll calculate the statistical significance of the results to assess whether any differences between the treatment and control groups are likely due to chance.
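To judge whether a sample size is “large enough”, teams often estimate the number of users needed per group before the test starts. The sketch below uses a standard normal-approximation formula for comparing two proportions; the 5% baseline and 6% target conversion rates are hypothetical, and the defaults (`alpha=0.05`, `power=0.8`) are common conventions rather than requirements.

```python
import math
from statistics import NormalDist

def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect a change in a conversion rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    effect = p_expected - p_baseline               # smallest change worth detecting
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical: 5% baseline conversion, hoping to detect a lift to 6%
print(sample_size_per_group(0.05, 0.06))
```

Smaller expected effects require many more users, which is why defining the change and the metric precisely matters before the test begins.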

By following the above steps, we can design effective experiments for A/B testing in data science projects that provide valuable insights and drive growth for our business.

Examples of Data Science Projects Leveraging A/B Testing

In data science projects, A/B testing can help optimize machine learning models and related content strategies, marketing campaigns, etc. By randomly assigning users to experimental and control groups and comparing their behavior, product and business teams can gain valuable insights and make data-driven decisions. Here are some real-world business use cases where the effectiveness of data science projects involving machine learning models can be assessed using A/B testing.

Improving the effectiveness of personalized email campaigns

Let’s take the example of improving the effectiveness of personalized email campaigns. One approach is to build one or more machine learning models that predict which products a customer is most likely to purchase based on their purchase history and browsing behavior. These predictions can then be used to personalize the content of the email campaigns.

Before adopting the proposed solution in production, we need to evaluate the effectiveness of the personalized email campaigns based on the machine learning models’ predictions. This is where A/B testing comes in. As part of the A/B test plan, we randomly assign a portion of the email list to the treatment group, which receives personalized email content generated by the machine learning models (version B). The rest of the email list is assigned to the control group, which receives generic email content (version A).

The A/B test is then executed, and metrics such as open rate, click-through rate, and conversion rate are tracked for both groups over a period of several weeks. After analyzing the data, we can determine whether the treatment group shows a statistically significant increase in conversion rate compared to the control group. If it does, this suggests that the personalized email campaigns built on the machine learning models’ predictions indeed improved the effectiveness of the marketing campaigns, and we can then decide to roll them out to all customers.
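As a sketch of the analysis step, the code below computes per-group metrics from aggregate counts and a 95% Wald confidence interval for the difference in conversion rates. All counts are hypothetical, and the metrics are assumed to be measured per email sent (some teams instead define click-through rate as clicks divided by opens).

```python
import math
from statistics import NormalDist

# Hypothetical aggregate counts collected during the email test
results = {
    "control":   {"sent": 20_000, "opened": 4_200, "clicked": 600, "converted": 180},
    "treatment": {"sent": 20_000, "opened": 4_600, "clicked": 820, "converted": 250},
}

def rates(g):
    """Open, click-through and conversion rates per email sent for one group."""
    return {
        "open_rate": g["opened"] / g["sent"],
        "ctr": g["clicked"] / g["sent"],
        "conversion_rate": g["converted"] / g["sent"],
    }

def diff_ci(conv_a, n_a, conv_b, n_b, level=0.95):
    """Wald confidence interval for the difference in conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se

for name, g in results.items():
    print(name, {k: round(v, 4) for k, v in rates(g).items()})

c, t = results["control"], results["treatment"]
low, high = diff_ci(c["converted"], c["sent"], t["converted"], t["sent"])
print(f"95% CI for the conversion-rate lift: [{low:.4f}, {high:.4f}]")
```

If the whole interval lies above zero, the data are consistent with a statistically significant improvement at the 5% level; an interval that straddles zero means the test has not shown a reliable difference.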

Increasing the number of user clicks in a custom search engine

Let’s say we have a search engine in place and we want to increase the number of clicks users make after running a query. Suppose the existing search engine is built on top of Elasticsearch. Our hypothesis is that a solution built on a few machine learning models could improve the ranking of search results based on their relevance to users’ queries, which in turn could increase the number of clicks by end users. One approach is to simply build the solution and deploy it. This may succeed or fail, since it hasn’t been adequately tested in the production environment. Another approach is to use A/B testing.

To evaluate the effectiveness of the solution based on machine learning models, we can design an A/B test plan. We randomly assign a portion of the user base to the treatment (experimental) group, which receives search results ranked by the new machine learning models. The rest of the user base is assigned to the control group, which continues to receive search results ranked by the existing Elasticsearch-based ranking.
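In a live search engine, a user should see the same ranking on every visit, otherwise the two experiences get mixed. A common technique is deterministic, hash-based bucketing. The sketch below assumes users have stable string IDs; the `assign_group` helper and the `search-ranking-v1` experiment name are hypothetical.

```python
import hashlib

def assign_group(user_id: str, experiment: str = "search-ranking-v1",
                 treatment_share: float = 0.5) -> str:
    """Deterministically bucket a user so they see the same ranker on every visit."""
    # Salting with the experiment name gives each experiment an independent split
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1)
    bucket = int(digest[:8], 16) / 2**32
    return "treatment" if bucket < treatment_share else "control"

# Usage: the same user always lands in the same group
groups = [assign_group(f"user_{i}") for i in range(10_000)]
print(groups.count("treatment") / len(groups))  # close to 0.5 for large user counts
```

Because assignment depends only on the user ID and experiment name, no lookup table is needed, and `treatment_share` can start small (for example 0.1) to limit exposure to the new ranker.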

We can track metrics such as click-through rate and user satisfaction for both groups over a period of several weeks. After analyzing the data, we need to determine whether the treatment group shows a statistically significant increase in click-through rate compared to the control group. If it does, this supports the hypothesis that the machine learning solution indeed improved the relevance of search results, which can justify deploying it to production.
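A permutation test is one distribution-free way to check whether a click-through-rate lift is significant: it repeatedly shuffles the group labels and counts how often a random relabeling produces a lift at least as large as the observed one. The per-user outcomes below are hypothetical, and each entry is assumed to be one independent user (1 if the user clicked at least one result, 0 otherwise).

```python
import random

def permutation_test(control, treatment, n_permutations=2_000, seed=0):
    """One-sided permutation test: is the treatment mean higher than the control mean?"""
    rng = random.Random(seed)
    observed = sum(treatment) / len(treatment) - sum(control) / len(control)
    pooled = list(control) + list(treatment)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random relabeling under the null hypothesis of no difference
        diff = sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / (len(pooled) - n_t)
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive
    return observed, (extreme + 1) / (n_permutations + 1)

# Hypothetical per-user outcomes: 1 = clicked a search result, 0 = did not
control = [1] * 300 + [0] * 700    # 30% CTR with the existing ranking
treatment = [1] * 360 + [0] * 640  # 36% CTR with the ML-based ranking
lift, p_value = permutation_test(control, treatment)
print(f"CTR lift = {lift:.3f}, one-sided p-value = {p_value:.4f}")
```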

In this manner, by using A/B testing to evaluate the effectiveness of our machine learning models, we can ensure that our changes are data-driven and have a positive and desired impact on user satisfaction and engagement with our search engine.

Conclusion

A/B testing is a powerful technique for optimizing data science projects. By measuring the impact of changes on a small group of users before rolling them out to a larger audience, businesses can make data-driven decisions that drive growth and improve user engagement. We explored the principles of A/B testing and how they can be applied to data science projects, from improving the effectiveness of marketing campaigns to increasing the number of user clicks in a search engine. We also discussed the key steps involved in designing effective A/B tests, including defining the change to be tested, defining the experimental and control groups, and defining relevant metrics.

Whether you’re a product manager or a data scientist, understanding the principles of A/B testing is essential for making data-driven decisions that have a measurable impact on your business metrics. By leveraging A/B testing in your data science projects, you can optimize machine learning models, personalized content strategies, and marketing campaigns to improve user engagement and drive growth.
