In machine learning, confounder features or variables can significantly affect the accuracy and validity of your model. A confounder feature is a variable that influences both the predictor and the outcome or response variables, creating a false impression of causality or correlation. This makes it harder to determine whether the observed relationship between two variables is genuine or merely due to some external factor.

Example of Confounder Features

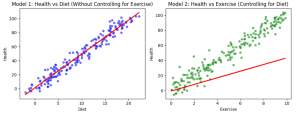

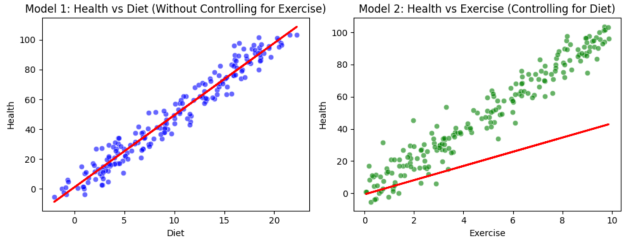

For instance, consider a model that predicts a person’s likelihood of heart disease based on their diet. You may conclude that people eating a balanced diet are less likely to have heart disease, but this relationship could be confounded by exercise habits. People who eat well are also more likely to exercise, and exercise itself is an important factor influencing heart health. If exercise is not properly accounted for in the model, the impact of diet might be overestimated. This is a classic example of a confounding feature: exercise influences both the diet and the outcome (heart health), leading to a potential bias in your conclusions. The following plot represents the impact of including confounder features while training machine learning models:

Make a note of the following in above pictures:

- The first scatter plot shows a positive correlation between Diet and Health, but this relationship is partially due to the confounder (Exercise). The model does ignore the confounding effect of Exercise. In other words, it is not included as one of the features while training the model. The relationship between diet and health appears stronger than it is. The effect of diet on health is overestimated.

- The second scatter plot shows how Exercise feature also strongly influences Health. The second model is trained using both Diet and Exercise as features. In other words, in second model, the confounder features got controlled. By including Exercise as one of the features, we get more accurate estimate of how diet impacts health, as we account the influence of exercise on health.

Let’s look at another example. In a predictive model for housing prices, both the proximity to good schools and neighborhood affluence might influence the price. If you’re interested in the effect of school proximity on housing prices, affluence is a confounder that needs to be accounted for, as it is related to both variables.

Why Is It Important to Consider Confounders?

Incorporating the concept of confounder features into your ML model building is beneficial for several reasons:

- Improves Accuracy: It helps improve the accuracy of your predictions by preventing overfitting to spurious correlations.

- Ensures Reliable Conclusions: Controlling confounders ensures that the conclusions drawn from the model are more reliable, making them actionable for decision-making.

- Better Causal Inferences: By controlling for confounders, you increase the validity of causal inferences, especially in domains like healthcare, economics, and social sciences, where understanding cause-and-effect relationships is critical.

How to Identify Confounding Features?

Identifying whether features are confounding in nature can be done through various methods:

-

Correlation Analysis: This is one of the most common approaches. It involves measuring the statistical relationships between different features and the target variable to identify indirect influences.

-

Causal Diagrams: Another effective approach is the use of causal diagrams or Directed Acyclic Graphs (DAGs), which help visualize relationships between different variables and identify possible confounding paths.

Machine learning models often need to separate the predictor feature from the confounders to ensure accurate results. By understanding and addressing the confounders, data scientists and researchers can make more precise and unbiased models, enabling better decision-making and insights in complex environments.

- The Watermelon Effect: When Green Metrics Lie - January 25, 2026

- Coefficient of Variation in Regression Modelling: Example - November 9, 2025

- Chunking Strategies for RAG with Examples - November 2, 2025

I found it very helpful. However the differences are not too understandable for me