In this post, you will learn about the concepts of precision, recall, and accuracy when evaluating machine learning classification models. Given that this is the Covid-19 era, the concepts are explained in terms of a machine learning classification model that predicts whether a patient is Covid-19 positive or not based on their symptoms and other details. The following model performance concepts are described with the help of examples.

- What is the model precision?

- What is the model recall?

- What is the model accuracy?

- What is the model confusion matrix?

- Which metric to use: precision or recall?

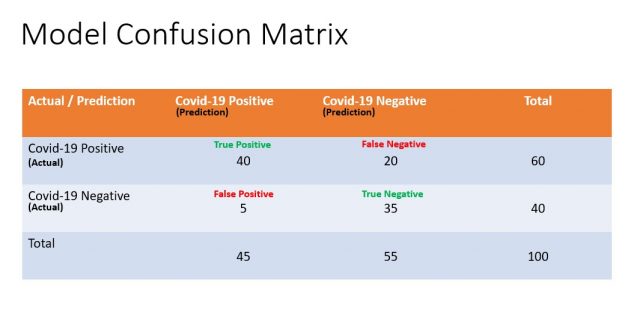

Before getting into the concepts, let’s look at some hypothetical data derived from model predictions for 100 prospective patients:

- No. of positive predictions = 45

- No. of true positives out of the 45 positive predictions = 40

- No. of negative predictions = 55

- No. of true negatives out of the 55 negative predictions = 35

- No. of actual positives = 60

- No. of actual negatives = 40

This data is represented as a confusion matrix in a later section.
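The list above gives the true positives and true negatives directly; the false positives and false negatives follow by subtraction. The short Python sketch below derives them and checks that the numbers are consistent with the actual positive and negative totals. The variable names are illustrative only.

```python
# Hypothetical counts from the example (100 prospective patients)
positive_predictions = 45
true_positives = 40
negative_predictions = 55
true_negatives = 35
actual_positives = 60
actual_negatives = 40

# Every positive prediction is either a true positive or a false positive
false_positives = positive_predictions - true_positives   # 45 - 40 = 5
# Every negative prediction is either a true negative or a false negative
false_negatives = negative_predictions - true_negatives   # 55 - 35 = 20

# Consistency checks: the derived cells must add up to the actual class totals
assert true_positives + false_negatives == actual_positives   # 40 + 20 = 60
assert false_positives + true_negatives == actual_negatives   # 5 + 35 = 40
assert positive_predictions + negative_predictions == 100

print(f"TP={true_positives}, FP={false_positives}, FN={false_negatives}, TN={true_negatives}")
```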

What is Model Precision?

A machine learning model with high precision is one where, of all the predictions it makes that something is positive, most turn out to be correct. Model precision is also called the positive predictive value (PPV).

For example, let’s say a classification machine learning model is trained to predict whether a person is Covid-19 positive or not, and it is applied to 100 people. It predicts that a total of 45 people are Covid-19 positive. The model will be said to have high precision if 40 of those 45 predictions are correct. This means that there are 5 cases in which the model falsely predicted a positive. Cases that are falsely predicted as positive are termed “false positives”, so these 5 cases are false positives.

The precision of the model is therefore about 89% (40 divided by 45).

Based on the above, the formula of precision can be stated as the following:

Precision = True Positives / Total Positive Predictions = True Positives / (True Positives + False Positives)

Total positive predictions are the sum of true positives and false positives.

Higher model precision means that most of the Covid-19 positive predictions turn out to be truly positive. Thus, there are very few false positives.
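The precision formula translates directly into code. The following is a minimal Python sketch; the function name `precision` is illustrative, and returning 0.0 when no positive predictions were made is an assumed convention (precision is mathematically undefined in that case).

```python
def precision(tp: int, fp: int) -> float:
    """Precision (PPV) = TP / (TP + FP), i.e. TP / total positive predictions."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # No positive predictions: precision is undefined; this sketch assumes 0.0
        return 0.0
    return tp / predicted_positive


# Example data: 40 true positives and 5 false positives
print(round(precision(tp=40, fp=5), 3))  # 0.889
```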

What is Model Recall?

In the above example, the number of actual positive cases was 60. Out of these 60 actual Covid-19 cases, the model correctly predicted 40 as positive. The remaining 20 were falsely predicted as negative. Cases that are falsely predicted as negative are termed “false negatives”. Model recall is also known as model sensitivity.

The recall of the model is therefore about 66.7% (40 divided by 60).

Based on the above, the formula of recall can be stated as the following:

Recall = True Positives / Actual Positive Cases = True Positives / (True Positives + False Negatives)

True positives represent the number of correct positive predictions, which in this example is 40.

Higher model recall means that the model correctly identifies a larger share of all the actual Covid-19 positive cases, that is, it produces fewer false negatives.
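Recall differs from precision only in its denominator: it divides by the actual positive cases (TP + FN) instead of the positive predictions. A minimal Python sketch, with an illustrative function name and the same assumed 0.0 convention for an empty denominator:

```python
def recall(tp: int, fn: int) -> float:
    """Recall (sensitivity) = TP / (TP + FN), i.e. TP / actual positive cases."""
    actual_positive = tp + fn
    if actual_positive == 0:
        # No actual positives: recall is undefined; this sketch assumes 0.0
        return 0.0
    return tp / actual_positive


# Example data: 40 true positives and 20 false negatives
print(round(recall(tp=40, fn=20), 3))  # 0.667
```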

What is Model Accuracy?

The accuracy of the model is the number of correct Covid-19 predictions, including both true positives and true negatives, divided by the total number of predictions made.

The formula of model accuracy can be stated as the following:

Accuracy = (True Positives + True Negatives) / Total Predictions = (40 + 35) / 100 = 0.75 (75%)
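Unlike precision and recall, accuracy uses all four cells of the confusion matrix. The Python sketch below (function name illustrative, 0.0 for an empty population assumed) reproduces the 75% figure from the example.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    total = tp + tn + fp + fn
    # Guard against an empty population; 0.0 is an assumed convention
    return (tp + tn) / total if total else 0.0


# Example data: TP=40, TN=35, FP=5, FN=20 (100 patients in total)
print(accuracy(tp=40, tn=35, fp=5, fn=20))  # 0.75
```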

What is Model Confusion Matrix?

The confusion matrix is a table layout that visualizes the performance of a machine learning classification model by cross-tabulating the actual classes against the predicted classes. It is also called the error matrix.

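The model confusion matrix for the example data can be represented as the following, with rows showing the actual class and columns showing the predicted class:

|                 | Predicted positive | Predicted negative | Total |
|-----------------|--------------------|--------------------|-------|
| Actual positive | 40 (TP)            | 20 (FN)            | 60    |
| Actual negative | 5 (FP)             | 35 (TN)            | 40    |
| Total           | 45                 | 55                 | 100   |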
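In practice, a confusion matrix is built by counting (actual, predicted) pairs over a set of labeled predictions. The pure-Python sketch below does this with `collections.Counter`; the label lists are hypothetical, constructed only so that they reproduce the example counts (1 = Covid-19 positive, 0 = negative). If scikit-learn is available, `sklearn.metrics.confusion_matrix(y_true, y_pred)` performs the same counting and, for labels 0 and 1, returns `[[TN, FP], [FN, TP]]`.

```python
from collections import Counter

# Hypothetical labels reproducing the example: 40 TP, 5 FP, 20 FN, 35 TN
y_true = [1] * 40 + [0] * 5 + [1] * 20 + [0] * 35
y_pred = [1] * 40 + [1] * 5 + [0] * 20 + [0] * 35


def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels where 1 is the positive class."""
    pairs = Counter(zip(y_true, y_pred))  # keys are (actual, predicted) tuples
    return {
        "TP": pairs[(1, 1)],
        "FP": pairs[(0, 1)],
        "FN": pairs[(1, 0)],
        "TN": pairs[(0, 0)],
    }


counts = confusion_counts(y_true, y_pred)
# Rows: actual class; columns: predicted class
print("            Predicted +  Predicted -")
print(f"Actual +    {counts['TP']:>11}  {counts['FN']:>11}")
print(f"Actual -    {counts['FP']:>11}  {counts['TN']:>11}")
```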

Which Metric to Use: Precision or Recall?

In the example used in this post, the model recall is 66.7% and the model precision is 89%. The question that arises is: which metric should you optimize the model for, recall or precision? The answer depends on the following questions:

- Is it okay if the model fails to predict as positive some cases that are actually positive? This means the model will have lower recall or, equivalently, more false negatives: cases predicted as negative that are actually positive. In this example, many prospective patients who are actually Covid-19 positive would be predicted negative, which could endanger their lives because they would not take appropriate precautions and medicines.

- Is it okay if there are a few falsely predicted positive cases? This means that a few patients may be advised to take a Covid-19 test to confirm whether they are really positive. The model will have more false positives.

In the case of a model predicting Covid-19 positive, what is desired is very high recall, or in other words, very few false negatives.

Achieving high model recall, for example by lowering the score threshold above which a patient is predicted positive, tends to increase the number of false positives as well. This means that the model precision would decrease.

Precision-Recall Tradeoff
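The tradeoff can be seen by sweeping the decision threshold of a model that outputs a score per patient. The Python sketch below uses ten hypothetical scores and labels (not from the example data) and predicts positive when the score is at or above the threshold. Lowering the threshold raises recall while precision falls.

```python
# Hypothetical model scores (likelihood of being Covid-19 positive) and true labels
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]


def precision_recall_at(threshold, scores, labels):
    """Predict positive when score >= threshold, then compute precision and recall."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Lower thresholds flag more patients as positive
for t in (0.85, 0.5, 0.25):
    p, r = precision_recall_at(t, scores, labels)
    print(f"threshold={t:.2f}  precision={p:.3f}  recall={r:.3f}")
```

With these hypothetical numbers, the printed precision drops from 1.000 to 0.667 to 0.625 while recall rises from 0.400 to 0.800 to 1.000.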
